Jeong-Hyun Cho

Hybrid Paradigm-based Brain-Computer Interface for Robotic Arm Control

Dec 14, 2022

Abstract: A brain-computer interface (BCI) uses brain signals to communicate with external devices without actual control. In particular, BCI is one of the interfaces used to control a robotic arm. In this study, we propose a knowledge distillation-based framework for manipulating a robotic arm through hybrid paradigm-induced EEG signals for practical use. The teacher model is designed to decode input data hierarchically and transfer knowledge to the student model. To this end, soft labels and distillation loss functions are applied to the student model's training. According to the experimental results, the student model achieved the best performance among the single-architecture-based methods. The results confirm that hierarchical models and knowledge distillation can improve the performance of a simple architecture. Since it is uncertain what knowledge is transferred, clarifying this is important for future studies.
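
The abstract above mentions soft labels and a distillation loss but does not give their exact form. As a rough illustration only, the sketch below uses the common temperature-scaled formulation of knowledge distillation: the student is trained on a weighted sum of a KL term against the teacher's softened outputs and a cross-entropy term against the true label. The function names, `temperature`, and `alpha` are assumptions made for this example, not details taken from the paper.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer labels."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def cross_entropy(probs, label):
    """Negative log-likelihood of the true class index."""
    return -math.log(probs[label])


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-label term and a hard-label term.

    temperature and alpha are hypothetical hyperparameters chosen for
    illustration; the paper's settings are not stated in the abstract.
    """
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # The T^2 factor keeps the soft term's gradient scale comparable
    # across temperatures, a common convention in distillation.
    soft_term = temperature ** 2 * kl_divergence(soft_teacher, soft_student)
    hard_term = cross_entropy(softmax(student_logits), label)
    return alpha * soft_term + (1.0 - alpha) * hard_term
```

When the student's logits match the teacher's, the soft term vanishes and only the hard-label cross-entropy remains, so the loss reduces to ordinary supervised training scaled by `1 - alpha`.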

Target-centered Subject Transfer Framework for EEG Data Augmentation

Nov 24, 2022

Abstract: Data augmentation approaches are widely explored for enhancing the decoding of electroencephalogram signals. In subject-independent brain-computer interface systems, domain adaptation and generalization are used to shift the source subjects' data distribution to match the target subject as a form of augmentation. However, previous works either introduce noise (e.g., by noise addition or generation from random noise) or modify the target data; they therefore cannot depict the target data distribution well and hinder further analysis. In this paper, we propose a target-centered subject transfer framework as a data augmentation approach. A subset of source data is first constructed to maximize the source-target relevance. Then, a generative model is applied to transfer the data to the target domain. The proposed framework enriches the explainability of the target domain by adding extra real data instead of noise. It shows superior performance compared with other data augmentation methods. Extensive experiments are conducted to verify the effectiveness and robustness of our approach as a promising tool for further research.

Factorization Approach for Sparse Spatio-Temporal Brain-Computer Interface

Jun 17, 2022

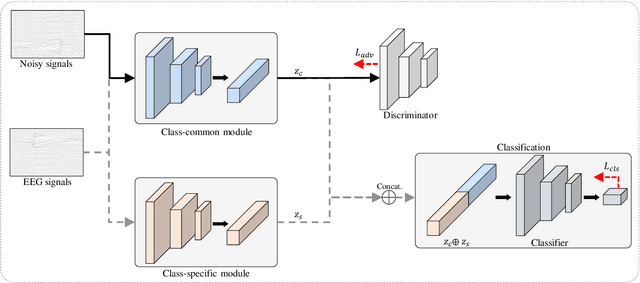

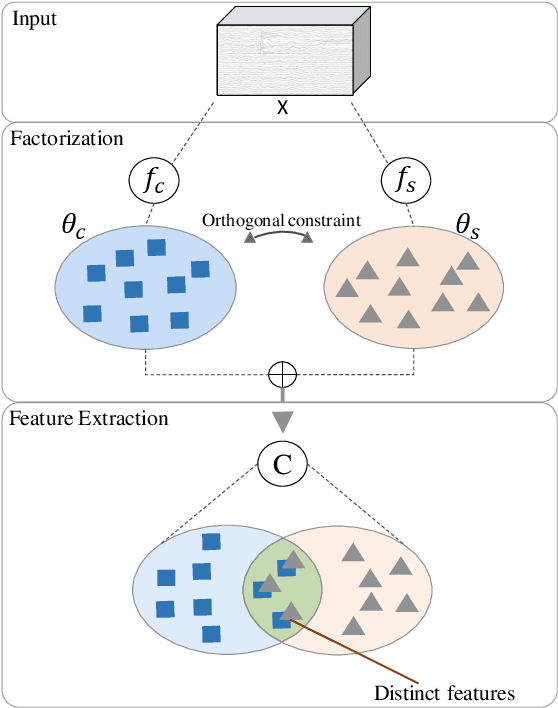

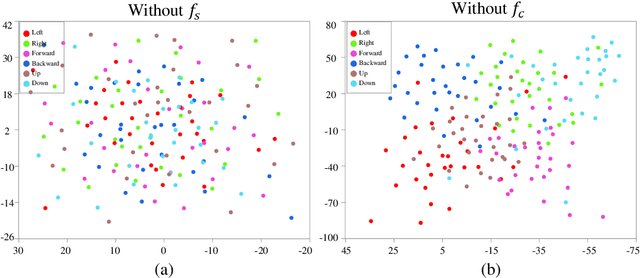

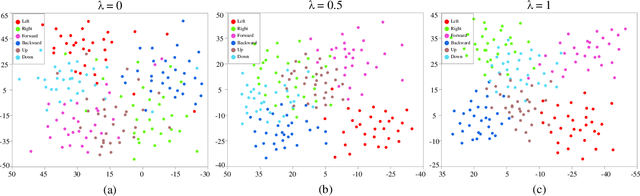

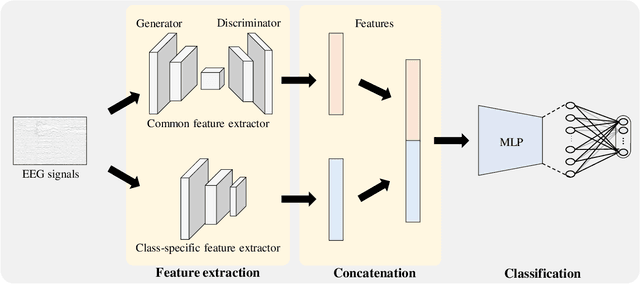

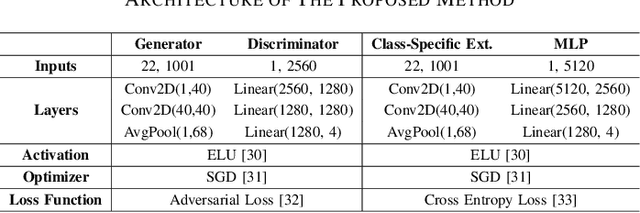

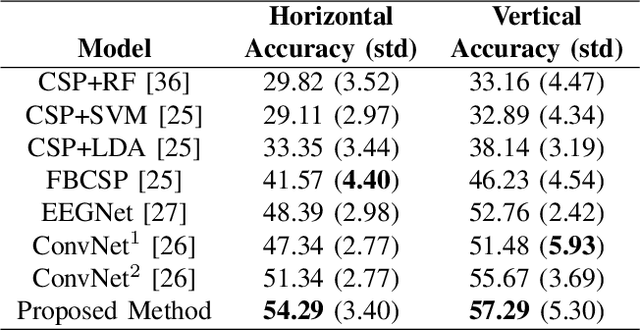

Abstract: Recently, advanced technologies have shown great potential for solving various problems with large amounts of data. However, these technologies have yet to show competitive performance in brain-computer interfaces (BCIs), which deal with brain signals. Brain signals are difficult to collect in large quantities; in particular, the amount of information is sparse in spontaneous BCIs. In addition, we conjecture that high spatial and temporal similarities between tasks increase the prediction difficulty. We define this problem as the sparse condition. To solve this, a factorization approach is introduced to allow the model to obtain distinct representations from the latent space. To this end, we propose two feature extractors: a class-common module, which is trained through adversarial learning and acts as a generator, and a class-specific module, which uses a loss function generated from classification so that features are extracted with traditional methods. To minimize the latent space shared by the class-common and class-specific features, the model is trained under an orthogonal constraint. As a result, EEG signals are factorized into two separate latent spaces. Evaluations were conducted on a single-arm motor imagery dataset. The results demonstrate that factorizing the EEG signal allows the model to extract rich and decisive features under the sparse condition.
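
The abstract above does not specify how the orthogonal constraint is computed. One common way to discourage two feature sets from sharing information is to penalize the squared Frobenius norm of their cross-product matrix, which is zero when every class-common feature dimension is orthogonal to every class-specific one across the batch. The sketch below assumes that formulation; `orthogonality_penalty` and its argument names are hypothetical.

```python
def orthogonality_penalty(common, specific):
    """Squared Frobenius norm of C^T S for two batches of feature vectors.

    common:   list of per-trial class-common feature vectors (batch x d_c)
    specific: list of per-trial class-specific feature vectors (batch x d_s)

    This is an assumed formulation of an orthogonal constraint, not the
    loss used in the paper.
    """
    n_common = len(common[0])
    n_specific = len(specific[0])
    penalty = 0.0
    for i in range(n_common):
        for j in range(n_specific):
            # Entry (i, j) of C^T S: inner product over the batch between
            # common dimension i and specific dimension j.
            dot = sum(c_row[i] * s_row[j]
                      for c_row, s_row in zip(common, specific))
            penalty += dot * dot
    return penalty
```

In training, a term like this would typically be added to the classification and adversarial losses with a weighting coefficient, so that minimizing it pushes the two latent spaces apart.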

Recognition of Tactile-related EEG Signals Generated by Self-touch

Dec 14, 2021

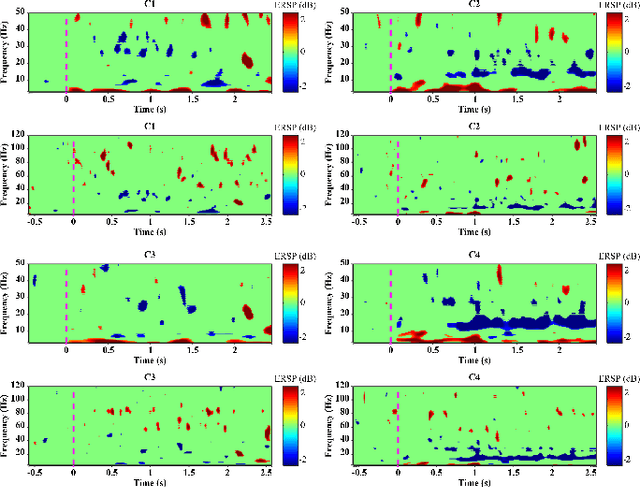

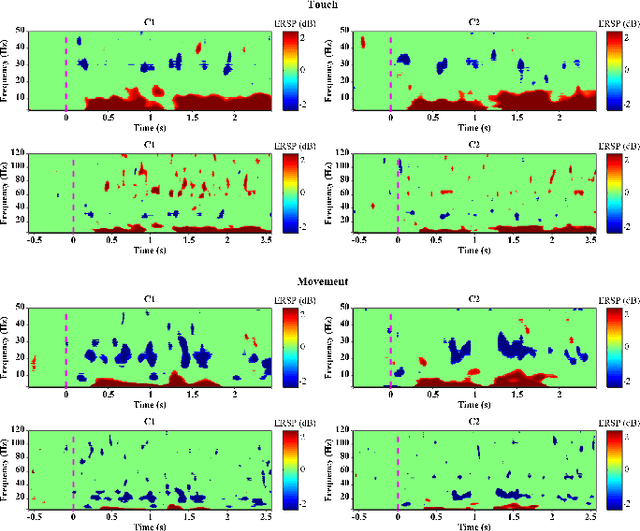

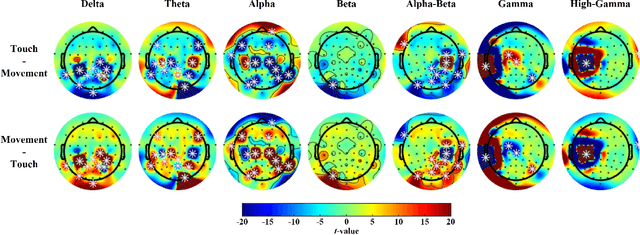

Abstract: Touch is the first sense among the human senses, and it is also one of the most important and indispensable. However, compared to sight and hearing, it is often neglected. In particular, since humans use the tactile sense of the skin to recognize and manipulate objects, it is very difficult to recognize or skillfully manipulate objects without tactile sensation. In addition, the importance of, and interest in, haptic technology related to touch are increasing with the development of technologies such as VR and AR in recent years. So far, the focus has been only on haptic technology based on mechanical devices. In particular, there are few studies on tactile sensation in the field of EEG-based brain-computer interfaces. Some studies have measured the surface roughness of artificial structures in relation to EEG-based tactile sensation. However, most studies have used passive contact methods in which the object moves while the human subject remains still. Additionally, there have been no EEG-based tactile studies of active skin touch. In reality, we move our hands directly to feel the sense of touch. Therefore, as a preliminary study for our future research, we collected EEG signals for tactile sensation upon skin touch based on active touch, and we compared and analyzed differences in brain changes during touch and movement tasks. Through time-frequency analysis and statistical analysis, significant differences in power changes in the alpha, beta, gamma, and high-gamma bands were observed. In addition, major spatial differences were observed in the sensory-motor region of the brain.
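
To make the band-power comparison in the abstract above concrete, the sketch below computes the power of one signal window inside a frequency band using a plain discrete Fourier transform. It is only the building block of a time-frequency analysis, which would apply such a computation over sliding windows; it is not the authors' pipeline. The band edges in `BANDS` are assumed values, since conventions vary and the abstract does not state them.

```python
import cmath
import math

# Assumed band edges in Hz; the abstract does not give the ranges used.
BANDS = {
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 50.0),
    "high_gamma": (50.0, 100.0),
}


def band_power(signal, fs, low, high):
    """Sum of normalized DFT power over bins with low <= f < high.

    signal: list of samples from one channel and one window
    fs:     sampling rate in Hz
    """
    n = len(signal)
    power = 0.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins only
        freq = k * fs / n
        if low <= freq < high:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            power += abs(coeff) ** 2 / n ** 2
    return power


# Usage: a synthetic 10 Hz sine sampled at 100 Hz for one second should
# carry its power in the alpha band and almost none in the beta band.
fs = 100
wave = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
alpha_power = band_power(wave, fs, *BANDS["alpha"])
beta_power = band_power(wave, fs, *BANDS["beta"])
```

The direct DFT is used here only to keep the example dependency-free; a practical analysis would use an FFT-based or wavelet-based estimator.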

A Factorization Approach for Motor Imagery Classification

Dec 13, 2021

Abstract: A brain-computer interface uses brain signals to communicate with external devices without actual control. Many studies have been conducted to classify motor imagery based on machine learning. However, classifying imagery data with sparse spatial characteristics, such as single-arm motor imagery, remains a challenge. In this paper, we propose a method that factorizes EEG signals into two groups to classify motor imagery even if spatial features are sparse. Based on adversarial learning, we focus on extracting common features of EEG signals that are robust to noise, so that only signal features are extracted. In addition, class-specific features that are specialized for classification are extracted. Finally, the proposed method classifies the classes by representing the features of the two groups in one embedding space. Through experiments, we confirmed that extracting features into two groups is advantageous for datasets that contain sparse spatial features.

Decoding of Intuitive Visual Motion Imagery Using Convolutional Neural Network under 3D-BCI Training Environment

May 15, 2020

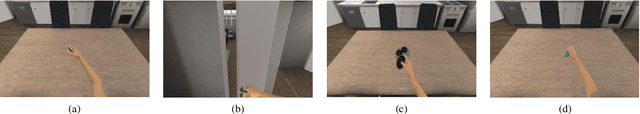

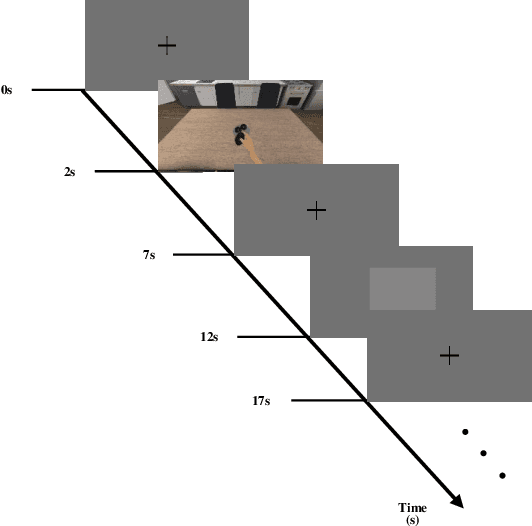

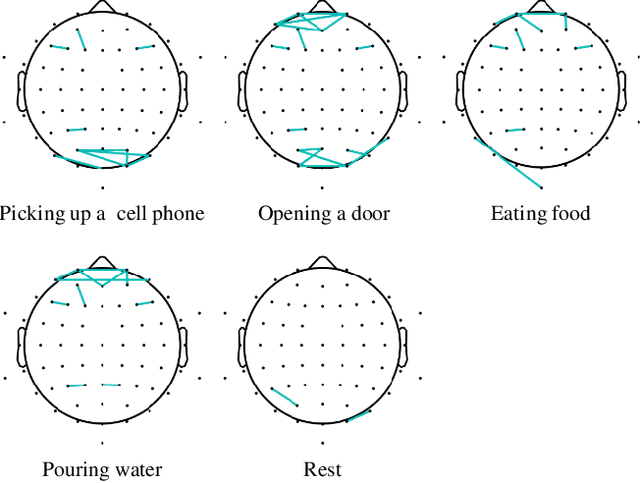

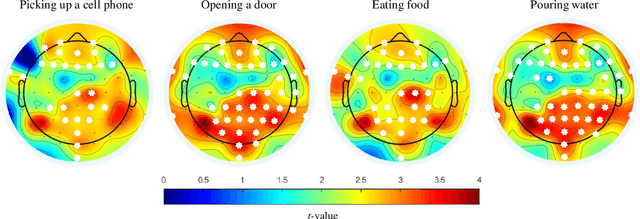

Abstract: In this study, we adopted visual motion imagery, which is a more intuitive brain-computer interface (BCI) paradigm, for decoding the user's intuitive intention. We developed a 3-dimensional BCI training platform and applied it to help the user perform more intuitive imagination in the visual motion imagery experiment. The experimental tasks were selected based on movements that we commonly use in daily life, such as picking up a phone, opening a door, eating food, and pouring water. Nine subjects participated in our experiment. We presented statistical evidence that visual motion imagery has a high correlation with the prefrontal and occipital lobes. In addition, we selected the most appropriate electroencephalography channels for visual motion imagery decoding using a functional connectivity approach and proposed a convolutional neural network architecture for classification. As a result, the average classification performance of the proposed architecture for 4 classes from 16 channels was 67.50% across all subjects. This result is encouraging, and it shows the possibility of developing a BCI-based device control system for practical applications such as neuroprostheses and robotic arms.
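
The abstract above says channels were selected with a functional connectivity approach but does not name the connectivity measure or the selection rule. As a loose illustration, the sketch below ranks channels by their mean absolute Pearson correlation with all other channels and keeps the top `k`. Both the measure and the "most connected" criterion are assumptions made for this example, and `select_channels` is a hypothetical helper.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sample lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0.0 or var_y == 0.0:
        return 0.0  # a flat channel carries no correlation information
    return cov / math.sqrt(var_x * var_y)


def select_channels(eeg, k):
    """Return the k channel names with the highest mean |correlation|.

    eeg: dict mapping channel name -> list of samples (equal lengths)
    Pearson correlation stands in for the unspecified connectivity measure.
    """
    names = list(eeg)
    strength = {}
    for a in names:
        others = [abs(pearson(eeg[a], eeg[b])) for b in names if b != a]
        strength[a] = sum(others) / len(others)
    return sorted(names, key=lambda name: strength[name], reverse=True)[:k]
```

With 16 selected channels and 4 classes, chance-level accuracy is 25%, which gives context for the 67.50% reported in the abstract.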
