Byoung-Hee Kwon

Meta-cognitive Multi-scale Hierarchical Reasoning for Motor Imagery Decoding

Nov 11, 2025

Abstract: Brain-computer interfaces (BCIs) aim to decode motor intent from noninvasive neural signals to enable control of external devices, but practical deployment remains limited by noise and variability in motor imagery (MI)-based electroencephalogram (EEG) signals. This work investigates a hierarchical and meta-cognitive decoding framework for four-class MI classification. We introduce a multi-scale hierarchical signal processing module that reorganizes backbone features into temporal multi-scale representations, together with an introspective uncertainty estimation module that assigns per-cycle reliability scores and guides iterative refinement. We instantiate this framework on three standard EEG backbones (EEGNet, ShallowConvNet, and DeepConvNet) and evaluate four-class MI decoding using the BCI Competition IV-2a dataset under a subject-independent setting. Across all backbones, the proposed components improve average classification accuracy and reduce inter-subject variance compared to the corresponding baselines, indicating increased robustness to subject heterogeneity and noisy trials. These results suggest that combining hierarchical multi-scale processing with introspective confidence estimation can enhance the reliability of MI-based BCI systems.
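As a rough illustration of what a "temporal multi-scale representation" can look like, the sketch below pools a single feature sequence at several window sizes. The function names `average_pool` and `multi_scale`, the choice of non-overlapping mean pooling, and the window sizes are all assumptions made for illustration; the abstract does not specify how the module reorganizes backbone features.

```python
def average_pool(sequence, window):
    """Non-overlapping mean pooling; a trailing partial window is dropped."""
    return [
        sum(sequence[i:i + window]) / window
        for i in range(0, len(sequence) - window + 1, window)
    ]


def multi_scale(sequence, windows=(1, 2, 4)):
    """Return one pooled view of `sequence` per window size (hypothetical scales)."""
    return {w: average_pool(sequence, w) for w in windows}


# Four time steps of a single (made-up) feature channel.
views = multi_scale([1.0, 2.0, 3.0, 4.0])
```

Coarser windows summarize slower temporal structure, while the window of size 1 keeps the original resolution.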

Sample Dominance Aware Framework via Non-Parametric Estimation for Spontaneous Brain-Computer Interface

Nov 15, 2023

Abstract: Deep learning has shown promise in decoding brain signals, such as electroencephalogram (EEG), in the field of brain-computer interfaces (BCIs). However, the non-stationary characteristics of EEG signals pose challenges for training neural networks to acquire appropriate knowledge. Inconsistent EEG signals resulting from these non-stationary characteristics can lead to poor performance. Therefore, it is crucial to investigate and address sample inconsistency to ensure robust performance in spontaneous BCIs. In this study, we introduce the concept of sample dominance as a measure of EEG signal inconsistency and propose a method to modulate its effect on network training. We present a two-stage dominance score estimation technique that compensates for performance degradation caused by sample inconsistencies. Our proposed method uses non-parametric estimation to infer sample inconsistency and assigns each sample a dominance score. This score is then aggregated with the loss function during training to modulate the impact of sample inconsistency. Furthermore, we design a curriculum learning approach that gradually increases the influence of inconsistent signals during training to improve overall performance. We evaluate our proposed method on a public spontaneous BCI dataset. The experimental results highlight the importance of addressing sample dominance for achieving robust performance in spontaneous BCIs.
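A minimal sketch of how a per-sample score might be combined with the loss under a curriculum, assuming a score in [0, 1] where lower values mark less consistent samples. The linear interpolation in `curriculum_weight`, the weighted-mean aggregation, and all names here are hypothetical; the abstract does not give the actual estimation or aggregation rule.

```python
import math


def cross_entropy(probs, label):
    """Negative log-likelihood of the true label for one sample."""
    return -math.log(max(probs[label], 1e-12))


def curriculum_weight(score, progress):
    """Move a sample weight from `score` (progress=0) to 1.0 (progress=1)."""
    progress = min(max(progress, 0.0), 1.0)
    return (1.0 - progress) * score + progress


def weighted_loss(batch_probs, labels, scores, progress):
    """Weighted mean of per-sample losses; low-score samples gain influence over time."""
    weights = [curriculum_weight(s, progress) for s in scores]
    losses = [cross_entropy(p, y) for p, y in zip(batch_probs, labels)]
    total = sum(weights)
    if total == 0.0:
        return 0.0
    return sum(w * l for w, l in zip(weights, losses)) / total


# Two made-up samples: the second is treated as fully inconsistent (score 0.0).
probs = [[0.5, 0.5], [0.25, 0.75]]
labels = [0, 0]
early = weighted_loss(probs, labels, scores=[1.0, 0.0], progress=0.0)
late = weighted_loss(probs, labels, scores=[1.0, 0.0], progress=1.0)
```

At `progress=0.0` the inconsistent sample is ignored; at `progress=1.0` both samples contribute equally, so the loss reduces to the ordinary batch mean.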

Target-centered Subject Transfer Framework for EEG Data Augmentation

Nov 24, 2022

Abstract: Data augmentation approaches are widely explored for enhancing the decoding of electroencephalogram signals. In subject-independent brain-computer interface systems, domain adaptation and generalization are used to shift the source subjects' data distribution to match the target subject as a form of augmentation. However, previous works either introduce noise (e.g., by noise addition or generation from random noise) or modify the target data, and thus cannot accurately depict the target data distribution, which hinders further analysis. In this paper, we propose a target-centered subject transfer framework as a data augmentation approach. A subset of source data is first constructed to maximize the source-target relevance. Then, a generative model is applied to transfer the data to the target domain. The proposed framework enriches the explainability of the target domain by adding extra real data instead of noise. It shows superior performance compared with other data augmentation methods. Extensive experiments are conducted to verify the effectiveness and robustness of our approach as a promising tool for further research.

Factorization Approach for Sparse Spatio-Temporal Brain-Computer Interface

Jun 17, 2022

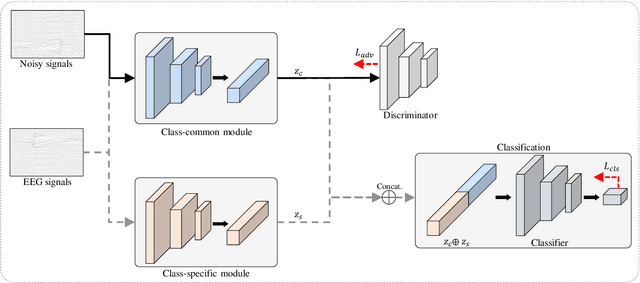

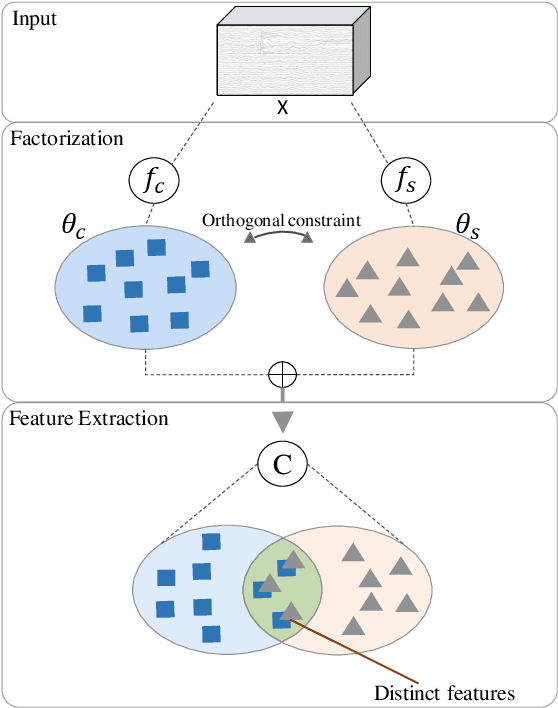

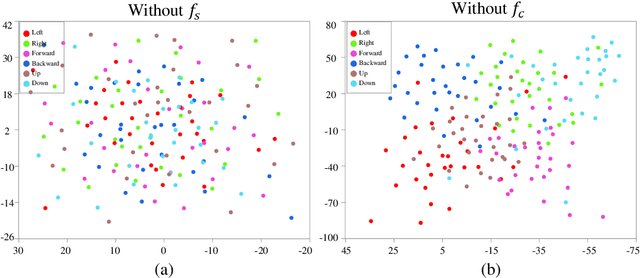

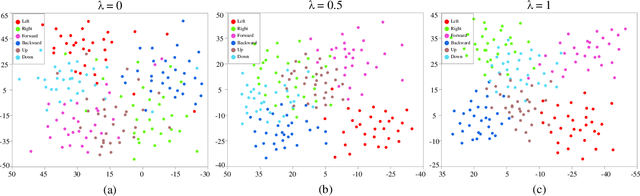

Abstract: Recently, advanced technologies have shown great potential for solving various problems when large amounts of data are available. However, these technologies have yet to show competitive performance in brain-computer interfaces (BCIs), which deal with brain signals. Brain signals are difficult to collect in large quantities; in particular, the amount of information is sparse in spontaneous BCIs. In addition, we conjecture that high spatial and temporal similarities between tasks increase the prediction difficulty. We define this problem as the sparse condition. To solve this, a factorization approach is introduced to allow the model to obtain distinct representations from the latent space. To this end, we propose two feature extractors: a class-common module, which is trained through adversarial learning and acts as a generator, and a class-specific module, which uses the loss function generated from classification so that features are extracted with traditional methods. To minimize the latent space shared by the class-common and class-specific features, the model is trained under an orthogonal constraint. As a result, EEG signals are factorized into two separate latent spaces. Evaluations were conducted on a single-arm motor imagery dataset. The results demonstrate that factorizing the EEG signal allows the model to extract rich and decisive features under the sparse condition.
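One common way to express an orthogonality constraint between two feature sets is to penalize the squared Frobenius norm of their cross-product over a batch. The sketch below assumes that form; the function name `orthogonality_penalty` and the batch-by-dimension list layout are illustrative choices, and the abstract does not state which formulation the authors use.

```python
def orthogonality_penalty(common, specific):
    """Squared Frobenius norm of common^T @ specific.

    `common` and `specific` are lists of per-sample feature vectors
    (batch x dim). The penalty is zero when every class-common feature
    dimension is orthogonal, across the batch, to every class-specific one.
    """
    dim_common = len(common[0])
    dim_specific = len(specific[0])
    penalty = 0.0
    for i in range(dim_common):
        for j in range(dim_specific):
            dot = sum(c[i] * s[j] for c, s in zip(common, specific))
            penalty += dot * dot
    return penalty


# Made-up features for a batch of two samples, one dimension each.
disjoint = orthogonality_penalty([[1.0], [0.0]], [[0.0], [1.0]])
overlapping = orthogonality_penalty([[1.0], [2.0]], [[1.0], [2.0]])
```

Adding such a term to the training loss pushes the two extractors toward encoding non-overlapping information, which matches the stated goal of minimizing the shared latent space.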

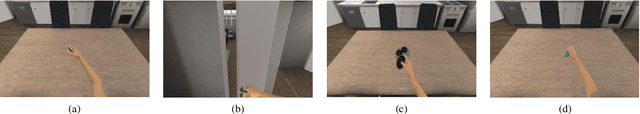

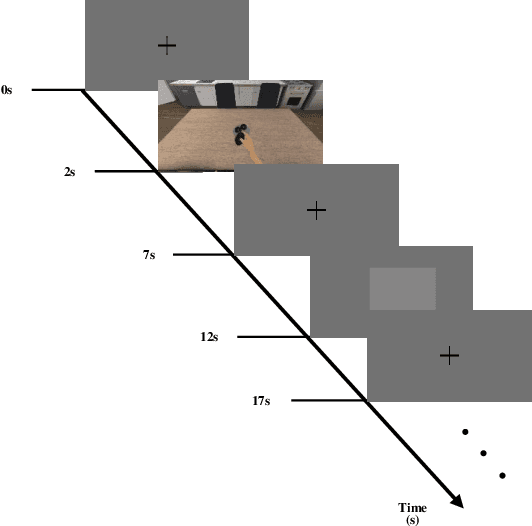

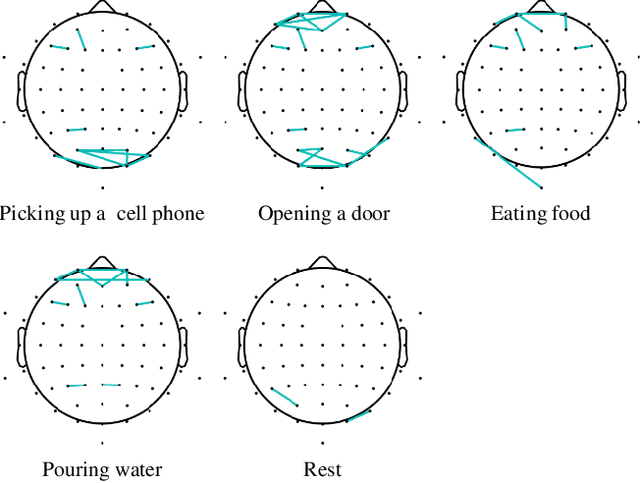

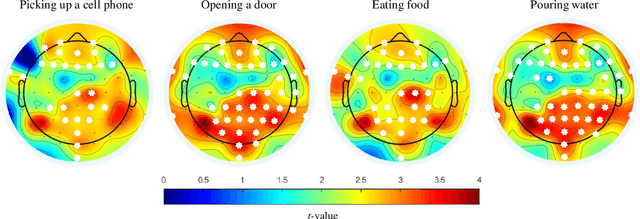

Decoding of Intuitive Visual Motion Imagery Using Convolutional Neural Network under 3D-BCI Training Environment

May 15, 2020

Abstract: In this study, we adopted visual motion imagery, a more intuitive brain-computer interface (BCI) paradigm, to decode intuitive user intention. We developed a 3-dimensional BCI training platform and used it to help users perform more intuitive imagination in the visual motion imagery experiment. The experimental tasks were selected from movements commonly used in daily life, such as picking up a phone, opening a door, eating food, and pouring water. Nine subjects participated in our experiment. We presented statistical evidence that visual motion imagery is highly correlated with activity in the prefrontal and occipital lobes. In addition, we selected the most appropriate electroencephalography channels for visual motion imagery decoding using a functional connectivity approach and proposed a convolutional neural network architecture for classification. As a result, the averaged classification performance of the proposed architecture for 4 classes from 16 channels was 67.50% across all subjects. This result is encouraging, and it shows the possibility of developing a BCI-based device control system for practical applications such as neuroprostheses and robotic arms.
