Hye-Bin Shin

Cross-Modal Consistency-Guided Active Learning for Affective BCI Systems

Nov 19, 2025

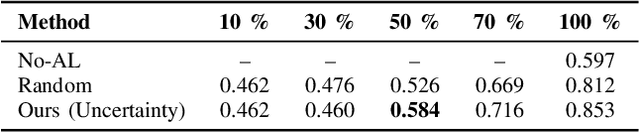

Abstract:Deep learning models perform best with abundant, high-quality labels, yet such conditions are rarely achievable in EEG-based emotion recognition. Electroencephalogram (EEG) signals are easily corrupted by artifacts and individual variability, while emotional labels often stem from subjective and inconsistent reports, making robust affective decoding particularly difficult. We propose an uncertainty-aware active learning framework that enhances robustness to label noise by jointly leveraging model uncertainty and cross-modal consistency. Instead of relying solely on EEG-based uncertainty estimates, the method evaluates cross-modal alignment to determine whether uncertainty originates from cognitive ambiguity or sensor noise. A representation alignment module embeds EEG and face features into a shared latent space, enforcing semantic coherence between modalities. Residual discrepancies are treated as noise-induced inconsistencies, and these samples are selectively queried for oracle feedback during active learning. This feedback-driven process guides the network toward reliable, informative samples and reduces the impact of noisy labels. Experiments on the ASCERTAIN dataset examine the efficiency and robustness of the proposed method, highlighting its potential as a data-efficient and noise-tolerant approach for EEG-based affective decoding in brain-computer interface systems.
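
The query-selection idea in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the weighted-sum score, and the `alpha` trade-off are assumptions made for illustration. Each unlabeled sample is scored by the normalized entropy of the EEG prediction plus the cosine discrepancy between its EEG and face embeddings, and the highest-scoring samples are sent to the oracle.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def cosine_similarity(u, v):
    """Cosine similarity of two vectors; returns 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def select_queries(eeg_probs, eeg_emb, face_emb, budget, alpha=0.5):
    """Return indices of the `budget` samples with the highest score.

    Hypothetical score (an assumption, not taken from the paper):
        alpha * normalized_entropy + (1 - alpha) * cross_modal_discrepancy
    Both terms are scaled to [0, 1].
    """
    scored = []
    for i, (p, z_eeg, z_face) in enumerate(zip(eeg_probs, eeg_emb, face_emb)):
        uncertainty = entropy(p) / math.log(len(p))
        discrepancy = (1.0 - cosine_similarity(z_eeg, z_face)) / 2.0
        scored.append((alpha * uncertainty + (1.0 - alpha) * discrepancy, i))
    scored.sort(reverse=True)
    return [i for _, i in scored[:budget]]


if __name__ == "__main__":
    probs = [[0.99, 0.01], [0.5, 0.5], [0.9, 0.1]]
    eeg = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
    face = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0]]
    print(select_queries(probs, eeg, face, budget=2))
```

In this toy input the second sample is both maximally uncertain and maximally misaligned, so it is ranked first.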

NeuroLex: A Lightweight Domain Language Model for EEG Report Understanding and Generation

Nov 17, 2025

Abstract:Clinical electroencephalogram (EEG) reports encode domain-specific linguistic conventions that general-purpose language models (LMs) fail to capture. We introduce NeuroLex, a lightweight domain-adaptive language model trained purely on EEG report text from the Harvard Electroencephalography Database. Unlike existing biomedical LMs, NeuroLex is tailored to the linguistic and diagnostic characteristics of EEG reporting, enabling it to serve as both an independent textual model and a decoder backbone for multimodal EEG-language systems. Using span-corruption pretraining and instruction-style fine-tuning on report polishing, paragraph summarization, and terminology question answering, NeuroLex learns the syntax and reasoning patterns characteristic of EEG interpretation. Comprehensive evaluations show that it achieves lower perplexity, higher extraction and summarization accuracy, better label efficiency, and improved robustness to negation and factual hallucination compared with general models of the same scale. With an EEG-aware linguistic backbone, NeuroLex bridges biomedical text modeling and brain-computer interface applications, offering a foundation for interpretable and language-driven neural decoding.
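
Span-corruption pretraining, mentioned in this abstract, can be sketched as follows. The sketch assumes the T5-style convention, in which each masked span is replaced by a sentinel token in the input and the target lists the sentinels followed by the removed tokens. The sentinel format `<extra_id_N>`, the function name, and the example sentence are illustrative assumptions; the abstract does not specify NeuroLex's tokenization or masking schedule.

```python
def span_corrupt(tokens, spans):
    """Apply T5-style span corruption.

    tokens: list of string tokens.
    spans:  sorted, non-overlapping (start, end) index pairs, end exclusive.
    Returns (corrupted_input, target).
    """
    corrupted, target = [], []
    cursor = 0
    for span_id, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{span_id}>"
        # Keep the text before the span, then replace the span with a sentinel.
        corrupted.extend(tokens[cursor:start])
        corrupted.append(sentinel)
        # The target reproduces each sentinel followed by the removed tokens.
        target.append(sentinel)
        target.extend(tokens[start:end])
        cursor = end
    corrupted.extend(tokens[cursor:])
    # A final sentinel marks the end of the target sequence.
    target.append(f"<extra_id_{len(spans)}>")
    return corrupted, target


if __name__ == "__main__":
    words = "the eeg shows diffuse slowing during sleep".split()
    source, labels = span_corrupt(words, [(1, 2), (4, 6)])
    print(" ".join(source))
    print(" ".join(labels))
```

In practice the spans are sampled at random; they are passed in explicitly here to keep the example deterministic.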

Toward Adaptive BCIs: Enhancing Decoding Stability via User State-Aware EEG Filtering

Nov 11, 2025

Abstract:Brain-computer interfaces (BCIs) often suffer from limited robustness and poor long-term adaptability. Model performance rapidly degrades when user attention fluctuates, brain states shift over time, or irregular artifacts appear during interaction. To mitigate these issues, we introduce a user state-aware electroencephalogram (EEG) filtering framework that refines neural representations before decoding user intentions. The proposed method continuously estimates the user's cognitive state (e.g., focus or distraction) from EEG features and filters unreliable segments by applying adaptive weighting based on the estimated attention level. This filtering stage suppresses noisy or out-of-focus epochs, thereby reducing distributional drift and improving the consistency of subsequent decoding. Experiments on multiple EEG datasets that emulate real BCI scenarios demonstrate that the proposed state-aware filtering enhances classification accuracy and stability across different user states and sessions compared with conventional preprocessing pipelines. These findings highlight that leveraging brain-derived state information, even without additional user labels, can substantially improve the reliability of practical EEG-based BCIs.
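
The attention-based weighting described in this abstract might look like the sketch below. It is a simplified illustration, not the authors' method. It assumes that each epoch already has an attention estimate in [0, 1], that epochs below a hypothetical `threshold` are dropped, and that the remaining epochs are combined by a weighted average of their feature vectors.

```python
def attention_weights(attention_scores, threshold=0.3):
    """Turn per-epoch attention estimates in [0, 1] into soft weights.

    Epochs whose estimate falls below `threshold` (a hypothetical cut-off)
    receive zero weight; the others keep their attention score as weight.
    """
    return [a if a >= threshold else 0.0 for a in attention_scores]


def weighted_mean_features(epochs, weights):
    """Weighted average of per-epoch feature vectors."""
    total = sum(weights)
    if total == 0.0:
        raise ValueError("all epochs were filtered out")
    dim = len(epochs[0])
    return [
        sum(w * epoch[d] for epoch, w in zip(epochs, weights)) / total
        for d in range(dim)
    ]


if __name__ == "__main__":
    features = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]  # last epoch is an outlier
    attention = [0.5, 0.5, 0.1]                          # last epoch is out of focus
    weights = attention_weights(attention)
    print(weighted_mean_features(features, weights))
```

The out-of-focus outlier epoch receives zero weight and does not affect the aggregate that is passed to the decoder.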

Uncertainty-Aware Cross-Modal Knowledge Distillation with Prototype Learning for Multimodal Brain-Computer Interfaces

Jul 17, 2025

Abstract:Electroencephalography (EEG) is a fundamental modality for cognitive state monitoring in brain-computer interfaces (BCIs). However, it is highly susceptible to intrinsic signal errors and human-induced labeling errors, which lead to label noise and ultimately degrade model performance. To enhance EEG learning, multimodal knowledge distillation (KD) has been explored to transfer knowledge from visual models with rich representations to EEG-based models. Nevertheless, KD faces two key challenges: modality gap and soft label misalignment. The former arises from the heterogeneous nature of EEG and visual feature spaces, while the latter stems from label inconsistencies that create discrepancies between ground truth labels and distillation targets. This paper addresses semantic uncertainty caused by ambiguous features and weakly defined labels. We propose a novel cross-modal knowledge distillation framework that mitigates both modality and label inconsistencies. It aligns feature semantics through a prototype-based similarity module and introduces a task-specific distillation head to resolve label-induced inconsistency in supervision. Experimental results demonstrate that our approach improves EEG-based emotion regression and classification performance, outperforming both unimodal and multimodal baselines on a public multimodal dataset. These findings highlight the potential of our framework for BCI applications.
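
A prototype-based similarity module of the kind named in this abstract is often built from class-mean embeddings. The sketch below is an assumption about one plausible form, not the paper's architecture. It computes one prototype per class from teacher (visual) embeddings and scores a student (EEG) embedding against each prototype with cosine similarity. All names and the toy labels are hypothetical.

```python
import math


def class_prototypes(embeddings, labels):
    """Mean embedding per class label."""
    sums, counts = {}, {}
    for z, y in zip(embeddings, labels):
        if y not in sums:
            sums[y] = [0.0] * len(z)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], z)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0.0 and nv > 0.0 else 0.0


def prototype_similarities(z, prototypes):
    """Similarity of one embedding to every class prototype."""
    return {y: cosine(z, p) for y, p in prototypes.items()}


if __name__ == "__main__":
    visual = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0]]
    labels = ["high_arousal", "high_arousal", "low_arousal"]
    protos = class_prototypes(visual, labels)
    print(prototype_similarities([1.0, 0.0], protos))
```

The resulting similarity vector could serve as a soft, prototype-level target for the EEG model, which is one common way to sidestep a direct feature-to-feature match across heterogeneous spaces.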

Aligning Humans and Robots via Reinforcement Learning from Implicit Human Feedback

Jul 17, 2025

Abstract:Conventional reinforcement learning (RL) approaches often struggle to learn effective policies under sparse reward conditions, necessitating the manual design of complex, task-specific reward functions. To address this limitation, reinforcement learning from human feedback (RLHF) has emerged as a promising strategy that complements hand-crafted rewards with human-derived evaluation signals. However, most existing RLHF methods depend on explicit feedback mechanisms such as button presses or preference labels, which disrupt the natural interaction process and impose a substantial cognitive load on the user. We propose a novel reinforcement learning from implicit human feedback (RLIHF) framework that utilizes non-invasive electroencephalography (EEG) signals, specifically error-related potentials (ErrPs), to provide continuous, implicit feedback without requiring explicit user intervention. The proposed method adopts a pre-trained decoder to transform raw EEG signals into probabilistic reward components, enabling effective policy learning even in the presence of sparse external rewards. We evaluate our approach in a simulation environment built on the MuJoCo physics engine, using a Kinova Gen2 robotic arm to perform a complex pick-and-place task that requires avoiding obstacles while manipulating target objects. The results show that agents trained with decoded EEG feedback achieve performance comparable to those trained with dense, manually designed rewards. These findings validate the potential of using implicit neural feedback for scalable and human-aligned reinforcement learning in interactive robotics.
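
The step from decoded ErrP probabilities to reward components can be sketched as below. The linear mapping, the `scale` and `weight` parameters, and the function names are assumptions chosen for illustration. The abstract states only that a pre-trained decoder produces probabilistic reward components that supplement sparse external rewards.

```python
def implicit_reward(p_error, scale=1.0):
    """Map a decoded ErrP probability to a reward in [-scale, scale].

    p_error is the decoder's estimated probability that the user perceived
    the last action as an error: 0.0 maps to +scale, 1.0 maps to -scale.
    The linear form is an illustrative assumption.
    """
    if not 0.0 <= p_error <= 1.0:
        raise ValueError("p_error must lie in [0, 1]")
    return scale * (1.0 - 2.0 * p_error)


def total_reward(sparse_reward, p_error, weight=0.5):
    """Combine the sparse task reward with the weighted implicit reward."""
    return sparse_reward + weight * implicit_reward(p_error)


if __name__ == "__main__":
    # A step with no task reward but a confident "no error" EEG response.
    print(total_reward(sparse_reward=0.0, p_error=0.1))
    # A step where the decoder is confident the user saw an error.
    print(total_reward(sparse_reward=0.0, p_error=0.9))
```

Because the implicit term is available at every step, the agent receives a learning signal even when the external reward is zero for most of the episode.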

EEG-based Multimodal Representation Learning for Emotion Recognition

Oct 29, 2024

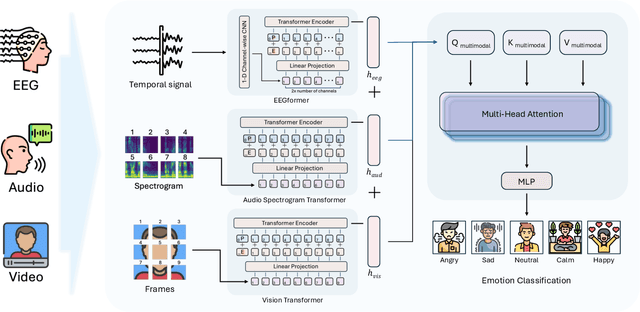

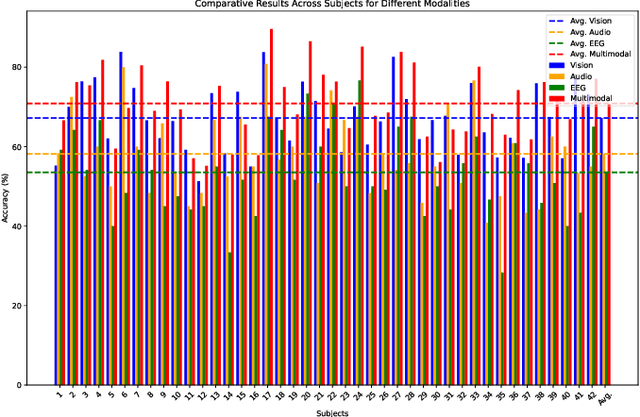

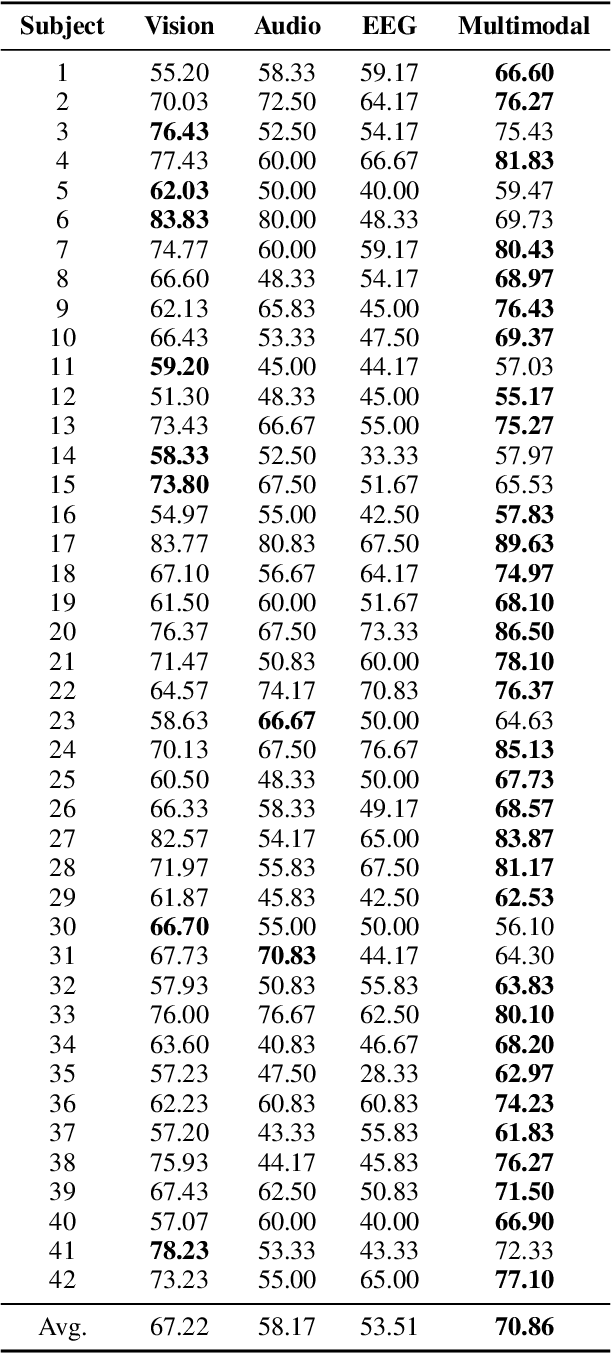

Abstract:Multimodal learning has been a popular area of research, yet integrating electroencephalogram (EEG) data poses unique challenges due to its inherent variability and limited availability. In this paper, we introduce a novel multimodal framework that accommodates not only conventional modalities such as video, images, and audio, but also incorporates EEG data. Our framework is designed to flexibly handle varying input sizes, while dynamically adjusting attention to account for feature importance across modalities. We evaluate our approach on a recently introduced emotion recognition dataset that combines data from three modalities, making it an ideal testbed for multimodal learning. The experimental results provide a benchmark for the dataset and demonstrate the effectiveness of the proposed framework. This work highlights the potential of integrating EEG into multimodal systems, paving the way for more robust and comprehensive applications in emotion recognition and beyond.

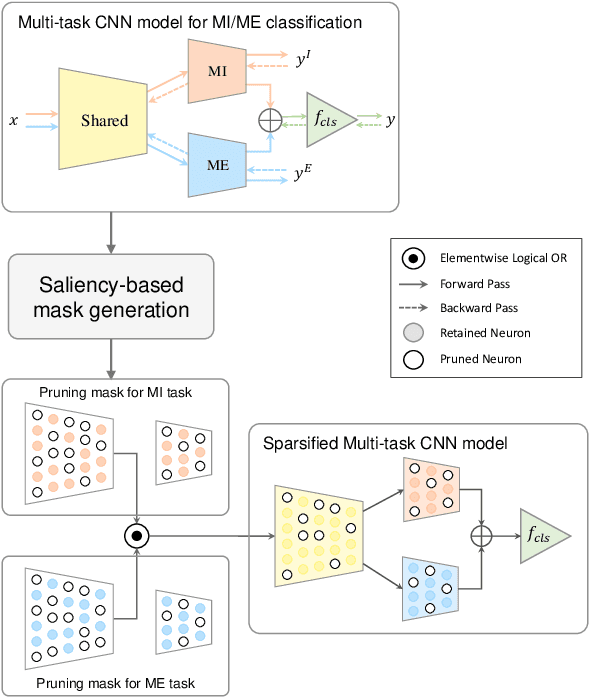

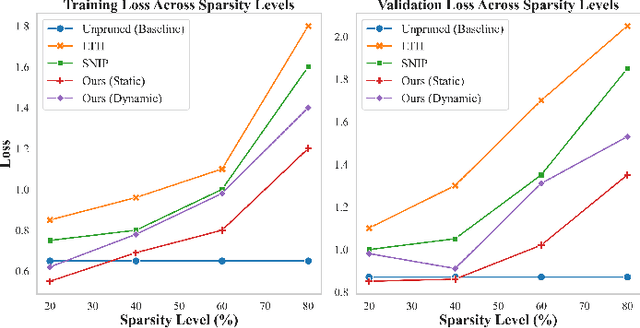

Sparse Multitask Learning for Efficient Neural Representation of Motor Imagery and Execution

Dec 10, 2023

Abstract:In the quest for efficient neural network models for neural data interpretation and user intent classification in brain-computer interfaces (BCIs), learning meaningful sparse representations of the underlying neural subspaces is crucial. The present study introduces a sparse multitask learning framework for motor imagery (MI) and motor execution (ME) tasks, inspired by the natural partitioning of associated neural subspaces observed in the human brain. Given a dual-task CNN model for MI-ME classification, we apply a saliency-based sparsification approach to prune superfluous connections and reinforce those that show high importance in both tasks. Through our approach, we seek to elucidate the distinct and common neural ensembles associated with each task, employing principled sparsification techniques to eliminate redundant connections and boost the fidelity of neural signal decoding. Our results indicate that this tailored sparsity can mitigate the overfitting problem and improve test performance with a small amount of data, suggesting a viable path forward for computationally efficient and robust BCI systems.
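
Saliency-based pruning for two tasks can be illustrated with a small sketch. It assumes a common saliency definition, the magnitude of weight times gradient, and keeps the connections that are salient for both MI and ME by taking the minimum of the two saliencies. The abstract does not give the paper's actual criterion, so the rule, `keep_ratio`, and the function names are hypothetical, and the sketch covers only the shared connections.

```python
def saliency(weights, grads):
    """First-order saliency |w * dL/dw| for each connection."""
    return [abs(w * g) for w, g in zip(weights, grads)]


def shared_prune_mask(weights, grads_mi, grads_me, keep_ratio):
    """Binary mask keeping the connections most salient for BOTH tasks.

    Joint importance is the minimum of the two task saliencies, so a
    connection must matter for MI and for ME to survive pruning.
    """
    s_mi = saliency(weights, grads_mi)
    s_me = saliency(weights, grads_me)
    joint = [min(a, b) for a, b in zip(s_mi, s_me)]
    n_keep = max(1, round(keep_ratio * len(weights)))
    ranked = sorted(range(len(weights)), key=lambda i: joint[i], reverse=True)
    kept = set(ranked[:n_keep])
    return [1 if i in kept else 0 for i in range(len(weights))]


if __name__ == "__main__":
    w = [1.0, -2.0, 0.5, 3.0]
    g_mi = [0.1, 0.5, 0.0, 0.2]   # gradients of the MI loss
    g_me = [0.3, 0.4, 1.0, 0.0]   # gradients of the ME loss
    mask = shared_prune_mask(w, g_mi, g_me, keep_ratio=0.5)
    print(mask)
    print([wi * m for wi, m in zip(w, mask)])  # pruned weights
```

Connections that matter for only one task are zeroed here. A fuller treatment would retain task-specific connections in separate per-task masks.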

Calibration-Free Driver Drowsiness Classification based on Manifold-Level Augmentation

Dec 14, 2022

Abstract:Drowsiness reduces concentration and increases response time, which can lead to fatal road accidents. Monitoring drivers' drowsiness levels with electroencephalogram (EEG) signals and taking timely action may prevent such accidents. EEG signals are well suited to monitoring the driver's mental state because they reflect brain dynamics. However, calibration is required in advance because EEG signals vary between and within subjects, and this inconvenience has reduced the accessibility of brain-computer interfaces (BCIs). Developing a generalized classification model is similar to domain generalization, which aims to overcome the domain shift problem and for which data augmentation is frequently used. This paper proposes a calibration-free framework for driver drowsiness state classification using manifold-level augmentation. The framework increases the diversity of source domains by utilizing features. We experimented with various augmentation methods to improve generalization performance. The experiments showed that deeper models with smaller kernel sizes improved generalizability, and that applying augmentation at the manifold level yielded a substantial improvement. The framework demonstrates the feasibility of calibration-free BCI.
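
One well-known form of manifold-level augmentation is manifold mixup, which interpolates hidden-layer features and labels of two samples instead of mixing raw signals. The sketch below illustrates that idea for two subjects' feature vectors. Whether the paper uses exactly this scheme is not stated in the abstract, so the function name, the fixed mixing coefficient `lam`, and the toy vectors are assumptions.

```python
def manifold_mixup(feat_a, feat_b, label_a, label_b, lam):
    """Interpolate two feature vectors and their one-hot labels.

    feat_a, feat_b:   hidden-layer features of samples from two source domains.
    label_a, label_b: one-hot (or soft) label vectors.
    lam:              mixing coefficient in [0, 1]; usually sampled from a
                      Beta distribution, fixed here for determinism.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    mixed_feat = [lam * a + (1.0 - lam) * b for a, b in zip(feat_a, feat_b)]
    mixed_label = [lam * a + (1.0 - lam) * b for a, b in zip(label_a, label_b)]
    return mixed_feat, mixed_label


if __name__ == "__main__":
    alert_subject_1 = [0.0, 2.0]    # features from one subject, class "alert"
    drowsy_subject_2 = [4.0, 6.0]   # features from another subject, class "drowsy"
    feat, label = manifold_mixup(
        alert_subject_1, drowsy_subject_2, [1.0, 0.0], [0.0, 1.0], lam=0.25
    )
    print(feat, label)
```

Mixing features across subjects produces synthetic samples that lie between source domains, which is one way to increase source-domain diversity without collecting calibration data.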

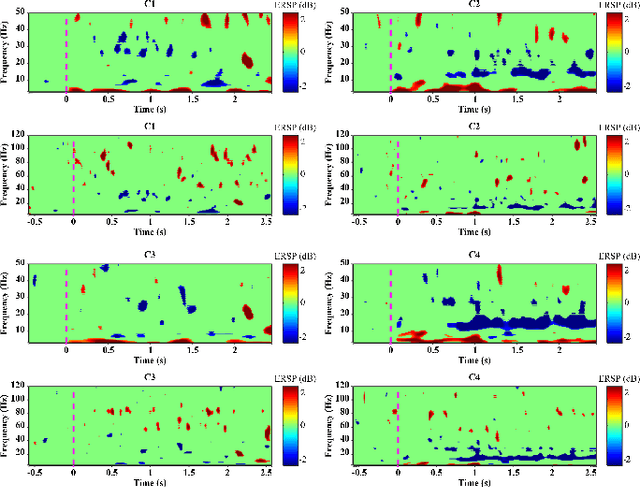

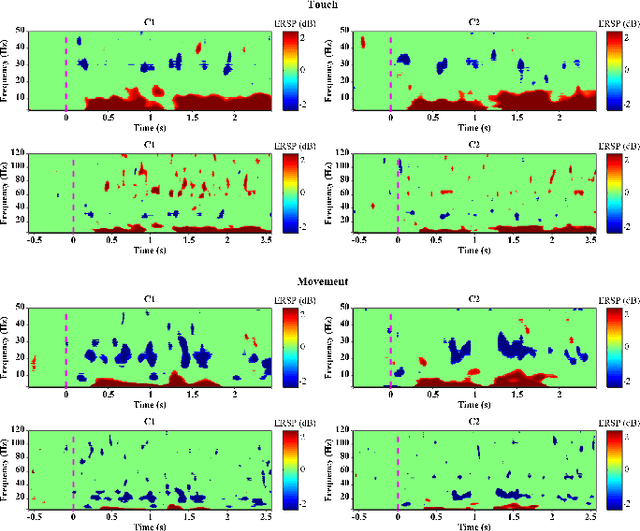

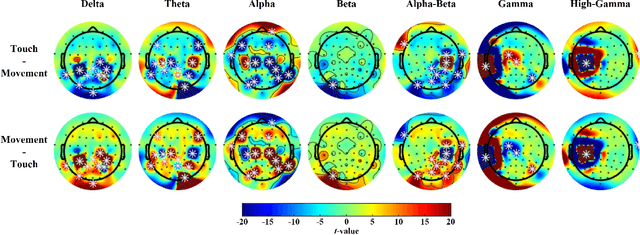

Recognition of Tactile-related EEG Signals Generated by Self-touch

Dec 14, 2021

Abstract:Touch is the first of the human senses and one of the most indispensable, yet compared to sight and hearing it is often neglected. Because humans use the tactile sense of the skin to recognize and manipulate objects, it is very difficult to recognize or skillfully manipulate objects without tactile sensation. In addition, interest in haptic technology is increasing with the recent development of technologies such as VR and AR. So far, the focus has been only on haptic technology based on mechanical devices, and there are few studies on tactile sensation in the field of EEG-based brain-computer interfaces. Some studies have measured the surface roughness of artificial structures in relation to EEG-based tactile sensation. However, most of them used passive contact methods in which the object moves while the human subject remains still, and there have been no EEG-based tactile studies of active skin touch. In reality, we move our hands ourselves to feel the sense of touch. Therefore, as a preliminary study for our future research, we collected EEG signals for tactile sensation upon skin touch based on active touch and compared and analyzed differences in brain changes during touch and movement tasks. Time-frequency analysis and statistical analysis revealed significant differences in power changes in the alpha, beta, gamma, and high-gamma bands. In addition, major spatial differences were observed in the sensory-motor region of the brain.
