Jeff Yan

Wallcamera: Reinventing the Wheel?

Jul 22, 2024

Abstract: Developed at MIT CSAIL, the Wallcamera has captivated the public's imagination. Here, we show that the key insight underlying the Wallcamera is the same one that underpins the concept and the prototype of differential imaging forensics (DIF), both of which were validated and reported several years prior to the Wallcamera's debut. Rather than being the first to extract and amplify invisible signals (aka latent evidence in the forensics context) from wall reflections in a video, or the first to propose activity recognition following that approach, the Wallcamera's actual innovation is achieving activity recognition at a finer granularity than DIF demonstrated. In addition to activity recognition, DIF as conceived has a number of other applications in forensics, including 1) the recovery of a photographer's personally identifiable information, such as body width, height, and even the color of their clothing, from a single photo, and 2) the detection of image tampering and deepfake videos.

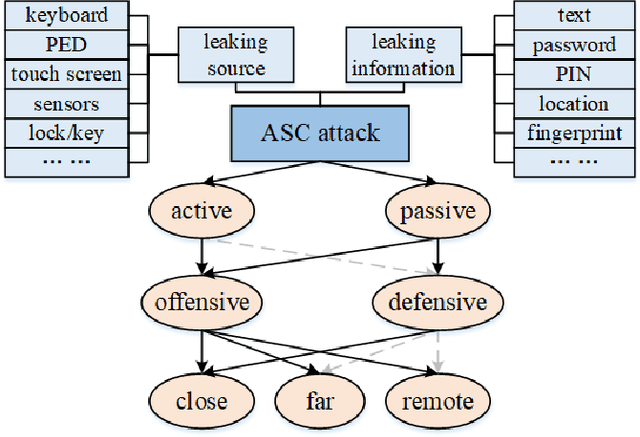

SoK: Acoustic Side Channels

Aug 06, 2023

Abstract: We provide a state-of-the-art analysis of acoustic side channels, cover all the significant academic research in the area, discuss their security implications and countermeasures, and identify areas for future research. We also attempt to bridge side channels and inverse problems, two fields that appear to be completely isolated from each other but have deep connections.

Differential Imaging Forensics

Jun 12, 2019

Abstract: We introduce new forensic techniques based on differential imaging, where a novel category of visual evidence created via subtle interactions of light with a scene, such as dim reflections, can be computationally extracted and amplified from an image of interest through a comparative analysis with an additional reference baseline image acquired under similar conditions. This paradigm of differential imaging forensics (DIF) enables forensic examiners for the first time to retrieve visual evidence that is readily available in an image or video footage but would otherwise remain faint or even invisible to a human observer. We demonstrate the relevance and effectiveness of our approach through practical experiments. We also show that DIF provides a novel method for detecting forged images and video clips, including deepfakes.

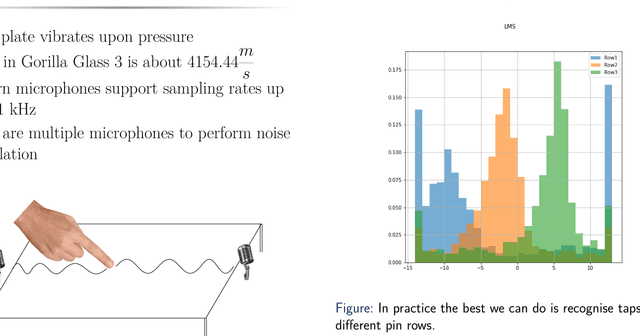
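The comparative analysis described in the Differential Imaging Forensics abstract above (subtract a reference baseline image, then amplify what remains) can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `amplify_difference`, the `gain` parameter, the mid-grey re-centring, and the representation of images as lists of rows of float intensities in [0, 1] are all assumptions made for illustration.

```python
def amplify_difference(image, reference, gain=20.0):
    """Subtract a reference frame from an image and amplify the residual.

    Hypothetical helper, for illustration only. Both inputs are grayscale
    images given as lists of rows with float intensities in [0, 1]. The
    amplified residual is re-centred on mid-grey (0.5) and clipped to
    [0, 1] so that faint positive and negative changes both become visible.
    """
    if len(image) != len(reference):
        raise ValueError("image and reference must have the same shape")
    out = []
    for row_img, row_ref in zip(image, reference):
        if len(row_img) != len(row_ref):
            raise ValueError("image and reference must have the same shape")
        out.append([
            min(1.0, max(0.0, 0.5 + gain * (a - b)))
            for a, b in zip(row_img, row_ref)
        ])
    return out


# Toy data: a flat wall (reference) and the same wall with a 1% change
# in two pixels, far too faint to notice by eye.
reference = [[0.40, 0.40], [0.40, 0.40]]
image = [[0.40, 0.41], [0.39, 0.40]]
result = amplify_difference(image, reference)
```

With a gain of 20, the 1% intensity changes in the toy data map to pixels roughly 0.2 above or below mid-grey, while unchanged pixels stay at 0.5. A real pipeline would also need image alignment and noise handling, which this sketch leaves out.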

Hearing your touch: A new acoustic side channel on smartphones

Mar 26, 2019

Abstract: We present the first acoustic side-channel attack that recovers what users type on the virtual keyboard of their touch-screen smartphone or tablet. When a user taps the screen with a finger, the tap generates a sound wave that propagates on the screen surface and in the air. We found that the device's microphone(s) can recover this wave and "hear" the finger's touch, and that the wave's distortions are characteristic of the tap's location on the screen. Hence, by recording audio through the built-in microphone(s), a malicious app can infer text as the user enters it on their device. We evaluate the effectiveness of the attack with 45 participants in a real-world environment on an Android tablet and an Android smartphone. For the tablet, we recover 61% of 200 4-digit PIN codes within 20 attempts, even if the model is not trained with the victim's data. For the smartphone, we recover 9 words of 7 to 13 letters with 50 attempts in a common side-channel attack benchmark. Our results suggest that it is not always sufficient to rely on isolation mechanisms such as TrustZone to protect user input. We propose and discuss hardware, operating-system and application-level mechanisms to block this attack more effectively. Mobile devices may need a richer capability model, a more user-friendly notification system for sensor usage and a more thorough evaluation of the information leaked by the underlying hardware.
