Jeff Ichnowski

ExpReS-VLA: Specializing Vision-Language-Action Models Through Experience Replay and Retrieval

Nov 09, 2025

Abstract: Vision-Language-Action models such as OpenVLA show impressive zero-shot generalization across robotic manipulation tasks but often fail to adapt efficiently to new deployment environments. In many real-world applications, consistent high performance on a limited set of tasks is more important than broad generalization. We propose ExpReS-VLA, a method for specializing pre-trained VLA models through experience replay and retrieval while preventing catastrophic forgetting. ExpReS-VLA stores compact feature representations from the frozen vision backbone instead of raw image-action pairs, reducing memory usage by approximately 97 percent. During deployment, relevant past experiences are retrieved using cosine similarity and used to guide adaptation, while prioritized experience replay emphasizes successful trajectories. We also introduce Thresholded Hybrid Contrastive Loss, which enables learning from both successful and failed attempts. On the LIBERO simulation benchmark, ExpReS-VLA improves success rates from 82.6 to 93.1 percent on spatial reasoning tasks and from 61 to 72.3 percent on long-horizon tasks. On physical robot experiments with five manipulation tasks, it reaches 98 percent success on both seen and unseen settings, compared to 84.7 and 32 percent for naive fine-tuning. Adaptation takes 31 seconds using 12 demonstrations on a single RTX 5090 GPU, making the approach practical for real robot deployment.
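The retrieval step mentioned in the abstract (ranking stored experiences by cosine similarity to the current observation's features) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `cosine_similarity` and `retrieve_top_k`, the three-dimensional toy features, and the trajectory labels are all hypothetical, and a real system would use high-dimensional embeddings from the frozen vision backbone.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)

def retrieve_top_k(query, memory, k=2):
    """Return the k (score, label) pairs most similar to the query.

    `memory` is a list of (feature_vector, label) pairs; this layout is
    an assumption made for illustration only.
    """
    scored = [(cosine_similarity(query, feat), label) for feat, label in memory]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

# Hypothetical stored experiences: toy features plus a descriptive label.
memory = [
    ([1.0, 0.0, 0.0], "pick_mug_success"),
    ([0.9, 0.1, 0.0], "pick_mug_failure"),
    ([0.0, 1.0, 0.0], "open_drawer_success"),
]

top = retrieve_top_k([1.0, 0.05, 0.0], memory, k=2)
top_labels = [label for _, label in top]
```

Because only compact feature vectors are compared, a lookup of this kind does not need the raw images at retrieval time, which is consistent with the memory saving the abstract describes.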

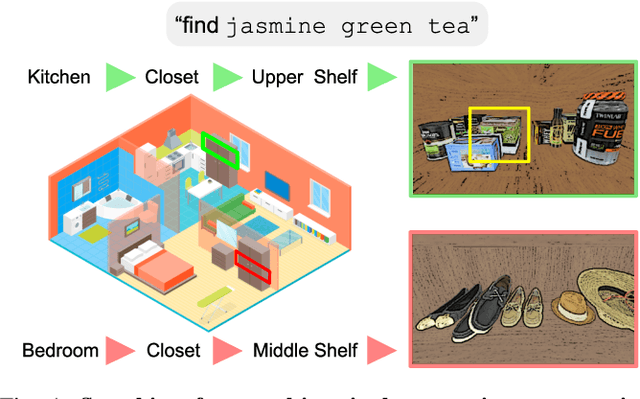

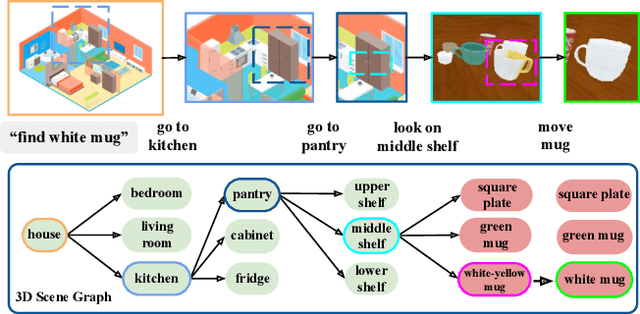

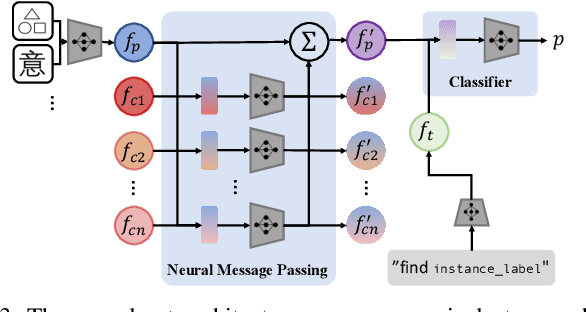

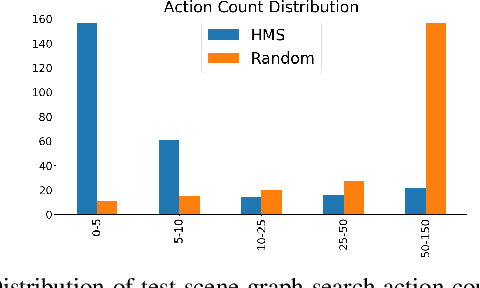

Semantic and Geometric Modeling with Neural Message Passing in 3D Scene Graphs for Hierarchical Mechanical Search

Dec 07, 2020

Abstract: Searching for objects in indoor organized environments such as homes or offices is part of our everyday activities. When looking for a target object, we jointly reason about the rooms and containers the object is likely to be in; the same type of container will have a different probability of having the target depending on the room it is in. We also combine geometric and semantic information to infer what container is best to search, or what other objects are best to move, if the target object is hidden from view. We propose to use a 3D scene graph representation to capture the hierarchical, semantic, and geometric aspects of this problem. To exploit this representation in a search process, we introduce Hierarchical Mechanical Search (HMS), a method that guides an agent's actions towards finding a target object specified with a natural language description. HMS is based on a novel neural network architecture that uses neural message passing of vectors with visual, geometric, and linguistic information to allow HMS to reason across layers of the graph while combining semantic and geometric cues. HMS is evaluated on a novel dataset of 500 3D scene graphs with dense placements of semantically related objects in storage locations, and is shown to be significantly better than several baselines at finding objects and close to the oracle policy in terms of the median number of actions required. Additional qualitative results can be found at https://ai.stanford.edu/mech-search/hms.

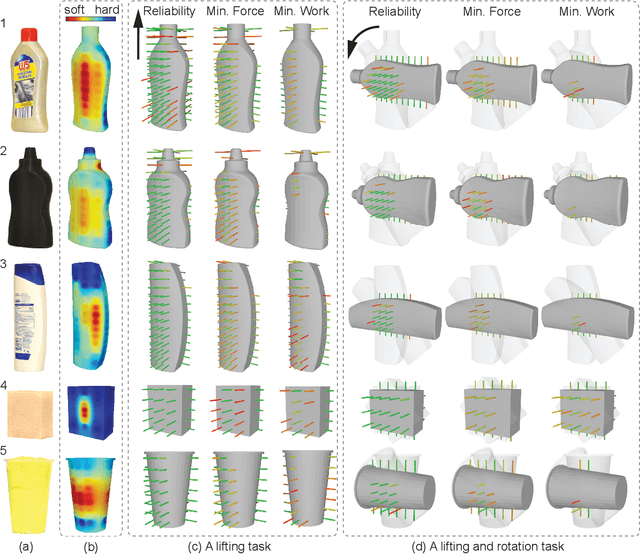

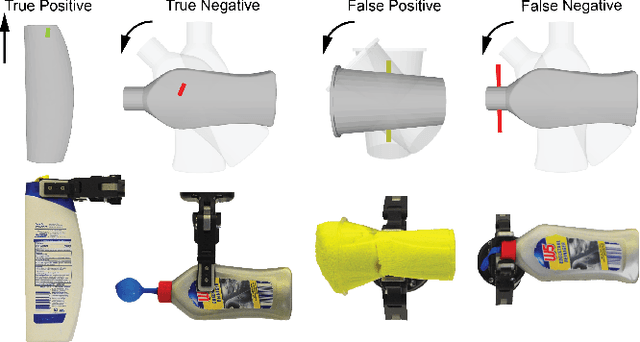

Minimal Work: A Grasp Quality Metric for Deformable Hollow Objects

Sep 24, 2019

Abstract: Robot grasping of deformable hollow objects such as plastic bottles and cups is challenging as the grasp should resist disturbances while minimally deforming the object so as not to damage it or dislodge liquids. We propose minimal work as a novel grasp quality metric that combines wrench resistance and the object deformation. We introduce an efficient algorithm to compute required work to resist an external wrench for a manipulation task by solving a linear program. The algorithm first computes the minimum required grasp force and an estimation of the gripper jaw displacements based on the object deformability at different locations measured with physical experiments. The work done by the jaws is the product of the grasp force and the displacements. The grasp quality metric is computed based on the required work under perturbations of grasp poses to address uncertainties in actuation. We collect 460 physical grasps with a UR5 robot and a Robotiq gripper. Physical experiments suggest the minimal work quality metric reaches 74.2% balanced accuracy and is up to 24.2% higher than classical wrench-based quality metrics, where the balanced accuracy is the raw accuracy normalized by the number of successful and failed real-world grasps.
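The balanced accuracy reported above can be illustrated with a small sketch. It assumes the common definition (the mean of the accuracy on successful grasps and the accuracy on failed grasps), which may differ in detail from the normalization the authors used; the function name `balanced_accuracy` and the toy outcome data are hypothetical.

```python
def balanced_accuracy(predicted, actual):
    """Mean of per-class accuracy over successful and failed grasps.

    `predicted` and `actual` are equal-length lists of booleans
    (True = grasp succeeds). Assumes the standard definition.
    """
    true_pos = sum(1 for p, a in zip(predicted, actual) if p and a)
    true_neg = sum(1 for p, a in zip(predicted, actual) if not p and not a)
    n_pos = sum(1 for a in actual if a)
    n_neg = len(actual) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one successful and one failed grasp")
    return 0.5 * (true_pos / n_pos + true_neg / n_neg)

# Toy, made-up outcomes: 8 successful and 2 failed grasps.
actual = [True] * 8 + [False] * 2
# A metric that predicts "success" for every grasp.
predicted = [True] * 10

raw_accuracy = sum(p == a for p, a in zip(predicted, actual)) / len(actual)
balanced = balanced_accuracy(predicted, actual)
```

In this toy case the raw accuracy is 0.8 while the balanced accuracy is 0.5, which shows why a class-normalized score is the more informative figure when successful and failed grasps are unevenly represented.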
