Javier Santos

ARCS: Agentic Retrieval-Augmented Code Synthesis with Iterative Refinement

Apr 29, 2025

Abstract: In supercomputing, efficient and optimized code generation is essential to leverage high-performance systems effectively. We propose Agentic Retrieval-Augmented Code Synthesis (ARCS), an advanced framework for accurate, robust, and efficient code generation, completion, and translation. ARCS integrates Retrieval-Augmented Generation (RAG) with Chain-of-Thought (CoT) reasoning to systematically break down and iteratively refine complex programming tasks. An agent-based RAG mechanism retrieves relevant code snippets, while real-time execution feedback drives the synthesis of candidate solutions. This process is formalized as a state-action search tree optimization, balancing code correctness with editing efficiency. Evaluations on the Geeks4Geeks and HumanEval benchmarks demonstrate that ARCS significantly outperforms traditional prompting methods in translation and generation quality. By enabling scalable and precise code synthesis, ARCS offers transformative potential for automating and optimizing code development in supercomputing applications, enhancing computational resource utilization.

Machine learned reconstruction of tsunami dynamics from sparse observations

Nov 20, 2024

Abstract: We investigate the use of the Senseiver, a transformer neural network designed for sparse sensing applications, to estimate full-field surface height measurements of tsunami waves from sparse observations. The model is trained on a large ensemble of simulated data generated via a shallow water equations solver, which we show to be a faithful reproduction of the underlying dynamics by comparison to historical events. We train the model on a dataset consisting of 8 tsunami simulations whose epicenters correspond to historical USGS earthquake records, and where the model inputs are restricted to measurements obtained at actively deployed buoy locations. We test the Senseiver on a dataset consisting of 8 simulations not included in training, demonstrating its capability for extrapolation. The results show remarkable resolution of fine-scale phase and amplitude features from the true field, provided that at least a few of the sensors have obtained a non-zero signal. Throughout, we discuss which forecasting techniques can be improved by this method, and suggest ways in which the flexibility of the architecture can be leveraged to incorporate arbitrary remote sensing data (e.g., HF radar and satellite measurements) as well as to investigate optimal sensor placements.

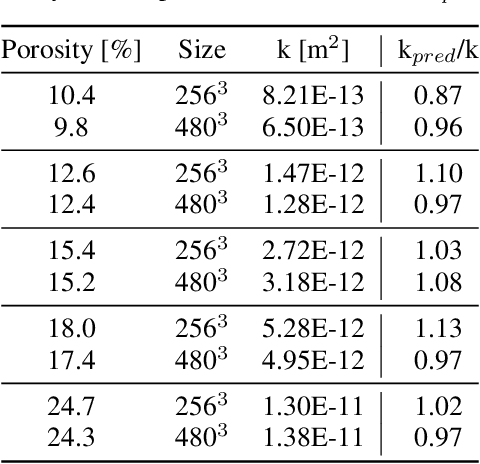

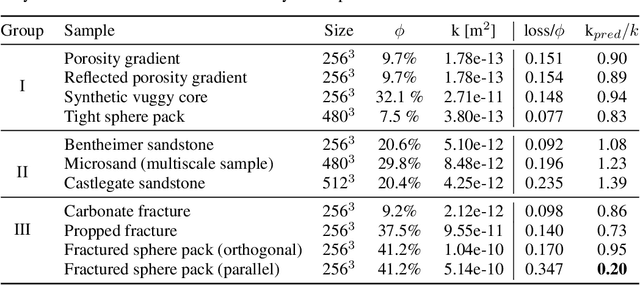

Multi-Scale Neural Networks for Fluid Flow in 3D Porous Media

Feb 10, 2021

Abstract: The permeability of complex porous materials can be obtained via direct flow simulation, which provides the most accurate results, but is very computationally expensive. In particular, the simulation convergence time scales poorly as simulation domains become tighter or more heterogeneous. Semi-analytical models that rely on averaged structural properties (i.e., porosity and tortuosity) have been proposed, but these features only summarize the domain, resulting in limited applicability. On the other hand, data-driven machine learning approaches have shown great promise for building more general models by virtue of accounting for the spatial arrangement of the domain's solid boundaries. However, prior approaches building on the Convolutional Neural Network (ConvNet) literature concerning 2D image recognition problems do not scale well to the large 3D domains required to obtain a Representative Elementary Volume (REV). As such, most prior work focused on homogeneous samples, where a small REV entails that the global nature of fluid flow could be mostly neglected, and accordingly, the memory bottleneck of addressing 3D domains with ConvNets was side-stepped. Therefore, important geometries such as fractures and vuggy domains could not be well-modeled. In this work, we address this limitation with a general multiscale deep learning model that is able to learn from porous media simulation data. By using a coupled set of neural networks that view the domain on different scales, we enable the evaluation of large images in approximately one second on a single Graphics Processing Unit. This model architecture opens up the possibility of modeling domain sizes that would not be feasible using traditional direct simulation tools on a desktop computer.
