Michael Pyrcz

Optimizing Oil and Gas Acquisitions Using Recommender Systems

Oct 07, 2021

Abstract:Well acquisition in the oil and gas industry can often be a hit or miss process, with a poor purchase resulting in substantial loss. Recommender systems suggest items (wells) that users (companies) are likely to buy based on past activity, and applying this system to well acquisition can increase company profits. While traditional recommender systems are impactful enough on their own, they are not optimized. This is because they ignore many of the complexities involved in human decision-making, and frequently make subpar recommendations. Using a preexisting Python implementation of a Factorization Machine results in more accurate recommendations based on a user-level ranking system. We train a Factorization Machine model on oil and gas well data that includes features such as elevation, total depth, and location. The model produces recommendations by using similarities between companies and wells, as well as their interactions. Our model has a hit rate of 0.680, reciprocal rank of 0.469, precision of 0.229, and recall of 0.463. These metrics imply that while our model is able to recommend the correct wells in a general sense, it does not match exact wells to companies via relevance. To improve the model's accuracy, future models should incorporate additional features such as the well's production data and ownership duration as these features will produce more accurate recommendations.

StackGAN: Facial Image Generation Optimizations

Aug 30, 2021

Abstract:Current state-of-the-art photorealistic generators are computationally expensive, involve unstable training processes, and have real and synthetic distributions that are dissimilar in higher-dimensional spaces. To solve these issues, we propose a variant of the StackGAN architecture. The new architecture incorporates conditional generators to construct an image in many stages. In our model, we generate grayscale facial images in two different stages: noise to edges (stage one) and edges to grayscale (stage two). Our model is trained with the CelebA facial image dataset and achieved a Fr\'echet Inception Distance (FID) score of 73 for edge images and a score of 59 for grayscale images generated using the synthetic edge images. Although our model achieved subpar results in relation to state-of-the-art models, dropout layers could reduce the overfitting in our conditional mapping. Additionally, since most images can be broken down into important features, improvements to our model can generalize to other datasets. Therefore, our model can potentially serve as a superior alternative to traditional means of generating photorealistic images.

Multi-Scale Neural Networks for to Fluid Flow in 3D Porous Media

Feb 10, 2021

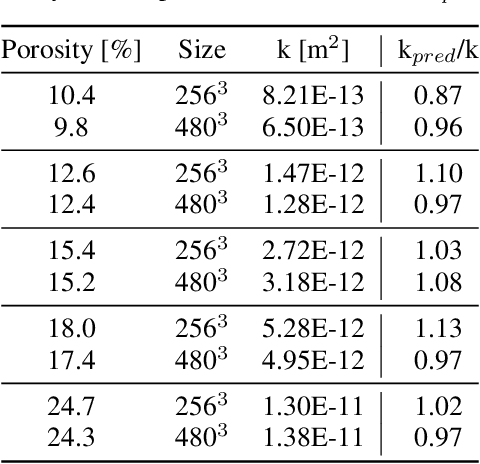

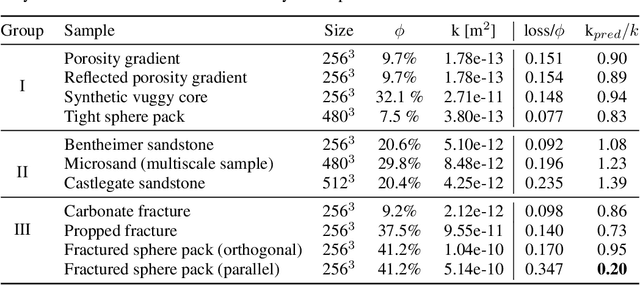

Abstract:The permeability of complex porous materials can be obtained via direct flow simulation, which provides the most accurate results, but is very computationally expensive. In particular, the simulation convergence time scales poorly as simulation domains become tighter or more heterogeneous. Semi-analytical models that rely on averaged structural properties (i.e. porosity and tortuosity) have been proposed, but these features only summarize the domain, resulting in limited applicability. On the other hand, data-driven machine learning approaches have shown great promise for building more general models by virtue of accounting for the spatial arrangement of the domains solid boundaries. However, prior approaches building on the Convolutional Neural Network (ConvNet) literature concerning 2D image recognition problems do not scale well to the large 3D domains required to obtain a Representative Elementary Volume (REV). As such, most prior work focused on homogeneous samples, where a small REV entails that that the global nature of fluid flow could be mostly neglected, and accordingly, the memory bottleneck of addressing 3D domains with ConvNets was side-stepped. Therefore, important geometries such as fractures and vuggy domains could not be well-modeled. In this work, we address this limitation with a general multiscale deep learning model that is able to learn from porous media simulation data. By using a coupled set of neural networks that view the domain on different scales, we enable the evaluation of large images in approximately one second on a single Graphics Processing Unit. This model architecture opens up the possibility of modeling domain sizes that would not be feasible using traditional direct simulation tools on a desktop computer.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge