Ying Yin

Privacy-Preserving Models for Legal Natural Language Processing

Nov 05, 2022

Abstract: Pre-training large transformer models with in-domain data improves domain adaptation and helps gain performance on domain-specific downstream tasks. However, sharing models pre-trained on potentially sensitive data is prone to adversarial privacy attacks. In this paper, we ask to what extent we can guarantee the privacy of pre-training data and, at the same time, achieve better downstream performance on legal tasks without the need for additional labeled data. We extensively experiment with scalable self-supervised learning of transformer models under the formal paradigm of differential privacy and show that under specific training configurations we can improve downstream performance without sacrificing privacy protection for the in-domain data. Our main contribution is utilizing differential privacy for large-scale pre-training of transformer language models in the legal NLP domain, which, to the best of our knowledge, has not been addressed before.

Multi-Scale Neural Networks for Fluid Flow in 3D Porous Media

Feb 10, 2021

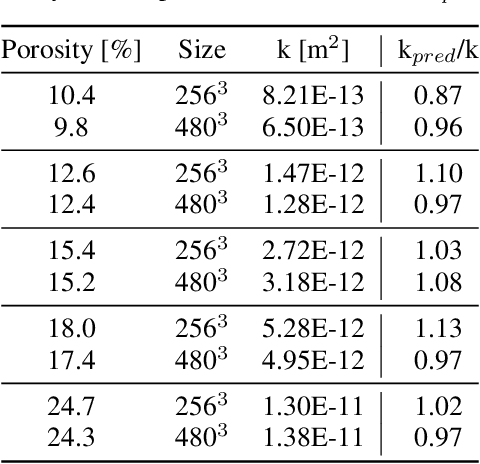

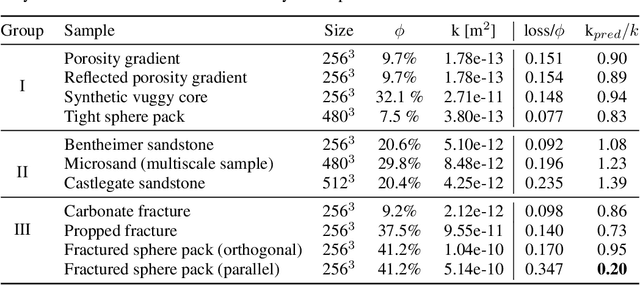

Abstract: The permeability of complex porous materials can be obtained via direct flow simulation, which provides the most accurate results but is very computationally expensive. In particular, the simulation convergence time scales poorly as simulation domains become tighter or more heterogeneous. Semi-analytical models that rely on averaged structural properties (i.e., porosity and tortuosity) have been proposed, but these features only summarize the domain, resulting in limited applicability. On the other hand, data-driven machine learning approaches have shown great promise for building more general models by virtue of accounting for the spatial arrangement of the domain's solid boundaries. However, prior approaches building on the Convolutional Neural Network (ConvNet) literature concerning 2D image recognition problems do not scale well to the large 3D domains required to obtain a Representative Elementary Volume (REV). As such, most prior work focused on homogeneous samples, where a small REV entails that the global nature of fluid flow could be mostly neglected, and accordingly, the memory bottleneck of addressing 3D domains with ConvNets was side-stepped. Therefore, important geometries such as fractures and vuggy domains could not be well-modeled. In this work, we address this limitation with a general multiscale deep learning model that is able to learn from porous media simulation data. By using a coupled set of neural networks that view the domain on different scales, we enable the evaluation of large images in approximately one second on a single Graphics Processing Unit. This model architecture opens up the possibility of modeling domain sizes that would not be feasible using traditional direct simulation tools on a desktop computer.
