Javad Ghaderi

A Vision-Based Analysis of Congestion Pricing in New York City

Feb 03, 2026

Abstract:We examine the impact of New York City's congestion pricing program through automated analysis of traffic camera data. Our computer vision pipeline processes footage from over 900 cameras distributed throughout Manhattan and New York, comparing traffic patterns from November 2024 through the program's implementation in January 2025 until January 2026. We establish baseline traffic patterns and identify systematic changes in vehicle density across the monitored region.
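The baseline-versus-post comparison described in this abstract can be illustrated with a minimal sketch. It assumes per-frame vehicle counts are already available for one camera; the function name `percent_change` and the use of a simple mean are illustrative assumptions, not the paper's actual method.

```python
from statistics import mean


def percent_change(baseline_counts, post_counts):
    """Relative change (in percent) of mean vehicle count after a policy change.

    Both arguments are hypothetical lists of per-frame vehicle counts from one
    camera: one list for the baseline period, one for the post period.
    """
    base = mean(baseline_counts)
    if base == 0:
        raise ValueError("baseline mean is zero; relative change is undefined")
    return 100.0 * (mean(post_counts) - base) / base
```

A negative return value would indicate lower average vehicle density in the post period than in the baseline period.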

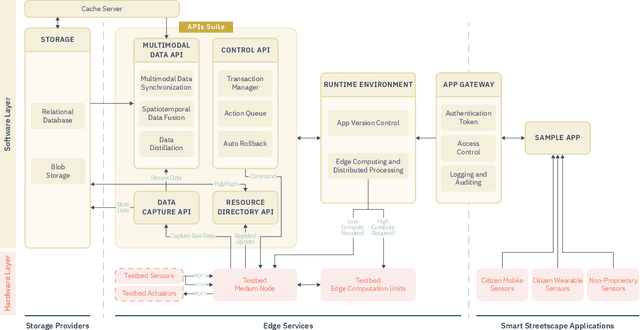

The Streetscape Application Services Stack (SASS): Towards a Distributed Sensing Architecture for Urban Applications

Nov 29, 2024

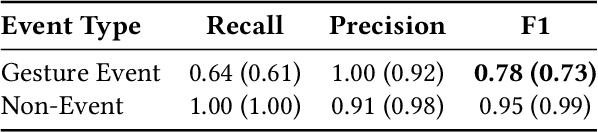

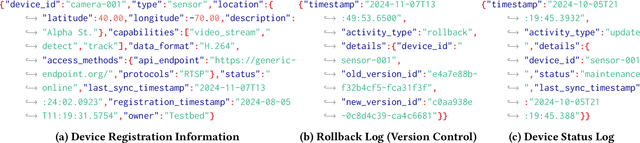

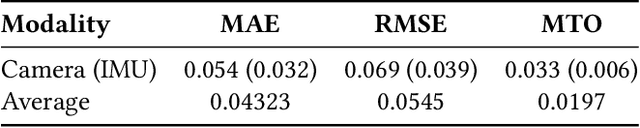

Abstract:As urban populations grow, cities are becoming more complex, driving the deployment of interconnected sensing systems to realize the vision of smart cities. These systems aim to improve safety, mobility, and quality of life through applications that integrate diverse sensors with real-time decision-making. Streetscape applications, which focus on challenges like pedestrian safety and adaptive traffic management, depend on managing distributed, heterogeneous sensor data, aligning information across time and space, and enabling real-time processing. These tasks are inherently complex and often difficult to scale. The Streetscape Application Services Stack (SASS) addresses these challenges with three core services: multimodal data synchronization, spatiotemporal data fusion, and distributed edge computing. By structuring these capabilities as composable abstractions with clear semantics, SASS allows developers to scale streetscape applications efficiently while minimizing the complexity of multimodal integration. We evaluated SASS in two real-world testbed environments: a controlled parking lot and an urban intersection in a major U.S. city. These testbeds allowed us to test SASS under diverse conditions, demonstrating its practical applicability. The Multimodal Data Synchronization service reduced temporal misalignment errors by 88%, achieving synchronization accuracy within 50 milliseconds. The Spatiotemporal Data Fusion service improved detection accuracy for pedestrians and vehicles by over 10%, leveraging multicamera integration. The Distributed Edge Computing service increased system throughput by more than an order of magnitude. Together, these results show how SASS provides the abstractions and performance needed to support real-time, scalable urban applications, bridging the gap between sensing infrastructure and actionable streetscape intelligence.
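To make the idea of temporal alignment within a tolerance concrete, here is a minimal sketch of nearest-timestamp matching between two sensor streams. It is not the SASS API: the function name `align_nearest`, the integer-millisecond timestamps, and the default 50 ms tolerance (borrowed from the accuracy figure in the abstract) are all assumptions made for illustration.

```python
from bisect import bisect_left


def align_nearest(reference_ms, other_ms, tolerance_ms=50):
    """Pair each reference timestamp with the nearest timestamp in other_ms.

    reference_ms: timestamps (integer milliseconds) from the reference stream.
    other_ms: sorted timestamps (integer milliseconds) from a second stream.
    Returns a list of (reference, match) tuples; match is None when no
    timestamp in other_ms lies within tolerance_ms of the reference.
    """
    pairs = []
    for t in reference_ms:
        i = bisect_left(other_ms, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = other_ms[max(i - 1, 0): i + 1]
        best = min(candidates, key=lambda c: abs(c - t), default=None)
        if best is not None and abs(best - t) <= tolerance_ms:
            pairs.append((t, best))
        else:
            pairs.append((t, None))
    return pairs
```

Binary search keeps each lookup logarithmic in the length of the second stream, which matters when streams are long; a production system would also need to handle clock offset and drift between sensors, which this sketch ignores.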

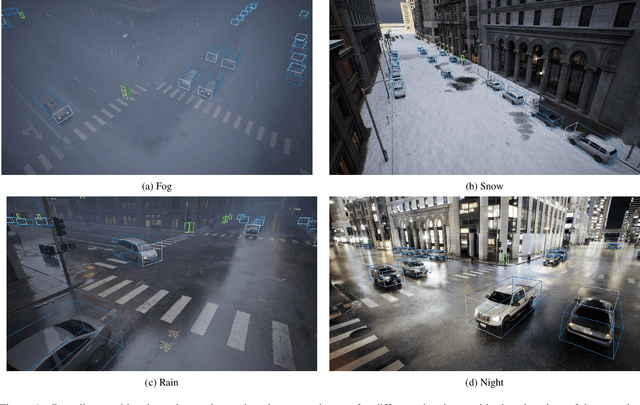

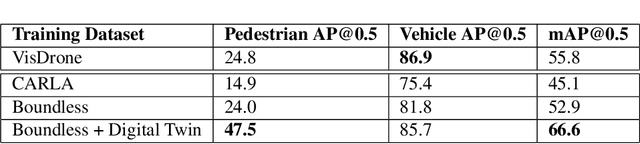

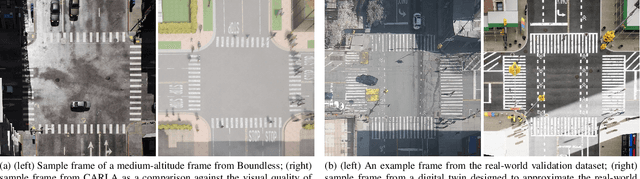

Boundless: Generating Photorealistic Synthetic Data for Object Detection in Urban Streetscapes

Sep 04, 2024

Abstract:We introduce Boundless, a photo-realistic synthetic data generation system for enabling highly accurate object detection in dense urban streetscapes. Boundless can replace massive real-world data collection and manual ground-truth object annotation (labeling) with an automated and configurable process. Boundless is based on the Unreal Engine 5 (UE5) City Sample project with improvements enabling accurate collection of 3D bounding boxes across different lighting and scene variability conditions. We evaluate the performance of object detection models trained on the dataset generated by Boundless when used for inference on a real-world dataset acquired from medium-altitude cameras. We compare the performance of the Boundless-trained model against the CARLA-trained model and observe an improvement of 7.8 mAP. The results we achieved support the premise that synthetic data generation is a credible methodology for training/fine-tuning scalable object detection models for urban scenes.

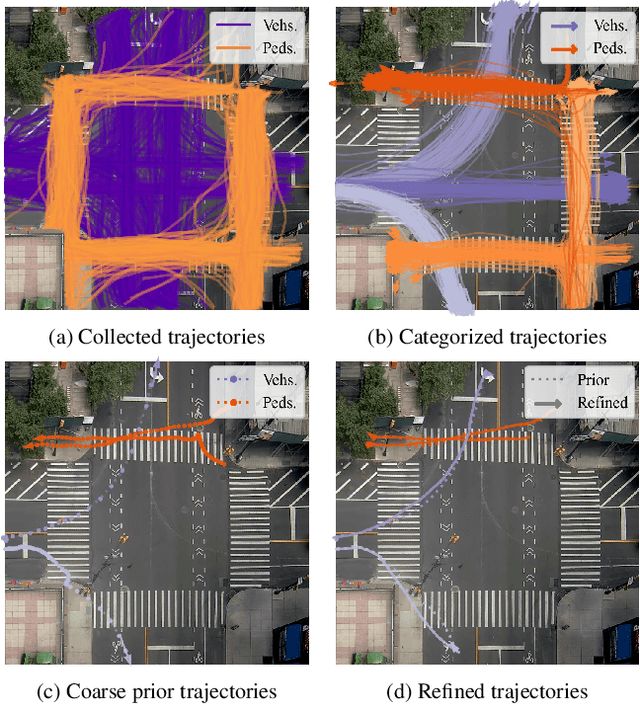

Data-Driven Traffic Simulation for an Intersection in a Metropolis

Aug 01, 2024

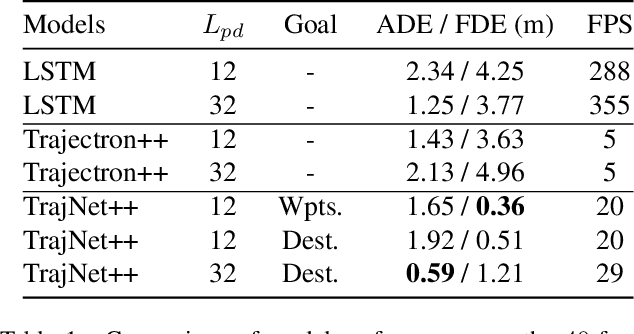

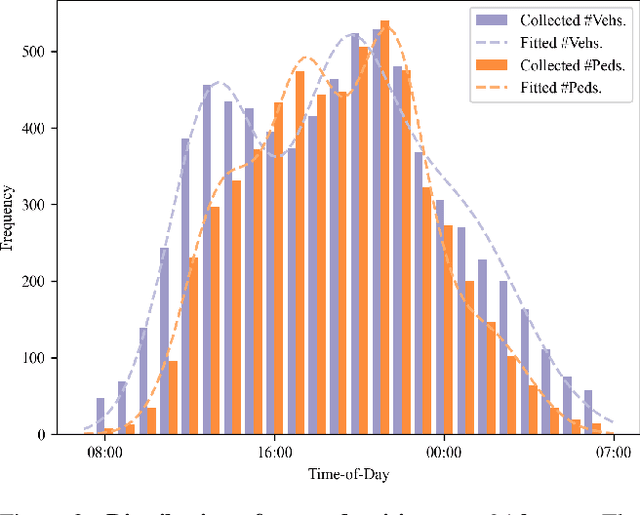

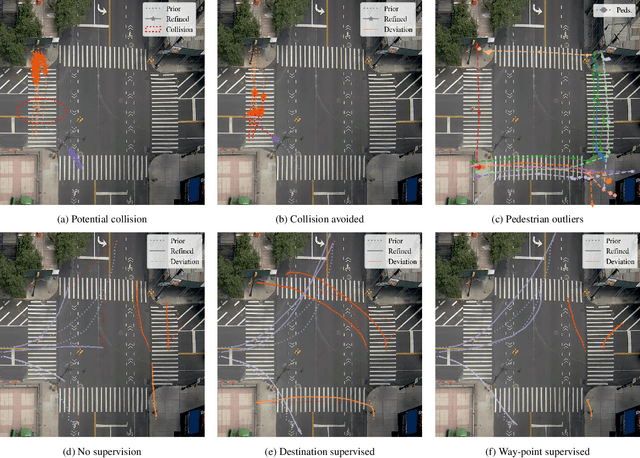

Abstract:We present a novel data-driven simulation environment for modeling traffic in metropolitan street intersections. Using real-world tracking data collected over an extended period of time, we train trajectory forecasting models to learn agent interactions and environmental constraints that are difficult to capture conventionally. Trajectories of new agents are first coarsely generated by sampling from the spatial and temporal generative distributions, then refined using state-of-the-art trajectory forecasting models. The simulation can run either autonomously or under explicit human control conditioned on the generative distributions. We present the experiments for a variety of model configurations. Under an iterative prediction scheme, the way-point-supervised TrajNet++ model obtained 0.36 Final Displacement Error (FDE) at 20 FPS on an NVIDIA A100 GPU.
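For readers unfamiliar with the metric, Final Displacement Error is conventionally the Euclidean distance between the last predicted waypoint and the last ground-truth waypoint, averaged over trajectories. The sketch below shows that standard definition; the function names and the 2D `(x, y)` tuple representation are assumptions, and the paper's exact evaluation protocol and units are not specified here.

```python
import math


def final_displacement_error(predicted, ground_truth):
    """Euclidean distance between the final predicted and final true waypoint.

    Each trajectory is a non-empty sequence of (x, y) tuples.
    """
    (px, py), (gx, gy) = predicted[-1], ground_truth[-1]
    return math.hypot(px - gx, py - gy)


def mean_fde(predicted_trajs, ground_truth_trajs):
    """Average FDE over paired predicted and ground-truth trajectories."""
    errors = [
        final_displacement_error(p, g)
        for p, g in zip(predicted_trajs, ground_truth_trajs)
    ]
    return sum(errors) / len(errors)
```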

Constellation Dataset: Benchmarking High-Altitude Object Detection for an Urban Intersection

Apr 25, 2024

Abstract:We introduce Constellation, a dataset of 13K images suitable for research on detection of objects in dense urban streetscapes observed from high-elevation cameras, collected for a variety of temporal conditions. The dataset addresses the need for curated data to explore problems in small object detection exemplified by the limited pixel footprint of pedestrians observed tens of meters from above. It enables the testing of object detection models for variations in lighting, building shadows, weather, and scene dynamics. We evaluate contemporary object detection architectures on the dataset, observing that state-of-the-art methods have lower performance in detecting small pedestrians compared to vehicles, corresponding to a 10% difference in average precision (AP). Using structurally similar datasets for pretraining the models results in an increase of 1.8% mean AP (mAP). We further find that incorporating domain-specific data augmentations helps improve model performance. Using pseudo-labeled data, obtained from inference outcomes of the best-performing models, improves the performance of the models. Finally, comparing the models trained using the data collected in two different time intervals, we find a performance drift in models due to the changes in intersection conditions over time. The best-performing model achieves a pedestrian AP of 92.0% with 11.5 ms inference time on NVIDIA A100 GPUs, and an mAP of 95.4%.
