Jared J. Beard

Probabilistically Informed Robot Object Search with Multiple Regions

Apr 05, 2024

Abstract: The increasing use of autonomous robot systems in hazardous environments underscores the need for efficient search and rescue operations. Despite significant advancements, existing literature on object search often falls short in overcoming the difficulty of long planning horizons and in dealing with sensor limitations, such as noise. This study introduces a novel approach that formulates the search problem as a belief Markov decision process with options (BMDP-O) to make Monte Carlo tree search (MCTS) a viable tool for overcoming these challenges in large-scale environments. The proposed formulation incorporates sequences of actions (options) to move between regions of interest, enabling the algorithm to scale efficiently to large environments. This approach also enables the use of customizable fields of view, so that it can be used with multiple types of sensors. Experimental results demonstrate the superiority of this approach in large environments when compared to the problem without options and to alternative tools such as receding horizon planners. Because the compute time for the proposed formulation is relatively high, a further approximated "lite" formulation is proposed. The lite formulation finds objects in a comparable number of steps with faster computation.

Feeling Optimistic? Ambiguity Attitudes for Online Decision Making

Mar 07, 2023

Abstract: As autonomous agents enter complex environments, it becomes more difficult to adequately model the interactions between the two. Agents must therefore cope with greater ambiguity (e.g., unknown environments, underdefined models, and vague problem definitions). Despite the consequences of ignoring ambiguity, tools for decision making under ambiguity are understudied. The general approach has been to avoid ambiguity (exploit known information) using robust methods. This work contributes ambiguity attitude graph search (AAGS), which generalizes robust methods with ambiguity attitudes: the ability to trade off between seeking and avoiding ambiguity in the problem. AAGS solves online decision-making problems in which the agent has a limited budget to learn about its environment. To evaluate this approach, AAGS is tasked with path planning in static and dynamic environments. Results demonstrate that appropriate ambiguity attitudes are dependent on the quality of information from the environment. In relatively certain environments, AAGS can readily exploit information with robust policies. Conversely, model complexity reduces the information conveyed by individual samples; this allows the risks taken by optimistic policies to achieve better performance.

Team Mountaineers Space Robotic Challenge Phase-2 Qualification Round Preparation Report

Mar 22, 2020

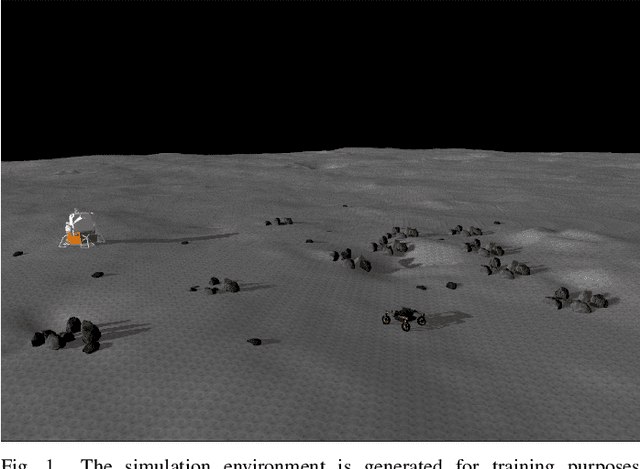

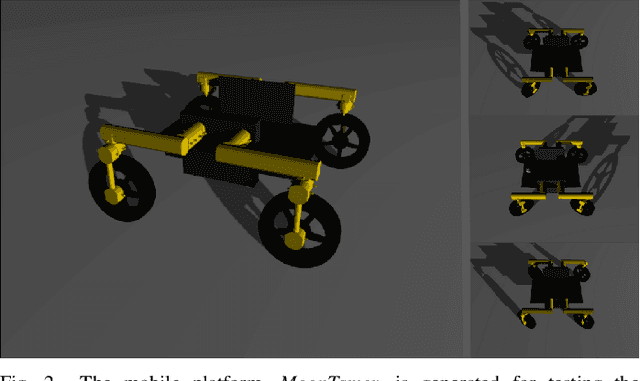

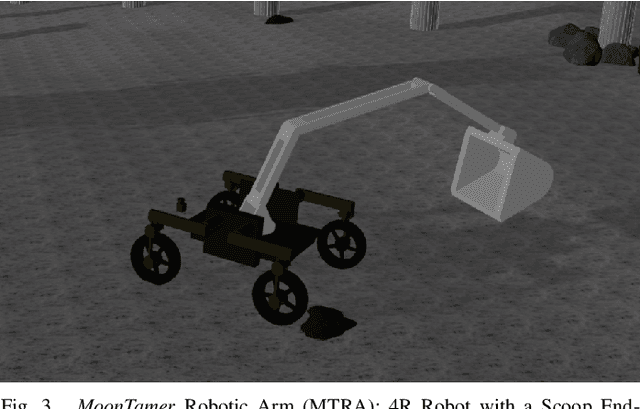

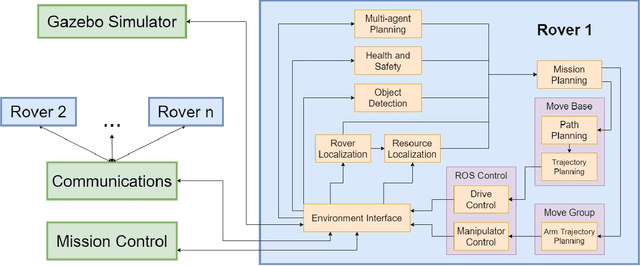

Abstract: Team Mountaineers launched efforts on the NASA Space Robotics Challenge Phase-2 (SRC2). The challenge will be held on lunar terrain with virtual robotic platforms to establish an in-situ resource utilization process. In this report, we provide an overview of a simulation environment, a virtual mobile robot, and a software architecture that were created by Team Mountaineers to prepare for the competition's qualification round before the competition environment was released.
