Janghoon Choi

Meta-Learning with Task-Adaptive Loss Function for Few-Shot Learning

Oct 17, 2021

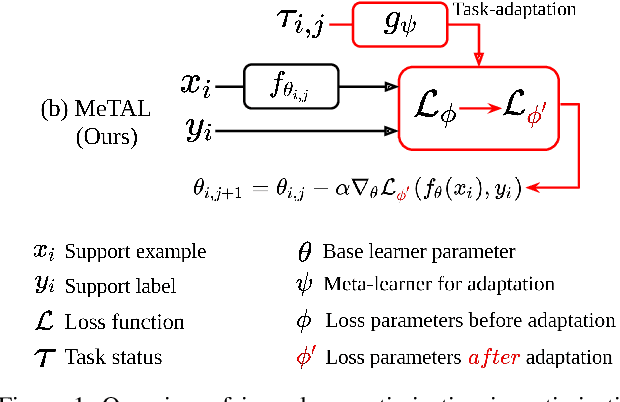

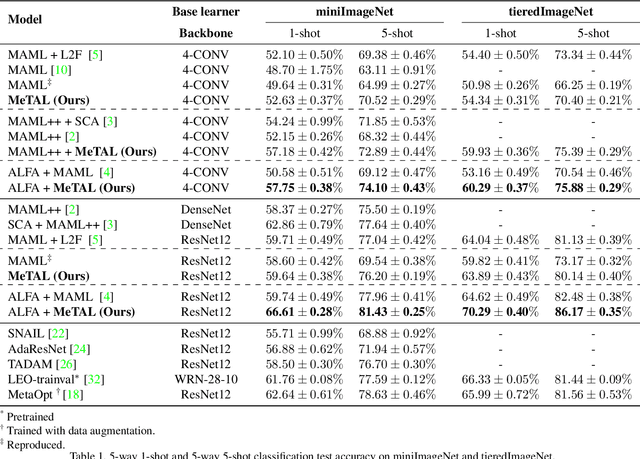

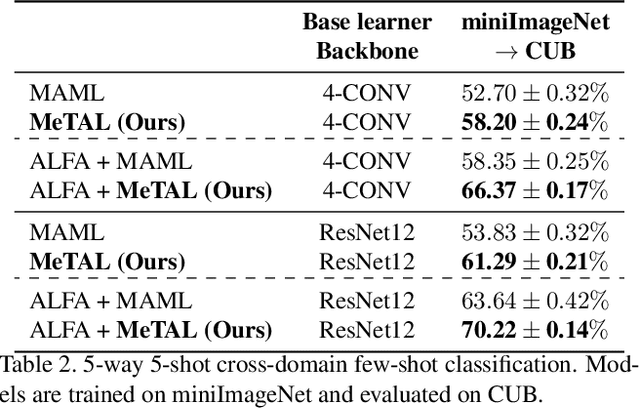

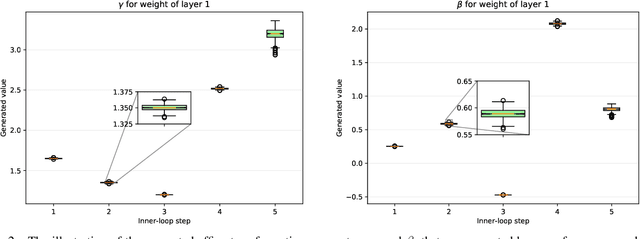

Abstract: In few-shot learning scenarios, the challenge is to generalize and perform well on new unseen examples when only very few labeled examples are available for each task. Model-agnostic meta-learning (MAML) has gained popularity as one of the representative few-shot learning methods for its flexibility and applicability to diverse problems. However, MAML and its variants often resort to a simple loss function without any auxiliary loss function or regularization terms that can help achieve better generalization. The problem is that each application and task may require a different auxiliary loss function, especially when tasks are diverse and distinct. Instead of attempting to hand-design an auxiliary loss function for each application and task, we introduce a new meta-learning framework with a loss function that adapts to each task. Our proposed framework, named Meta-Learning with Task-Adaptive Loss Function (MeTAL), demonstrates effectiveness and flexibility across various domains, such as few-shot classification and few-shot regression.

Searching for Controllable Image Restoration Networks

Dec 21, 2020

Abstract: Diverse user preferences over images have recently led to a great amount of interest in controlling the imagery effects for image restoration tasks. However, existing methods require a separate inference through the entire network for each output, which hinders users from readily comparing multiple imagery effects due to long latency. To this end, we propose a novel framework based on a neural architecture search technique that enables efficient generation of multiple imagery effects via two stages of pruning: task-agnostic and task-specific pruning. Specifically, task-specific pruning learns to adaptively remove the irrelevant network parameters for each task, while task-agnostic pruning learns to find an efficient architecture by sharing the early layers of the network across different tasks. Since the shared layers allow for feature reuse, only a single inference of the task-agnostic layers is needed to generate multiple imagery effects from the input image. Using the proposed task-agnostic and task-specific pruning schemes together significantly reduces the FLOPs and the actual latency of inference compared to the baseline. We reduce the FLOPs by 95.7% when generating 27 imagery effects, and make the GPU latency 73.0% faster on 4K-resolution images.

Meta-Learning with Adaptive Hyperparameters

Oct 31, 2020

Abstract: Despite its popularity, several recent works question the effectiveness of MAML when test tasks are different from training tasks, thus suggesting various task-conditioned methodologies to improve the initialization. Instead of searching for a better task-aware initialization, we focus on a complementary factor in the MAML framework: inner-loop optimization (or fast adaptation). Consequently, we propose a new weight update rule that greatly enhances the fast adaptation process. Specifically, we introduce a small meta-network that can adaptively generate per-step hyperparameters: learning rate and weight decay coefficients. The experimental results validate that the Adaptive Learning of hyperparameters for Fast Adaptation (ALFA) is an equally important ingredient that was often neglected in recent few-shot learning approaches. Surprisingly, fast adaptation from random initialization with ALFA can already outperform MAML.
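As a rough illustration of an inner-loop step with per-step learning rate and weight decay coefficients, the sketch below applies an update of the form `theta <- beta * theta - alpha * grad`. This exact form is an assumption made for illustration, since the abstract does not state the rule. The function `toy_hyperparameter_generator` is a hypothetical hand-written stand-in for the learned meta-network, and the quadratic loss is a toy task, not one from the paper.

```python
from typing import List, Tuple

Vector = List[float]


def adaptive_inner_step(theta: Vector, grad: Vector,
                        alpha: Vector, beta: Vector) -> Vector:
    """One inner-loop step: scale each weight by beta (weight decay),
    then move against the gradient with a per-weight learning rate alpha."""
    return [b * t - a * g for t, g, a, b in zip(theta, grad, alpha, beta)]


def toy_hyperparameter_generator(theta: Vector,
                                 grad: Vector) -> Tuple[Vector, Vector]:
    """Hypothetical stand-in for the meta-network (assumption, not the
    paper's model): maps the current weights and gradients to per-step
    learning rates and weight decay coefficients."""
    alpha = [0.1 / (1.0 + abs(g)) for g in grad]  # smaller steps for large gradients
    beta = [0.99 for _ in theta]                  # mild, constant weight decay
    return alpha, beta


def quadratic_grad(theta: Vector, target: Vector) -> Vector:
    """Gradient of the toy loss sum((theta - target)^2)."""
    return [2.0 * (t - y) for t, y in zip(theta, target)]


def fast_adapt(theta: Vector, target: Vector, steps: int) -> Vector:
    """Run a few inner-loop steps, regenerating hyperparameters at each step."""
    for _ in range(steps):
        grad = quadratic_grad(theta, target)
        alpha, beta = toy_hyperparameter_generator(theta, grad)
        theta = adaptive_inner_step(theta, grad, alpha, beta)
    return theta
```

In this toy setting, calling `fast_adapt([0.0, 0.0], [1.0, -1.0], steps=50)` moves the weights toward the target. In a real system the generator would be a trained network and the gradients would come from a support-set loss.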

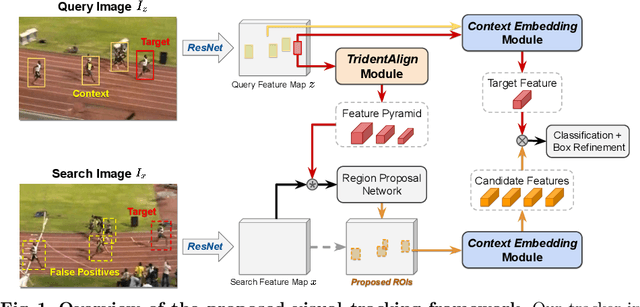

Visual Tracking by TridentAlign and Context Embedding

Jul 14, 2020

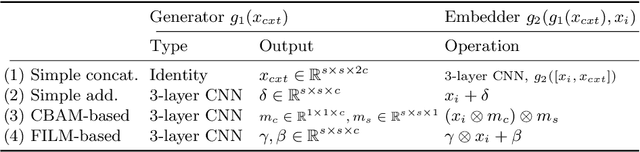

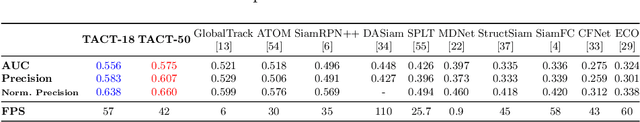

Abstract: Recent advances in Siamese network-based visual tracking methods have enabled high performance on numerous tracking benchmarks. However, extensive scale variations of the target object and distractor objects with similar categories have consistently posed challenges in visual tracking. To address these persisting issues, we propose novel TridentAlign and context embedding modules for Siamese network-based visual tracking methods. The TridentAlign module facilitates adaptability to extensive scale variations and large deformations of the target: it pools the feature representation of the target object into multiple spatial dimensions to form a feature pyramid, which is then utilized in the region proposal stage. Meanwhile, the context embedding module aims to discriminate the target from distractor objects by accounting for the global context information among objects. The context embedding module extracts and embeds the global context information of a given frame into a local feature representation such that the information can be utilized in the final classification stage. Experimental results obtained on multiple benchmark datasets show that the performance of the proposed tracker is comparable to that of state-of-the-art trackers, while the proposed tracker runs at real-time speed.
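The idea of pooling one target feature map into several spatial sizes can be sketched with plain adaptive average pooling. This is a minimal single-channel illustration, not the TridentAlign module itself. The output sizes `(1, 2, 4)` and the names `adaptive_avg_pool` and `feature_pyramid` are assumptions chosen for the example.

```python
from typing import Dict, List, Sequence

FeatureMap = List[List[float]]


def adaptive_avg_pool(feature: FeatureMap, out_size: int) -> FeatureMap:
    """Average-pool a 2D feature map to out_size x out_size, splitting
    rows and columns into (possibly overlapping) near-equal bins."""
    height, width = len(feature), len(feature[0])
    pooled: FeatureMap = []
    for i in range(out_size):
        r0 = (i * height) // out_size
        r1 = -(-((i + 1) * height) // out_size)  # ceiling division
        row: List[float] = []
        for j in range(out_size):
            c0 = (j * width) // out_size
            c1 = -(-((j + 1) * width) // out_size)  # ceiling division
            cells = [feature[r][c]
                     for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled


def feature_pyramid(feature: FeatureMap,
                    sizes: Sequence[int] = (1, 2, 4)) -> Dict[int, FeatureMap]:
    """Pool the same target feature into several spatial sizes
    (the sizes are illustrative assumptions)."""
    return {size: adaptive_avg_pool(feature, size) for size in sizes}
```

Each level of the returned dictionary summarizes the target at a different spatial granularity. A tracker could match these levels against search-region features, although how the pyramid is consumed in the region proposal stage is not specified in the abstract.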

Scene-Adaptive Video Frame Interpolation via Meta-Learning

Apr 02, 2020

Abstract: Video frame interpolation is a challenging problem because there are different scenarios for each video depending on the variety of foreground and background motion, frame rate, and occlusion. It is therefore difficult for a single network with fixed parameters to generalize across different videos. Ideally, one could have a different network for each scenario, but this is computationally infeasible for practical applications. In this work, we propose to adapt the model to each video by making use of additional information that is readily available at test time and yet has not been exploited in previous works. We first show the benefits of "test-time adaptation" through simple fine-tuning of a network, then we greatly improve its efficiency by incorporating meta-learning. We obtain significant performance gains with only a single gradient update without any additional parameters. Finally, we show that our meta-learning framework can be easily applied to any video frame interpolation network and can consistently improve its performance on multiple benchmark datasets.
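A single-gradient-update adaptation step can be sketched on a deliberately tiny model. The "network" below is one blending weight `w` that predicts a middle frame as `w * prev + (1 - w) * next`. The adaptation data are frame triplets taken from the test video itself, which is an assumption about what "information readily available at test time" means here. All names are hypothetical, and the meta-learned initialization is not modeled.

```python
from typing import List, Sequence, Tuple

Frame = List[float]
Triplet = Tuple[Frame, Frame, Frame]  # (previous, middle, next)


def interpolate(w: float, prev: Frame, nxt: Frame) -> Frame:
    """Toy interpolator: per-pixel blend of the two neighbouring frames."""
    return [w * a + (1.0 - w) * b for a, b in zip(prev, nxt)]


def loss_and_grad(w: float, triplet: Triplet) -> Tuple[float, float]:
    """Mean squared error against the known middle frame, and d(loss)/dw."""
    prev, mid, nxt = triplet
    n = len(mid)
    loss, grad = 0.0, 0.0
    for a, m, b in zip(prev, mid, nxt):
        err = w * a + (1.0 - w) * b - m
        loss += err * err / n
        grad += 2.0 * err * (a - b) / n
    return loss, grad


def adapt_once(w: float, triplets: Sequence[Triplet], lr: float) -> float:
    """One gradient step on triplets drawn from the test video;
    no parameters are added to the model."""
    mean_grad = sum(loss_and_grad(w, t)[1] for t in triplets) / len(triplets)
    return w - lr * mean_grad
```

For a triplet whose middle frame was generated with blend weight 0.7, starting from `w = 0.5` and calling `adapt_once` with `lr = 0.1` moves `w` toward 0.7 and lowers the loss on that triplet.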

Real-time visual tracking by deep reinforced decision making

Aug 17, 2018

Abstract: One of the major challenges of the model-free visual tracking problem has been the difficulty originating from the unpredictable and drastic changes in the appearance of the objects we aim to track. Existing methods tackle this problem by updating the appearance model on-line in order to adapt to the changes in the appearance. Despite the success of these methods, however, inaccurate and erroneous updates of the appearance model result in tracker drift. In this paper, we introduce a novel real-time visual tracking algorithm based on a template selection strategy constructed by deep reinforcement learning methods. The tracking algorithm utilizes this strategy to choose the appropriate template for tracking a given frame. The template selection strategy is self-learned by utilizing a simple policy gradient method on numerous training episodes randomly generated from a tracking benchmark dataset. Our proposed reinforcement learning framework is generally applicable to other confidence map based tracking algorithms. The experiment shows that our tracking algorithm runs at a real-time speed of 43 fps and that the proposed policy network effectively decides the appropriate template for successful visual tracking.

Deep Meta Learning for Real-Time Visual Tracking based on Target-Specific Feature Space

Dec 26, 2017

Abstract: In this paper, we propose a novel on-line visual tracking framework based on a Siamese matching network and a meta-learner network, which runs at real-time speed. Conventional deep convolutional feature based discriminative visual tracking algorithms require continuous re-training of classifiers or correlation filters, which involves solving complex optimization tasks, to adapt to the new appearance of a target object. To remove this process, our proposed algorithm incorporates a meta-learner network that provides the matching network with new appearance information of the target object by adding a target-specific feature space. The parameters for the target-specific feature space are provided instantly from a single forward-pass of the meta-learner network. Because this eliminates the need to continuously solve complex optimization tasks in the course of tracking, our algorithm runs at a real-time speed of 62 fps while maintaining competitive performance against other state-of-the-art tracking algorithms, as the experimental results demonstrate.
