Jane Chandlee

Learning with Partially Ordered Representations

Jun 23, 2019

Abstract: This paper examines the characterization and learning of grammars defined with enriched representational models. Model-theoretic approaches to formal language theory traditionally assume that each position in a string belongs to exactly one unary relation. We consider unconventional string models where positions can have multiple, shared properties, which are arguably useful in many applications. We show the structures given by these models are partially ordered, and present a learning algorithm that exploits this ordering relation to effectively prune the hypothesis space. We prove this learning algorithm, which takes positive examples as input, finds the most general grammar which covers the data.

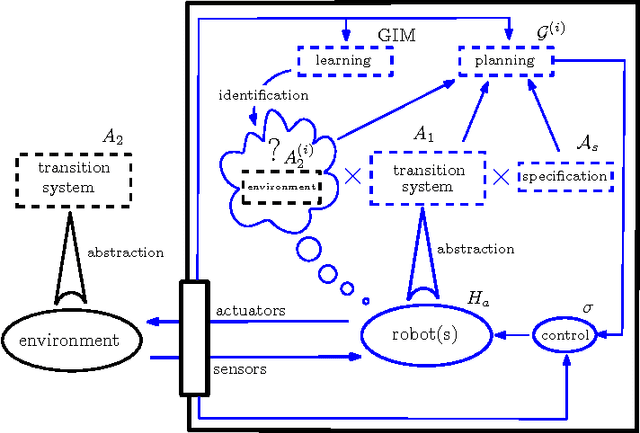

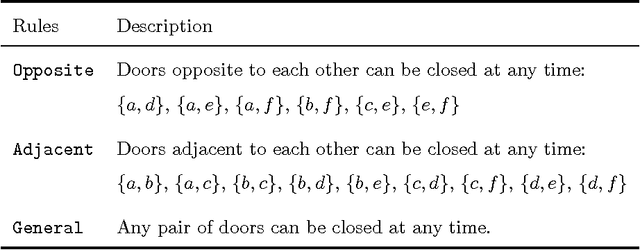

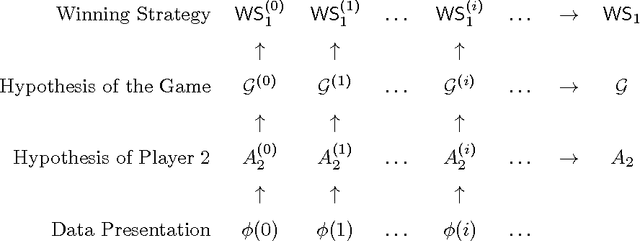

Symbolic Planning and Control Using Game Theory and Grammatical Inference

Oct 05, 2012

Abstract: This paper presents an approach that brings together game theory with grammatical inference and discrete abstractions in order to synthesize control strategies for hybrid dynamical systems performing tasks in partially unknown but rule-governed adversarial environments. The combined formulation guarantees that a system specification is met if (a) the true model of the environment is in the class of models inferable from a positive presentation, (b) a characteristic sample is observed, and (c) the task specification is satisfiable given the capabilities of the system (agent) and the environment.
