James M. Keller

Heartbeat Detection from Ballistocardiogram using Transformer Network

Dec 18, 2024

Abstract: Longitudinal monitoring of heart rate (HR) and heart rate variability (HRV) can aid in tracking cardiovascular diseases (CVDs), sleep quality, and sleep disorders, and can reflect autonomic nervous system activity, stress levels, and overall well-being. These metrics are valuable in both clinical and everyday settings. In this paper, we present a transformer network aimed primarily at detecting the precise timing of heartbeats from a predicted electrocardiogram (ECG) derived from an input ballistocardiogram (BCG). We compared the performance of segment and subject models across three datasets: a lab dataset with 46 young subjects, an elder dataset with 28 elderly adults, and a combined dataset. The segment model demonstrated superior performance, with correlation coefficients of 0.97 for HR and mean heartbeat interval (MHBI) when compared to ground truth. This non-invasive method offers significant potential for long-term, in-home HR and HRV monitoring, aiding in the early indication and prevention of cardiovascular issues.
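
The abstract reports HR and MHBI but does not say how they are computed from the detected beat times. As a minimal sketch, assuming the usual definitions (MHBI as the mean of successive beat-to-beat intervals, HR as 60 divided by MHBI), the two metrics could be derived as follows. The function name `hr_and_mhbi` and the sample beat times are hypothetical and are not taken from the paper.

```python
from typing import Sequence, Tuple


def hr_and_mhbi(beat_times_s: Sequence[float]) -> Tuple[float, float]:
    """Return (heart rate in beats per minute, mean heartbeat interval in seconds).

    Assumption: beat_times_s holds detected beat timestamps in seconds,
    sorted in ascending order. These are illustrative definitions, not
    necessarily the ones used by the authors.
    """
    # Successive differences between neighbouring beats.
    intervals = [t2 - t1 for t1, t2 in zip(beat_times_s, beat_times_s[1:])]
    if not intervals:
        raise ValueError("need at least two beat times")
    mhbi = sum(intervals) / len(intervals)
    hr_bpm = 60.0 / mhbi
    return hr_bpm, mhbi


# Hypothetical beat times, one beat every 0.8 s.
hr_bpm, mhbi = hr_and_mhbi([0.0, 0.8, 1.6, 2.4])
```

Under these assumptions, evenly spaced beats 0.8 s apart correspond to a heart rate of about 75 beats per minute.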

StreamSoNG: A Soft Streaming Classification Approach

Oct 01, 2020

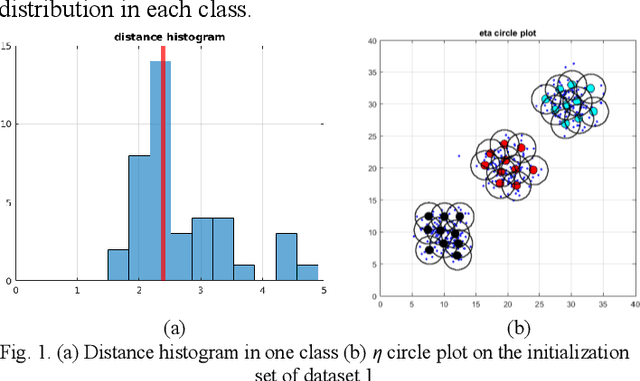

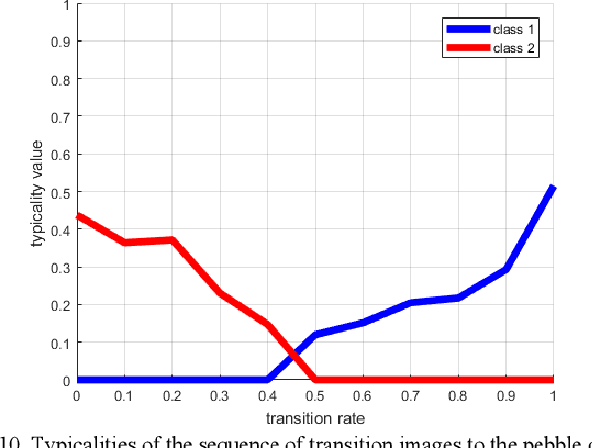

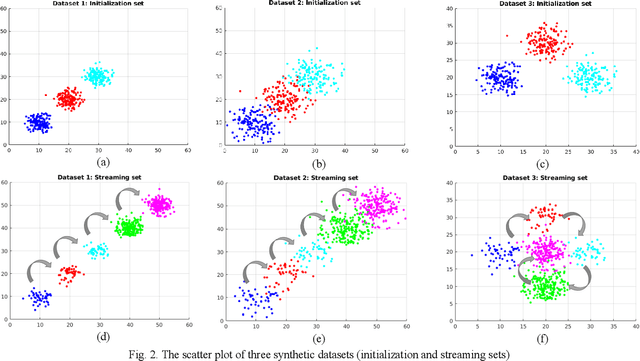

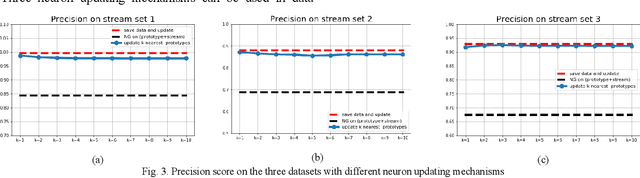

Abstract: Examining most streaming clustering algorithms leads to the understanding that they are actually incremental classification models. They model existing and newly discovered structures via summary information that we call footprints. Incoming data is normally assigned crisp labels (into one of the structures) and that structure's footprints are incrementally updated. There is no reason that these assignments need to be crisp. In this paper, we propose a new streaming classification algorithm that uses Neural Gas prototypes as footprints and produces a possibilistic label vector (typicalities) for each incoming vector. These typicalities are generated by a modified possibilistic k-nearest neighbor algorithm. The approach is tested on synthetic and real image datasets with excellent results.

Enabling Explainable Fusion in Deep Learning with Fuzzy Integral Neural Networks

May 10, 2019

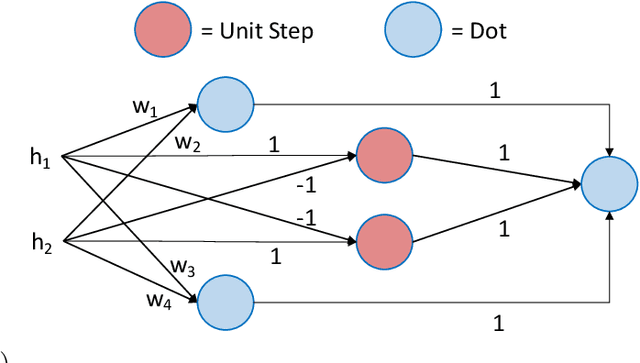

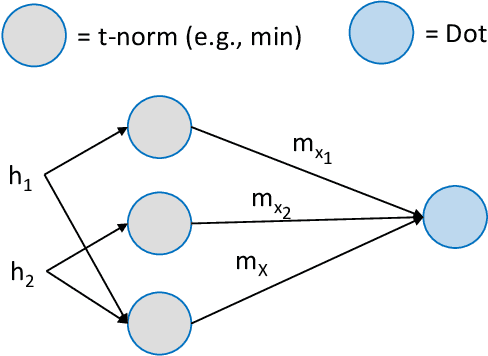

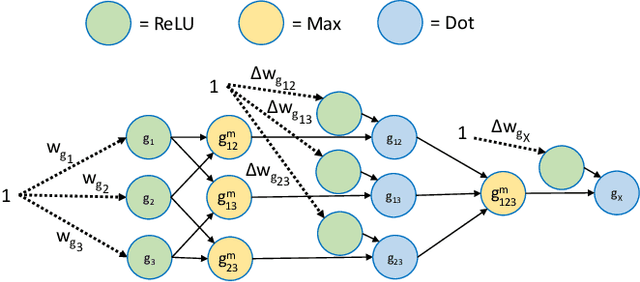

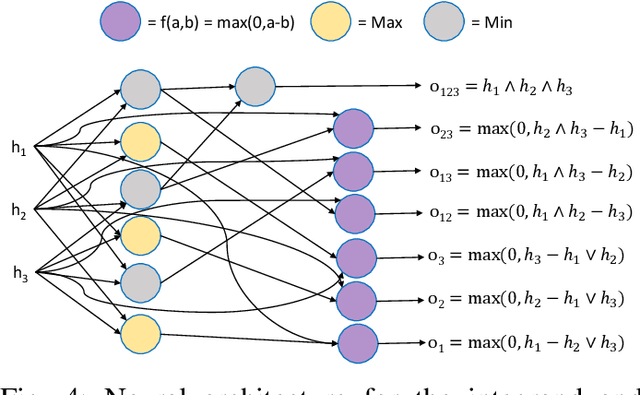

Abstract: Information fusion is an essential part of numerous engineering systems and biological functions, e.g., human cognition. Fusion occurs at many levels, ranging from the low-level combination of signals to the high-level aggregation of heterogeneous decision-making processes. While the last decade has witnessed an explosion of research in deep learning, fusion in neural networks has not observed the same revolution. Specifically, most neural fusion approaches are ad hoc, are not understood, are distributed versus localized, and/or explainability is low (if present at all). Herein, we prove that the fuzzy Choquet integral (ChI), a powerful nonlinear aggregation function, can be represented as a multi-layer network, referred to hereafter as ChIMP. We also put forth an improved ChIMP (iChIMP) that leads to a stochastic gradient descent-based optimization in light of the exponential number of ChI inequality constraints. An additional benefit of ChIMP/iChIMP is that it enables eXplainable AI (XAI). Synthetic validation experiments are provided and iChIMP is applied to the fusion of a set of heterogeneous architecture deep models in remote sensing. We show an improvement in model accuracy and our previously established XAI indices shed light on the quality of our data, model, and its decisions.
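
For readers unfamiliar with the aggregation function named in the abstract, the following is a minimal sketch of the standard discrete Choquet integral, not the ChIMP or iChIMP network itself. It assumes the common sorted form: order the inputs from largest to smallest, then sum each input weighted by the increase in the fuzzy measure as its source joins the set of sources seen so far. The function name `choquet_integral`, the callable-based measure, and the sample inputs are illustrative assumptions.

```python
from typing import Callable, FrozenSet, Sequence


def choquet_integral(
    h: Sequence[float],
    g: Callable[[FrozenSet[int]], float],
) -> float:
    """Discrete Choquet integral of inputs h with respect to fuzzy measure g.

    Assumption: g maps a set of source indices to a value in [0, 1], is
    monotone under set inclusion, and returns 1 for the full set.
    """
    # Visit sources from the largest input to the smallest.
    order = sorted(range(len(h)), key=lambda i: h[i], reverse=True)
    total = 0.0
    prev_measure = 0.0  # measure of the empty set
    chosen = set()
    for i in order:
        chosen.add(i)
        measure = g(frozenset(chosen))
        # Weight each input by the gain in measure from adding its source.
        total += h[i] * (measure - prev_measure)
        prev_measure = measure
    return total


# Hypothetical inputs from three sources.
h = [0.2, 0.9, 0.5]
n = len(h)

# Three simple measures that recover familiar operators.
as_max = choquet_integral(h, lambda a: 1.0 if a else 0.0)
as_min = choquet_integral(h, lambda a: 1.0 if len(a) == n else 0.0)
as_mean = choquet_integral(h, lambda a: len(a) / n)
```

The three measures at the end show why the integral is a flexible aggregator: depending on the measure, the same function reduces to the maximum, the minimum, or the arithmetic mean of the inputs. A general measure on N sources has a value for each of the 2^N subsets, which is the source of the exponential number of monotonicity constraints the abstract mentions.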
