James A. Yorke

Network Deconvolution

May 28, 2019

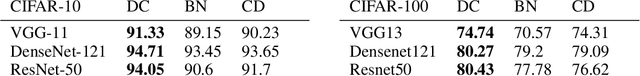

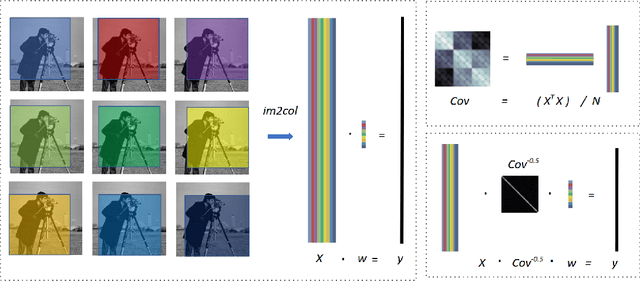

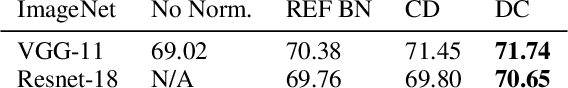

Abstract:Convolution is a central operation in Convolutional Neural Networks (CNNs), which applies a kernel or mask to overlapping regions shifted across the image. In this work we show that the underlying kernels are trained with highly correlated data, which leads to co-adaptation of model weights. To address this issue we propose what we call network deconvolution, a procedure that aims to remove pixel-wise and channel-wise correlations before the data is fed into each layer. We show that by removing this correlation we are able to achieve better convergence rates during model training with superior results without the use of batch normalization on the CIFAR-10, CIFAR-100, MNIST, Fashion-MNIST datasets, as well as against reference models from "model zoo" on the ImageNet standard benchmark.

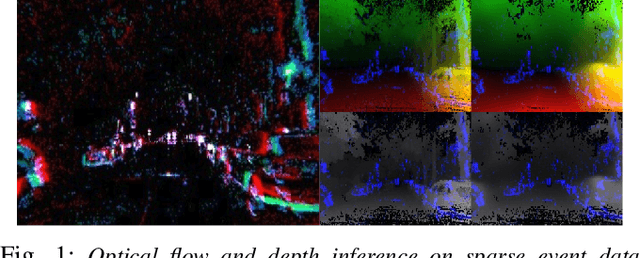

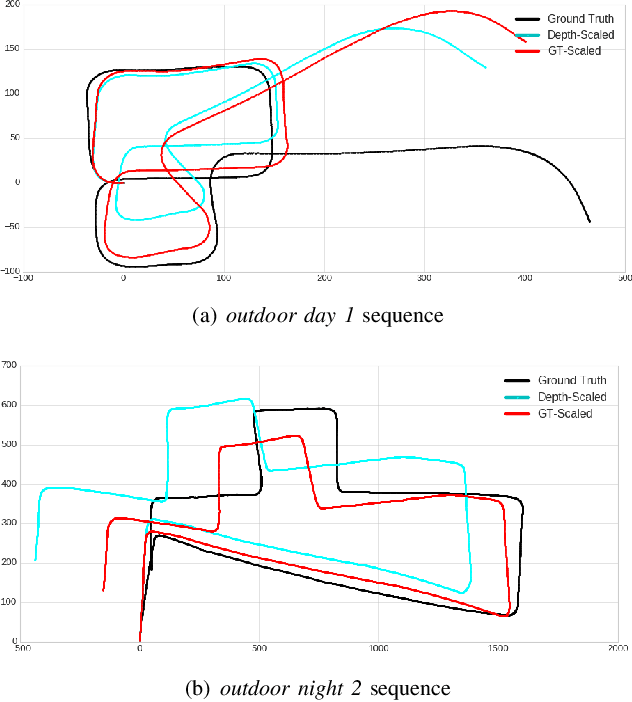

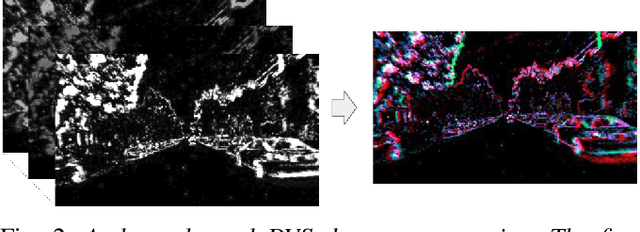

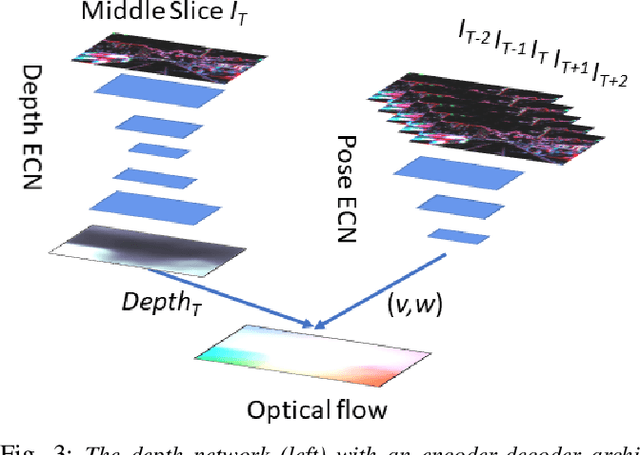

Unsupervised Learning of Dense Optical Flow, Depth and Egomotion from Sparse Event Data

Feb 25, 2019

Abstract:In this work we present a lightweight, unsupervised learning pipeline for \textit{dense} depth, optical flow and egomotion estimation from sparse event output of the Dynamic Vision Sensor (DVS). To tackle this low level vision task, we use a novel encoder-decoder neural network architecture - ECN. Our work is the first monocular pipeline that generates dense depth and optical flow from sparse event data only. The network works in self-supervised mode and has just 150k parameters. We evaluate our pipeline on the MVSEC self driving dataset and present results for depth, optical flow and and egomotion estimation. Due to the lightweight design, the inference part of the network runs at 250 FPS on a single GPU, making the pipeline ready for realtime robotics applications. Our experiments demonstrate significant improvements upon previous works that used deep learning on event data, as well as the ability of our pipeline to perform well during both day and night.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge