Jakub Fil

Heterogeneous computing platform for real-time robotics

Jan 13, 2026

Abstract: After Industry 4.0 embraced tight integration between machinery (OT), software (IT), and the Internet, creating a web of sensors, data, and algorithms in service of efficient and reliable production, a new concept, Society 5.0, is emerging, in which the infrastructure of a city will be instrumented to increase reliability, efficiency, and safety. Robotics will play a pivotal role in enabling this vision, which is being pioneered by the NEOM initiative: a smart city co-inhabited by humans and robots. In this paper we explore the computing platform that will be required to enable this vision. We show how neuromorphic computing hardware, exemplified by the Loihi2 processor used in conjunction with event-based cameras, can be used for sensing, real-time perception, and interaction, and how it can be combined with a local AI compute cluster (GPUs) for high-level language processing, cognition, and task planning. We demonstrate the use of this hybrid computing architecture in an interactive task in which a humanoid robot plays a musical instrument with a human. Central to our design is the efficient and seamless integration of disparate components, ensuring that the synergy between software and hardware maximizes overall performance and responsiveness. Our proposed system architecture underscores the potential of heterogeneous computing architectures in advancing robotic autonomy and interactive intelligence, pointing toward a future where such integrated systems become the norm in complex, real-time applications.

A thermodynamically consistent chemical spiking neuron capable of autonomous Hebbian learning

Sep 28, 2020

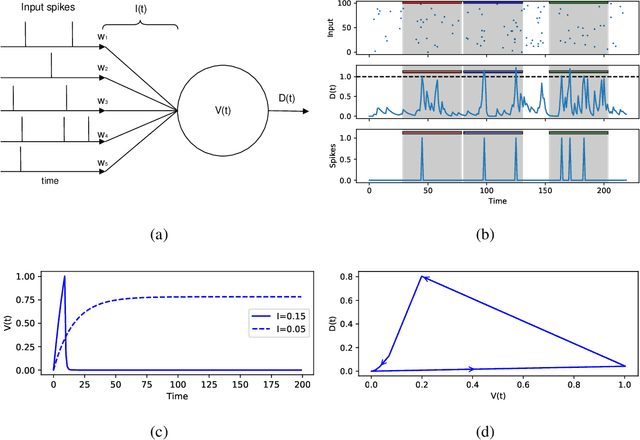

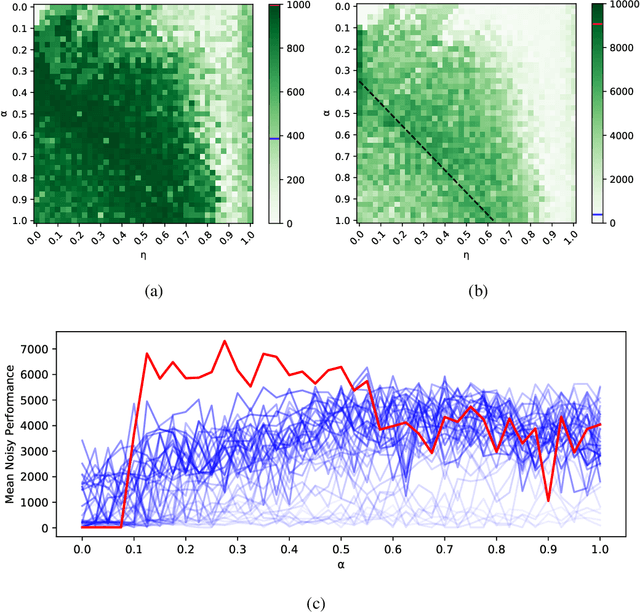

Abstract: We propose a fully autonomous, thermodynamically consistent set of chemical reactions that implements a spiking neuron. This chemical neuron (CN) is able to learn input patterns in a Hebbian fashion. The system is scalable to arbitrarily many input channels. We demonstrate its performance in learning frequency biases in the input as well as correlations between different input channels. Efficient computation of time correlations requires a highly non-linear activation function, and we discuss the resource requirements of such a function. In addition to the thermodynamically consistent model of the CN, we also propose a biologically plausible version that could be engineered in a synthetic biology context.
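The abstract does not spell out the reaction network, so the sketch below is not the CN itself. It is a conventional discrete-time analogue, assuming a leaky integrate-and-fire neuron, Bernoulli input spikes, and a trace-based Hebbian rule with weight normalisation; `hebbian_lif` and all of its parameters are hypothetical. It illustrates the "learning frequency biases" behaviour: a channel that fires more often is more likely to have been active just before an output spike, so its share of the total weight grows.

```python
import random


def hebbian_lif(rates, n_steps=2000, threshold=1.0, leak=0.9, lr=0.01,
                trace_decay=0.8, w_total=1.0, seed=0):
    """Leaky integrate-and-fire neuron with a normalised, trace-based Hebbian rule.

    rates: per-channel spike probability per time step (Bernoulli inputs).
    Returns (weights, number_of_output_spikes).
    """
    rng = random.Random(seed)
    n = len(rates)
    w = [w_total / n] * n   # equal initial weights summing to w_total
    trace = [0.0] * n       # exponentially decaying record of recent input spikes
    v = 0.0                 # membrane potential
    n_out = 0
    for _ in range(n_steps):
        spikes = [1.0 if rng.random() < p else 0.0 for p in rates]
        trace = [trace_decay * t + s for t, s in zip(trace, spikes)]
        v = leak * v + sum(wi * si for wi, si in zip(w, spikes))
        if v >= threshold:
            n_out += 1
            v = 0.0  # reset after an output spike
            # Hebbian step: potentiate inputs that were recently active
            w = [wi + lr * t for wi, t in zip(w, trace)]
            # Normalise so the total weight stays fixed (prevents runaway growth)
            s = sum(w)
            w = [w_total * wi / s for wi in w]
    return w, n_out


if __name__ == "__main__":
    weights, n_out = hebbian_lif([0.5, 0.05])
    print(weights, n_out)
```

Without the normalisation step a plain Hebbian rule drives every weight to its ceiling, which would erase the frequency bias the neuron is supposed to learn.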

Minimal spiking neuron for solving multi-label classification tasks

Mar 05, 2020

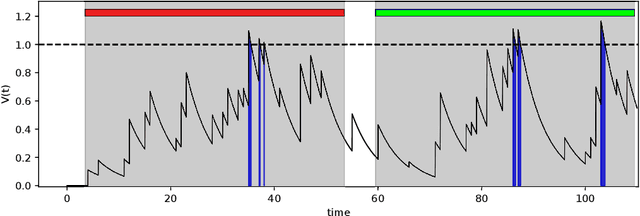

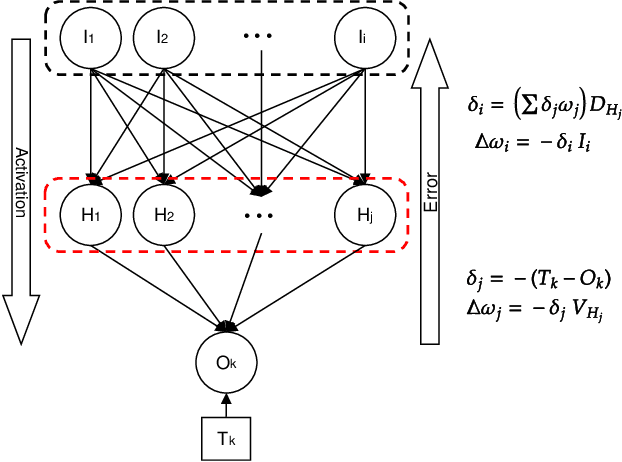

Abstract: The Multi-Spike Tempotron (MST) is a powerful single spiking neuron model that can solve complex supervised classification tasks. While powerful, it is also internally complex, computationally expensive to evaluate, and not suitable for neuromorphic hardware. Here we aim to understand whether it is possible to simplify the MST model, while retaining its ability to learn and to process information. To this end, we introduce a family of Generalised Neuron Models (GNM) which are a special case of the Spike Response Model and much simpler and cheaper to simulate than the MST. We find that over a wide range of parameters the GNM can learn at least as well as the MST. We identify the temporal autocorrelation of the membrane potential as the single most important ingredient of the GNM which enables it to classify multiple spatio-temporal patterns. We also interpret the GNM as a chemical system, thus conceptually bridging computation by neural networks with molecular information processing. We conclude the paper by proposing alternative training approaches for the GNM including error trace learning and error backpropagation.
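The abstract states that the GNM is a special case of the Spike Response Model (SRM) and credits the temporal autocorrelation of the membrane potential for its performance. The exact GNM kernels are not given here, so the sketch below is a generic discrete-time SRM with assumed exponential kernels; `srm_neuron`, `autocorrelation`, and every parameter are hypothetical. With an exponential PSP kernel of time constant `tau`, the sub-threshold potential driven by random input spikes has an autocorrelation that decays roughly as exp(-lag/tau), so `tau` sets how long the potential "remembers" its input.

```python
import math
import random


def srm_neuron(spike_trains, weights, tau=10.0, threshold=1.0,
               eta0=-1.0, tau_ref=5.0):
    """Discrete-time Spike Response Model (unit time step).

    u(t) = sum_i w_i * sum_f eps(t - t_i^f) + sum_f eta(t - t^f), with
    eps(s) = exp(-s / tau) and eta(s) = eta0 * exp(-s / tau_ref).
    spike_trains: per-channel 0/1 lists of equal length.
    Returns (membrane potential per step, list of output spike times).
    """
    decay = math.exp(-1.0 / tau)
    ref_decay = math.exp(-1.0 / tau_ref)
    psp, ref = 0.0, 0.0
    u_trace, out_spikes = [], []
    for t in range(len(spike_trains[0])):
        # Exponential kernels can be evaluated recursively instead of summing over past spikes
        psp = decay * psp + sum(w * train[t] for w, train in zip(weights, spike_trains))
        ref = ref_decay * ref
        u = psp + ref
        if u >= threshold:
            out_spikes.append(t)
            ref += eta0  # after-spike kernel lowers u from the next step on
        u_trace.append(u)
    return u_trace, out_spikes


def autocorrelation(x, lag):
    """Normalised autocorrelation of a sequence at a given lag."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x)
    if var == 0.0:
        return 0.0
    cov = sum((x[i] - mean) * (x[i + lag] - mean) for i in range(len(x) - lag))
    return cov / var


if __name__ == "__main__":
    rng = random.Random(1)
    inputs = [[1 if rng.random() < 0.1 else 0 for _ in range(5000)] for _ in range(3)]
    for tau in (2.0, 20.0):
        # An infinite threshold disables output spikes, isolating the PSP term
        u, _ = srm_neuron(inputs, [0.3, 0.3, 0.3], tau=tau, threshold=float("inf"))
        print(tau, round(autocorrelation(u, 5), 2))
```

Exponential kernels are chosen only because they make the recursion one line; other SRM kernels would need an explicit sum over past spike times.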

Multi³Net: Segmenting Flooded Buildings via Fusion of Multiresolution, Multisensor, and Multitemporal Satellite Imagery

Dec 05, 2018

Abstract: We propose a novel approach for rapid segmentation of flooded buildings by fusing multiresolution, multisensor, and multitemporal satellite imagery in a convolutional neural network. Our model significantly expedites the generation of satellite imagery-based flood maps, crucial for first responders and local authorities in the early stages of flood events. By incorporating multitemporal satellite imagery, our model allows for rapid and accurate post-disaster damage assessment and can be used by governments to better coordinate medium- and long-term financial assistance programs for affected areas. The network consists of multiple streams of encoder-decoder architectures that extract spatiotemporal information from medium-resolution images and spatial information from high-resolution images before fusing the resulting representations into a single medium-resolution segmentation map of flooded buildings. We compare our model to state-of-the-art methods for building footprint segmentation as well as to alternative fusion approaches for the segmentation of flooded buildings and find that our model performs best on both tasks. We also demonstrate that our model produces highly accurate segmentation maps of flooded buildings using only publicly available medium-resolution data instead of significantly more detailed but sparsely available very high-resolution data. We release the first open-source dataset of fully preprocessed and labeled multiresolution, multispectral, and multitemporal satellite images of disaster sites along with our source code.
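The abstract describes separate encoder-decoder streams whose representations are fused into one medium-resolution segmentation map, but it does not specify the fusion layer. Below is a minimal late-fusion sketch, assuming the high-resolution stream's feature map is average-pooled onto the medium-resolution grid, concatenated channel-wise with the medium-resolution features, and classified per pixel by a 1×1 linear layer with a sigmoid. The encoders are omitted; `avg_pool`, `fuse_and_segment`, the weights, and the feature maps are hypothetical placeholders, and feature maps are nested Python lists indexed `[channel][row][column]`.

```python
import math


def avg_pool(fmap, factor):
    """Average-pool a [C][H][W] feature map by an integer factor."""
    out = []
    for ch in fmap:
        h, w = len(ch) // factor, len(ch[0]) // factor
        out.append([[sum(ch[i * factor + di][j * factor + dj]
                         for di in range(factor) for dj in range(factor)) / factor ** 2
                     for j in range(w)] for i in range(h)])
    return out


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fuse_and_segment(medium_feats, high_feats, factor, weights, bias):
    """Pool high-res features onto the medium-res grid, concatenate channels,
    and apply a per-pixel linear layer plus sigmoid (a 1x1 convolution)."""
    pooled = avg_pool(high_feats, factor)
    stacked = medium_feats + pooled  # channel-wise concatenation
    h, w = len(stacked[0]), len(stacked[0][0])
    if any(len(ch) != h or len(ch[0]) != w for ch in stacked):
        raise ValueError("streams do not align on the medium-resolution grid")
    if len(weights) != len(stacked):
        raise ValueError("need one weight per fused channel")
    return [[sigmoid(bias + sum(wc * ch[i][j] for wc, ch in zip(weights, stacked)))
             for j in range(w)] for i in range(h)]
```

Pooling the high-resolution stream, rather than upsampling the medium-resolution one, keeps the output on the medium-resolution grid that the abstract names as the target.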
