Minimal spiking neuron for solving multi-label classification tasks

Paper and Code

Mar 05, 2020

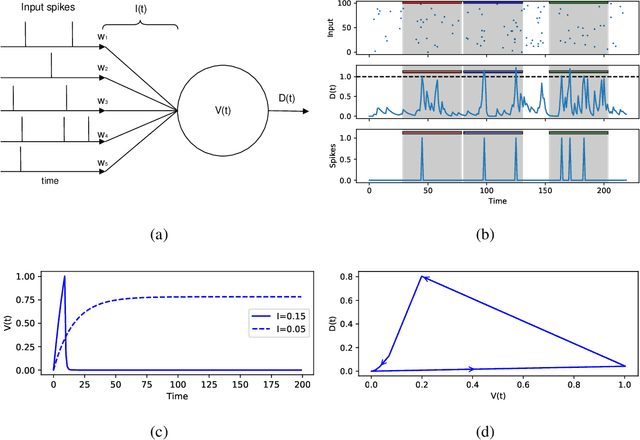

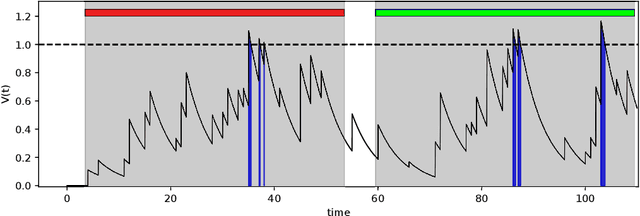

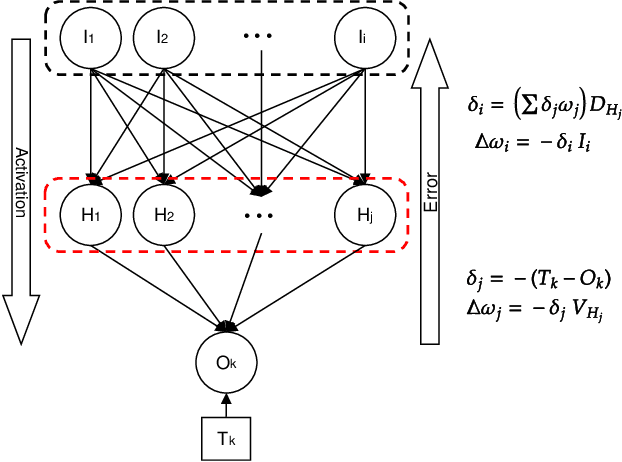

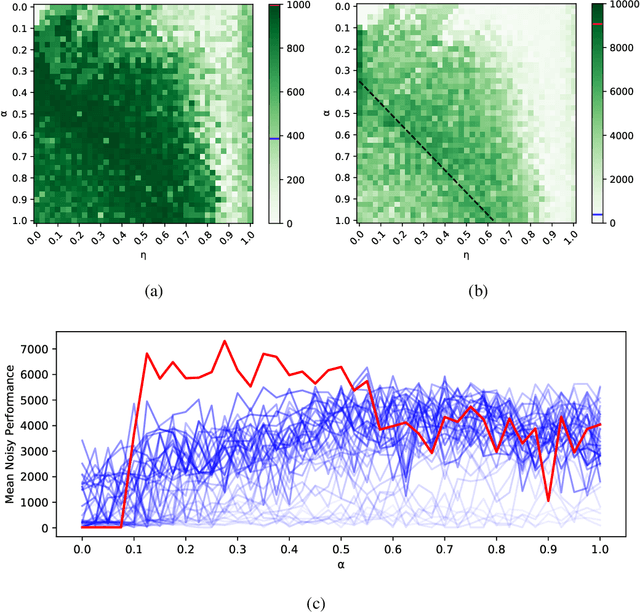

The Multi-Spike Tempotron (MST) is a powerful single spiking neuron model that can solve complex supervised classification tasks. While powerful, it is also internally complex, computationally expensive to evaluate, and not suitable for neuromorphic hardware. Here we aim to understand whether it is possible to simplify the MST model, while retaining its ability to learn and to process information. To this end, we introduce a family of Generalised Neuron Models (GNM) which are a special case of the Spike Response Model and much simpler and cheaper to simulate than the MST. We find that over a wide range of parameters the GNM can learn at least as well as the MST. We identify the temporal autocorrelation of the membrane potential as the single most important ingredient of the GNM which enables it to classify multiple spatio-temporal patterns. We also interpret the GNM as a chemical system, thus conceptually bridging computation by neural networks with molecular information processing. We conclude the paper by proposing alternative training approaches for the GNM including error trace learning and error backpropagation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge