Jack Wells

Compressive Electron Backscatter Diffraction Imaging

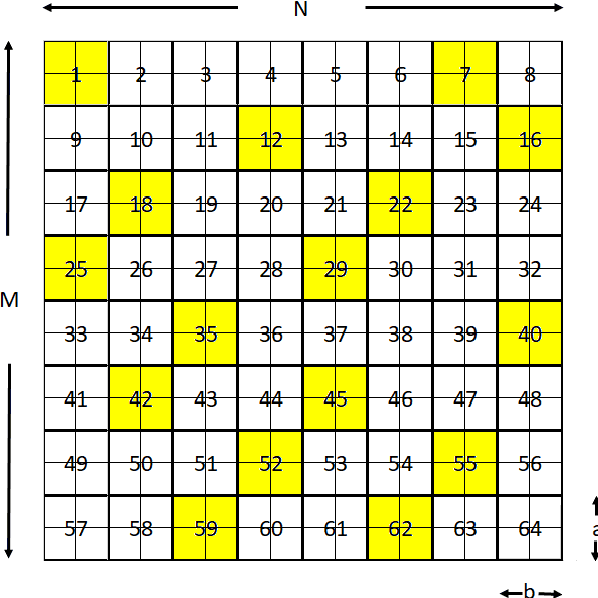

Jul 16, 2024

Abstract: Electron backscatter diffraction (EBSD) has developed over the last few decades into a valuable crystallographic characterisation method for a wide range of sample types. Despite these advances, issues such as the complexity of sample preparation, relatively slow acquisition, and damage in beam-sensitive samples still limit the quantity and quality of interpretable data that can be obtained. To mitigate these issues, here we propose a method based on subsampling probe positions and subsequently reconstructing the incomplete dataset. The missing probe locations (or pixels in the image) are recovered via an inpainting process using a dictionary-learning-based method called beta-process factor analysis (BPFA). To investigate the robustness of both our inpainting method and Hough-based indexing, we simulate subsampled and noisy EBSD datasets from a real, fully sampled Ni-superalloy dataset for different subsampling ratios of probe positions, using both Gaussian and Poisson noise models. We find that zero solution pixel detection (inpainting un-indexed pixels) enables higher-quality reconstructions. Numerical tests confirm high-quality reconstruction of band contrast and inverse pole figure maps from only 10% of the probe positions, with the potential to reduce this to 5% if only inverse pole figure maps are needed. These results show the potential of this method in EBSD, allowing faster analysis and extending the technique to beam-sensitive materials.
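
The data-simulation step described above (subsampling probe positions, adding Gaussian or Poisson noise, and treating un-indexed pixels as missing) can be sketched with NumPy. This is a minimal sketch under stated assumptions, not the authors' pipeline: the uniform random sampling pattern, the noise parameters `sigma` and `peak`, and the convention that zero-solution pixels carry phase ID 0 are our assumptions, and all helper names are hypothetical.

```python
import numpy as np


def random_probe_mask(shape, ratio, rng):
    """Select round(ratio * N) probe positions uniformly at random.

    Assumption: uniform random subsampling; the abstract does not fix the pattern.
    """
    n = shape[0] * shape[1]
    k = int(round(ratio * n))
    flat = np.zeros(n, dtype=bool)
    flat[rng.choice(n, size=k, replace=False)] = True
    return flat.reshape(shape)


def add_noise(image, model, rng, sigma=0.05, peak=100.0):
    """Corrupt a map with Gaussian or Poisson noise (hypothetical parameters)."""
    if model == "gaussian":
        return image + rng.normal(0.0, sigma, size=image.shape)
    if model == "poisson":
        # Treat intensities as expected counts at `peak` counts per unit intensity.
        return rng.poisson(np.clip(image, 0.0, None) * peak) / peak
    raise ValueError(f"unknown noise model: {model!r}")


def inpainting_mask(sampled, phase_id):
    """Keep a pixel as an observation only if it was sampled AND indexed.

    Zero-solution pixels (assumed phase ID 0) are treated as missing, so the
    inpainting step fills them in along with the unsampled positions.
    """
    return sampled & (phase_id != 0)


rng = np.random.default_rng(0)
band_contrast = rng.random((100, 100))        # stand-in for a real map
phase_id = np.ones((100, 100), dtype=int)
phase_id[:10, :] = 0                          # a strip of un-indexed pixels

sampled = random_probe_mask(band_contrast.shape, 0.10, rng)
noisy = add_noise(band_contrast, "poisson", rng)
observed = inpainting_mask(sampled, phase_id)
print(sampled.sum(), observed.sum())
```

The `observed` mask, rather than `sampled`, is what an inpainting method would be told to trust; everything outside it is reconstructed.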

SenseAI: Real-Time Inpainting for Electron Microscopy

Nov 25, 2023

Abstract: Despite their proven success and broad applicability to Electron Microscopy (EM) data, joint dictionary-learning and sparse-coding based inpainting algorithms have so far remained impractical for real-time use with an electron microscope. For many EM applications, the reconstruction time for a single frame is orders of magnitude longer than the data acquisition time, making exclusively subsampled acquisition impossible. This limitation led to the development of SenseAI, a C++/CUDA library capable of highly efficient dictionary-based inpainting. SenseAI provides N-dimensional dictionary learning, live reconstructions, dictionary transfer and visualization, and real-time plotting of statistics, parameters, and image quality metrics.
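
The core idea of dictionary-based inpainting is to fit each patch as a sparse combination of dictionary atoms using only its observed pixels, then read the missing pixels off the reconstruction. It can be sketched in a few lines of NumPy. This is not SenseAI's API and not BPFA: as a simpler stand-in, we assume a fixed, already-learned dictionary `D` and use greedy orthogonal matching pursuit (OMP); the function name `inpaint_patch` and the sparsity level `k` are hypothetical.

```python
import numpy as np


def inpaint_patch(D, y, mask, k):
    """Inpaint one vectorised patch with a fixed dictionary via masked OMP.

    D    : (p, m) dictionary, one atom per column
    y    : (p,) patch; values where mask is False are ignored
    mask : (p,) bool, True where the pixel was actually sampled
    k    : number of atoms to select (sparsity level)
    """
    Dm, ym = D[mask], y[mask]            # restrict the model to observed pixels
    residual = ym.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(k):
        scores = np.abs(Dm.T @ residual)
        if support:
            scores[support] = -np.inf    # never reselect an atom
        support.append(int(np.argmax(scores)))
        coef, *_ = np.linalg.lstsq(Dm[:, support], ym, rcond=None)
        residual = ym - Dm[:, support] @ coef
    a = np.zeros(D.shape[1])
    a[support] = coef
    return D @ a                         # full patch, including unsampled pixels


# Toy example: two atoms, pixel 1 of the patch was not sampled.
D = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]) / np.sqrt(2.0)
truth = 3.0 * D[:, 0]
mask = np.array([True, False, True, True])
observed = np.where(mask, truth, 0.0)
recovered = inpaint_patch(D, observed, mask, k=1)
print(recovered)
```

Because the sampled pixel 0 pins down the coefficient of the first atom, the unsampled pixel 1 is recovered from the atom's shape. Learning the dictionary from the subsampled data itself, as joint dictionary-learning methods do, is the expensive part that this sketch skips.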

Subsampling Methods for Fast Electron Backscattered Diffraction Analysis

Jul 17, 2023

Abstract: Despite advancements in electron backscatter diffraction (EBSD) detector speeds, the acquisition rate of 4-dimensional (4D) EBSD data, i.e., a collection of 2-dimensional (2D) diffraction maps for every position of a convergent electron probe on the sample, is limited by the capacity of the detector. Such 4D data enables computation of, e.g., band contrast and Inverse Pole Figure (IPF) maps, which are used for material characterisation. In this work we propose a fast EBSD acquisition method based on subsampling 2D probe positions and inpainting. We investigate reconstruction of both band contrast and IPF maps using an unsupervised Bayesian dictionary learning approach, namely beta process factor analysis. Numerical simulations achieve high-quality reconstructed images from 10% subsampled data.
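
The 4D data layout described above maps naturally onto a NumPy array indexed as (scan row, scan column, detector row, detector column). A minimal sketch, assuming toy array sizes and a uniformly random 10% probe subset, shows that subsampling probe positions means only the selected 2D patterns are read out; the helper names are hypothetical.

```python
import numpy as np


def acquire_subsampled(data4d, ratio, rng):
    """Simulate reading out only a subset of probe positions from 4D data.

    data4d : (scan_y, scan_x, det_y, det_x), one 2D pattern per probe position
    Returns the probe mask and the acquired patterns, (n_sampled, det_y, det_x).
    """
    scan_shape = data4d.shape[:2]
    n = scan_shape[0] * scan_shape[1]
    chosen = rng.choice(n, size=int(round(ratio * n)), replace=False)
    mask = np.zeros(n, dtype=bool)
    mask[chosen] = True
    mask = mask.reshape(scan_shape)
    return mask, data4d[mask]            # boolean index over the two scan axes


def scatter_back(mask, patterns, det_shape):
    """Put acquired patterns back on the scan grid; unsampled positions are NaN."""
    full = np.full(mask.shape + tuple(det_shape), np.nan, dtype=patterns.dtype)
    full[mask] = patterns
    return full


rng = np.random.default_rng(1)
data4d = rng.random((32, 32, 16, 16), dtype=np.float32)   # toy 4D EBSD stack
mask, patterns = acquire_subsampled(data4d, 0.10, rng)
full = scatter_back(mask, patterns, data4d.shape[2:])
print(patterns.shape, patterns.nbytes / data4d.nbytes)
```

The NaN entries in `full` mark the probe positions an inpainting step would have to recover; the readout volume shrinks in proportion to the sampling ratio.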

In silico Ptychography of Lithium-ion Cathode Materials from Subsampled 4-D STEM Data

Jul 12, 2023

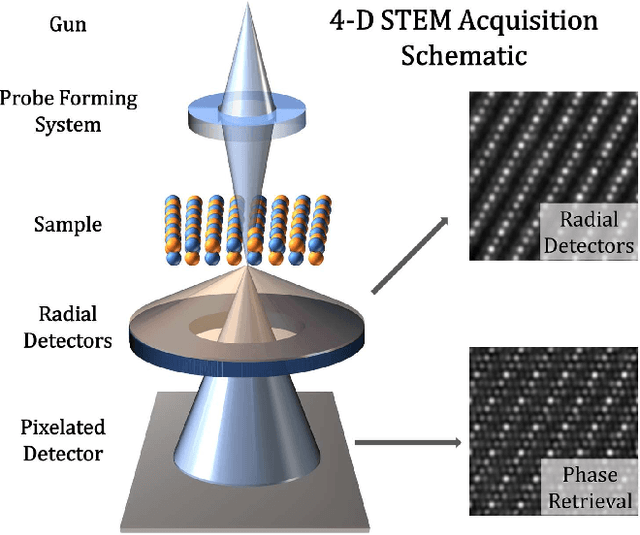

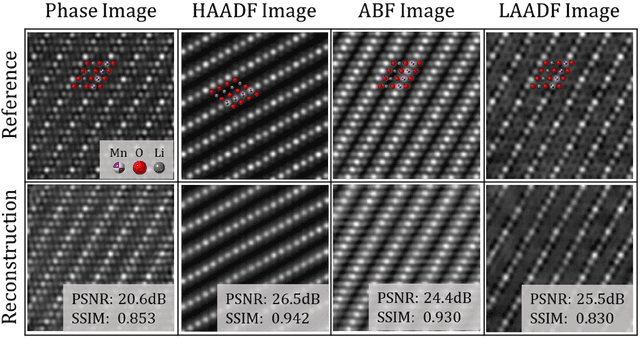

Abstract: High-quality scanning transmission electron microscopy (STEM) data acquisition and analysis have become increasingly important due to the commercial demand for investigating the properties of complex materials such as battery cathodes; however, multidimensional techniques (such as 4-D STEM), which can improve resolution and sample information, are ultimately limited by the beam-damage properties of the materials or the signal-to-noise ratio of the result. Subsampling offers a solution to this problem by retaining high signal while distributing the dose across the sample so that damage can be reduced. For these reasons, we propose a subsampling method for 4-D STEM that takes advantage of the redundancy within such data to recover results functionally identical to the ground truth. We apply these ideas to a simulated 4-D STEM dataset of a LiMnO2 sample and obtain high-quality reconstructions of phase images using 12.5% subsampling.

A Targeted Sampling Strategy for Compressive Cryo Focused Ion Beam Scanning Electron Microscopy

Nov 07, 2022

Abstract: Cryo Focused Ion-Beam Scanning Electron Microscopy (cryo FIB-SEM) enables three-dimensional, nanoscale imaging of biological specimens via a slice-and-view mechanism. FIB-SEM experiments are, however, limited by a slow acquisition process (typically several hours), and the high electron doses imposed on the beam-sensitive specimen can cause damage. In this work, we present a compressive sensing variant of cryo FIB-SEM capable of reducing the operational electron dose and increasing speed. We propose two Targeted Sampling (TS) strategies that leverage the reconstructed image of the previous sample layer as a prior for designing the next subsampling mask. Our image recovery is based on a blind Bayesian dictionary learning approach, namely Beta Process Factor Analysis (BPFA). This method is experimentally viable due to our ultra-fast GPU-based implementation of BPFA. Simulations on artificial compressive FIB-SEM measurements validate the proposed methods: the operational electron dose can be reduced by up to 20 times. These methods have large implications for the cryo FIB-SEM community, for whom imaging beam-sensitive biological materials without beam damage is crucial.
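
The abstract does not say how the previous layer's reconstruction shapes the next mask. Purely as a hypothetical illustration of the general idea, and not either of the two TS strategies proposed in the paper, one could spend more of a fixed probe budget where the previous slice has strong edges, while a uniform floor keeps some coverage everywhere. The function name `targeted_mask`, the gradient-magnitude saliency and the `floor` parameter are all our assumptions.

```python
import numpy as np


def targeted_mask(prev_recon, ratio, rng, floor=0.1):
    """Hypothetical targeted mask: sample edges of the previous slice more densely.

    prev_recon : 2D reconstruction of the previous slice, used as a prior
    ratio      : fraction of probe positions to sample in the next slice
    floor      : uniform weight added so flat regions are still sampled
    """
    gy, gx = np.gradient(prev_recon.astype(float))
    saliency = np.hypot(gx, gy)
    saliency = saliency / (saliency.max() + 1e-12)   # normalise to [0, 1]
    weights = saliency + floor
    p = (weights / weights.sum()).ravel()
    n = prev_recon.size
    k = int(round(ratio * n))
    idx = rng.choice(n, size=k, replace=False, p=p)  # weighted, no repeats
    mask = np.zeros(n, dtype=bool)
    mask[idx] = True
    return mask.reshape(prev_recon.shape)


rng = np.random.default_rng(3)
prev_layer = np.zeros((64, 64))
prev_layer[:, 32:] = 1.0          # toy previous slice with one vertical edge
mask = targeted_mask(prev_layer, 0.05, rng)
print(mask.sum(), mask[:, 31:33].mean(), mask.mean())
```

The total number of probe positions, and hence the dose for the slice, stays fixed at `ratio` of the grid; only its spatial distribution changes.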

SIM-STEM Lab: Incorporating Compressed Sensing Theory for Fast STEM Simulation

Jul 22, 2022

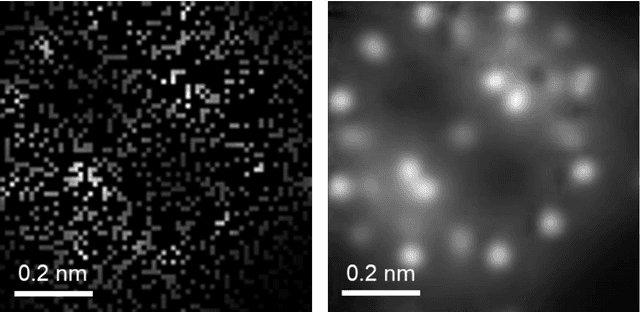

Abstract: Recently it has been shown that precise dose control and an increase in the overall acquisition speed of atomic-resolution scanning transmission electron microscope (STEM) images can be achieved by experimentally acquiring only a small fraction of the pixels in the image and then reconstructing the full image using an inpainting algorithm. In this paper, we apply the same inpainting approach (a form of compressed sensing) to simulated, sub-sampled atomic-resolution STEM images. We find that it is possible to significantly sub-sample the area that is simulated, the number of g-vectors contributing to the image, and the number of frozen phonon configurations contributing to the final image while still producing an acceptable fit to a fully sampled simulation. Here we discuss the parameters that we use and how the resulting simulations can be quantitatively compared to the full simulations. As with any compressed sensing methodology, care must be taken to ensure that isolated events are not excluded from the process, but the observed increase in simulation speed provides significant opportunities, to be developed in future work, for real-time simulation, image classification and analytics performed as a supplement to experiments on a microscope.

Compressive Scanning Transmission Electron Microscopy

Dec 22, 2021

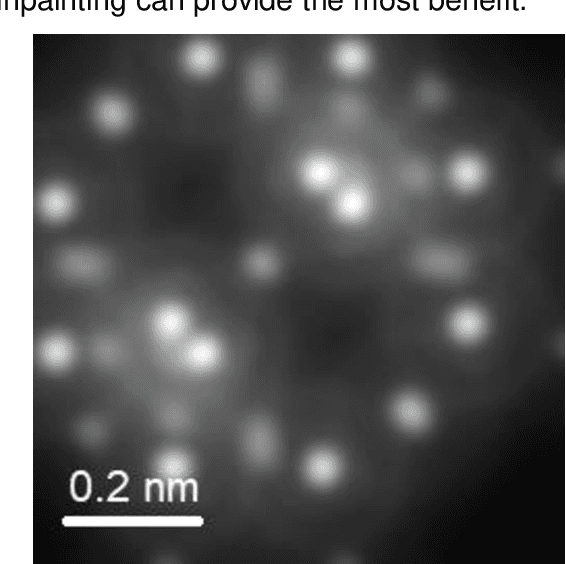

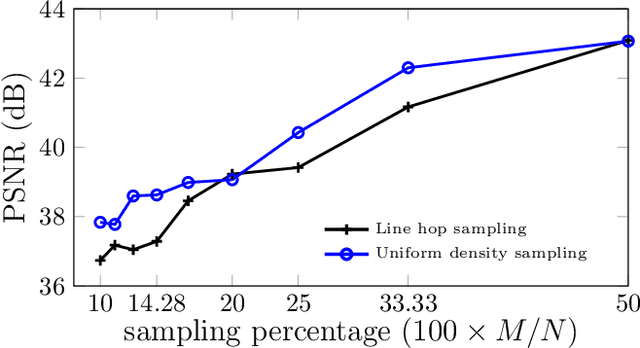

Abstract: Scanning Transmission Electron Microscopy (STEM) offers high-resolution images that are used to quantify the nanoscale atomic structure and composition of materials and biological specimens. In many cases, however, the resolution is limited by electron beam damage, since in traditional STEM a focused electron beam scans every location of the sample in a raster fashion. In this paper, we propose a scanning method based on the theory of Compressive Sensing (CS) that subsamples the electron probe locations using a line hop sampling scheme, significantly reducing electron beam damage. We experimentally validate the feasibility of the proposed method by acquiring real CS-STEM data and recovering images using a Bayesian dictionary learning approach. We support the proposed method by applying a series of masks to fully sampled STEM data to simulate the expected behaviour of real CS-STEM. Finally, we perform the real-data experimental series under a constrained dose budget, ensuring that the total electron count remains constant for each image, to limit the impact of electron dose upon the results.
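
A line-hop mask and a constant dose budget can both be sketched with NumPy. The sketch assumes one possible reading of line hop sampling: the probe advances one column at a time along the fast-scan direction and, at each step, lands on a randomly chosen row within a band of `band` rows. The exact scheme in the paper may differ, and the names `line_hop_mask`, `band` and `dose_per_position` are hypothetical. Keeping the total electron count fixed means the dose at each visited position scales inversely with the sampling fraction.

```python
import numpy as np


def line_hop_mask(rows, cols, band, rng):
    """Hypothetical line-hop pattern: one sample per column in each band of rows.

    Within each horizontal band of `band` rows, the probe steps across every
    column and hops to a random row of the band at each step, so the sampling
    fraction is 1 / band when `rows` is divisible by `band`.
    """
    mask = np.zeros((rows, cols), dtype=bool)
    columns = np.arange(cols)
    for top in range(0, rows, band):
        height = min(band, rows - top)
        hops = rng.integers(0, height, size=cols)   # row offset within the band
        mask[top + hops, columns] = True
    return mask


def dose_per_position(total_dose, mask):
    """Split a fixed total dose equally over the sampled probe positions."""
    return total_dose / np.count_nonzero(mask)


rng = np.random.default_rng(2)
mask = line_hop_mask(64, 64, band=4, rng=rng)
full_dose = 64 * 64 * 1.0     # e.g. 1 unit per position when fully sampled
print(mask.mean(), dose_per_position(full_dose, mask))   # 0.25 4.0
```

At 25% sampling under a constant budget, each visited position receives four times the per-position dose of the fully sampled scan, which is what makes a fair comparison between sampling ratios possible.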

Enabling real-time multi-messenger astrophysics discoveries with deep learning

Nov 26, 2019

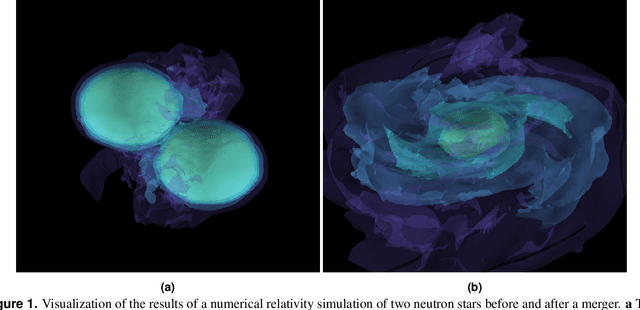

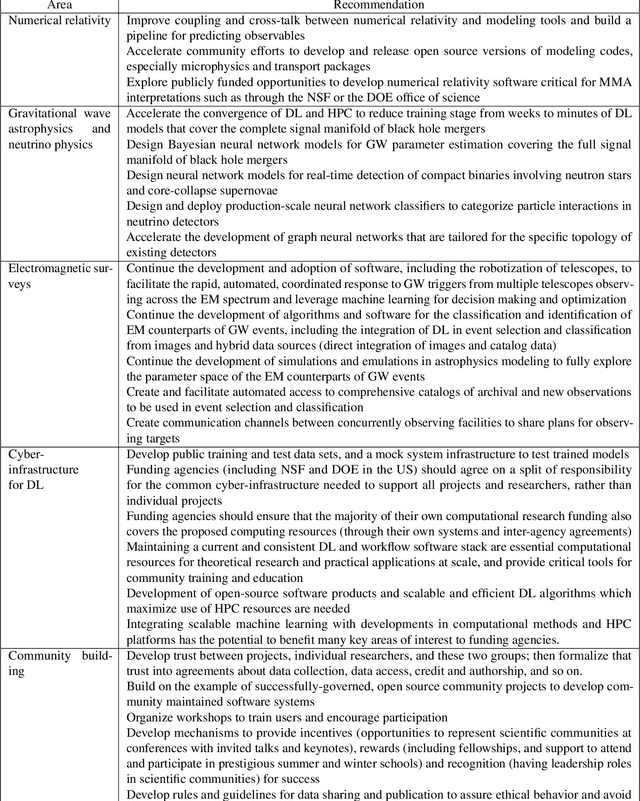

Abstract: Multi-messenger astrophysics is a fast-growing, interdisciplinary field that combines data from many different instruments that probe the Universe using different cosmic messengers: electromagnetic waves, cosmic rays, gravitational waves and neutrinos. These data vary in volume and in processing speed. In this Expert Recommendation, we review the key challenges of real-time observations of gravitational wave sources and their electromagnetic and astroparticle counterparts, and make a number of recommendations to maximize their potential for scientific discovery. These recommendations address the design of scalable and computationally efficient machine learning algorithms; the cyber-infrastructure to numerically simulate astrophysical sources, and to process and interpret multi-messenger astrophysics data; the management of gravitational wave detections to trigger real-time alerts for electromagnetic and astroparticle follow-ups; a vision to harness future developments of machine learning and cyber-infrastructure resources to cope with the big-data requirements; and the need to build a community of experts to realize the goals of multi-messenger astrophysics.

* Invited Expert Recommendation for Nature Reviews Physics. The artwork produced by E. A. Huerta and Shawn Rosofsky for this article was used by Carl Conway to design the cover of the October 2019 issue of Nature Reviews Physics.

Deep Learning for Multi-Messenger Astrophysics: A Gateway for Discovery in the Big Data Era

Feb 01, 2019

Abstract: This report provides an overview of recent work that harnesses the Big Data Revolution and Large Scale Computing to address grand computational challenges in Multi-Messenger Astrophysics, with a particular emphasis on real-time discovery campaigns. Acknowledging the transdisciplinary nature of Multi-Messenger Astrophysics, this document has been prepared by members of the physics, astronomy, computer science, data science, software and cyberinfrastructure communities who attended the NSF-, DOE- and NVIDIA-funded "Deep Learning for Multi-Messenger Astrophysics: Real-time Discovery at Scale" workshop, hosted at the National Center for Supercomputing Applications, October 17-19, 2018. Highlights of this report include unanimous agreement that it is critical to accelerate the development and deployment of novel signal-processing algorithms that use the synergy between artificial intelligence (AI) and high performance computing to maximize the potential for scientific discovery with Multi-Messenger Astrophysics. We discuss key aspects to realize this endeavor, namely (i) the design and exploitation of scalable and computationally efficient AI algorithms for Multi-Messenger Astrophysics; (ii) cyberinfrastructure requirements to numerically simulate astrophysical sources, and to process and interpret Multi-Messenger Astrophysics data; (iii) management of gravitational wave detections and triggers to enable electromagnetic and astro-particle follow-ups; (iv) a vision to harness future developments of machine and deep learning and cyberinfrastructure resources to cope with the scale of discovery in the Big Data Era; and (v) the need to build a community that brings domain experts together with data scientists on an equal footing to maximize and accelerate discovery in the nascent field of Multi-Messenger Astrophysics.
