Jack Henderson

Smart Visual Beacons with Asynchronous Optical Communications using Event Cameras

Aug 02, 2022

Abstract: Event cameras are bio-inspired dynamic vision sensors that respond to changes in image intensity with high temporal resolution, high dynamic range and low latency. These characteristics make them well suited to combining visual target tracking with a broadcast visual communication channel in smart visual beacons, with applications in distributed robotics. Visual beacons can be constructed by high-frequency modulation of Light Emitting Diodes (LEDs), such as vehicle headlights, Internet of Things (IoT) LEDs and smart building lights, that are already present in many real-world scenarios. The high temporal resolution of event cameras allows them to capture visual signals at far higher data rates than classical frame-based cameras. In this paper, we propose a novel smart visual beacon architecture with both LED modulation and event camera demodulation algorithms. We quantitatively evaluate the relationship between LED transmission rate, communication distance and message transmission accuracy for the smart visual beacon communication system that we prototyped. The proposed method achieves data rates of up to 4 kbps indoors, and lossless transmission at 500 bps over a distance of 100 meters in full sunlight, demonstrating the potential of the technology in outdoor environments.
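
To make the modulation/demodulation idea concrete, here is a minimal sketch that assumes a Manchester-coded on/off LED signal; the abstract does not state which modulation scheme the paper uses, so the coding, the bit period and all function names (`manchester_levels`, `simulate_events`, `decode_events`) are hypothetical. The sketch also assumes an idealised event camera that emits exactly one ON (+1) or OFF (-1) event at each LED transition, and a receiver that already knows the bit timing.

```python
# Hypothetical parameter for illustration: 500 bps -> 2000 microseconds per bit.
BIT_PERIOD_US = 2000


def manchester_levels(bits):
    """LED level for each half-bit (IEEE 802.3 convention: 1 = low->high, 0 = high->low)."""
    levels = []
    for b in bits:
        levels.extend([0, 1] if b else [1, 0])
    return levels


def simulate_events(levels, half_period_us, initial_level=0):
    """Idealised event camera: one (timestamp_us, polarity) event per LED transition."""
    events = []
    prev = initial_level
    for i, level in enumerate(levels):
        if level != prev:
            events.append((i * half_period_us, 1 if level > prev else -1))
        prev = level
    return events


def decode_events(events, n_bits, bit_period_us, tolerance_us=None):
    """Recover bits from mid-bit transitions; transitions near bit boundaries are ignored."""
    half = bit_period_us // 2
    if tolerance_us is None:
        tolerance_us = bit_period_us // 4
    bits = [None] * n_bits
    for t, polarity in events:
        k, phase = divmod(t, bit_period_us)
        # Only the transition in the middle of a bit carries information.
        if abs(phase - half) <= tolerance_us and k < n_bits:
            bits[k] = 1 if polarity > 0 else 0
    return bits


message = [1, 0, 1, 1, 0, 0, 1, 0]
events = simulate_events(manchester_levels(message), BIT_PERIOD_US // 2)
print(decode_events(events, len(message), BIT_PERIOD_US))  # [1, 0, 1, 1, 0, 0, 1, 0]
```

Manchester coding is used here only because it guarantees a transition in every bit, which suits a sensor that reports changes rather than absolute intensity. A real receiver would additionally need clock recovery, handling of multiple events per transition, and rejection of background events.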

Inertial Collaborative Localisation for Autonomous Vehicles using a Minimum Energy Filter

Apr 13, 2021

Abstract: Collaborative localisation has been studied extensively in recent years as a way to improve pose estimation of unmanned aerial vehicles in challenging environments. However, little attention has been paid to advancing the underlying filter design beyond standard Extended Kalman Filter-based approaches. In this paper, we detail a discrete-time collaborative localisation filter using the deterministic minimum-energy framework. The filter incorporates measurements from an inertial measurement unit (IMU) and models the effects of sensor bias and gravitational acceleration. We present a simulation based on real-world vehicle trajectories and IMU data that demonstrates how collaborative localisation can improve performance over single-vehicle methods.
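
For readers unfamiliar with the deterministic minimum-energy framework, the following is a generic textbook statement of the discrete-time problem, not the specific filter derived in this paper. Process disturbances w_j and measurement residuals are treated as unknown deterministic signals whose weighted energy is minimised, and the state estimate is the final state of the minimising trajectory. The models f and h, the input u_j and the weights P_0, Q and R are placeholders.

```latex
J_k(x_{0:k}) = \tfrac{1}{2}\,\lVert x_0 - \hat{x}_0 \rVert^2_{P_0^{-1}}
  + \tfrac{1}{2}\sum_{j=0}^{k-1} \lVert w_j \rVert^2_{Q^{-1}}
  + \tfrac{1}{2}\sum_{j=1}^{k} \lVert y_j - h(x_j) \rVert^2_{R^{-1}},
\qquad \text{subject to } x_{j+1} = f(x_j, u_j) + w_j,

\hat{x}_k = x_k^{\star}, \qquad x^{\star}_{0:k} = \arg\min_{x_{0:k}} J_k(x_{0:k}).
```

For linear f and h, the recursive solution of this problem reproduces the Kalman filter equations, with the weights playing the role of covariances. IMU inputs, sensor bias and gravity would enter through the model f.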

A Minimum Energy Filter for Localisation of an Unmanned Aerial Vehicle

Sep 10, 2020

Abstract: Accurate localisation of unmanned aerial vehicles is vital for the next generation of automation tasks. This paper proposes a minimum energy filter for velocity-aided pose estimation on the extended special Euclidean group. The approach exploits the Lie-group symmetry of the problem to combine Inertial Measurement Unit (IMU) sensor output with landmark measurements into a robust, high-performance state estimate. We propose an asynchronous discrete-time implementation that fuses high-bandwidth IMU measurements with the low-bandwidth, discrete-time landmark measurements typical of real-world scenarios. The filter's performance is demonstrated in simulation.
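
The state in this setting is an element of the extended special Euclidean group SE_2(3), which packs attitude R, velocity v and position p into a single 5x5 matrix. The sketch below shows only that embedding and a standard first-order IMU propagation step with bias-corrected measurements and gravity added in the world frame. It is not the paper's minimum-energy update: the landmark correction, which would be applied whenever a (lower-rate) landmark measurement arrives, is omitted. The z-up gravity convention and all function names are assumptions, and plain Python lists are used to keep the example dependency-free.

```python
import math

GRAVITY = [0.0, 0.0, -9.81]  # assumed world-frame gravity (z axis pointing up)


def skew(w):
    """3x3 skew-symmetric matrix such that skew(w) @ x == cross(w, x)."""
    return [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(A, x):
    return [sum(A[i][k] * x[k] for k in range(3)) for i in range(3)]


def so3_exp(phi):
    """Rodrigues' formula: rotation matrix for the rotation vector phi."""
    theta = math.sqrt(sum(c * c for c in phi))
    K = skew(phi)
    K2 = mat_mul(K, K)
    if theta < 1e-9:
        a, b = 1.0, 0.5  # small-angle limits of sin(t)/t and (1 - cos(t))/t^2
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / theta ** 2
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j] for j in range(3)]
            for i in range(3)]


def extended_pose(R, v, p):
    """Embed (R, v, p) as the 5x5 matrix [[R, v, p], [0, 1, 0], [0, 0, 1]] in SE_2(3)."""
    X = [row[:] + [v[i], p[i]] for i, row in enumerate(R)]
    X.append([0.0, 0.0, 0.0, 1.0, 0.0])
    X.append([0.0, 0.0, 0.0, 0.0, 1.0])
    return X


def propagate(R, v, p, gyro, accel, b_g, b_a, dt):
    """One first-order IMU step using bias-corrected angular rate and specific force."""
    omega = [gyro[i] - b_g[i] for i in range(3)]
    f_body = [accel[i] - b_a[i] for i in range(3)]
    a_world = [c + g for c, g in zip(mat_vec(R, f_body), GRAVITY)]
    p_next = [p[i] + v[i] * dt + 0.5 * a_world[i] * dt * dt for i in range(3)]
    v_next = [v[i] + a_world[i] * dt for i in range(3)]
    R_next = mat_mul(R, so3_exp([w * dt for w in omega]))
    return R_next, v_next, p_next
```

As a sanity check, a level, stationary IMU reads a specific force of [0, 0, 9.81] and zero angular rate, so repeated calls to `propagate` with zero biases leave v and p at zero.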

A Minimum Energy Filter for Distributed Multirobot Localisation

May 15, 2020

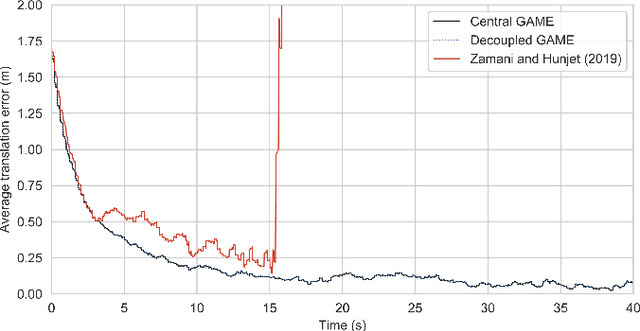

Abstract: We present a new approach to the cooperative localisation problem by applying the theory of minimum energy filtering. We consider the problem of estimating the poses of a group of mobile robots in an environment where robots can perceive fixed landmarks and neighbouring robots, as well as share information with each other over a communication channel. Whereas the vast majority of the existing literature applies some variant of the Kalman filter, we derive a set of filter equations for the global state estimate based on the principle of minimum energy filtering. We show how the filter equations can be decoupled and the calculations distributed among the robots in the network without requiring a central processing node. Finally, we demonstrate the filter's performance in simulation.
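
Perceiving a neighbouring robot couples the states of two robots in a single measurement, which is what makes distributing the filter non-trivial. As an illustration only, the sketch below assumes a planar setting in which robot i, with pose (x, y, theta), observes the position of robot j expressed in its own body frame, i.e. R(theta_i)^T (p_j - p_i), optionally converted to range and bearing. The abstract does not specify the measurement model, and the function names are hypothetical; the same functions apply to fixed landmarks by passing a landmark position as p_j.

```python
import math


def relative_position(pose_i, p_j):
    """Position of point p_j in robot i's body frame: R(theta_i)^T (p_j - p_i)."""
    x_i, y_i, theta_i = pose_i
    dx, dy = p_j[0] - x_i, p_j[1] - y_i
    c, s = math.cos(theta_i), math.sin(theta_i)
    return (c * dx + s * dy, -s * dx + c * dy)


def range_bearing(pose_i, p_j):
    """Range and bearing (radians, counter-clockwise from robot i's heading) to p_j."""
    bx, by = relative_position(pose_i, p_j)
    return math.hypot(bx, by), math.atan2(by, bx)


# Robot i at (1, 1) facing +y; neighbour j directly ahead at (1, 3).
print(range_bearing((1.0, 1.0, math.pi / 2), (1.0, 3.0)))
```

In a filter, the residual between an actual measurement and this prediction depends on both pose_i and p_j, so each relative observation ties together the estimates held by two different robots.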
