J. Taery Kim

Understanding Expectations for a Robotic Guide Dog for Visually Impaired People

Jan 08, 2025

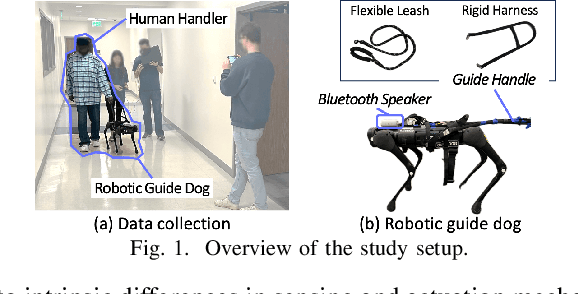

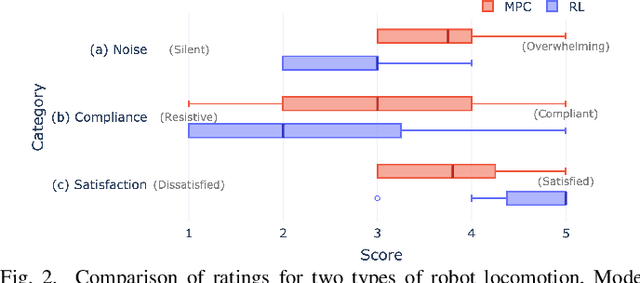

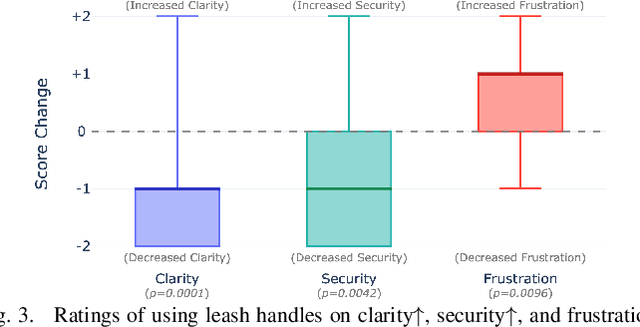

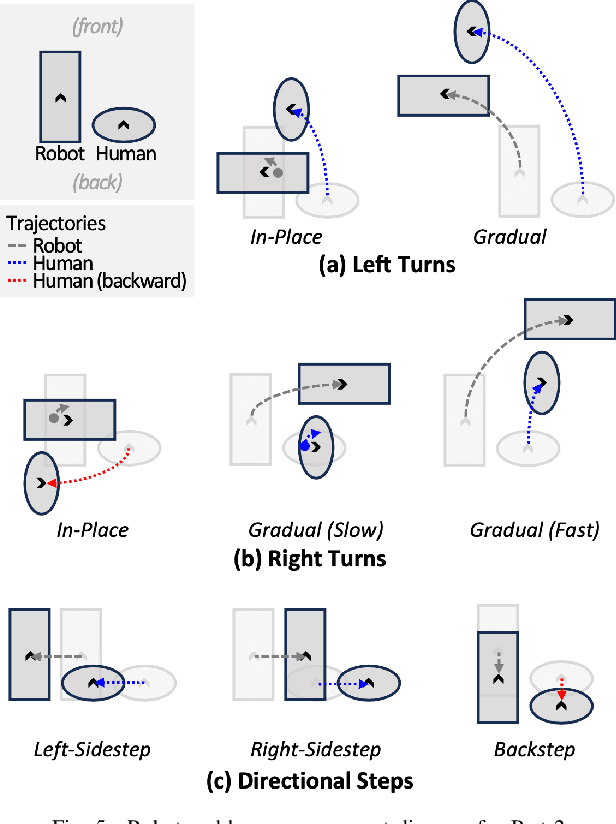

Abstract: Robotic guide dogs hold significant potential to enhance the autonomy and mobility of blind or visually impaired (BVI) individuals by offering universal assistance over unstructured terrains at affordable costs. However, the design of robotic guide dogs remains underexplored, particularly in systematic aspects such as gait controllers, navigation behaviors, interaction methods, and verbal explanations. Our study addresses this gap by conducting user studies with 18 BVI participants, comprising 15 cane users and three guide dog users. Participants interacted with a quadrupedal robot and provided both quantitative and qualitative feedback. Our study revealed several design implications, such as a preference for a learning-based controller and a rigid handle, gradual turns with asymmetric speeds, semantic communication methods, and explainability. The study also highlighted the importance of customization to support users with diverse backgrounds and preferences, along with practical concerns such as battery life, maintenance, and weather issues. These findings offer valuable insights and design implications for future research and development of robotic guide dogs.

Transforming a Quadruped into a Guide Robot for the Visually Impaired: Formalizing Wayfinding, Interaction Modeling, and Safety Mechanism

Jun 24, 2023

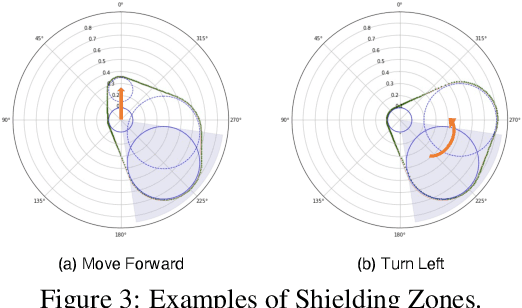

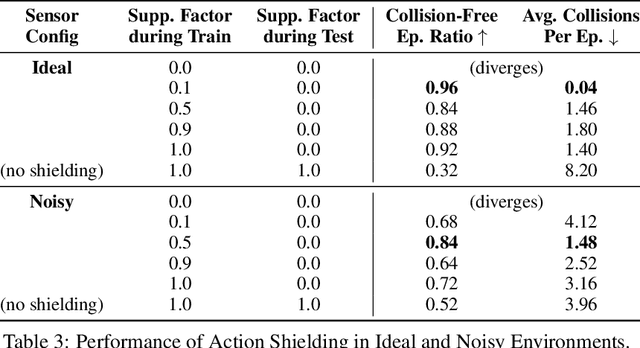

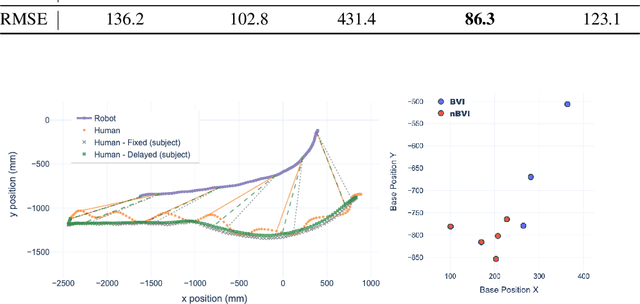

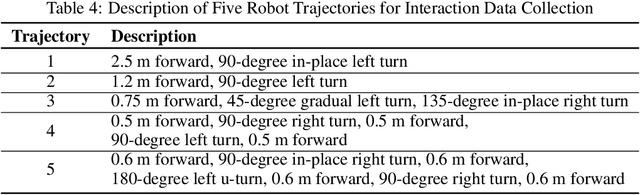

Abstract: This paper explores the principles for transforming a quadrupedal robot into a guide robot for individuals with visual impairments. A guide robot has great potential to address the limited availability of guide animals, which are accessible to only two to three percent of potential blind or visually impaired (BVI) users. To build a successful guide robot, our paper explores three key topics: (1) formalizing the navigation mechanism of a guide dog and a human, (2) developing a data-driven model of their interaction, and (3) improving user safety. First, we formalize the wayfinding task of the human-guide robot team using Markov Decision Processes based on the literature and interviews. Then we collect real human-robot interaction data from three visually impaired and six sighted people and develop an interaction model called the "Delayed Harness" to effectively simulate the navigation behaviors of the team. Additionally, we introduce an action shielding mechanism to enhance user safety by predicting and filtering out dangerous actions. In simulation, the interaction model and the safety mechanism greatly reduce prediction errors and the number of collisions, respectively. We also demonstrate the integrated system on a quadrupedal robot with a rigid harness, guiding users over trajectories of more than 100 m.
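
As a rough illustration of the action shielding idea mentioned in the abstract (predict the outcome of each candidate action, then filter out the dangerous ones), here is a minimal Python sketch. It is not the paper's implementation: the unicycle motion model, the circular obstacles, the safety margin, and the names `predict_position`, `is_safe`, and `shield` are all assumptions made for this example.

```python
import math

def predict_position(state, action, dt=0.5):
    """Predict the next pose with a simple unicycle model.

    Assumed stand-in for whatever predictor the real system uses.
    state = (x, y, heading), action = (speed, turn_rate).
    """
    x, y, heading = state
    speed, turn_rate = action
    new_heading = heading + turn_rate * dt
    return (x + speed * math.cos(new_heading) * dt,
            y + speed * math.sin(new_heading) * dt,
            new_heading)

def is_safe(state, obstacles, margin=0.5):
    """A pose is safe if it stays outside every obstacle circle plus a margin."""
    x, y, _ = state
    return all(math.hypot(x - ox, y - oy) > radius + margin
               for ox, oy, radius in obstacles)

def shield(state, candidate_actions, obstacles):
    """Keep only actions whose predicted outcome is safe.

    Falls back to stopping (zero speed, zero turn) if nothing is safe.
    """
    safe = [a for a in candidate_actions
            if is_safe(predict_position(state, a), obstacles)]
    return safe if safe else [(0.0, 0.0)]

# Hypothetical scene: one obstacle 1 m straight ahead of the robot.
state = (0.0, 0.0, 0.0)
obstacles = [(1.0, 0.0, 0.3)]
allowed = shield(state, [(2.0, 0.0), (1.0, 3.0)], obstacles)
```

In this toy scene, driving straight ahead lands on the obstacle and is filtered out, while the turning action is kept.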

Learning Robot Structure and Motion Embeddings using Graph Neural Networks

Sep 15, 2021

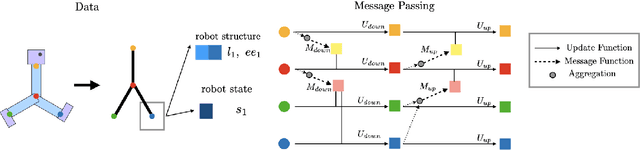

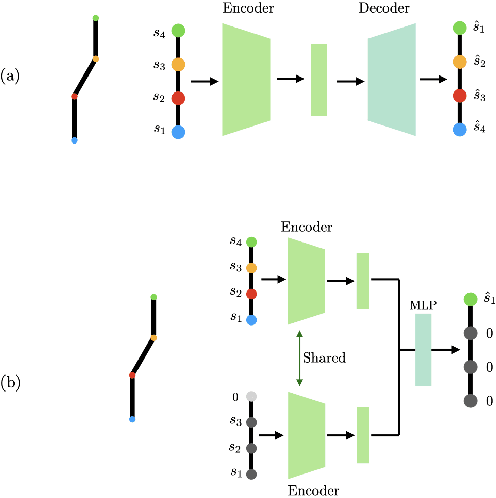

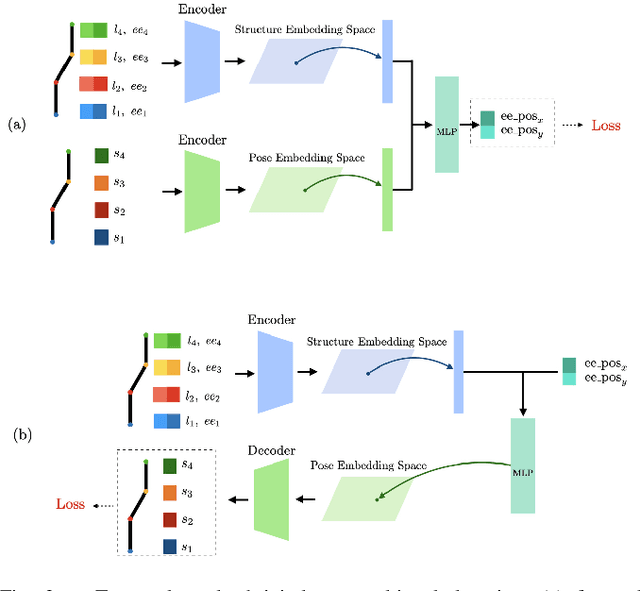

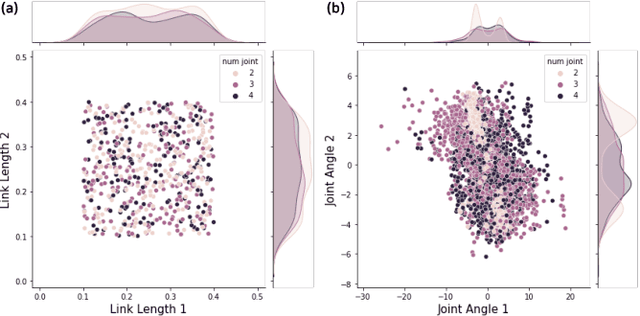

Abstract: We propose a learning framework to find representations of a robot's kinematic structure and motion embedding spaces using graph neural networks (GNNs). Finding a compact, low-dimensional embedding space for complex phenomena is key to understanding their behavior and may lead to better learning performance, as has been observed in other domains such as images and language. However, although numerous robotics applications deal with various types of data, the embedding of the generated data has received relatively little attention from roboticists. To this end, our work aims to learn embeddings for two types of robotic data: the robot's design structure, such as links, joints, and their relationships, and the motion data, such as kinematic joint positions. Our method exploits the tree structure of the robot to train appropriate embeddings for the given robot data. To avoid overfitting, we formulate multi-task learning to find a general representation of the embedding spaces. We evaluate the proposed learning method on a robot with a simple linear structure and visualize the learned embeddings using t-SNE. We also study a few design choices of the learning framework, such as network architectures and message passing schemes.
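
To make the phrase "message passing over the robot's tree structure" concrete, the following Python sketch runs one round of neighbor averaging on a kinematic tree. It only illustrates the general idea and is not the paper's architecture: there are no learned weights, and the parent-list encoding, the mean aggregation, and the names `neighbors` and `message_pass` are assumptions chosen for this example.

```python
def neighbors(parents):
    """Build an undirected adjacency map from a parent list.

    parents[i] is the index of link i's parent, or None for the root.
    """
    adj = {i: [] for i in range(len(parents))}
    for child, parent in enumerate(parents):
        if parent is not None:
            adj[child].append(parent)
            adj[parent].append(child)
    return adj

def message_pass(features, parents):
    """One round of message passing with mean aggregation.

    Each node's new feature is the average of its own feature vector
    and those of its parent and children. A real GNN layer would apply
    learned transformations instead of a plain average.
    """
    adj = neighbors(parents)
    updated = []
    for node, feat in enumerate(features):
        group = [feat] + [features[n] for n in adj[node]]
        updated.append([sum(vec[d] for vec in group) / len(group)
                        for d in range(len(feat))])
    return updated

# Hypothetical three-link chain (a simple linear structure),
# with one scalar feature per link, e.g. a joint position.
parents = [None, 0, 1]
features = [[0.0], [3.0], [6.0]]
mixed = message_pass(features, parents)
```

After one round, each link's feature blends information from its immediate neighbors in the chain; stacking several rounds lets information travel along the whole tree.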
