J. Marius Zoellner

Constrained Meta Agnostic Reinforcement Learning

Jun 20, 2024Abstract:Meta-Reinforcement Learning (Meta-RL) aims to acquire meta-knowledge for quick adaptation to diverse tasks. However, applying these policies in real-world environments presents a significant challenge in balancing rapid adaptability with adherence to environmental constraints. Our novel approach, Constraint Model Agnostic Meta Learning (C-MAML), merges meta learning with constrained optimization to address this challenge. C-MAML enables rapid and efficient task adaptation by incorporating task-specific constraints directly into its meta-algorithm framework during the training phase. This fusion results in safer initial parameters for learning new tasks. We demonstrate the effectiveness of C-MAML in simulated locomotion with wheeled robot tasks of varying complexity, highlighting its practicality and robustness in dynamic environments.

A Survey on Intermediate Fusion Methods for Collaborative Perception Categorized by Real World Challenges

Apr 28, 2024Abstract:This survey analyzes intermediate fusion methods in collaborative perception for autonomous driving, categorized by real-world challenges. We examine various methods, detailing their features and the evaluation metrics they employ. The focus is on addressing challenges like transmission efficiency, localization errors, communication disruptions, and heterogeneity. Moreover, we explore strategies to counter adversarial attacks and defenses, as well as approaches to adapt to domain shifts. The objective is to present an overview of how intermediate fusion methods effectively meet these diverse challenges, highlighting their role in advancing the field of collaborative perception in autonomous driving.

Collaborative Perception Datasets in Autonomous Driving: A Survey

Apr 22, 2024Abstract:This survey offers a comprehensive examination of collaborative perception datasets in the context of Vehicle-to-Infrastructure (V2I), Vehicle-to-Vehicle (V2V), and Vehicle-to-Everything (V2X). It highlights the latest developments in large-scale benchmarks that accelerate advancements in perception tasks for autonomous vehicles. The paper systematically analyzes a variety of datasets, comparing them based on aspects such as diversity, sensor setup, quality, public availability, and their applicability to downstream tasks. It also highlights the key challenges such as domain shift, sensor setup limitations, and gaps in dataset diversity and availability. The importance of addressing privacy and security concerns in the development of datasets is emphasized, regarding data sharing and dataset creation. The conclusion underscores the necessity for comprehensive, globally accessible datasets and collaborative efforts from both technological and research communities to overcome these challenges and fully harness the potential of autonomous driving.

A Review of Reward Functions for Reinforcement Learning in the context of Autonomous Driving

Apr 12, 2024Abstract:Reinforcement learning has emerged as an important approach for autonomous driving. A reward function is used in reinforcement learning to establish the learned skill objectives and guide the agent toward the optimal policy. Since autonomous driving is a complex domain with partly conflicting objectives with varying degrees of priority, developing a suitable reward function represents a fundamental challenge. This paper aims to highlight the gap in such function design by assessing different proposed formulations in the literature and dividing individual objectives into Safety, Comfort, Progress, and Traffic Rules compliance categories. Additionally, the limitations of the reviewed reward functions are discussed, such as objectives aggregation and indifference to driving context. Furthermore, the reward categories are frequently inadequately formulated and lack standardization. This paper concludes by proposing future research that potentially addresses the observed shortcomings in rewards, including a reward validation framework and structured rewards that are context-aware and able to resolve conflicts.

Plausibility Verification For 3D Object Detectors Using Energy-Based Optimization

Nov 02, 2022

Abstract:Environmental perception obtained via object detectors have no predictable safety layer encoded into their model schema, which creates the question of trustworthiness about the system's prediction. As can be seen from recent adversarial attacks, most of the current object detection networks are vulnerable to input tampering, which in the real world could compromise the safety of autonomous vehicles. The problem would be amplified even more when uncertainty errors could not propagate into the submodules, if these are not a part of the end-to-end system design. To address these concerns, a parallel module which verifies the predictions of the object proposals coming out of Deep Neural Networks are required. This work aims to verify 3D object proposals from MonoRUn model by proposing a plausibility framework that leverages cross sensor streams to reduce false positives. The verification metric being proposed uses prior knowledge in the form of four different energy functions, each utilizing a certain prior to output an energy value leading to a plausibility justification for the hypothesis under consideration. We also employ a novel two-step schema to improve the optimization of the composite energy function representing the energy model.

Radar Artifact Labeling Framework (RALF): Method for Plausible Radar Detections in Datasets

Dec 03, 2020

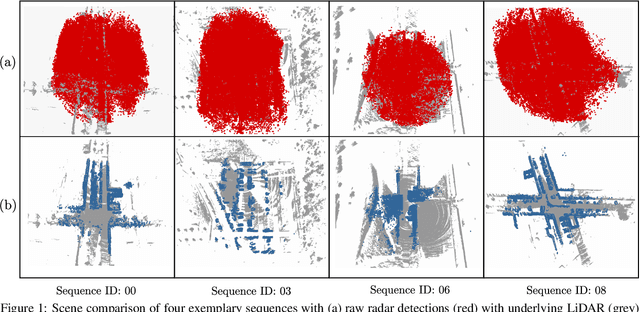

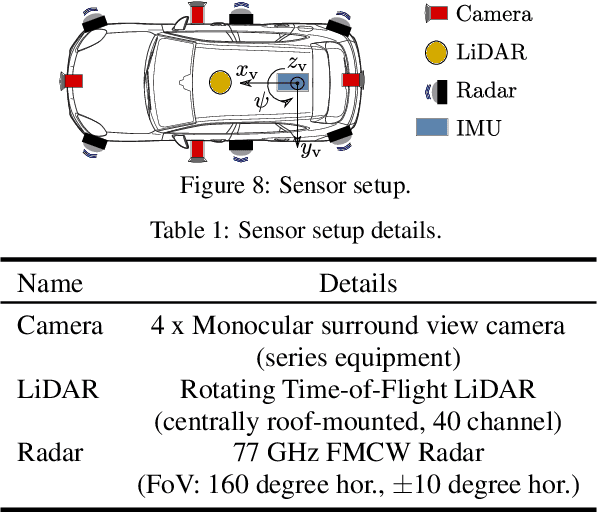

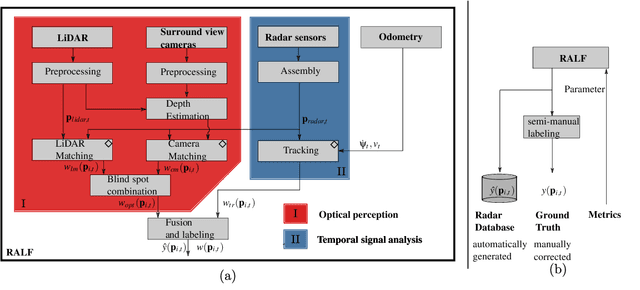

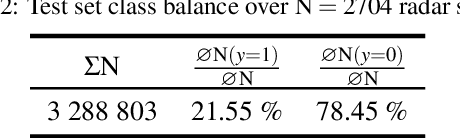

Abstract:Research on localization and perception for Autonomous Driving is mainly focused on camera and LiDAR datasets, rarely on radar data. Manually labeling sparse radar point clouds is challenging. For a dataset generation, we propose the cross sensor Radar Artifact Labeling Framework (RALF). Automatically generated labels for automotive radar data help to cure radar shortcomings like artifacts for the application of artificial intelligence. RALF provides plausibility labels for radar raw detections, distinguishing between artifacts and targets. The optical evaluation backbone consists of a generalized monocular depth image estimation of surround view cameras plus LiDAR scans. Modern car sensor sets of cameras and LiDAR allow to calibrate image-based relative depth information in overlapping sensing areas. K-Nearest Neighbors matching relates the optical perception point cloud with raw radar detections. In parallel, a temporal tracking evaluation part considers the radar detections' transient behavior. Based on the distance between matches, respecting both sensor and model uncertainties, we propose a plausibility rating of every radar detection. We validate the results by evaluating error metrics on semi-manually labeled ground truth dataset of $3.28\cdot10^6$ points. Besides generating plausible radar detections, the framework enables further labeled low-level radar signal datasets for applications of perception and Autonomous Driving learning tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge