Ismail Tutar

Catalog Phrase Grounding (CPG): Grounding of Product Textual Attributes in Product Images for e-commerce Vision-Language Applications

Aug 30, 2023

Abstract: We present Catalog Phrase Grounding (CPG), a model that associates product textual data (title, brands) with the corresponding regions of product images (isolated product region, brand logo region) for e-commerce vision-language applications. We use a state-of-the-art modulated multimodal transformer encoder-decoder architecture that unifies object detection and phrase grounding. We train the model in a self-supervised fashion on 2.3 million image-text pairs synthesized from an e-commerce site. The self-supervision data is annotated with high-confidence pseudo-labels generated by a combination of teacher models: a pre-trained general-domain phrase grounding model (e.g., MDETR) and a specialized logo detection model. This allows CPG, as a student model, to benefit from knowledge transferred from these base models, combining general-domain and specialized knowledge. Beyond immediate catalog phrase grounding tasks, CPG representations can be incorporated as ML features into downstream catalog applications that require deep semantic understanding of products. Our experiments on product-brand matching, a challenging e-commerce application, show that incorporating CPG representations into the existing production ensemble system yields an average 5% recall improvement across all countries globally (with the largest lift of 11% in a single country) at a fixed 95% precision, outperforming other alternatives including a logo detection teacher model and ResNet50.
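The headline metric above is recall at a fixed precision of 95%. As a minimal sketch of how such a number can be computed from scored match candidates (the function name `recall_at_precision` and the toy data are illustrative assumptions, not taken from the paper), one can sweep the decision threshold and keep the best recall among thresholds that meet the precision floor:

```python
def recall_at_precision(scores, labels, min_precision=0.95):
    """Best recall over all score thresholds whose precision >= min_precision.

    scores: model confidence per candidate (higher = more likely a match).
    labels: 1 for a true match, 0 otherwise.
    Hypothetical helper for illustration; not the paper's evaluation code.
    """
    total_pos = sum(labels)
    if total_pos == 0:
        return 0.0
    # Visit candidates from most to least confident.
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    best, tp, fp, i = 0.0, 0, 0, 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Accept every candidate tied at this score before measuring.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        precision = tp / (tp + fp)
        if precision >= min_precision:
            best = max(best, tp / total_pos)
    return best


# Toy data: three true matches, one false candidate ranked third.
toy_scores = [0.9, 0.8, 0.7, 0.6]
toy_labels = [1, 1, 0, 1]
recall_95 = recall_at_precision(toy_scores, toy_labels, min_precision=0.95)
```

On the toy data only the two highest-scoring candidates can be accepted without dropping below 95% precision, so two of the three true matches are recovered.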

Solving Price Per Unit Problem Around the World: Formulating Fact Extraction as Question Answering

Apr 12, 2022

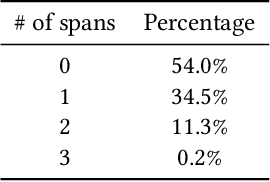

Abstract: Price Per Unit (PPU) is essential information for consumers comparing products on e-commerce websites. Computing PPU requires the total quantity in a product, which sellers do not always provide. To predict total quantity, all relevant quantities given in a product's attributes, such as title, description and image, need to be inferred correctly. We formulate this fact extraction problem as a question-answering (QA) task rather than a named entity recognition (NER) task. In our QA approach, we first predict the unit of measure (UoM) type (e.g., volume, weight or count), which formulates the desired question (e.g., "What is the total volume?"), and then use this question to find all the relevant answers. Our model architecture consists of two subnetworks for the two subtasks: a classifier to predict the UoM type (or the question) and an extractor to extract the relevant quantities. We use a deep character-level CNN architecture for both subtasks, which enables (1) easy expansion to new stores with similar alphabets, (2) multi-span answering due to its span-image architecture and (3) easy deployment by keeping model-inference latency low. Our QA approach outperforms rule-based methods by 34.4% in precision and also outperforms a BERT-based fact extraction approach in all stores globally, with the largest precision lift of 10.6% in the US store.
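The two-stage formulation above ends in a simple arithmetic step once the UoM type and the quantities are known. The sketch below illustrates only that final step. The `Quantity` type, the function names, and the rule of multiplying a per-item amount by pack counts are assumptions made for illustration; the abstract does not specify how extracted quantities are combined, and the classifier and extractor models themselves are not reproduced here.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Quantity:
    value: float
    kind: str  # "volume", "weight", or "count" (assumed label set)


def total_quantity(uom_type, quantities):
    """Combine extracted quantities into a total for the predicted UoM type.

    Assumed rule: pack counts multiply together, and for volume or weight
    a single per-item amount is scaled by the combined pack count.
    """
    counts = [q.value for q in quantities if q.kind == "count"]
    if uom_type == "count":
        if not counts:
            raise ValueError("no count quantity extracted")
        return prod(counts)
    amounts = [q.value for q in quantities if q.kind == uom_type]
    if len(amounts) != 1:
        raise ValueError("expected exactly one per-item amount")
    return amounts[0] * prod(counts)  # prod([]) is 1 when no pack count exists


def price_per_unit(price, total):
    if total <= 0:
        raise ValueError("total quantity must be positive")
    return price / total


# Example title: "Sparkling Water, 12 fl oz, Pack of 6" priced at 8.64.
extracted = [Quantity(12, "volume"), Quantity(6, "count")]
total = total_quantity("volume", extracted)
ppu = price_per_unit(8.64, total)
```

For this example the total volume is 72 fl oz, so the price per fluid ounce is 8.64 divided by 72.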

MLIM: Vision-and-Language Model Pre-training with Masked Language and Image Modeling

Sep 24, 2021

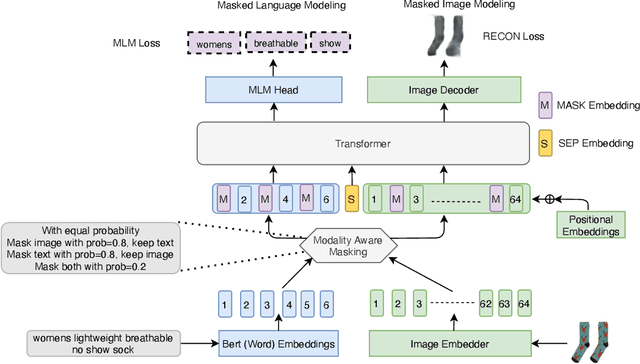

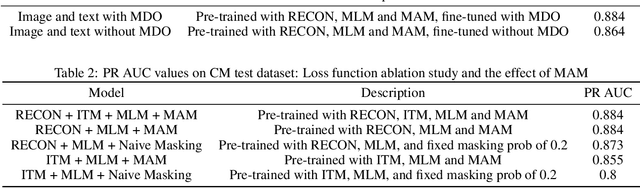

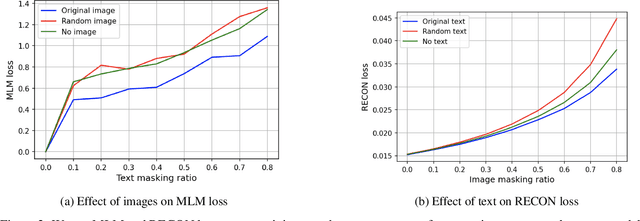

Abstract: Vision-and-Language Pre-training (VLP) improves model performance for downstream tasks that require image and text inputs. Current VLP approaches differ on (i) model architecture (especially image embedders), (ii) loss functions, and (iii) masking policies. Image embedders are either deep models like ResNet or linear projections that feed image pixels directly into the transformer. Typically, in addition to the Masked Language Modeling (MLM) loss, alignment-based objectives are used for cross-modality interaction, and RoI feature regression and classification tasks are used for Masked Image-Region Modeling (MIRM). Both alignment and MIRM objectives mostly lack ground truth. Alignment-based objectives require pairings of image and text and heuristic objective functions. MIRM relies on object detectors. Masking policies either do not take advantage of multi-modality or are strictly coupled with alignments generated by other models. In this paper, we present Masked Language and Image Modeling (MLIM) for VLP. MLIM uses two loss functions: Masked Language Modeling (MLM) loss and image reconstruction (RECON) loss. We propose Modality Aware Masking (MAM) to boost cross-modality interaction and take advantage of MLM and RECON losses that separately capture text and image reconstruction quality. Using MLM + RECON tasks coupled with MAM, we present a simplified VLP methodology and show that it has better downstream task performance on a proprietary e-commerce multi-modal dataset.
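The abstract does not spell out how Modality Aware Masking chooses what to mask. The sketch below shows one possible reading, stated here purely as an assumption: draw a single text masking rate per example and give the image the complementary rate, so that a heavily masked modality has to be reconstructed from the other one. The function name `modality_aware_mask` and its parameters are hypothetical.

```python
import random


def modality_aware_mask(num_text_tokens, num_image_patches,
                        text_rate=None, rng=random):
    """Return boolean masks (True = masked) for text tokens and image patches.

    Assumption, not taken from the paper: the text masking rate is drawn
    uniformly from [0, 1] and the image uses the complementary rate, so the
    two modalities are never both heavily masked in the same example.
    """
    if text_rate is None:
        text_rate = rng.random()
    image_rate = 1.0 - text_rate
    text_mask = [rng.random() < text_rate for _ in range(num_text_tokens)]
    image_mask = [rng.random() < image_rate for _ in range(num_image_patches)]
    return text_mask, image_mask


# Extreme case: all text masked, so the image stays fully visible.
text_mask, image_mask = modality_aware_mask(8, 16, text_rate=1.0)
```

Under this reading, the masked text positions would feed the MLM loss and the masked image patches would feed the RECON loss.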
