Isaac Woungang

Generalization Analysis and Method for Domain Generalization for a Family of Recurrent Neural Networks

Jan 13, 2026Abstract:Deep learning (DL) has driven broad advances across scientific and engineering domains. Despite its success, DL models often exhibit limited interpretability and generalization, which can undermine trust, especially in safety-critical deployments. As a result, there is growing interest in (i) analyzing interpretability and generalization and (ii) developing models that perform robustly under data distributions different from those seen during training (i.e. domain generalization). However, the theoretical analysis of DL remains incomplete. For example, many generalization analyses assume independent samples, which is violated in sequential data with temporal correlations. Motivated by these limitations, this paper proposes a method to analyze interpretability and out-of-domain (OOD) generalization for a family of recurrent neural networks (RNNs). Specifically, the evolution of a trained RNN's states is modeled as an unknown, discrete-time, nonlinear closed-loop feedback system. Using Koopman operator theory, these nonlinear dynamics are approximated with a linear operator, enabling interpretability. Spectral analysis is then used to quantify the worst-case impact of domain shifts on the generalization error. Building on this analysis, a domain generalization method is proposed that reduces the OOD generalization error and improves the robustness to distribution shifts. Finally, the proposed analysis and domain generalization approach are validated on practical temporal pattern-learning tasks.

Meta-World+: An Improved, Standardized, RL Benchmark

May 16, 2025Abstract:Meta-World is widely used for evaluating multi-task and meta-reinforcement learning agents, which are challenged to master diverse skills simultaneously. Since its introduction however, there have been numerous undocumented changes which inhibit a fair comparison of algorithms. This work strives to disambiguate these results from the literature, while also leveraging the past versions of Meta-World to provide insights into multi-task and meta-reinforcement learning benchmark design. Through this process we release a new open-source version of Meta-World (https://github.com/Farama-Foundation/Metaworld/) that has full reproducibility of past results, is more technically ergonomic, and gives users more control over the tasks that are included in a task set.

Multi-Task Reinforcement Learning Enables Parameter Scaling

Mar 07, 2025

Abstract:Multi-task reinforcement learning (MTRL) aims to endow a single agent with the ability to perform well on multiple tasks. Recent works have focused on developing novel sophisticated architectures to improve performance, often resulting in larger models; it is unclear, however, whether the performance gains are a consequence of the architecture design itself or the extra parameters. We argue that gains are mostly due to scale by demonstrating that naively scaling up a simple MTRL baseline to match parameter counts outperforms the more sophisticated architectures, and these gains benefit most from scaling the critic over the actor. Additionally, we explore the training stability advantages that come with task diversity, demonstrating that increasing the number of tasks can help mitigate plasticity loss. Our findings suggest that MTRL's simultaneous training across multiple tasks provides a natural framework for beneficial parameter scaling in reinforcement learning, challenging the need for complex architectural innovations.

Koopman-Based Generalization of Deep Reinforcement Learning With Application to Wireless Communications

Mar 04, 2025

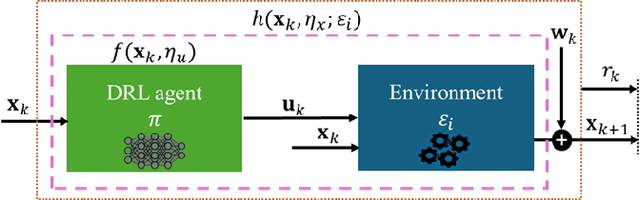

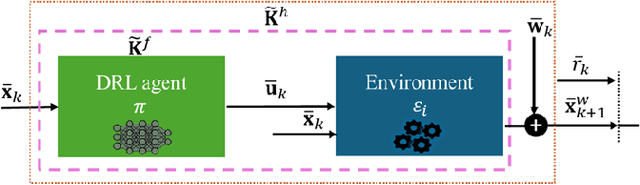

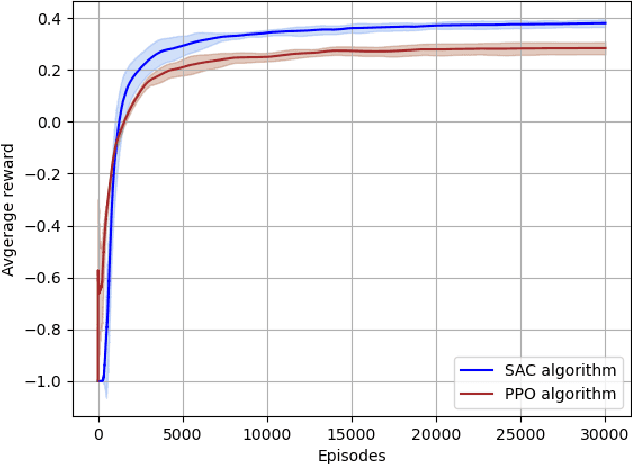

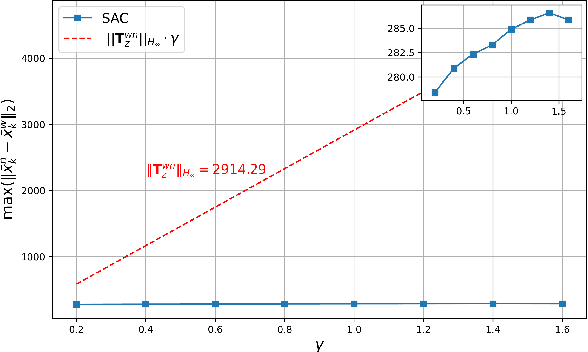

Abstract:Deep Reinforcement Learning (DRL) is a key machine learning technology driving progress across various scientific and engineering fields, including wireless communication. However, its limited interpretability and generalizability remain major challenges. In supervised learning, generalizability is commonly evaluated through the generalization error using information-theoretic methods. In DRL, the training data is sequential and not independent and identically distributed (i.i.d.), rendering traditional information-theoretic methods unsuitable for generalizability analysis. To address this challenge, this paper proposes a novel analytical method for evaluating the generalizability of DRL. Specifically, we first model the evolution of states and actions in trained DRL algorithms as unknown discrete, stochastic, and nonlinear dynamical functions. Then, we employ a data-driven identification method, the Koopman operator, to approximate these functions, and propose two interpretable representations. Based on these interpretable representations, we develop a rigorous mathematical approach to evaluate the generalizability of DRL algorithms. This approach is formulated using the spectral feature analysis of the Koopman operator, leveraging the H_\infty norm. Finally, we apply this generalization analysis to compare the soft actor-critic method, widely recognized as a robust DRL approach, against the proximal policy optimization algorithm for an unmanned aerial vehicle-assisted mmWave wireless communication scenario.

Science-Informed Deep Learning (ScIDL) With Applications to Wireless Communications

Jun 29, 2024

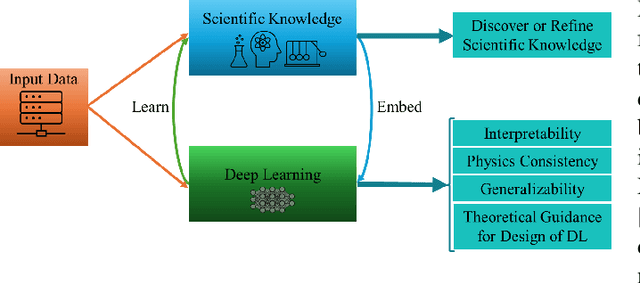

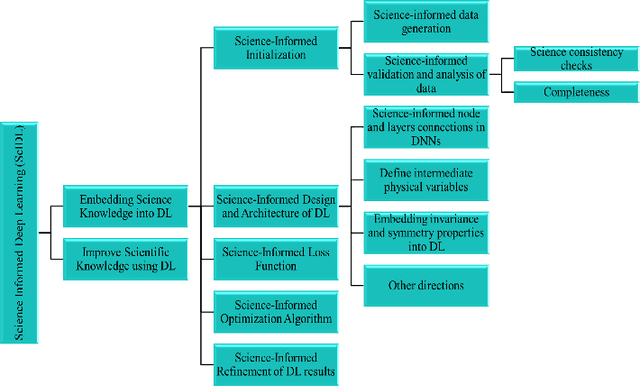

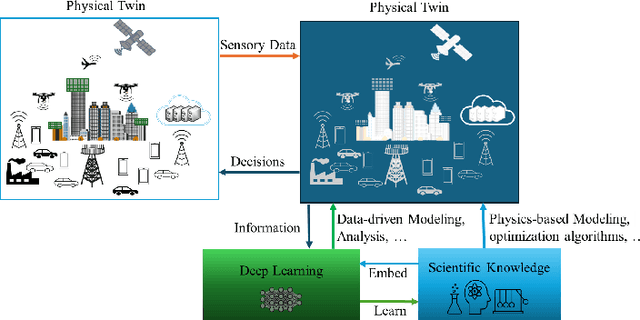

Abstract:Given the extensive and growing capabilities offered by deep learning (DL), more researchers are turning to DL to address complex challenges in next-generation (xG) communications. However, despite its progress, DL also reveals several limitations that are becoming increasingly evident. One significant issue is its lack of interpretability, which is especially critical for safety-sensitive applications. Another significant consideration is that DL may not comply with the constraints set by physics laws or given security standards, which are essential for reliable DL. Additionally, DL models often struggle outside their training data distributions, which is known as poor generalization. Moreover, there is a scarcity of theoretical guidance on designing DL algorithms. These challenges have prompted the emergence of a burgeoning field known as science-informed DL (ScIDL). ScIDL aims to integrate existing scientific knowledge with DL techniques to develop more powerful algorithms. The core objective of this article is to provide a brief tutorial on ScIDL that illustrates its building blocks and distinguishes it from conventional DL. Furthermore, we discuss both recent applications of ScIDL and potential future research directions in the field of wireless communications.

Video-Language Critic: Transferable Reward Functions for Language-Conditioned Robotics

May 30, 2024Abstract:Natural language is often the easiest and most convenient modality for humans to specify tasks for robots. However, learning to ground language to behavior typically requires impractical amounts of diverse, language-annotated demonstrations collected on each target robot. In this work, we aim to separate the problem of what to accomplish from how to accomplish it, as the former can benefit from substantial amounts of external observation-only data, and only the latter depends on a specific robot embodiment. To this end, we propose Video-Language Critic, a reward model that can be trained on readily available cross-embodiment data using contrastive learning and a temporal ranking objective, and use it to score behavior traces from a separate reinforcement learning actor. When trained on Open X-Embodiment data, our reward model enables 2x more sample-efficient policy training on Meta-World tasks than a sparse reward only, despite a significant domain gap. Using in-domain data but in a challenging task generalization setting on Meta-World, we further demonstrate more sample-efficient training than is possible with prior language-conditioned reward models that are either trained with binary classification, use static images, or do not leverage the temporal information present in video data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge