Ionut Florescu

Reinforcement Learning in Agent-Based Market Simulation: Unveiling Realistic Stylized Facts and Behavior

Mar 28, 2024

Abstract:Investors and regulators can greatly benefit from a realistic market simulator that enables them to anticipate the consequences of their decisions in real markets. However, traditional rule-based market simulators often fall short in accurately capturing the dynamic behavior of market participants, particularly in response to external market impact events or changes in the behavior of other participants. In this study, we explore an agent-based simulation framework employing reinforcement learning (RL) agents. We present the implementation details of these RL agents and demonstrate that the simulated market exhibits realistic stylized facts observed in real-world markets. Furthermore, we investigate the behavior of RL agents when confronted with external market impacts, such as a flash crash. Our findings shed light on the effectiveness and adaptability of RL-based agents within the simulation, offering insights into their response to significant market events.

Control in Stochastic Environment with Delays: A Model-based Reinforcement Learning Approach

Feb 01, 2024

Abstract:In this paper we introduce a new reinforcement learning method for control problems in environments with delayed feedback. Specifically, our method employs stochastic planning, whereas previous methods used deterministic planning. This allows us to embed risk preference in the policy optimization problem. We show that this formulation can recover the optimal policy for problems with deterministic transitions. We contrast our policy with two prior methods from the literature. We apply the methodology to simple tasks to understand its features. Then, we compare the performance of the methods in controlling multiple Atari games.

A Sparsity Algorithm with Applications to Corporate Credit Rating

Jul 21, 2021

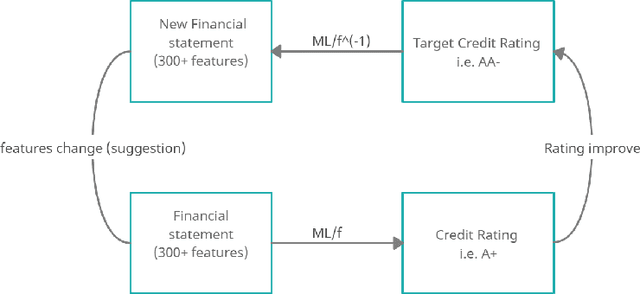

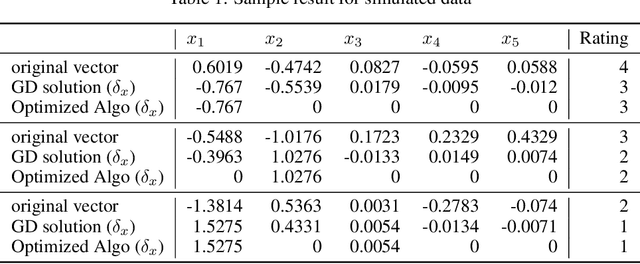

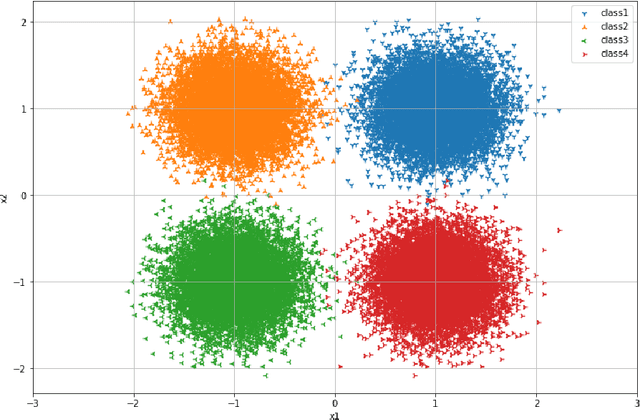

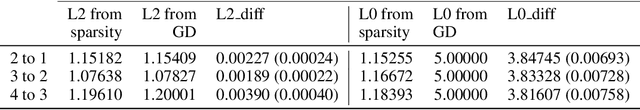

Abstract:In Artificial Intelligence, interpreting the results of a machine learning technique, often termed a black box, is a difficult task. A counterfactual explanation of a particular "black box" attempts to find the smallest change to the input values that modifies the prediction to a particular output, other than the original one. In this work we formulate the problem of finding a counterfactual explanation as an optimization problem. We propose a new "sparsity algorithm" which solves the optimization problem, while also maximizing the sparsity of the counterfactual explanation. We apply the sparsity algorithm to provide a simple suggestion to publicly traded companies in order to improve their credit ratings. We validate the sparsity algorithm with a synthetically generated dataset and we further apply it to quarterly financial statements from companies in the financial, healthcare and IT sectors of the US market. We provide evidence that the counterfactual explanation can capture the nature of the real statement features that changed between the current quarter and the following quarter when ratings improved. The empirical results show that the higher the rating of a company, the greater the "effort" required to further improve its credit rating.
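To make the idea of a sparse counterfactual concrete, here is a minimal sketch that assumes a toy linear classifier (predict positive when `w @ x + b >= 0`). It is not the paper's sparsity algorithm; the function name `sparse_counterfactual`, the `margin` parameter, and the example numbers are all hypothetical. For a linear model, changing a single feature is enough to flip the prediction, so the sketch picks the one feature that needs the smallest absolute change.

```python
import numpy as np

def sparse_counterfactual(x, w, b, margin=1e-3):
    """Change exactly one feature of x so that the linear score w @ x + b
    lands just on the other side of zero (illustrative toy, not the paper's method)."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    score = w @ x + b
    # Aim slightly past the decision boundary, on the opposite side.
    target = margin if score < 0 else -margin
    # Only features with a nonzero weight can move the score.
    usable = np.flatnonzero(w != 0)
    if usable.size == 0:
        raise ValueError("all weights are zero; the prediction cannot be changed")
    # Change needed in each usable feature, taken alone, to reach the target score.
    deltas = (target - score) / w[usable]
    best = np.argmin(np.abs(deltas))  # smallest single-feature change
    x_cf = x.copy()
    x_cf[usable[best]] += deltas[best]
    return x_cf

# Hypothetical input: three features, currently classified negative.
x = np.array([1.0, 2.0, 0.5])
w = np.array([0.5, -1.0, 2.0])
b = -1.0
x_cf = sparse_counterfactual(x, w, b)
print("original score:", w @ x + b)
print("counterfactual:", x_cf, "score:", w @ x_cf + b)
```

A nonlinear model has no closed-form answer like this, which is why the counterfactual search is posed as an optimization problem with sparsity as an explicit objective.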

Application of Deep Neural Networks to assess corporate Credit Rating

Mar 04, 2020

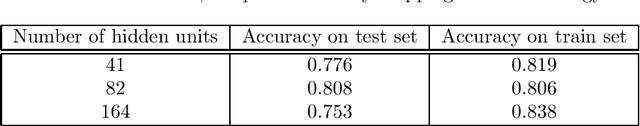

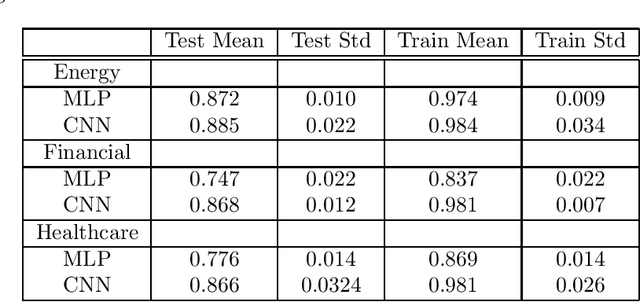

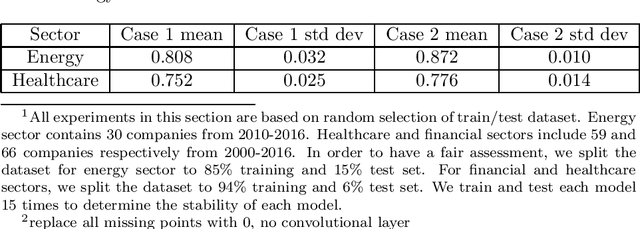

Abstract:Recent literature implements machine learning techniques to assess corporate credit rating based on financial statement reports. In this work, we analyze the performance of four neural network architectures (MLP, CNN, CNN2D, LSTM) in predicting corporate credit rating as issued by Standard and Poor's. We analyze companies from the energy, financial and healthcare sectors in the US. The goal of the analysis is to improve the application of machine learning algorithms to credit assessment. To this end, we focus on three questions. First, do the algorithms perform better when using a selected subset of features, or is it better to allow the algorithms to select features themselves? Second, is the temporal aspect inherent in financial data important for the results obtained by a machine learning algorithm? Third, is there a particular neural network architecture that consistently outperforms others with respect to input features, sectors and holdout set? We create several case studies to answer these questions and analyze the results using ANOVA and a multiple comparison testing procedure.
