Ioanna Mitsioni

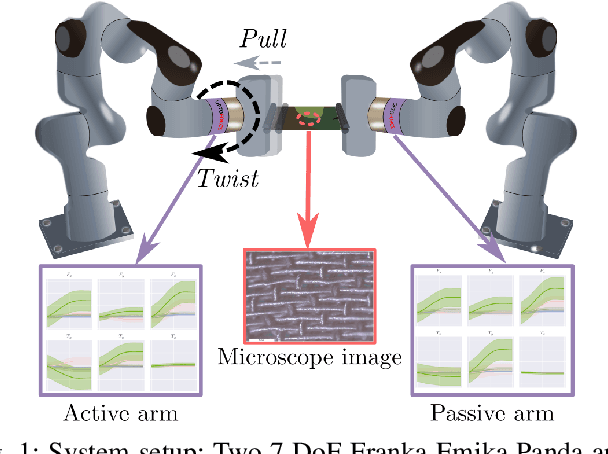

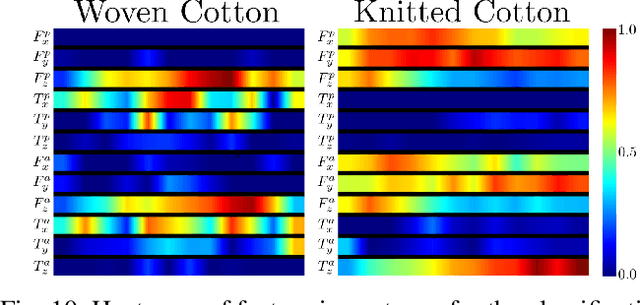

Textile Taxonomy and Classification Using Pulling and Twisting

Mar 17, 2021

Abstract: Identification of textile properties is an important milestone toward advanced robotic manipulation tasks that involve interaction with clothing items, such as assisted dressing, laundry folding, automated sewing, and textile recycling and reuse. Despite the abundance of work on this class of deformable objects, many open problems remain. These relate to the choice and modelling of the sensory feedback, as well as to the control and planning of the interaction and manipulation strategies. Most importantly, there is no structured approach for studying and assessing the different methods that may bridge the gap between the robotics community and the textile production industry. To this end, we outline a textile taxonomy based on the fiber types and production methods commonly used in the textile industry. We devise datasets according to the taxonomy and study how robotic actions, such as pulling and twisting the textile samples, can be used for classification. We also provide important insights from the perspective of visualization and interpretability of the gathered data.
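
As a rough illustration of how a taxonomy with two axes (fiber type and production method) can define classification targets for a dataset, here is a minimal Python sketch. The fiber and production-method categories below are hypothetical placeholders, not the taxonomy from the paper; the code only shows how joint classes can be enumerated and then collapsed onto a single axis.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical taxonomy axes; the paper's actual categories may differ.
FIBER_TYPES = ("cotton", "wool", "polyester")
PRODUCTION_METHODS = ("woven", "knitted")


@dataclass(frozen=True)
class TextileClass:
    fiber: str
    method: str

    @property
    def name(self) -> str:
        return f"{self.fiber}-{self.method}"


def build_label_map(fibers=FIBER_TYPES, methods=PRODUCTION_METHODS):
    """Enumerate every (fiber, method) pair and assign it an integer label."""
    classes = [TextileClass(f, m) for f, m in product(fibers, methods)]
    return {cls: idx for idx, cls in enumerate(classes)}


def labels_for_task(label_map, axis):
    """Collapse the joint labels onto one taxonomy axis ('fiber' or 'method'),
    e.g. to train a classifier that only predicts the production method."""
    values = sorted({getattr(cls, axis) for cls in label_map})
    return {cls: values.index(getattr(cls, axis)) for cls in label_map}


label_map = build_label_map()
method_labels = labels_for_task(label_map, "method")
print(len(label_map))  # prints 6 (3 fibers x 2 methods)
```

Keeping the joint label map as the single source of truth makes it easy to train per-axis classifiers (fiber only, method only) on the same recorded pulling and twisting samples.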

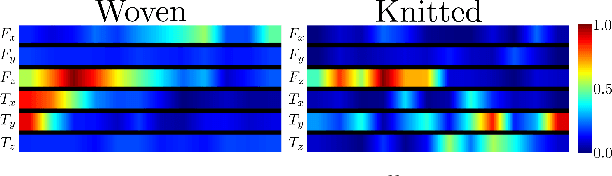

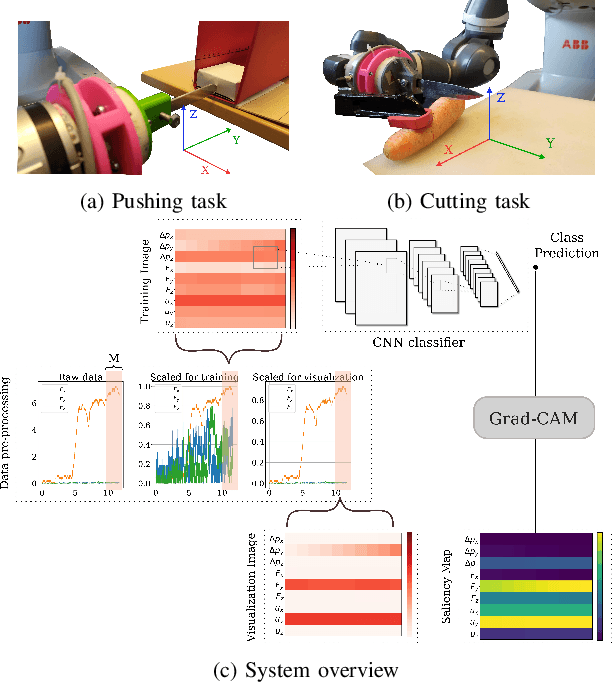

Interpretability in Contact-Rich Manipulation via Kinodynamic Images

Feb 23, 2021

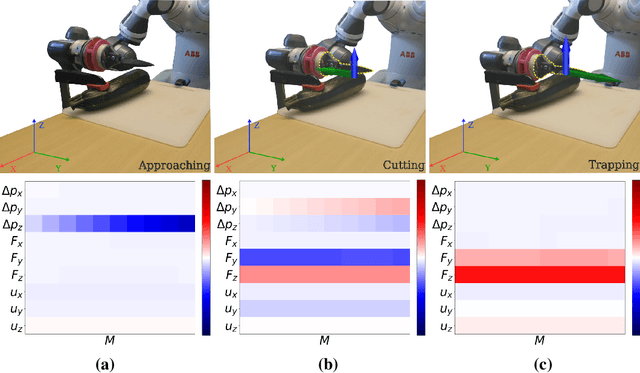

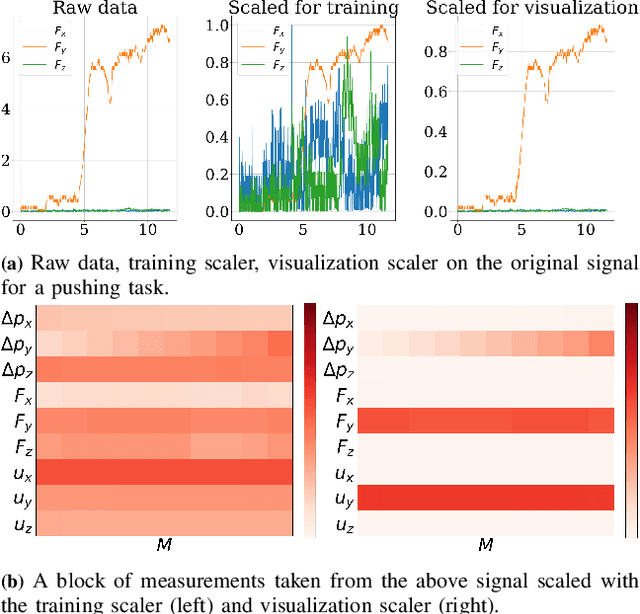

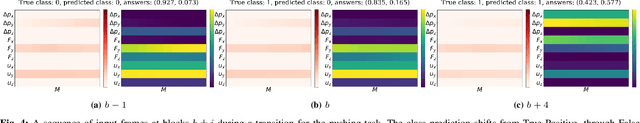

Abstract: Deep Neural Networks (NNs) have been widely used in contact-rich manipulation tasks to model the complicated contact dynamics. However, NN-based models are often difficult to decipher, which can lead to seemingly inexplicable behaviors and unidentifiable failure cases. In this work, we address the interpretability of NN-based models by introducing kinodynamic images. We propose a methodology that creates images from the kinematic and dynamic data of a contact-rich manipulation task. Our formulation visually reflects the task's state by encoding its kinodynamic variations and temporal evolution. By using images as the state representation, we enable the application of interpretability modules that were previously limited to vision-based tasks. We use this representation to train convolution-based networks, and we extract interpretations of the model's decisions with Grad-CAM, a technique that produces visual explanations. Our method is versatile and can be applied to any classification problem that uses synchronous features in manipulation, to visually interpret which parts of the input drive the model's decisions and to distinguish its failure modes. We evaluate this approach on two examples of real-world contact-rich manipulation, pushing and cutting, with known and unknown objects. Finally, we demonstrate that our method enables both detailed visual inspections of sequences in a task and high-level evaluations of a model's behavior and tendencies. Data and code for this work are available at https://github.com/imitsioni/interpretable_manipulation.
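
The sketch below illustrates, under simplifying assumptions, the two ingredients this abstract combines. First, a window of synchronous kinematic and dynamic measurements is turned into a grayscale image with one row per feature and one column per time step, each feature min-max scaled to 0 to 255; this layout is an assumption for illustration and not necessarily the paper's encoding (see the linked repository for that). Second, the standard Grad-CAM map weights each convolutional feature map by the spatially averaged gradient of the class score and applies a ReLU to the weighted sum. In practice the activations and gradients would come from a trained CNN; here they are plain nested lists so the arithmetic is visible.

```python
def kinodynamic_image(window):
    """window: list of time steps, each a list of synchronous feature values
    (e.g. end-effector position, velocity, measured force).
    Returns a (features x time) grid of ints in [0, 255]."""
    n_features = len(window[0])
    image = []
    for j in range(n_features):
        series = [step[j] for step in window]
        lo, hi = min(series), max(series)
        span = hi - lo
        # Constant features map to 0 to avoid division by zero.
        row = [0 if span == 0 else round(255 * (v - lo) / span) for v in series]
        image.append(row)
    return image


def grad_cam(activations, gradients):
    """activations, gradients: K feature maps of shape H x W (nested lists),
    where gradients[k] holds d(class score)/d(activations[k]).
    Returns the H x W Grad-CAM heat map, rescaled so its peak is 1."""
    k_maps = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global-average-pooled gradient for feature map k.
    alphas = [sum(sum(r) for r in gradients[k]) / (h * w) for k in range(k_maps)]
    # ReLU over the alpha-weighted sum of feature maps.
    cam = [[max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(k_maps)))
            for j in range(w)] for i in range(h)]
    peak = max(max(r) for r in cam)
    if peak > 0:
        cam = [[v / peak for v in r] for r in cam]
    return cam
```

Because the image rows are individual features and the columns are time steps, a high Grad-CAM value at a given pixel can be read back directly as "this feature, at this moment, drove the decision", which is what makes the image representation useful for interpretation.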

Modelling and Learning Dynamics for Robotic Food-Cutting

Mar 20, 2020

Abstract: Data-driven approaches for modelling contact-rich tasks address many of the difficulties that analytical models face. In real-world scenarios, the hardware capabilities constrain the available measurements and, consequently, every step of the problem's formulation. In this work, we propose a formulation that encapsulates knowledge from a baseline controller for the contact-rich task of food-cutting. Based on this formulation, we employ deep networks to model the dynamics within a model predictive controller. We design a training process based on curriculum training with learning rate decay for multi-step predictions, which are essential for receding horizon control. Experimental results demonstrate that even with a simple architecture, our model achieves consistently good predictive performance on known and unknown object classes and exhibits a good understanding of the long-term dynamics.
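
A minimal sketch of what a curriculum over the prediction horizon combined with learning-rate decay might look like: training starts with one-step predictions, the horizon grows by one step every few epochs, and the learning rate decays exponentially. The schedule parameters (`base_lr`, `decay`, `epochs_per_stage`, `max_horizon`) are hypothetical choices, not values from the paper. The rollout helper shows how a multi-step loss feeds the model's own predictions back in as inputs, which is the regime a receding-horizon controller operates in.

```python
def curriculum_schedule(epoch, base_lr=1e-3, decay=0.9,
                        epochs_per_stage=5, max_horizon=10):
    """Return (prediction_horizon, learning_rate) for a given epoch.
    The horizon grows by one step every `epochs_per_stage` epochs,
    capped at `max_horizon`; the learning rate decays exponentially."""
    horizon = min(1 + epoch // epochs_per_stage, max_horizon)
    lr = base_lr * decay ** epoch
    return horizon, lr


def multistep_loss(model, state, actions, targets):
    """Roll `model` forward on its own predictions for len(actions) steps and
    return the squared prediction error (summed over state dims), averaged
    over the steps."""
    total = 0.0
    for action, target in zip(actions, targets):
        state = model(state, action)  # the prediction becomes the next input
        total += sum((s - t) ** 2 for s, t in zip(state, target))
    return total / len(actions)
```

Growing the horizon gradually lets the network first learn accurate one-step dynamics before it has to cope with its own compounding errors, while the decaying learning rate keeps the later, harder stages from undoing what was learned earlier.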

Data-Driven Model Predictive Control for Food-Cutting

Mar 09, 2019

Abstract: Modelling contact-rich tasks is challenging and cannot be entirely solved using classical control approaches, due to the difficulty of constructing an analytic description of the contact dynamics. Additionally, in a manipulation task like food-cutting, purely learning-based methods, such as Reinforcement Learning, require either a vast amount of data that is expensive to collect on a real robot, or a highly realistic simulation environment, which is currently not available. This paper presents a data-driven control approach that employs a recurrent neural network to model the dynamics for a Model Predictive Controller. We extend previous work that was limited to torque-controlled robots by incorporating Force/Torque sensor measurements, and we formulate the control problem so that it can be applied to the more common velocity-controlled robots. We evaluate the performance on objects used for training, as well as on unknown objects, by means of the cutting rates achieved, and demonstrate that the method can efficiently treat different cases with only one dynamic model. Finally, we investigate the behavior of the system during force-critical instances of cutting and illustrate its adaptive behavior in difficult cases.
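
To make the receding-horizon idea concrete, here is a minimal random-shooting MPC sketch for a velocity-controlled system: candidate velocity sequences are sampled, rolled out through a dynamics model, scored, and only the first command of the best sequence is applied before replanning. Random shooting is a swapped-in optimizer chosen for brevity (the abstract does not specify the optimizer). `toy_model`, which stands in for the recurrent network, is hypothetical, as are the cost weights, the force limit, and the velocity bounds.

```python
import random


def toy_model(state, velocity, dt=0.1):
    """Hypothetical stand-in for the learned (e.g. recurrent) dynamics model.
    state = (position, force); the force grows as the knife moves down."""
    position, _ = state
    new_position = position + velocity * dt
    return (new_position, 2.0 * max(0.0, -new_position))


def mpc_step(model, state, target, horizon=5, n_samples=200,
             v_max=0.5, force_limit=1.5, rng=random):
    """Sample velocity sequences, simulate them with `model`, and return the
    first velocity of the lowest-cost sequence (receding horizon)."""
    best_cost, best_first = float("inf"), 0.0
    for _ in range(n_samples):
        seq = [rng.uniform(-v_max, v_max) for _ in range(horizon)]
        s, cost = state, 0.0
        for v in seq:
            s = model(s, v)
            cost += (s[0] - target) ** 2  # track the target depth
            cost += 10.0 * max(0.0, s[1] - force_limit) ** 2  # penalize excess force
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first
```

In a closed loop, one would call `mpc_step` at every control tick, send the returned velocity to the robot, and replan from the newly measured state; because the controller outputs velocities rather than torques, it fits the velocity-controlled setting the abstract targets.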