Inkyu An

Topological RANSAC for instance verification and retrieval without fine-tuning

Oct 10, 2023

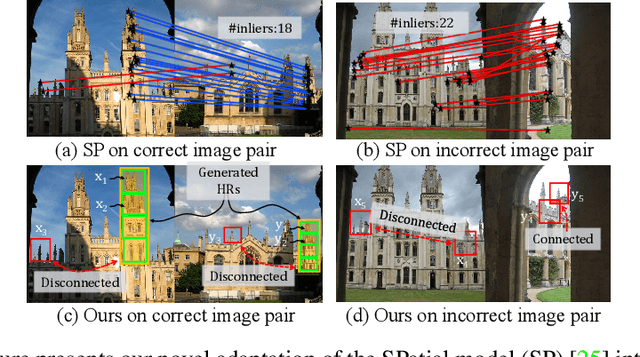

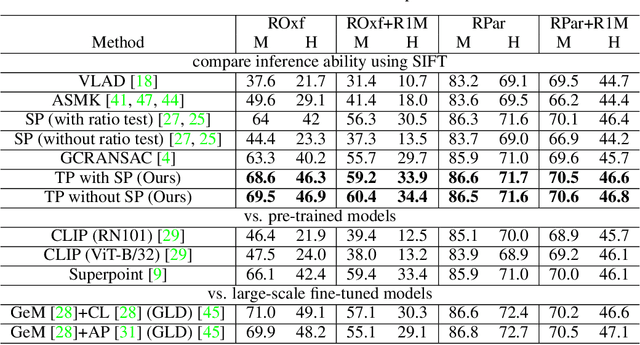

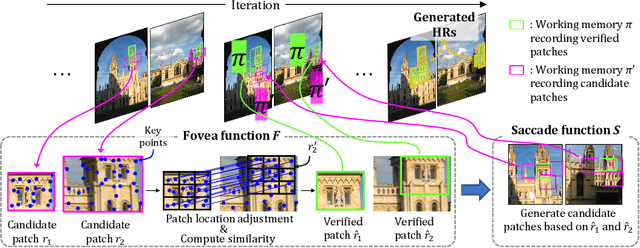

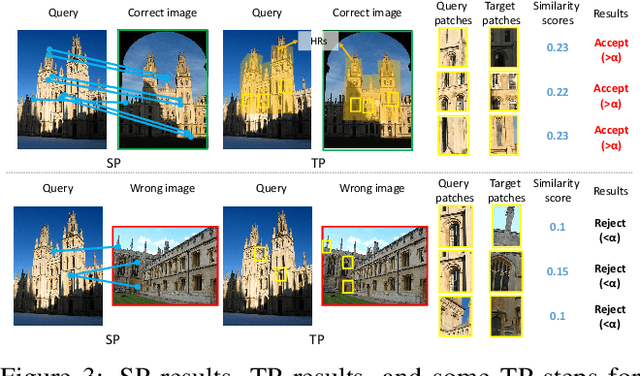

Abstract: This paper presents an innovative approach to enhancing explainable image retrieval, particularly in situations where a fine-tuning set is unavailable. The widely used SPatial verification (SP) method, despite its efficacy, relies on a spatial model and a hypothesis-testing strategy for instance recognition, leading to inherent limitations, including the assumption of planar structures and neglect of topological relations among features. To address these shortcomings, we introduce a pioneering technique that replaces the spatial model with a topological one within the RANSAC process. We propose bio-inspired saccade and fovea functions to verify the topological consistency among features, effectively circumventing the issues associated with SP's spatial model. Our experimental results demonstrate that our method significantly outperforms SP, achieving state-of-the-art performance in non-fine-tuning retrieval. Furthermore, our approach can enhance performance when used in conjunction with fine-tuned features. Importantly, our method retains high explainability and is lightweight, offering a practical and adaptable solution for a variety of real-world applications.

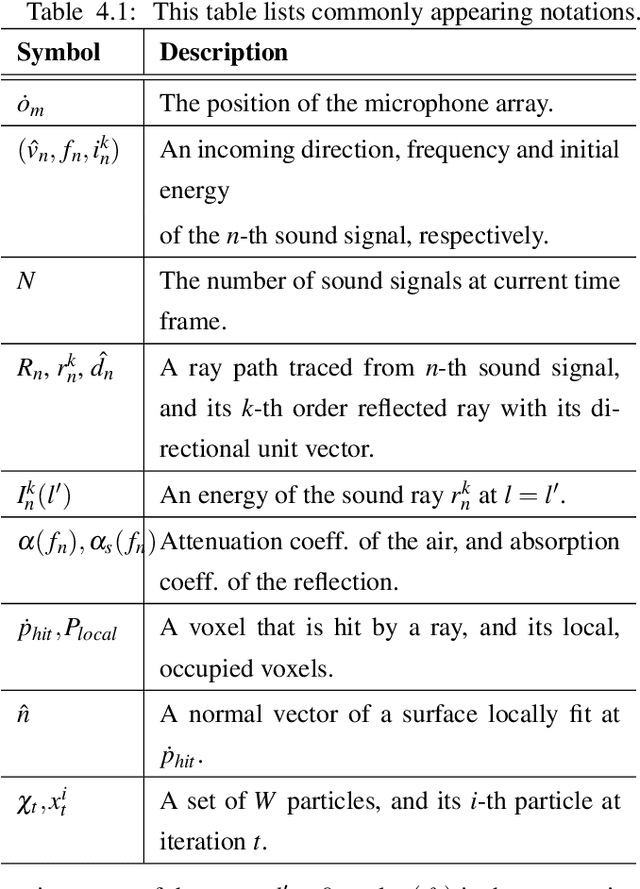

Robust Sound Source Localization considering Similarity of Back-Propagation Signals

Feb 25, 2019

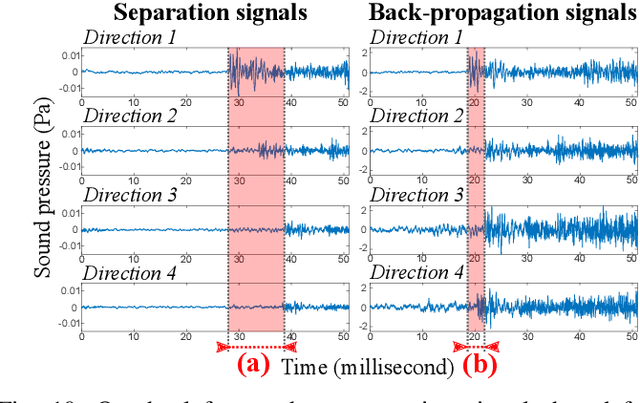

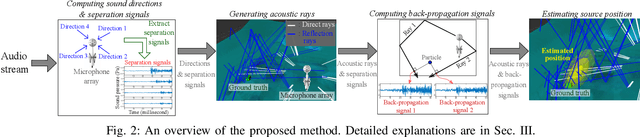

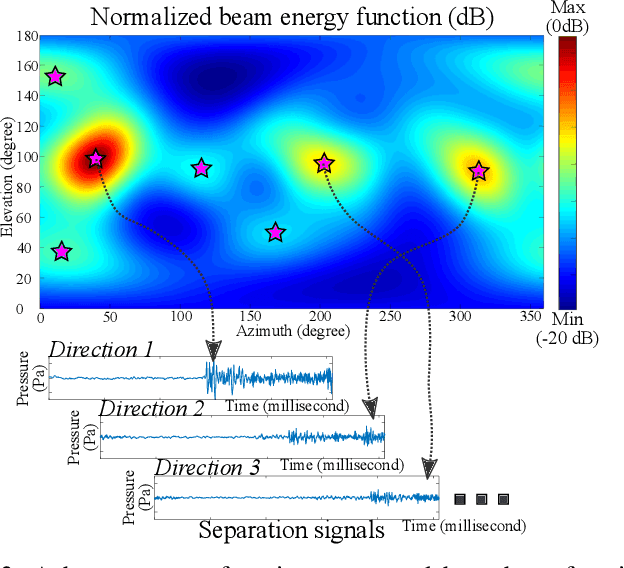

Abstract: We present a novel, robust sound source localization algorithm considering back-propagation signals. Sound propagation paths are estimated by generating direct and reflection acoustic rays based on ray tracing in a backward manner. We then compute the back-propagation signals by designing and using the impulse response of the backward sound propagation based on the acoustic ray paths. For identifying the 3D source position, we suggest a localization method based on the Monte Carlo localization algorithm. Candidates for the source position are determined by identifying the convergence regions of acoustic ray paths. Each candidate is validated by measuring similarities between back-propagation signals, under the assumption that the back-propagation signals of different acoustic ray paths should be similar near the sound source position. Thanks to considering similarities of back-propagation signals, our approach can localize a source position with an average error of 0.51 m in a room of 7 m by 7 m area with 3 m height in tested environments. We also observe 65% to 220% improvement in accuracy over the state-of-the-art method. This improvement is achieved in environments containing a moving source, an obstacle, and noise.
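The abstract does not say which similarity measure is used to compare back-propagation signals. As a minimal sketch of the validation idea only, the snippet below assumes a zero-lag normalized correlation (cosine similarity) between two equally long sampled signals; the function name `signal_similarity` and the choice of measure are illustrative assumptions, not the paper's method.

```python
import math


def signal_similarity(a, b):
    """Zero-lag normalized correlation of two equally long signals.

    Assumption: this measure is chosen for illustration; the abstract
    does not specify how similarity is computed.
    Returns a value in [-1, 1]; 1 means identical shape up to scale.
    """
    if len(a) != len(b):
        raise ValueError("signals must have the same number of samples")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a silent signal carries no shape information
    return dot / (norm_a * norm_b)


# Two hypothetical back-propagated signals for one candidate position.
path_1 = [0.0, 0.5, 1.0, 0.5, 0.0]
path_2 = [0.0, 1.0, 2.0, 1.0, 0.0]  # same shape, different gain
score = signal_similarity(path_1, path_2)
```

Under this assumption, a candidate position whose ray paths yield a score close to 1 would be kept, and a low score would reject it.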

Diffraction-Aware Sound Localization for a Non-Line-of-Sight Source

Sep 20, 2018

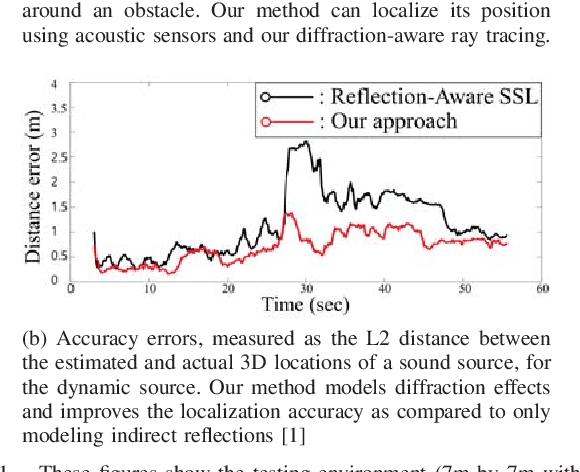

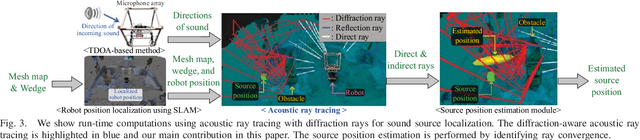

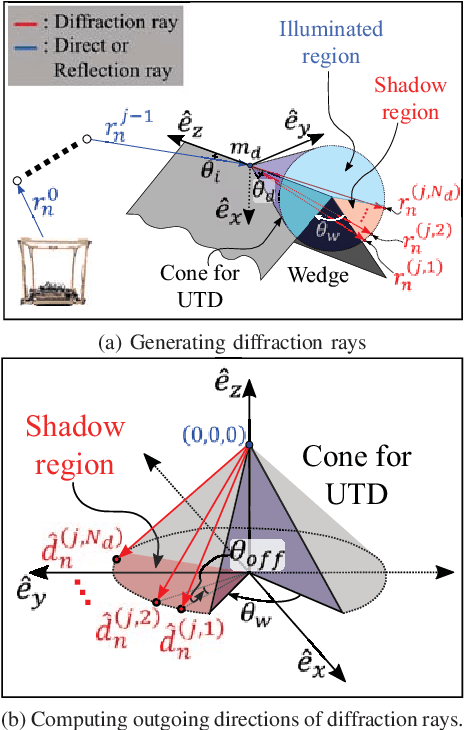

Abstract: We present a novel sound localization algorithm for a non-line-of-sight (NLOS) sound source in indoor environments. Our approach exploits the diffraction properties of sound waves as they bend around a barrier or an obstacle in the scene. We combine a ray tracing based sound propagation algorithm with a Uniform Theory of Diffraction (UTD) model, which simulates bending effects by placing a virtual sound source on a wedge in the environment. We precompute the wedges of a reconstructed mesh of an indoor scene and use them to generate diffraction acoustic rays to localize the 3D position of the source. Our method identifies the convergence region of those generated acoustic rays as the estimated source position based on a particle filter. We have evaluated our algorithm in multiple scenarios involving static and dynamic NLOS sound sources. In our tested cases, our approach can localize a source position with an average error of 0.7 m, measured by the L2 distance between estimated and actual source locations in a 7 m × 7 m × 3 m room. Furthermore, we observe 37% to 130% improvement in accuracy over a state-of-the-art localization method that does not model diffraction effects, especially when a sound source is not visible to the robot.
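To make the notion of a "convergence region" of acoustic rays concrete, the sketch below computes the single point closest, in the least-squares sense, to a set of 3D lines. This is a deliberately simplified stand-in: the paper estimates the region with a particle filter, whereas this example treats each ray as an infinite line and solves a 3×3 linear system. All names (`closest_point_to_rays`, `solve3`) are hypothetical.

```python
import math


def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("rays are parallel; no unique closest point")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for i in reversed(range(3)):
        s = M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = s / M[i][i]
    return x


def closest_point_to_rays(origins, directions):
    """Point minimizing the summed squared distance to all lines.

    For each line with origin p and unit direction u, the projector
    (I - u u^T) removes the along-line component; summing the normal
    equations gives  sum(I - u u^T) x = sum((I - u u^T) p).
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for p, d in zip(origins, directions):
        norm = math.sqrt(sum(c * c for c in d))
        u = [c / norm for c in d]
        for i in range(3):
            for j in range(3):
                m = (1.0 if i == j else 0.0) - u[i] * u[j]
                A[i][j] += m
                b[i] += m * p[j]
    return solve3(A, b)


# Three hypothetical rays that all pass through the point (1, 1, 1).
origins = [(0.0, 0.0, 0.0), (2.0, 0.0, 1.0), (1.0, 1.0, 0.0)]
directions = [(1.0, 1.0, 1.0), (-1.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
estimate = closest_point_to_rays(origins, directions)
```

With noisy rays the lines no longer intersect exactly, and the same solve returns the point that best balances the distances to all of them. A particle filter, as used in the paper, can additionally track a moving source over time, which this closed-form sketch does not attempt.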

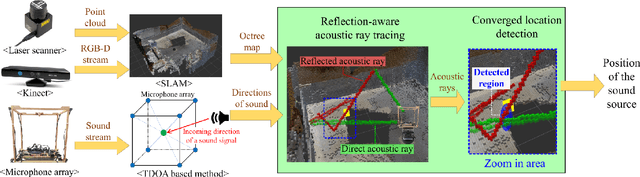

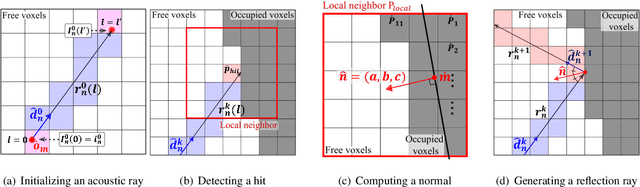

Reflection-Aware Sound Source Localization

Nov 21, 2017

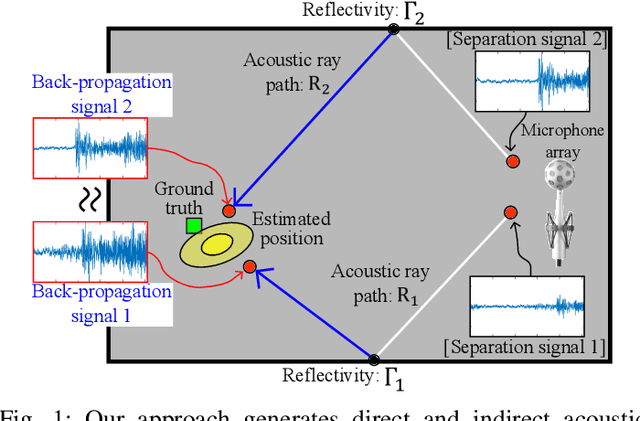

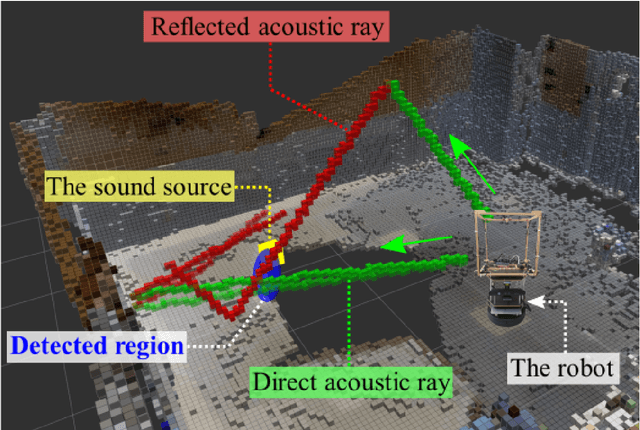

Abstract: We present a novel, reflection-aware method for 3D sound localization in indoor environments. Unlike prior approaches, which are mainly based on continuous sound signals from a stationary source, our formulation is designed to localize the position instantaneously from signals within a single frame. We consider direct sound and indirect sound signals that reach the microphones after reflecting off surfaces such as ceilings or walls. We then generate and trace direct and reflected acoustic paths using inverse acoustic ray tracing and utilize these paths with Monte Carlo localization to estimate a 3D sound source position. We have implemented our method on a robot with a cube-shaped microphone array and tested it against different settings with continuous and intermittent sound signals with a stationary or a mobile source. Across different settings, our approach can localize the sound with an average distance error of 0.8 m in a room of 7 m by 7 m area with 3 m height, including a mobile and non-line-of-sight sound source. We also reveal that the modeling of indirect rays increases the localization accuracy by 40% compared to only using direct acoustic rays.
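One step of tracing a reflected acoustic path backward from a microphone can be sketched as a ray-plane intersection followed by a specular (mirror) reflection, r = d - 2(d·n)n. The example assumes ideal specular reflection off a flat surface; the abstract does not state the reflection model, and the helper names `hit_plane` and `reflect` are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def hit_plane(origin, direction, plane_point, normal):
    """Return the point where a ray hits a plane, or None if it misses."""
    denom = dot(direction, normal)
    if abs(denom) < 1e-12:
        return None  # ray runs parallel to the plane
    offset = [p - o for p, o in zip(plane_point, origin)]
    t = dot(offset, normal) / denom
    if t <= 0.0:
        return None  # plane lies behind the ray origin
    return [o + t * d for o, d in zip(origin, direction)]


def reflect(direction, normal):
    """Specular reflection of a direction about a surface normal.

    Assumption: ideal mirror-like reflection, r = d - 2 (d . n) n.
    """
    length = math.sqrt(dot(normal, normal))
    n = [c / length for c in normal]
    k = 2.0 * dot(direction, n)
    return [d - k * c for d, c in zip(direction, n)]


# A ray leaves a microphone 1 m above the floor and heads down at 45 degrees.
mic = (0.0, 1.0, 0.0)
incoming = (1.0, -1.0, 0.0)
floor_point, floor_normal = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)

hit = hit_plane(mic, incoming, floor_point, floor_normal)
outgoing = reflect(incoming, floor_normal)
```

Repeating these two steps from `hit` along `outgoing` extends the path to further surfaces, which is how a direct ray and its reflected continuations could both be fed to a localization stage.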
