Ingrid Agartz

Contrastive-Adversarial and Diffusion: Exploring pre-training and fine-tuning strategies for sulcal identification

May 29, 2024

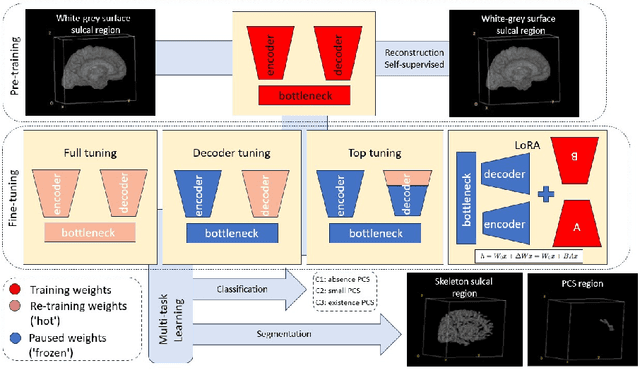

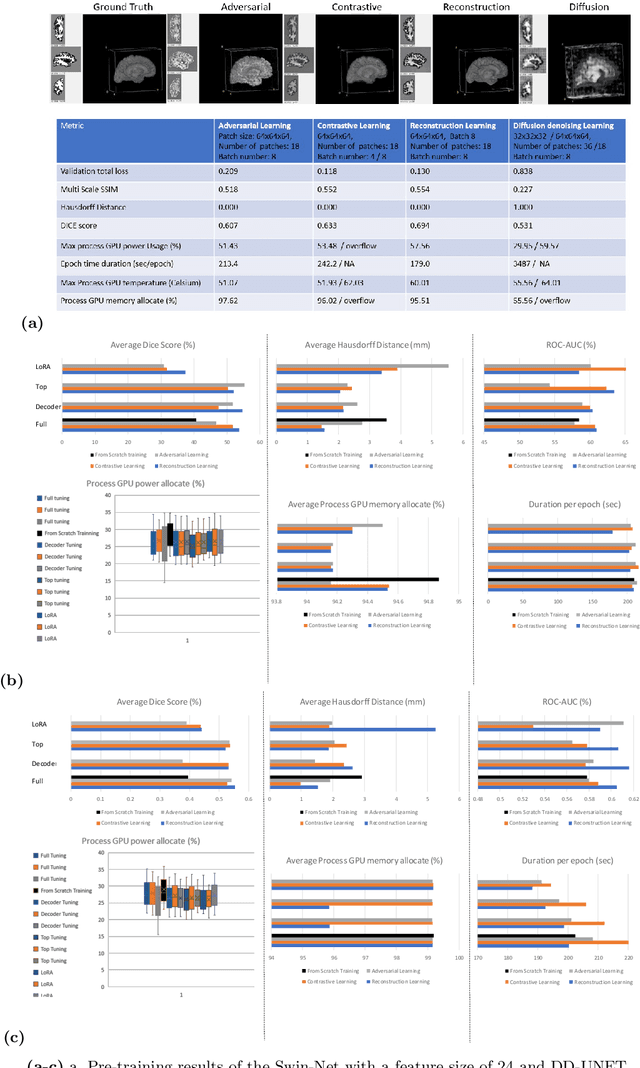

Abstract: In the last decade, computer vision has seen the establishment of various training and learning approaches. Techniques such as adversarial learning, contrastive learning, diffusion denoising learning, and ordinary reconstruction learning have become standard and represent state-of-the-art methods widely used to fully train or pre-train networks across many vision tasks. Fine-tuning has emerged as a current focal point, driven by the need for efficient model tuning that reduces GPU memory usage and time costs while improving overall performance, as exemplified by methods such as low-rank adaptation (LoRA). Key questions arise: which pre-training technique yields the best results (adversarial, contrastive, reconstruction, or diffusion denoising), and how does the performance of these approaches vary as the complexity of fine-tuning is adjusted? This study aims to clarify the advantages of pre-training techniques and fine-tuning strategies for improving the learning process of neural networks in independent and identically distributed (IID) cohorts. We underscore the significance of fine-tuning by examining several cases: full tuning, decoder tuning, top-level tuning, and fine-tuning of linear parameters using LoRA. We present systematic summaries of model performance and efficiency using metrics such as accuracy, time cost, and memory efficiency. To demonstrate our findings empirically, we focus on a multi-task segmentation-classification challenge involving the paracingulate sulcus (PCS), using different 3D convolutional neural network (CNN) architectures on the TOP-OSLO cohort of 596 subjects.
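
To illustrate why LoRA reduces the memory and time cost of fine-tuning, here is a minimal sketch of the standard low-rank adaptation update for a single linear layer: the pre-trained weight matrix W stays frozen, and only two small matrices A (rank r by input size) and B (output size by rank r) are trained, so the layer computes W x + (alpha / r) B A x. The abstract does not specify the configuration used in the study; the class name `LoRALinear`, the rank `r`, the scaling `alpha`, and the initialization scale are all hypothetical choices for illustration, and the sketch uses plain Python lists rather than a deep learning framework.

```python
import random


def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


class LoRALinear:
    """Hypothetical sketch: frozen weights W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W, r, alpha=1.0, seed=0):
        rng = random.Random(seed)
        self.W = W  # frozen pre-trained weights, shape (d_out, d_in); never updated
        d_out, d_in = len(W), len(W[0])
        self.r = r
        self.scale = alpha / r
        # Assumed initialization: A small random, B zero, so the adapted layer
        # initially reproduces the pre-trained layer exactly.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W, x)                     # frozen path: W x
        delta = matvec(self.B, matvec(self.A, x))    # low-rank path: B (A x)
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A and B receive gradient updates during fine-tuning.
        return self.r * (len(self.W[0]) + len(self.W))

    def full_params(self):
        # Number of parameters updated by full fine-tuning of this layer.
        return len(self.W) * len(self.W[0])
```

For a 512 by 512 layer with rank 8, `LoRALinear([[0.0] * 512 for _ in range(512)], r=8)` trains 8 × (512 + 512) = 8,192 parameters instead of 262,144, about 3% of the layer. This gap is the source of the memory savings; for very small layers, the low-rank factors can actually be larger than W.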

Solving the enigma: Deriving optimal explanations of deep networks

May 16, 2024

Abstract: The accelerated progress of artificial intelligence (AI) has popularized deep learning models across domains, yet their inherent opacity poses challenges, notably in critical fields such as healthcare, medicine, and the geosciences. Explainable AI (XAI) has emerged to shed light on these "black box" models and help decipher their decision-making process. However, different XAI methods yield highly different explanations. This inter-method variability increases uncertainty and lowers trust in the predictions of deep networks. In this study, we propose, for the first time, a framework designed to enhance the explainability of deep networks by maximizing both the accuracy and the comprehensibility of the explanations. Our framework integrates explanations from established XAI methods and employs a non-linear "explanation optimizer" to construct a single, optimal explanation. We validate the efficacy of our approach through experiments on multi-class and binary classification tasks in 2D object imaging and 3D neuroscience imaging. Our explanation optimizer achieved superior faithfulness scores, on average 155% and 63% higher than the best-performing XAI method in the 3D and 2D applications, respectively. Our approach also yielded lower complexity, which increases comprehensibility. These results suggest that optimal explanations based on specific criteria can be derived, addressing the issue of inter-method variability in the current XAI literature.