Ilya O. Ryzhov

Digital Twin Calibration with Model-Based Reinforcement Learning

Jan 04, 2025

Abstract:This paper presents a novel methodological framework, called the Actor-Simulator, that incorporates the calibration of digital twins into model-based reinforcement learning for more effective control of stochastic systems with complex nonlinear dynamics. Traditional model-based control often relies on restrictive structural assumptions (such as linear state transitions) and fails to account for parameter uncertainty in the model. These issues become particularly critical in industries such as biopharmaceutical manufacturing, where process dynamics are complex and not fully known, and only a limited amount of data is available. Our approach jointly calibrates the digital twin and searches for an optimal control policy, thus accounting for and reducing model error. We balance exploration and exploitation by using policy performance as a guide for data collection. This dual-component approach provably converges to the optimal policy, and outperforms existing methods in extensive numerical experiments based on the biopharmaceutical manufacturing domain.

Policy Optimization in Bayesian Network Hybrid Models of Biomanufacturing Processes

May 13, 2021

Abstract:Biopharmaceutical manufacturing is a rapidly growing industry with impact in virtually all branches of medicine. Biomanufacturing processes require close monitoring and control, in the presence of complex bioprocess dynamics with many interdependent factors, as well as extremely limited data due to the high cost and long duration of experiments. We develop a novel model-based reinforcement learning framework that can achieve human-level control in low-data environments. The model uses a probabilistic knowledge graph to capture causal interdependencies between factors in the underlying stochastic decision process, leveraging information from existing kinetic models from different unit operations while incorporating real-world experimental data. We then present a computationally efficient, provably convergent stochastic gradient method for policy optimization. Validation is conducted on a realistic application with a multi-dimensional, continuous state variable.

Personalized Multimorbidity Management for Patients with Type 2 Diabetes Using Reinforcement Learning of Electronic Health Records

Oct 29, 2020

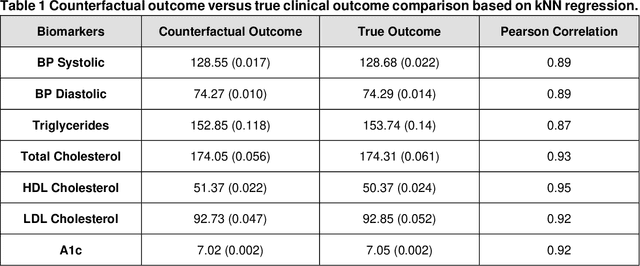

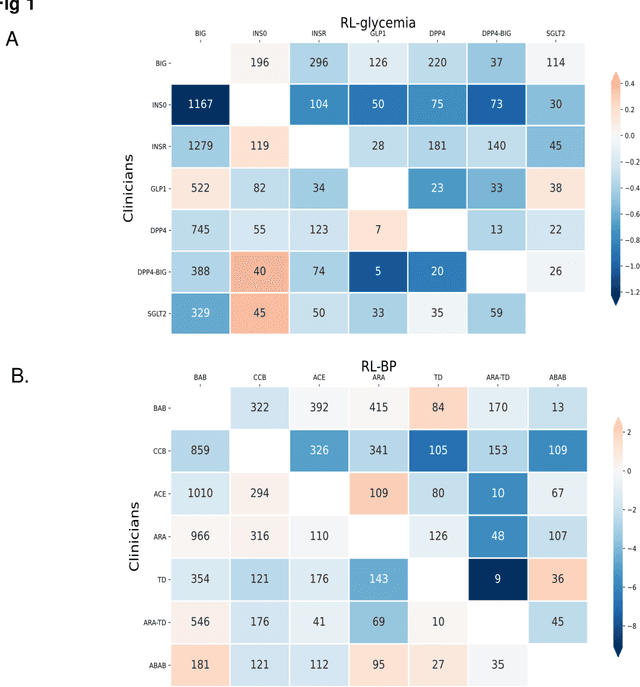

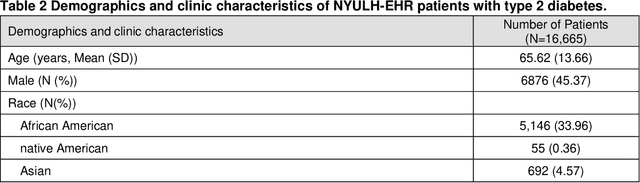

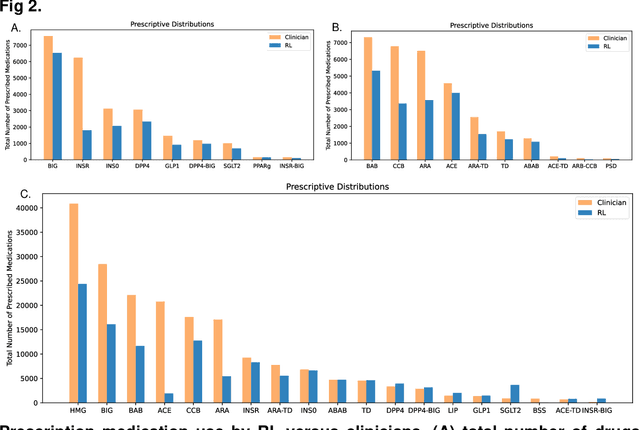

Abstract:Comorbid chronic conditions are common among people with type 2 diabetes. We developed an Artificial Intelligence algorithm, based on Reinforcement Learning (RL), for personalized diabetes and multi-morbidity management with strong potential to improve health outcomes relative to current clinical practice. In this paper, we modeled glycemia, blood pressure and cardiovascular disease (CVD) risk as health outcomes using a retrospective cohort of 16,665 patients with type 2 diabetes from New York University Langone Health ambulatory care electronic health records from 2009 to 2017. We trained an RL prescription algorithm that recommends a treatment regimen optimizing patients' cumulative health outcomes using their individual characteristics and medical history at each encounter. The RL recommendations were evaluated on an independent subset of patients. The results demonstrate that the proposed personalized reinforcement learning prescriptive framework for type 2 diabetes yielded high concordance with clinicians' prescriptions and substantial improvements in glycemia, blood pressure, and cardiovascular disease risk outcomes.

A New Optimal Stepsize For Approximate Dynamic Programming

Jul 14, 2014

Abstract:Approximate dynamic programming (ADP) has proven itself in a wide range of applications spanning large-scale transportation problems, health care, revenue management, and energy systems. The design of effective ADP algorithms has many dimensions, but one crucial factor is the stepsize rule used to update a value function approximation. Many operations research applications are computationally intensive, and it is important to obtain good results quickly. Furthermore, the most popular stepsize formulas use tunable parameters and can produce very poor results if tuned improperly. We derive a new stepsize rule that optimizes the prediction error in order to improve the short-term performance of an ADP algorithm. With only one, relatively insensitive tunable parameter, the new rule adapts to the level of noise in the problem and produces faster convergence in numerical experiments.
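For readers unfamiliar with the role of a stepsize in ADP, the following is a minimal sketch of how a stepsize rule enters a value function update. It does not reproduce the rule derived in the paper; it uses the generalized harmonic stepsize a / (a + n - 1) as a stand-in example of a formula with a tunable parameter. The function names `harmonic_stepsize` and `smooth`, the parameter `a`, and the default values are all assumptions made for illustration.

```python
from typing import Iterable


def harmonic_stepsize(n: int, a: float = 10.0) -> float:
    """Generalized harmonic stepsize a / (a + n - 1) for iteration n >= 1.

    `a` is the tunable parameter (hypothetical default); a larger `a`
    keeps the stepsize high for longer. This is NOT the paper's rule.
    """
    if n < 1:
        raise ValueError("n must be >= 1")
    return a / (a + n - 1)


def smooth(observations: Iterable[float], a: float = 10.0, v0: float = 0.0) -> float:
    """Update a scalar value estimate by exponential smoothing.

    Each noisy observation is blended into the current estimate:
        v <- (1 - alpha_n) * v + alpha_n * observation
    """
    v = v0
    for n, obs in enumerate(observations, start=1):
        alpha = harmonic_stepsize(n, a)
        v = (1 - alpha) * v + alpha * obs
    return v
```

With `a = 1` the stepsize reduces to 1/n and the estimate equals the sample mean of the observations, which tends to respond slowly when early observations are unrepresentative. Larger values of `a` weight recent observations more heavily, at the cost of more noise in the estimate, which illustrates why a poorly tuned parameter can hurt performance.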
