Ildar Babataev

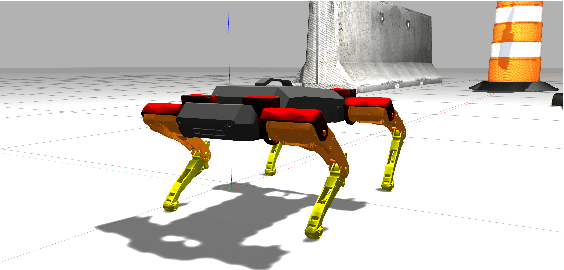

HyperGuider: Virtual Reality Framework for Interactive Path Planning of Quadruped Robot in Cluttered and Multi-Terrain Environments

Sep 20, 2022

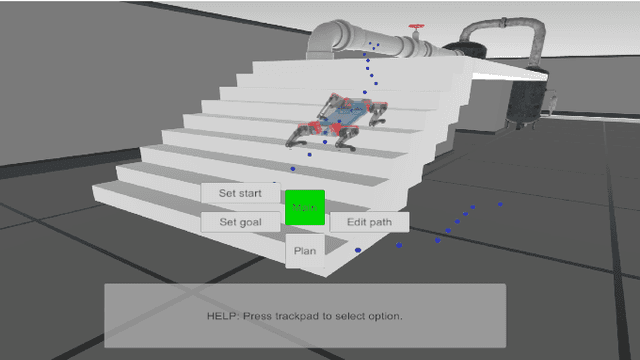

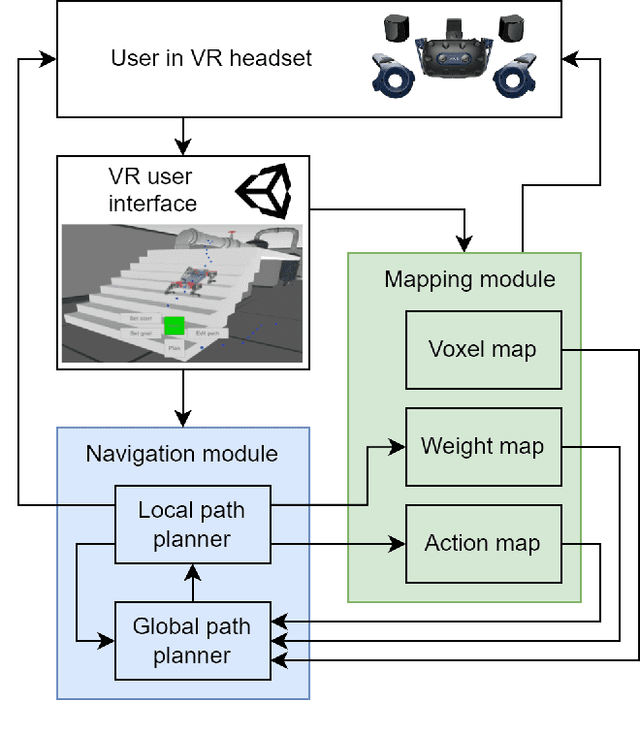

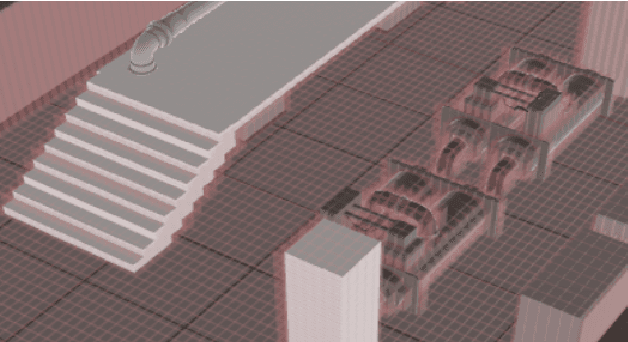

Abstract: Quadruped platforms have become an active topic of research due to their high mobility and traversability in rough terrain. However, it is highly challenging to determine whether a cluttered environment can be traversed by the robot and how exactly its path should be calculated. Moreover, the calculated path may pass through areas with dynamic objects or environments that are dangerous for the robot or people nearby. Therefore, we propose a novel conceptual approach to teaching quadruped robots navigation through user-guided path planning in virtual reality (VR). Our system contains both global and local path planners, allowing the robot to generate a path through iterations of learning. The VR interface allows the user to interact with the environment and to assist the quadruped robot in challenging scenarios. The results of comparison experiments show that cooperation between a human and path planning algorithms can increase the computational speed of the algorithm by 35.58% on average, with a non-critical increase in path length (6.66% on average) in the test scenario. Additionally, users rated the VR interface as requiring low physical demand (2.3 out of 10) and rated their own performance highly (7.1 out of 10). The ability to find a less optimal but safer path remains in demand for navigating cluttered and unstructured environments.
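The path-length figure above is a relative increase averaged over runs. A minimal sketch of how such a percentage could be computed, assuming the baseline is the path produced by the planner alone and the compared value is the path from the human-assisted run; the function names and the sample lengths are hypothetical and not taken from the paper:

```python
from statistics import mean
from typing import Sequence


def relative_increase_pct(baseline: float, value: float) -> float:
    """Percentage by which `value` exceeds `baseline` (negative if shorter)."""
    if baseline <= 0:
        raise ValueError("baseline path length must be positive")
    return (value - baseline) / baseline * 100.0


def mean_relative_increase_pct(baselines: Sequence[float], values: Sequence[float]) -> float:
    """Average the per-scenario relative increases (hypothetical helper)."""
    if len(baselines) != len(values) or not baselines:
        raise ValueError("need equal-length, non-empty inputs")
    return mean(relative_increase_pct(b, v) for b, v in zip(baselines, values))


# Illustrative path lengths in metres (assumed values, not data from the paper).
planner_only = [10.0, 20.0]
human_assisted = [11.0, 20.0]
print(round(mean_relative_increase_pct(planner_only, human_assisted), 2))  # 5.0
```

Averaging per-scenario percentages (rather than comparing total lengths) weights each scenario equally; which convention the authors used is not stated in the abstract.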

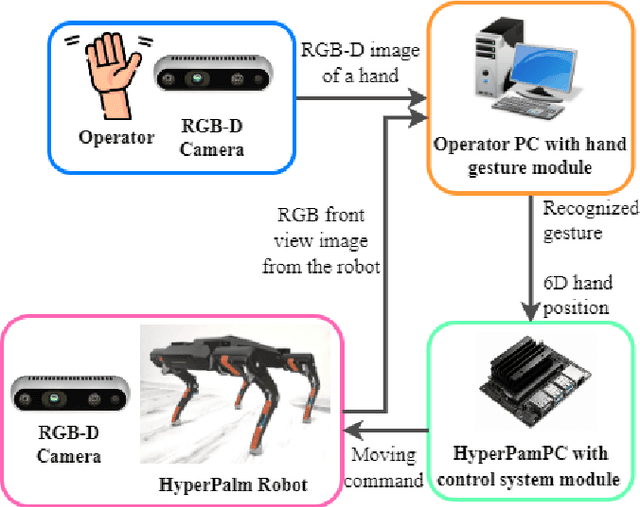

HyperPalm: DNN-based hand gesture recognition interface for intelligent communication with quadruped robot in 3D space

Sep 20, 2022

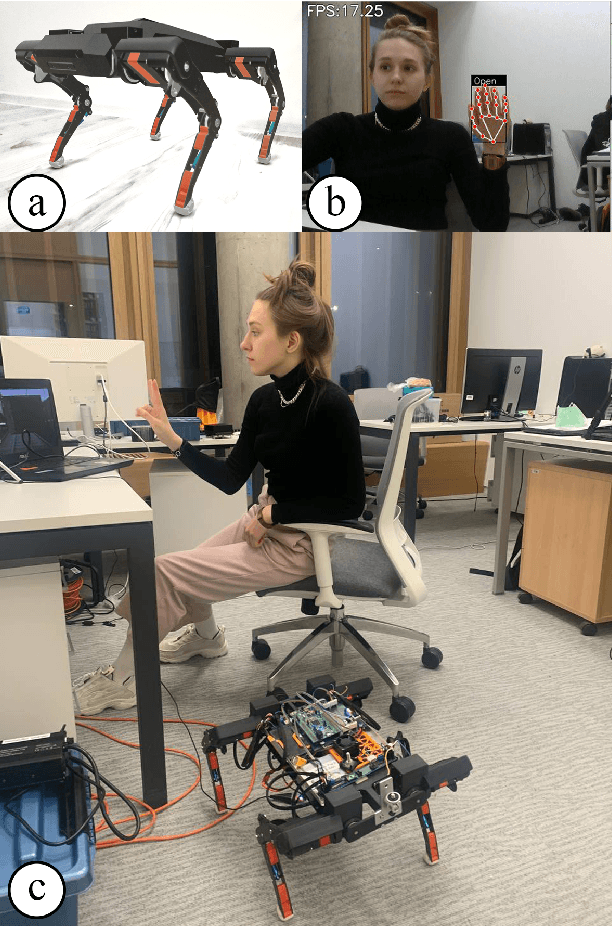

Abstract: Nowadays, autonomous mobile robots support people in many areas where human presence is either redundant or too dangerous. They have successfully proven themselves in expeditions, the gas industry, mines, warehouses, etc. However, even legged robots may get stuck in rough terrain, requiring human cognitive abilities to navigate the system. While gamepads and keyboards are convenient for wheeled robot control, a quadruped robot in 3D space can move along all linear coordinates and Euler angles, requiring at least 12 buttons for independent control of its DoF. Therefore, more convenient control interfaces are required. In this paper, we present HyperPalm: a novel gesture interface for intuitive human-robot interaction with quadruped robots. Without additional devices, the operator has full position and orientation control of the quadruped robot in 3D space through hand gesture recognition with only 5 gestures and 6-DoF hand motion. The experimental results show that the system classifies 5 static gestures with high accuracy (96.5%) and accurately predicts the 6D pose of the hand in three-dimensional space. The root-mean-square deviation (RMSD) of the absolute linear deviation for the proposed approach is 11.7 mm, almost 50% lower than for the second tested approach, and the RMSD of the absolute angular deviation is 2.6 degrees, almost 27% lower than for the second tested approach. Moreover, a user study was conducted to explore users' subjective experience of human-robot interaction through the proposed gesture interface. The participants evaluated their interaction with HyperPalm as intuitive (2.0), not causing frustration (2.63), and requiring low physical demand (2.0).
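The RMSD metric reported above is the square root of the mean squared per-sample deviation. A minimal sketch, assuming the absolute linear deviation of each sample is the Euclidean distance between the predicted and reference hand positions; the helper names and sample points are hypothetical, not the authors' evaluation code:

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def linear_deviation(predicted: Point3, reference: Point3) -> float:
    """Absolute linear deviation: Euclidean distance between two 3D points."""
    return math.dist(predicted, reference)


def rmsd(deviations: Sequence[float]) -> float:
    """Root-mean-square of per-sample absolute deviations."""
    if not deviations:
        raise ValueError("need at least one sample")
    return math.sqrt(sum(d * d for d in deviations) / len(deviations))


# Illustrative hand positions in mm (assumed values, not data from the paper).
predicted = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
linear = [linear_deviation(p, r) for p, r in zip(predicted, reference)]
print(round(rmsd(linear), 3))  # sqrt((0 + 25) / 2) ≈ 3.536
```

The same `rmsd()` can be applied to per-sample angular errors in degrees, provided those errors are first wrapped into a consistent range.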

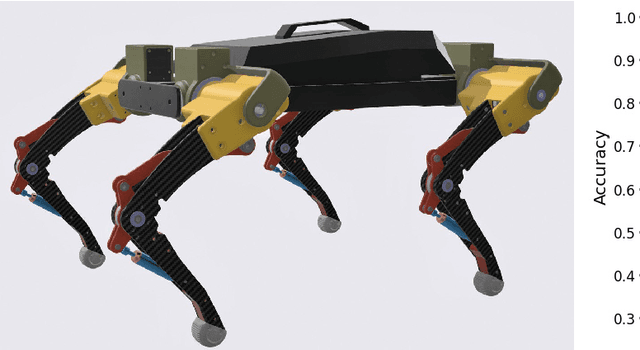

HyperDog: An Open-Source Quadruped Robot Platform Based on ROS2 and micro-ROS

Sep 19, 2022

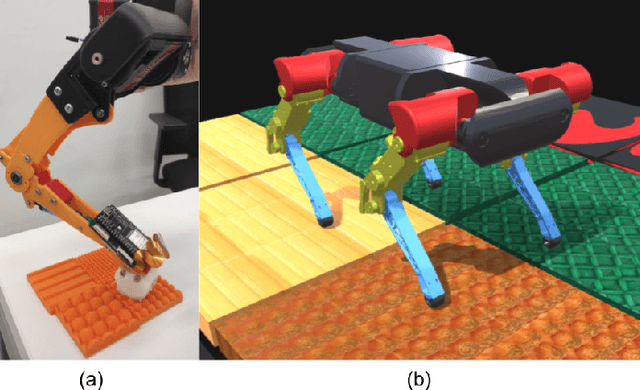

Abstract: Nowadays, the design and development of legged quadruped robots is quite an active area of scientific research. Legged robots have become popular due to their ability to adapt to harsh terrains and diverse environmental conditions compared to other mobile robots. With the growing demand for legged robot experiments, more researchers and engineers need an affordable and quick way to develop locomotion algorithms. In this paper, we present HyperDog, a new open-source quadruped robot platform featuring 12 RC servo motors, an onboard NVIDIA Jetson Nano computer, and an STM32F4 Discovery board. HyperDog is an open-source platform for quadruped robotic software development based on Robot Operating System 2 (ROS2) and micro-ROS. Moreover, HyperDog is a quadrupedal robotic dog built entirely from 3D-printed parts and carbon fiber, which gives the robot light weight and good strength. The idea of this work is to demonstrate an affordable and customizable way of developing robots and to provide researchers and engineers with a legged robot platform where different algorithms can be tested and validated in simulation and in a real environment. The project code is available on GitHub (https://github.com/NDHANA94/hyperdog_ros2).

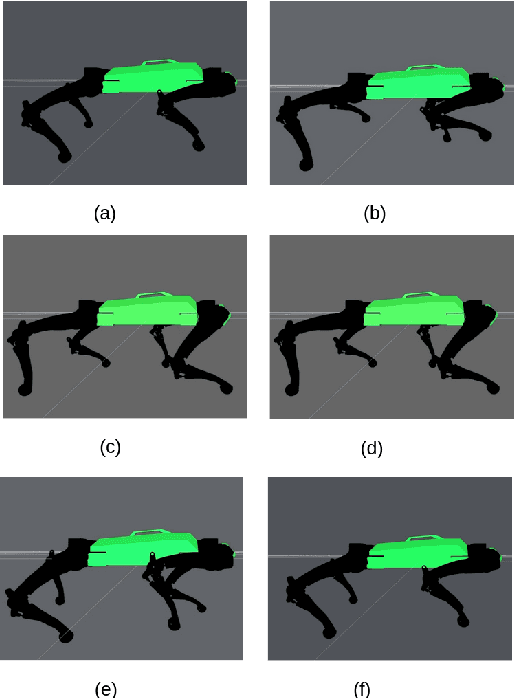

DogTouch: CNN-based Recognition of Surface Textures by Quadruped Robot with High Density Tactile Sensors

Jun 09, 2022

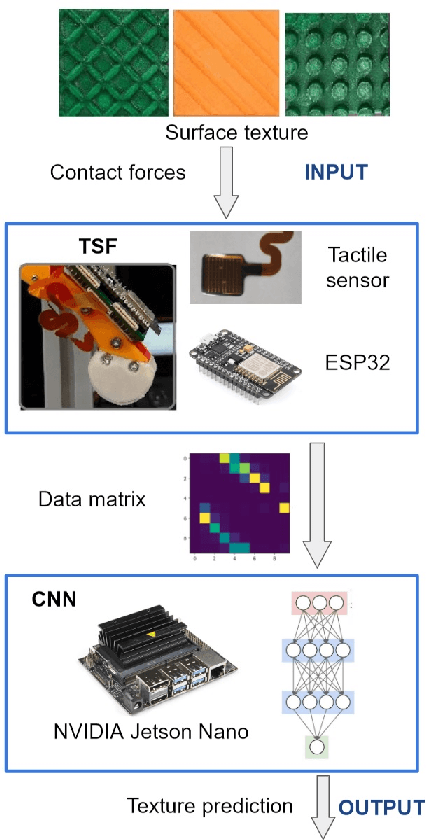

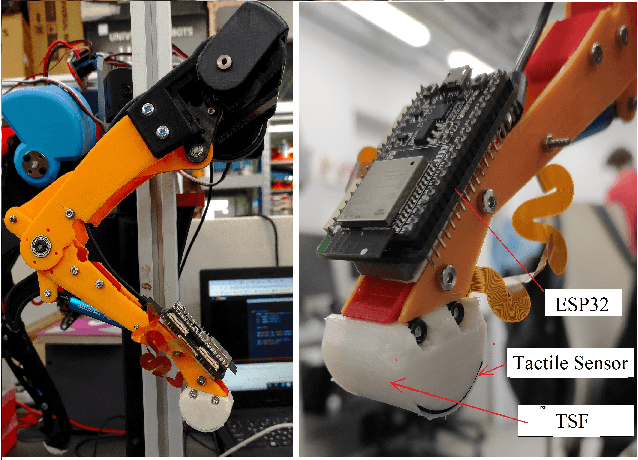

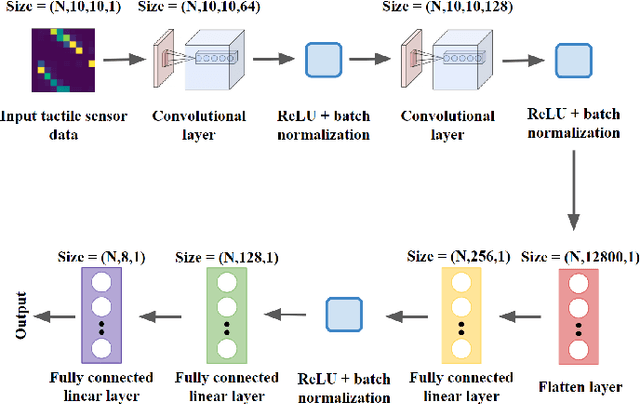

Abstract: The ability to perform locomotion on various terrains is critical for legged robots. However, to perform robust locomotion on different terrains, the robot needs a better understanding of the surface it is walking on. Animals and humans are able to recognize a surface with the help of tactile sensation in their feet. However, foot tactile sensation for legged robots has not been widely explored. This paper presents research on a novel quadruped robot, DogTouch, with tactile sensing feet (TSF). TSF allows the recognition of different surface textures using a tactile sensor and a convolutional neural network (CNN). The experimental results show a sufficient validation accuracy of 74.37% for our trained CNN-based model, with the highest recognition rate, 90%, for line patterns. In the future, we plan to improve the prediction model by presenting surface samples with various pattern depths and applying advanced deep learning and shallow learning models for surface recognition. Additionally, we propose a novel approach to navigation for quadruped and legged robots. We can arrange a tactile paving textured surface (similar to that used for blind or visually impaired people). Thus, DogTouch will be capable of locomotion in an unknown environment just by recognizing specific tactile patterns that indicate a straight path, a left or right turn, a pedestrian crossing, a road, etc. This will allow robust navigation regardless of lighting conditions. Future quadruped robots equipped with visual and tactile perception systems will be able to safely and intelligently navigate and interact in unstructured indoor and outdoor environments.
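The proposed tactile-paving navigation amounts to mapping each recognized texture class to a motion command. A minimal sketch, assuming the CNN outputs a score per texture class; the label names, command names, and the 0.7 confidence threshold are hypothetical and not taken from the paper:

```python
from typing import Dict

# Hypothetical mapping from texture labels to motion commands; the label set
# follows the examples in the abstract, the command names are made up.
COMMANDS: Dict[str, str] = {
    "straight": "walk_forward",
    "left_turn": "turn_left",
    "right_turn": "turn_right",
    "pedestrian_crossing": "stop_and_wait",
    "road": "stop_and_wait",
}


def command_from_scores(scores: Dict[str, float], min_confidence: float = 0.7) -> str:
    """Pick the most likely texture and map it to a command.

    Falls back to "stop" when the classifier is unsure (assumed threshold)
    or the label has no entry in COMMANDS.
    """
    if not scores:
        return "stop"
    label, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence < min_confidence or label not in COMMANDS:
        return "stop"
    return COMMANDS[label]


print(command_from_scores({"straight": 0.85, "left_turn": 0.10, "road": 0.05}))  # walk_forward
print(command_from_scores({"left_turn": 0.45, "right_turn": 0.40}))  # stop
```

Falling back to a stop command on low confidence is one conservative design choice; given the reported 74.37% validation accuracy, some such rejection rule would likely be needed before acting on a single prediction.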
