Hyungtak Choi

KILDST: Effective Knowledge-Integrated Learning for Dialogue State Tracking using Gazetteer and Speaker Information

Jan 18, 2023

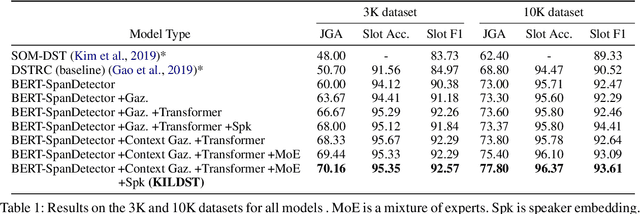

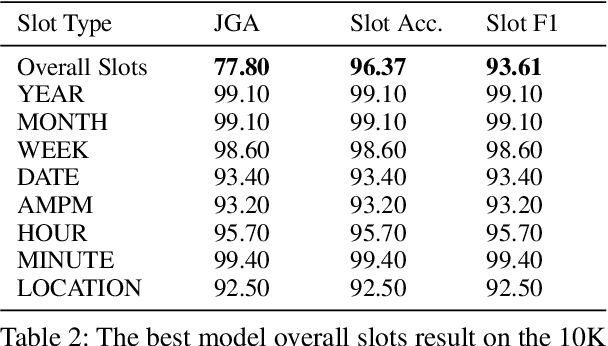

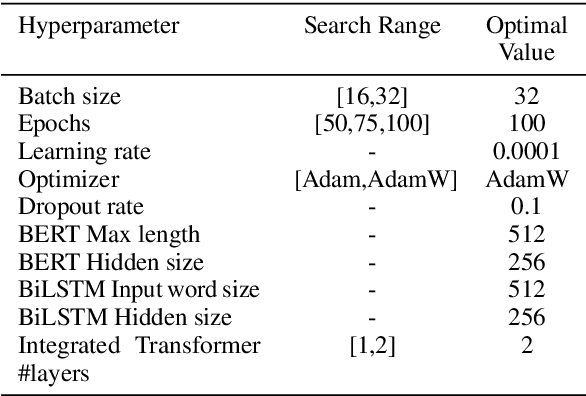

Abstract: Dialogue State Tracking (DST) is a core research topic in dialogue systems and has received much attention. As a step toward conversational AI that extracts and recommends information from dialogue between users, it is also necessary to define a new problem that deals with such user-to-user dialogue. We therefore introduce a new task: DST from dialogue between users about scheduling an event (DST-USERS). The DST-USERS task is much more challenging because it requires the model to understand and track dialogue states in the dialogue between users, and to understand who suggested the schedule and who agreed to it. To facilitate DST-USERS research, we develop datasets of dialogues between users who plan a schedule. The annotated slot values to be extracted from the dialogue are date, time, and location. Previous approaches, such as Machine Reading Comprehension (MRC) and traditional DST techniques, did not achieve good results in our extensive evaluations. By adopting a knowledge-integrated learning method, we achieve exceptional results. The proposed model architecture combines gazetteer features and speaker information efficiently. Our evaluations on these scheduling dialogue datasets show that our model outperforms the baseline model.

Ensemble-Based Deep Reinforcement Learning for Chatbots

Aug 27, 2019

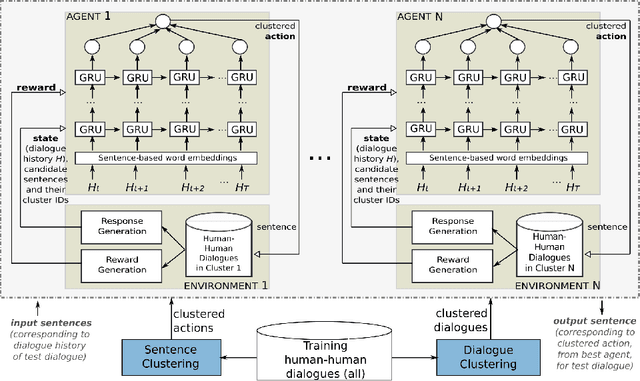

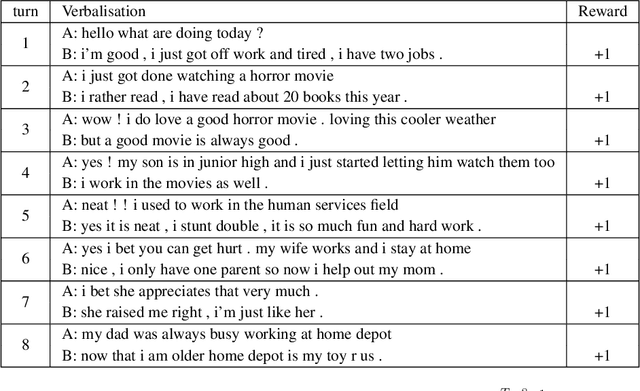

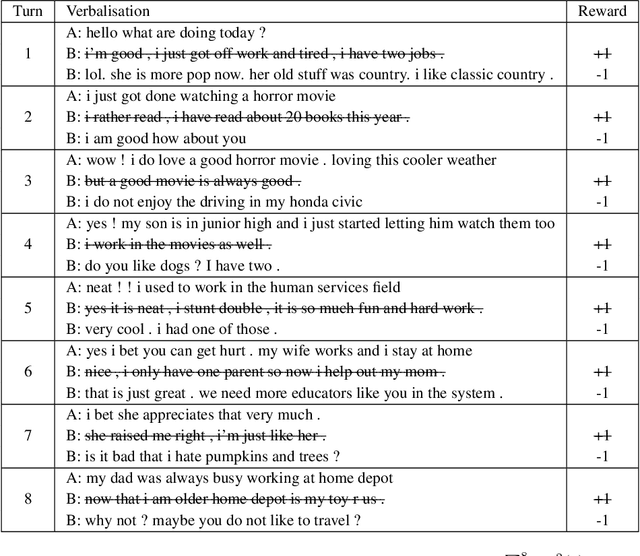

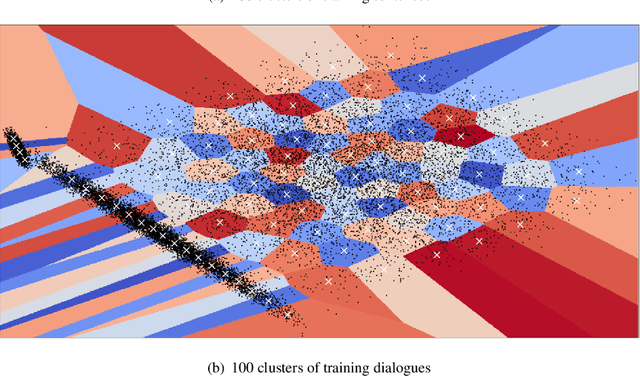

Abstract: Trainable chatbots that exhibit fluent and human-like conversations remain a big challenge in artificial intelligence. Deep Reinforcement Learning (DRL) is promising for addressing this challenge, but its successful application remains an open question. This article describes a novel ensemble-based approach applied to value-based DRL chatbots, which use finite action sets as a form of meaning representation. In our approach, dialogue actions are derived from sentence clustering, while the training datasets in our ensemble are derived from dialogue clustering. The latter aims to induce specialised agents that learn to interact in a particular style. To facilitate neural chatbot training using our proposed approach, we assume dialogue data in raw text only -- without any manually-labelled data. Experimental results using chitchat data reveal that (1) near human-like dialogue policies can be induced, (2) generalisation to unseen data is a difficult problem, and (3) training an ensemble of chatbot agents is essential for improved performance over using a single agent. In addition to evaluations using held-out data, our results are further supported by a human evaluation that rated dialogues in terms of fluency, engagingness and consistency -- which revealed that our proposed dialogue rewards strongly correlate with human judgements.
