Hussam Al Hamadi

A Visualized Malware Detection Framework with CNN and Conditional GAN

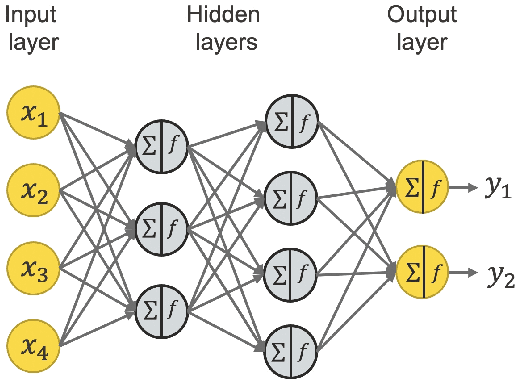

Sep 22, 2024

Abstract: Malware visualization analysis combined with Machine Learning (ML) has proven to be a promising solution for improving security defenses on different platforms. In this work, we propose an integrated framework for addressing common problems that ML practitioners face when developing malware detection systems. Namely, a pictorial presentation system with extensions is designed to preserve the identities of benign and malicious samples by encoding each variable into binary digits and mapping them to black and white pixels. A model based on a conditional Generative Adversarial Network is adopted to produce synthetic images and mitigate class imbalance. Detection models built on Convolutional Neural Networks are used to validate performance when training on datasets with and without synthetic samples. Results show accuracy rates of 98.51% and 97.26% for these two training scenarios.
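The binary-to-pixel encoding described in this abstract can be illustrated with a minimal sketch. It assumes non-negative integer features, a fixed bit width per feature, one image row per feature, and white (255) for a 1 bit; none of these choices is stated in the abstract, and the function names are hypothetical.

```python
def encode_features_to_image(features, bits_per_feature=8):
    """Turn each integer feature into one row of black (0) / white (255) pixels.

    Assumptions (not from the abstract): one row per feature, fixed bit width,
    most significant bit first, and a 1 bit drawn as a white pixel.
    """
    image = []
    for value in features:
        if not 0 <= value < 2 ** bits_per_feature:
            raise ValueError(f"{value} does not fit in {bits_per_feature} bits")
        bits = format(value, f"0{bits_per_feature}b")  # zero-padded binary string
        image.append([255 if bit == "1" else 0 for bit in bits])
    return image


def decode_image_to_features(image):
    """Invert the encoding, showing that the mapping loses no information."""
    return [
        int("".join("1" if pixel == 255 else "0" for pixel in row), 2)
        for row in image
    ]


sample = [5, 255, 0]
pixels = encode_features_to_image(sample)
restored = decode_image_to_features(pixels)
```

Because the mapping is invertible, each sample keeps its identity in image form, which is the property the abstract highlights.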

Pathway to Secure and Trustworthy 6G for LLMs: Attacks, Defense, and Opportunities

Aug 01, 2024

Abstract: Recently, large language models (LLMs) have attracted considerable interest due to their adaptability and extensibility in emerging applications, including communication networks. It is anticipated that 6G mobile edge computing networks will be able to support LLMs as a service, as they provide ultra-reliable low-latency communications and closed-loop massive connectivity. However, LLMs are vulnerable to data and model privacy issues that undermine their trustworthiness when deployed for user-based services. In this paper, we explore the security vulnerabilities associated with fine-tuning LLMs in 6G networks, in particular the membership inference attack. We define the characteristics of an attack network that can perform a membership inference attack if the attacker has access to the fine-tuned model for the downstream task. We show that membership inference attacks are effective for any downstream task, which can lead to a personal data breach when using an LLM as a service. The experimental results show that a maximum attack success rate of 92% can be achieved on a named entity recognition task. Based on the experimental analysis, we discuss possible defense mechanisms and present possible research directions to make LLMs more trustworthy in the context of 6G networks.
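To make the idea of membership inference concrete, here is a minimal sketch of a confidence-threshold attack. This is a deliberately simpler stand-in for the attack network the abstract describes: it assumes the attacker can observe the fine-tuned model's top-class confidence for each candidate record, and the threshold value and function names are hypothetical.

```python
def infer_membership(confidences, threshold=0.9):
    """Guess that a record was in the training set when the model is very confident.

    Assumption: models tend to be more confident on data they were trained on.
    The threshold of 0.9 is an illustrative value, not one taken from the paper.
    """
    return [confidence >= threshold for confidence in confidences]


def attack_success_rate(predicted, actual):
    """Fraction of records whose membership status was guessed correctly."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Toy confidences observed by the attacker and the true membership labels.
observed = [0.99, 0.55, 0.95, 0.60]
truth = [True, False, False, False]
guesses = infer_membership(observed)
rate = attack_success_rate(guesses, truth)
```

A learned attack network replaces the fixed threshold with a classifier trained on the target model's outputs, but the success metric is computed the same way.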

A Late Multi-Modal Fusion Model for Detecting Hybrid Spam E-mail

Oct 26, 2022

Abstract: In recent years, spammers have tried to obfuscate their intent by introducing hybrid spam e-mail that combines image and text parts, which is more challenging to detect than e-mail containing text or images only. The motivation behind this research is to design an effective approach for filtering out hybrid spam e-mails in situations where traditional text-only or image-only filters fail to detect them. To the best of our knowledge, only a few studies have been conducted with the goal of detecting hybrid spam e-mails. Ordinarily, Optical Character Recognition (OCR) technology is used to eliminate the image parts of spam by transforming images into text. However, although OCR scanning is a very successful technique for processing text-and-image hybrid spam, it is not an effective solution for large volumes of e-mail because of the CPU power and execution time required to scan e-mail files. In addition, OCR techniques are not always reliable in the transformation process. To address these problems, we propose new late multi-modal fusion training frameworks for a text-and-image hybrid spam e-mail filtering system and compare them with the classical early fusion detection frameworks based on the OCR method. A Convolutional Neural Network (CNN) and Continuous Bag of Words were implemented to extract features from the image and text parts of hybrid spam, respectively, and the generated features were fed to a sigmoid layer and to Machine Learning based classifiers, including Random Forest (RF), Decision Tree (DT), Naive Bayes (NB) and Support Vector Machine (SVM), to classify each e-mail as ham or spam.
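As a rough illustration of late fusion, the sketch below combines two per-modality spam probabilities with a weighted average. The abstract does not state the fusion rule used in the paper (it feeds extracted features to a sigmoid layer and classical classifiers), so the averaging rule, weights, threshold, and function names here are all assumptions chosen for simplicity.

```python
def late_fusion_score(text_prob, image_prob, text_weight=0.5):
    """Blend the text model's and the image model's spam probabilities.

    Assumption: each modality has its own model that outputs a probability
    in [0, 1]; the two outputs are merged only at this final stage.
    """
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be between 0 and 1")
    return text_weight * text_prob + (1.0 - text_weight) * image_prob


def classify_email(text_prob, image_prob, threshold=0.5, text_weight=0.5):
    """Label an e-mail 'spam' when the fused score reaches the threshold."""
    fused = late_fusion_score(text_prob, image_prob, text_weight)
    return "spam" if fused >= threshold else "ham"


# The text part looks harmless, but the image part is strongly spam-like.
label = classify_email(text_prob=0.2, image_prob=0.9)
```

No OCR step appears anywhere in this flow: the image is scored directly by its own model, which is the cost that late fusion is meant to avoid.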

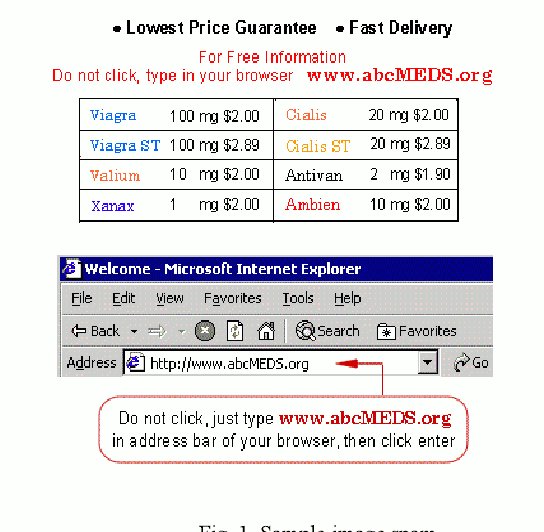

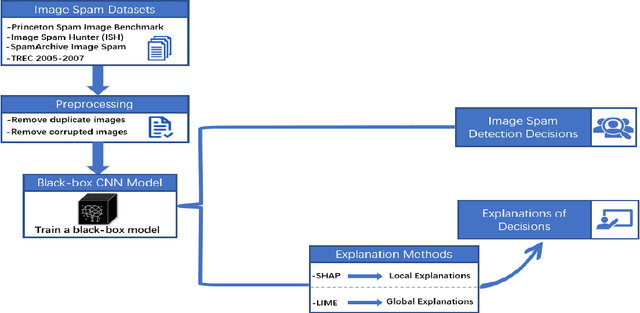

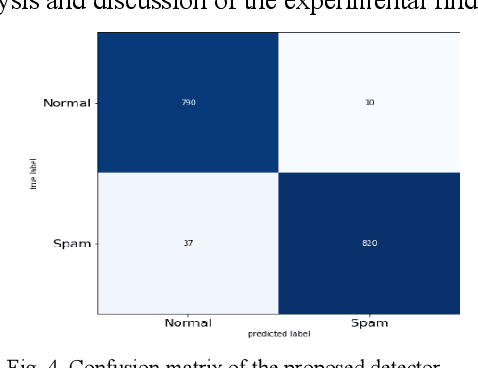

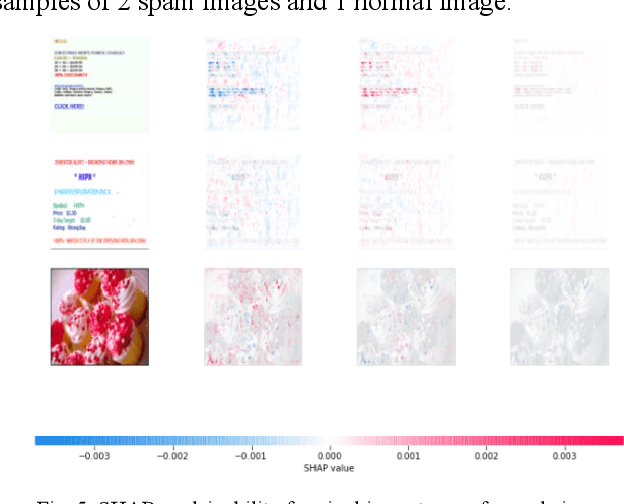

Explainable Artificial Intelligence to Detect Image Spam Using Convolutional Neural Network

Sep 07, 2022

Abstract: Image spam detection has remained a popular area of research as the internet has expanded rapidly. This research presents an explainable framework for detecting spam images using Convolutional Neural Network (CNN) algorithms and Explainable Artificial Intelligence (XAI) algorithms. In this work, we use a CNN model to classify image spam, and post-hoc XAI methods, including Local Interpretable Model-Agnostic Explanations (LIME) and Shapley Additive Explanations (SHAP), are deployed to explain the decisions that the black-box CNN models make about spam image detection. We train and then evaluate the proposed approach on a dataset of 6636 images, including spam images and normal images collected from three different publicly available email corpora. The experimental results show that the proposed framework achieves satisfactory detection results across different performance metrics, and that the model-independent XAI algorithms can explain the decisions of different models, which could be used for comparison in future studies.

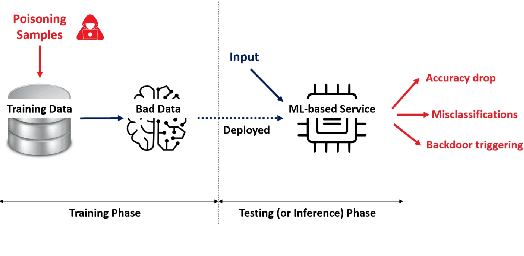

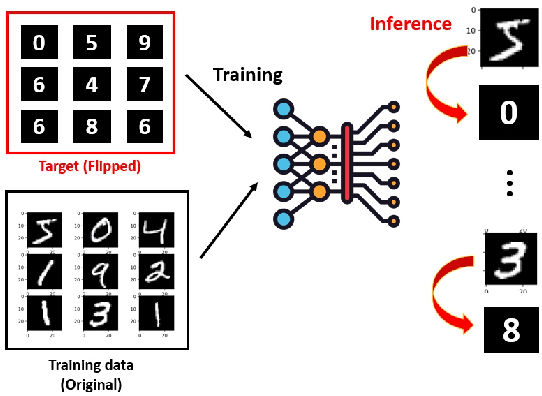

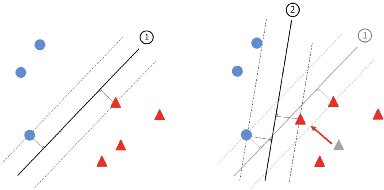

Poisoning Attacks and Defenses on Artificial Intelligence: A Survey

Feb 22, 2022

Abstract: Machine learning models have been widely adopted in several fields. However, recent studies have revealed several vulnerabilities to attacks that can jeopardize the integrity of the model, opening a new window of research opportunity in cyber-security. The main intention of this survey is to highlight the most relevant information on security vulnerabilities of machine learning (ML) classifiers, and more specifically on data poisoning attacks against training procedures. This type of attack tampers with the data samples fed to the model during the training phase, leading to a degradation in the model's accuracy during the inference phase. This work compiles the most relevant insights and findings from the latest literature addressing this type of attack. Moreover, this paper also covers several defense techniques that promise feasible detection and mitigation mechanisms, capable of conferring a certain level of robustness on a target model against an attacker. A thorough assessment is performed on the reviewed works, comparing the effects of data poisoning on a wide range of ML models in real-world conditions through quantitative and qualitative analyses. This paper analyzes the main characteristics of each approach, including performance success metrics, required hyperparameters, and deployment complexity. Moreover, this paper emphasizes the underlying assumptions and limitations considered by both attackers and defenders, along with their intrinsic properties such as availability, reliability, privacy, accountability, and interpretability. Finally, this paper concludes by referring to some of the main existing research trends that provide pathways towards future research directions in the field of cyber-security.
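One of the simplest forms of data poisoning is label flipping, sketched below for binary labels. This is only an illustrative instance of tampering with training data; the abstract does not single out this attack, and the function name, the `fraction` parameter, and the fixed seed are hypothetical choices.

```python
import random


def flip_labels(labels, fraction, seed=0):
    """Return a copy of binary labels with a given fraction flipped (0 <-> 1).

    Assumptions: labels are 0 or 1, and the attacker can alter a chosen
    fraction of the training set before the model is trained on it.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    rng = random.Random(seed)  # local generator keeps the example reproducible
    n_flip = int(len(labels) * fraction)
    flip_indices = set(rng.sample(range(len(labels)), n_flip))
    return [1 - y if i in flip_indices else y for i, y in enumerate(labels)]


clean = [0, 1] * 10                      # 20 clean training labels
poisoned = flip_labels(clean, fraction=0.25)
changed = sum(a != b for a, b in zip(clean, poisoned))
```

Training the same classifier on `clean` and on `poisoned` and comparing test accuracy gives a basic measure of the degradation that the survey discusses.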