Parus Khuwaja

Pathway to Secure and Trustworthy 6G for LLMs: Attacks, Defense, and Opportunities

Aug 01, 2024

Abstract: Recently, large language models (LLMs) have been gaining considerable interest due to their adaptability and extensibility in emerging applications, including communication networks. It is anticipated that 6G mobile edge computing networks will be able to support LLMs as a service, as they provide ultra-reliable low-latency communications and closed-loop massive connectivity. However, LLMs are vulnerable to data and model privacy issues that affect their trustworthiness when deployed for user-based services. In this paper, we explore the security vulnerabilities associated with fine-tuning LLMs in 6G networks, in particular the membership inference attack. We define the characteristics of an attack network that can perform a membership inference attack if the attacker has access to the fine-tuned model for the downstream task. We show that membership inference attacks are effective for any downstream task, which can lead to a personal data breach when using LLMs as a service. The experimental results show that a maximum attack success rate of 92% can be achieved on the named entity recognition task. Based on the experimental analysis, we discuss possible defense mechanisms and present possible research directions to make LLMs more trustworthy in the context of 6G networks.
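The abstract does not detail the attack network itself. As a point of reference only, the simplest membership inference baseline thresholds the fine-tuned model's per-record loss: records the model was trained on tend to have lower loss. The sketch below assumes the attacker can query per-record losses and has a small calibration set with known membership; the function names and the toy loss values are hypothetical, and this is not the paper's attack network.

```python
# Loss-threshold membership inference: a generic baseline, not the paper's attack network.
from typing import List, Sequence


def predict_membership(losses: Sequence[float], threshold: float) -> List[bool]:
    # A fine-tuned model usually has lower loss on records it was trained on,
    # so a loss strictly below the threshold is read as "member".
    return [loss < threshold for loss in losses]


def attack_success_rate(predicted: Sequence[bool], actual: Sequence[bool]) -> float:
    # Fraction of records whose membership the attacker guessed correctly.
    if len(predicted) != len(actual) or not actual:
        raise ValueError("expected two non-empty sequences of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def best_threshold(member_losses: Sequence[float], nonmember_losses: Sequence[float]) -> float:
    # Calibrate on records whose membership the attacker knows (assumed available,
    # e.g. from a shadow model) and keep the threshold with the highest success rate.
    losses = list(member_losses) + list(nonmember_losses)
    actual = [True] * len(member_losses) + [False] * len(nonmember_losses)
    values = sorted(set(losses))
    thresholds = [values[0]]                                         # "non-member" for all
    thresholds += [(a + b) / 2 for a, b in zip(values, values[1:])]  # midpoints between losses
    thresholds.append(values[-1] + 1.0)                              # "member" for all
    return max(thresholds,
               key=lambda t: attack_success_rate(predict_membership(losses, t), actual))


if __name__ == "__main__":
    # Toy per-record losses, invented for illustration.
    members, nonmembers = [0.05, 0.10, 0.20, 0.90], [0.60, 1.20, 1.50, 2.00]
    t = best_threshold(members, nonmembers)
    preds = predict_membership(members + nonmembers, t)
    print(t, attack_success_rate(preds, [True] * 4 + [False] * 4))  # success rate 0.875
```

A loss threshold is the weakest reasonable attacker; a learned attack network of the kind the abstract describes would replace `predict_membership` with a trained classifier over richer model outputs.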

ChatGPT Needs SPADE (Sustainability, PrivAcy, Digital divide, and Ethics) Evaluation: A Review

Apr 13, 2023

Abstract: ChatGPT is the latest in a line of large language models (LLMs), but due to its performance and ability to converse effectively, it has gained huge popularity in both the research and industrial communities. Recently, many studies have been published on the effectiveness, efficiency, integration, and sentiments of ChatGPT and other LLMs. In contrast, this study focuses on important aspects that are mostly overlooked, i.e., sustainability, privacy, digital divide, and ethics, and suggests that not only ChatGPT but every subsequent entry in the category of conversational bots should undergo Sustainability, PrivAcy, Digital divide, and Ethics (SPADE) evaluation. This paper discusses in detail the issues and concerns raised over ChatGPT in line with the aforementioned characteristics. We support our hypothesis with some preliminary data collection and visualizations, along with hypothesized facts. We also suggest mitigations and recommendations for each of the concerns. Furthermore, we suggest some policies and recommendations for an AI policy act, should governments design one.

SPIN: Simulated Poisoning and Inversion Network for Federated Learning-Based 6G Vehicular Networks

Nov 21, 2022

Abstract: Vehicular network applications benefit from the vision of beyond-5G and 6G technologies, such as ultra-dense network topologies, low latency, and high data rates. Vehicular networks have always faced data privacy preservation concerns, which led to the advent of distributed learning techniques such as federated learning. Although federated learning has solved data privacy preservation issues to some extent, the technique is quite vulnerable to model inversion and model poisoning attacks. We assume that the design of defense mechanisms and the design of attacks are two sides of the same coin. Designing a method to reduce vulnerability requires an attack that is effective and challenging, with real-world implications. In this work, we propose a simulated poisoning and inversion network (SPIN) that leverages an optimization approach to reconstruct data from a differential model trained by a vehicular node and intercepted when transmitted to the roadside unit (RSU). We then train a generative adversarial network (GAN) to improve data generation with each passing round and global update from the RSU. Evaluation results show the qualitative and quantitative effectiveness of the proposed approach. The attack initiated by SPIN can reduce accuracy by up to 22% on publicly available datasets while using just a single attacker. We assume that revealing simulations of such attacks would help us find effective defense mechanisms against them.
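Why an intercepted model update can leak training data is easiest to see in the simplest setting: one fully connected layer z = Wx + b updated by a single SGD step on a single sample. Since dL/dW = (dL/dz)xᵀ and dL/db = dL/dz, any row of the weight change divided by the matching bias change returns x exactly. This is a textbook property of linear layers, shown as a minimal sketch; it is not SPIN's optimization- and GAN-based reconstruction, and the helper names, squared-error loss, and toy values are assumptions.

```python
# Recovering a private input from an intercepted single-sample update of a linear layer.
# Illustrative only: not SPIN's method; names and values are hypothetical.
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def client_sgd_step(W: Matrix, b: Vector, x: Vector, y: Vector,
                    lr: float) -> Tuple[Matrix, Vector]:
    # Forward pass z = W x + b with squared-error loss 0.5 * ||z - y||^2.
    z = [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    dz = [zi - yi for zi, yi in zip(z, y)]               # dL/dz
    grad_W = [[dzi * xj for xj in x] for dzi in dz]      # dL/dW = dz x^T
    grad_b = dz                                          # dL/db = dz
    new_W = [[w - lr * g for w, g in zip(rw, rg)] for rw, rg in zip(W, grad_W)]
    new_b = [bi - lr * g for bi, g in zip(b, grad_b)]
    return new_W, new_b


def invert_update(W_old: Matrix, b_old: Vector, W_new: Matrix, b_new: Vector,
                  eps: float = 1e-12) -> Vector:
    # Deltas are dW = -lr * dz x^T and db = -lr * dz, so row i of dW / db[i] = x.
    dW = [[n - o for n, o in zip(rn, ro)] for rn, ro in zip(W_new, W_old)]
    db = [n - o for n, o in zip(b_new, b_old)]
    i = max(range(len(db)), key=lambda k: abs(db[k]))    # largest bias change: most stable
    if abs(db[i]) < eps:
        raise ValueError("bias did not change; input cannot be recovered this way")
    return [d / db[i] for d in dW[i]]


if __name__ == "__main__":
    W = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1]]
    b = [0.0, 0.1]
    x_private, y = [1.0, 2.0, 3.0], [0.0, 1.0]
    W_new, b_new = client_sgd_step(W, b, x_private, y, lr=0.1)
    print(invert_update(W, b, W_new, b_new))  # approximately [1.0, 2.0, 3.0]
```

Deeper networks, batched updates, and several local epochs break this exact division, which is why attacks in that setting fall back on optimization or generative models.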

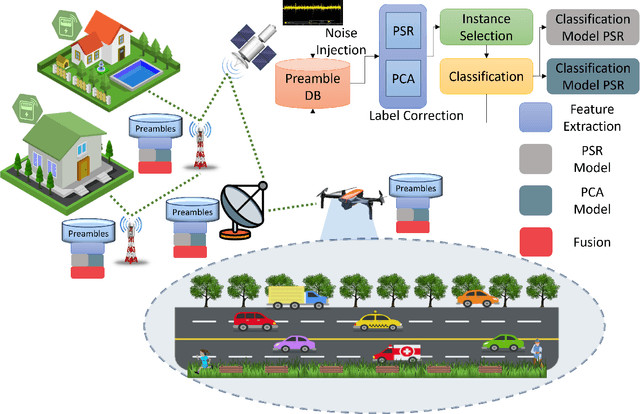

IIFNet: A Fusion based Intelligent Service for Noisy Preamble Detection in 6G

Apr 22, 2022

Abstract: In this article, we present our vision of preamble detection in a physical random access channel for next-generation (Next-G) networks using machine learning techniques. Preamble detection is performed to maintain communication and synchronization between Internet of Everything (IoE) devices and next-generation nodes. Given their scalability and traffic density requirements, Next-G networks have to deal with preambles corrupted by noise due to channel characteristics or environmental constraints. We show that when 15% random noise is injected, the detection performance degrades to 48%. We propose an informative instance-based fusion network (IIFNet) to cope with random noise and improve detection performance simultaneously. A novel sampling strategy for selecting informative instances from feature spaces is also explored to improve detection performance. The proposed IIFNet is tested on a real preamble detection dataset collected with the help of a reputable commercial company.

* 7 pages, 3 figures, 2 tables, Accepted Article

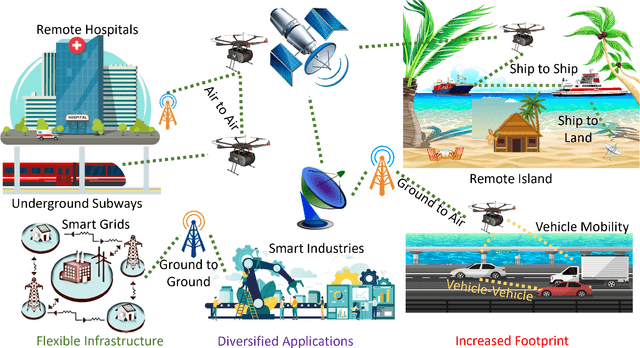

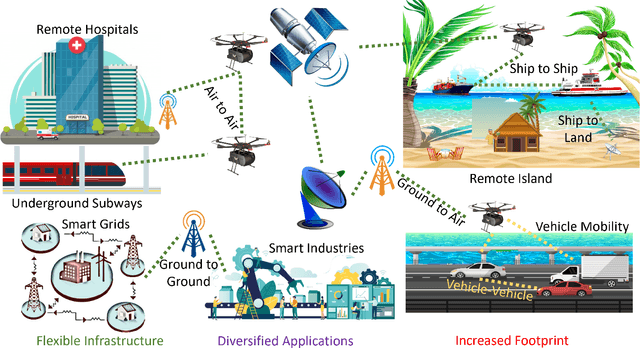

Towards Energy Efficient Distributed Federated Learning for 6G Networks

Jan 19, 2022

Abstract: The provision of communication services via portable and mobile devices, such as aerial base stations, is a crucial concept to be realized in 5G/6G networks. Conventionally, IoT/edge devices need to transmit their data directly to the base station to train a model using machine learning techniques. This data transmission introduces privacy issues that might lead to security concerns and monetary losses. Federated learning was recently proposed to partially solve these privacy issues by sharing models, rather than raw data, with the base station. However, the centralized nature of federated learning only allows devices within the vicinity of base stations to share their trained models. Furthermore, long-range communication compels devices to increase their transmission power, which raises energy efficiency concerns. In this work, we propose a distributed federated learning (DBFL) framework that overcomes the connectivity and energy efficiency issues for distant devices. The DBFL framework is compatible with mobile edge computing architecture and connects the devices in a distributed manner using clustering protocols. Experimental results show that the framework increases classification performance by 7.4% compared with conventional federated learning while reducing energy consumption.

* 8 pages, 4 figures, 1 table, Magazine Article
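The DBFL abstract says only that devices are connected "in a distributed manner using clustering protocols". One way to picture the aggregation step, assuming each cluster has a head that averages its members' models before forwarding a single model, is two-level, sample-weighted federated averaging. The cluster heads, data layout, and function names below are hypothetical, and the energy side of the framework is not modeled.

```python
# Two-level, sample-weighted federated averaging (hypothetical sketch, not DBFL itself).
from typing import Dict, List, Tuple

Params = List[float]


def weighted_average(models: List[Tuple[Params, int]]) -> Tuple[Params, int]:
    # FedAvg-style aggregation: weight each parameter vector by its sample count.
    total = sum(n for _, n in models)
    if total == 0:
        raise ValueError("no training samples to aggregate")
    dim = len(models[0][0])
    avg = [sum(p[k] * n for p, n in models) / total for k in range(dim)]
    return avg, total


def clustered_round(device_models: Dict[str, Tuple[Params, int]],
                    clusters: Dict[str, List[str]]) -> Params:
    # Tier 1: each (assumed) cluster head averages its member devices, so distant
    # devices only transmit to a nearby head rather than to the base station.
    head_models = [weighted_average([device_models[d] for d in members])
                   for members in clusters.values() if members]
    # Tier 2: head aggregates, carrying their sample counts, form the global model.
    global_params, _ = weighted_average(head_models)
    return global_params


if __name__ == "__main__":
    devices = {"a": ([1.0, 0.0], 10), "b": ([3.0, 2.0], 30), "c": ([0.0, 4.0], 20)}
    clusters = {"head1": ["a", "b"], "head2": ["c"]}
    print(clustered_round(devices, clusters))
```

Because sample counts are carried upward, the two-tier result equals flat FedAvg over all devices; in this sketch clustering changes which nodes communicate, not the aggregate model.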

Towards Industrial Private AI: A two-tier framework for data and model security

Jul 27, 2021

Abstract: With the advances in 5G and IoT devices, industries are widely adopting artificial intelligence (AI) techniques to improve classification and prediction-based services. However, the use of AI also raises privacy and security concerns, since data can be misused or leaked. The term Private AI was recently coined to address the data security issue by combining AI with encryption techniques, but existing studies have shown that model inversion attacks can be used to reverse-engineer images from model parameters. In this regard, we propose a federated learning and encryption-based private (FLEP) AI framework that provides two-tier security for data and model parameters in an IIoT environment. We propose a three-layer encryption method for data security and provide a hypothetical method to secure the model parameters. Experimental results show that the proposed method achieves better encryption quality at the expense of slightly increased execution time. We also highlight several open issues and challenges regarding the realization of the FLEP AI framework.

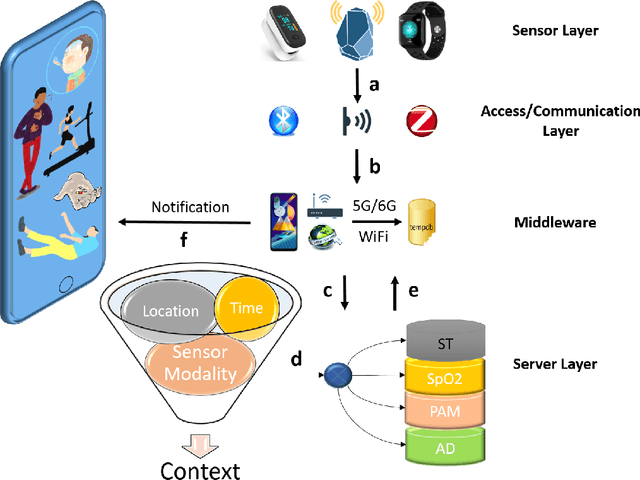

Internet of Everything enabled solution for COVID-19, its new variants and future pandemics: Framework, Challenges, and Research Directions

Jan 08, 2021

Abstract: After affecting the world in unexpected ways, COVID-19 has started mutating, as evidenced by the emergence of its new variants. Governments, hospitals, schools, industries, and people in general are looking to a vaccine as a potential solution; one will eventually be available, but its timeline for eradicating the virus is still unknown. Several researchers have encouraged and recommended good practices such as physical healthcare monitoring, immunity boosting, personal hygiene, mental healthcare, and contact tracing to slow the spread of the virus. In this article, we propose the use of wearable/mobile sensors integrated with the Internet of Everything to cover the spectrum of good practices in an automated manner. We present hypothetical frameworks for each of the good practice modules and propose the COvid-19 Resistance Framework using the Internet of Everything (CORFIE) to tie all the individual modules into a unified architecture. We envision that CORFIE would be influential in helping people adapt to the new normal for current and future pandemics, as well as instrumental in halting economic losses. We also present potential challenges and their probable solutions in relation to the proposed CORFIE.
