Huong Vu

TATL: Task Agnostic Transfer Learning for Skin Attributes Detection

Apr 04, 2021

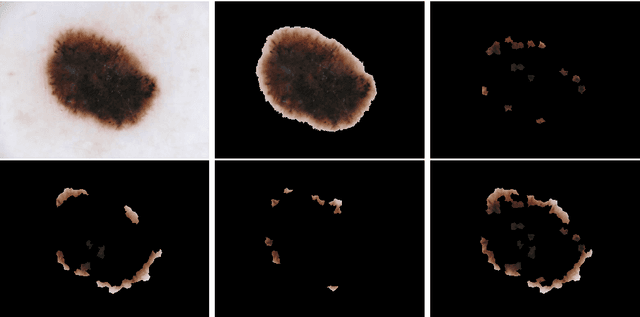

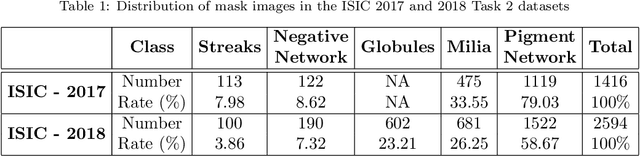

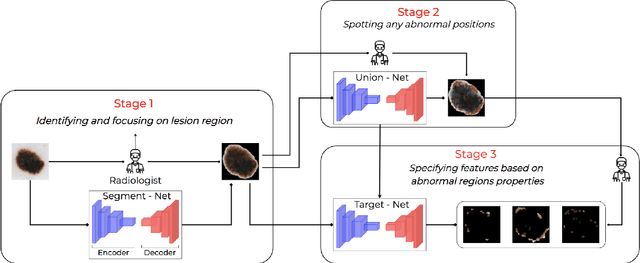

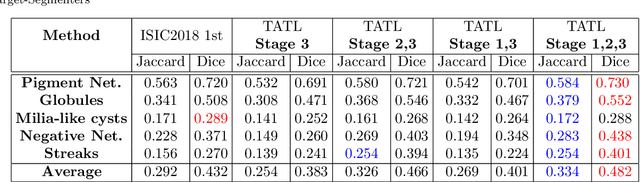

Abstract: Existing skin attributes detection methods usually initialize with a network pre-trained on ImageNet and then fine-tune it on the medical target task. However, we argue that such approaches are suboptimal because medical datasets differ substantially from ImageNet and often contain limited training samples. In this work, we propose Task Agnostic Transfer Learning (TATL), a novel framework motivated by dermatologists' behaviors in the skincare context. TATL learns an attribute-agnostic segmenter that detects lesion skin regions and then transfers this knowledge to a set of attribute-specific classifiers to detect each particular region's attributes. Since TATL's attribute-agnostic segmenter only detects abnormal skin regions, it enjoys ample data from all attributes, allows transferring knowledge among features, and compensates for the lack of training data from rare attributes. We extensively evaluate TATL on two popular skin attributes detection benchmarks and show that TATL outperforms state-of-the-art methods while enjoying minimal model and computational complexity. We also provide theoretical insights and explanations for why TATL works well in practice.
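The two-stage idea in the abstract can be illustrated with a small sketch. It is not the authors' implementation: it assumes that the attribute-agnostic target is the pixel-wise union of the per-attribute masks, and that "transfer" means initializing each attribute-specific model from a copy of the segmenter's weights. The function names, the attribute names, and the toy weight dictionary are all hypothetical.

```python
import copy
from typing import Dict, List

Mask = List[List[int]]  # binary mask, 1 = pixel is annotated with the attribute


def agnostic_mask(attribute_masks: Dict[str, Mask]) -> Mask:
    """Assumed agnostic target: 1 wherever any attribute is annotated."""
    masks = list(attribute_masks.values())
    if not masks:
        raise ValueError("need at least one attribute mask")
    height, width = len(masks[0]), len(masks[0][0])
    return [
        [int(any(m[r][c] for m in masks)) for c in range(width)]
        for r in range(height)
    ]


def init_attribute_models(segmenter_weights: dict, attributes: List[str]) -> Dict[str, dict]:
    """Assumed transfer step: every attribute model starts from its own
    independent copy of the agnostic segmenter's weights."""
    return {name: copy.deepcopy(segmenter_weights) for name in attributes}


# Hypothetical 2x3 annotations for two attributes of one image.
masks = {
    "pigment_network": [[1, 0, 0], [0, 0, 0]],
    "streaks": [[0, 0, 1], [0, 0, 0]],
}
lesion = agnostic_mask(masks)

# Toy stand-in for trained segmenter parameters.
segmenter_weights = {"encoder": [0.1, 0.2], "decoder": [0.3]}
models = init_attribute_models(segmenter_weights, list(masks))
```

Because every image contributes to the union mask regardless of which attributes it contains, the first stage sees far more positive pixels than any single rare attribute would provide, which is the data advantage the abstract describes.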

Attention with Multiple Sources Knowledges for COVID-19 from CT Images

Sep 24, 2020

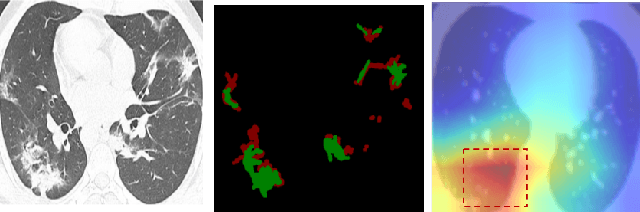

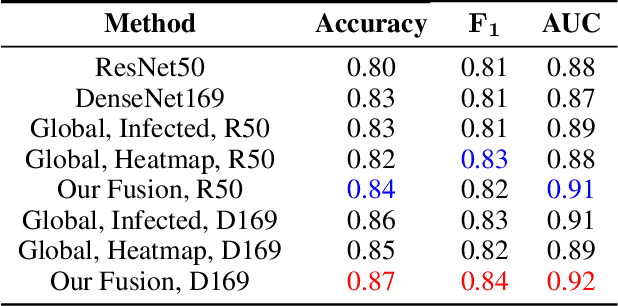

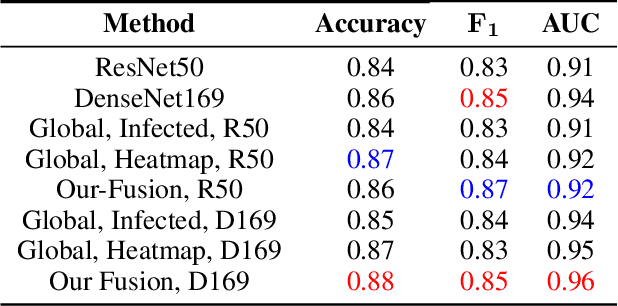

Abstract: To date, the SARS-CoV-2 coronavirus has caused more than 850,000 deaths and infected more than 27 million individuals in over 120 countries. Besides the principal polymerase chain reaction (PCR) tests, automatically identifying positive samples based on computed tomography (CT) scans can present a promising option in the early diagnosis of COVID-19. Recently, there have been increasing efforts to utilize deep networks for COVID-19 diagnosis based on CT scans. While these approaches mostly focus on introducing novel architectures, applying transfer learning techniques, or constructing large-scale datasets, we propose a novel strategy to improve the performance of several baselines by leveraging multiple useful information sources relevant to doctors' judgments. Specifically, infected regions and heat maps extracted from learned networks are integrated with the global image via an attention mechanism during the learning process. This procedure not only makes our system more robust to noise but also guides the network to focus on local lesion areas. Extensive experiments illustrate the superior performance of our approach compared to recent baselines. Furthermore, our learned network guidance presents an explainable feature to doctors, as we can understand the connection between input and output in a grey-box model.
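As a rough illustration of integrating several sources with a global representation via attention, the sketch below uses generic scaled dot-product attention over feature vectors. This is a swapped-in, textbook formulation, not the mechanism from the paper, whose details the abstract does not give. The function names and the toy feature vectors (standing in for the global image, the infected-region map, and the heat map) are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def fuse(
    global_feat: Sequence[float],
    source_feats: Sequence[Sequence[float]],
) -> Tuple[List[float], List[float]]:
    """Weight the global feature and each extra source by its scaled
    dot-product similarity to the global feature, then sum them."""
    candidates = [global_feat, *source_feats]
    scale = math.sqrt(len(global_feat))
    weights = softmax([dot(global_feat, f) / scale for f in candidates])
    fused = [
        sum(w * f[i] for w, f in zip(weights, candidates))
        for i in range(len(global_feat))
    ]
    return fused, weights


# Hypothetical 4-dimensional features for one CT slice.
global_feat = [0.5, 0.1, 0.0, 0.2]
infected_region_feat = [0.9, 0.0, 0.0, 0.1]
heat_map_feat = [0.4, 0.3, 0.0, 0.2]

fused, weights = fuse(global_feat, [infected_region_feat, heat_map_feat])
```

The returned weights show how much each source contributed to the fused feature, which is one simple way to make the contribution of each input inspectable.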
