Hongmei Li

Weakness Analysis of Cyberspace Configuration Based on Reinforcement Learning

Jul 09, 2020

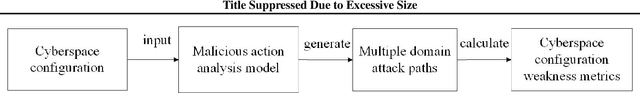

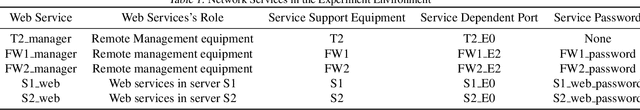

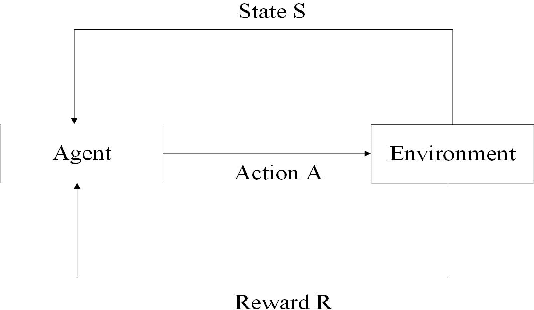

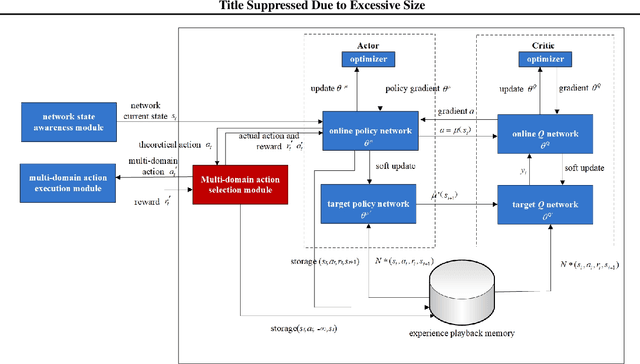

Abstract: In this work, we present a learning-based approach to analyzing cyberspace configuration. Unlike prior methods, our approach learns from past experience and improves over time. In particular, as we train over a greater number of agents acting as attackers, our method becomes better at rapidly finding previously hidden attack paths, especially in multiple-domain cyberspace. To achieve these results, we pose finding attack paths as a Reinforcement Learning (RL) problem and train an agent to find multiple-domain attack paths. To enable our RL policy to find more hidden attack paths, we ground the representation by introducing a multiple-domain action selection module into RL. We design a simulated cyberspace experimental environment to verify our method. Our objective is to find more hidden attack paths in order to analyze the weaknesses of the cyberspace configuration. The experimental results show that our method finds more hidden multiple-domain attack paths than existing baseline methods.
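To make the idea of posing attack-path search as an RL problem concrete, here is a minimal sketch using plain tabular Q-learning on a toy graph. It is not the paper's method: the network topology, the node names, the reward values, and the functions `train_q_table` and `greedy_path` are all assumptions made for illustration, and the multiple-domain action selection module described in the abstract is not modelled.

```python
import random

# Hypothetical toy network (not from the paper): node -> directly reachable nodes.
GRAPH = {
    "internet": ["web_server", "mail_server"],
    "web_server": ["app_server"],
    "mail_server": ["workstation"],
    "workstation": ["app_server"],
    "app_server": ["database"],
    "database": [],
}
START, TARGET = "internet", "database"


def train_q_table(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: the attacker agent learns a value for each hop."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s, nbrs in GRAPH.items() for a in nbrs}
    for _ in range(episodes):
        state = START
        while state != TARGET:
            actions = GRAPH[state]
            # Epsilon-greedy choice between exploring and exploiting.
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                action = max(actions, key=lambda a: q[(state, a)])
            # Assumed reward: +10 for reaching the target, -1 per other hop.
            reward = 10.0 if action == TARGET else -1.0
            future = max((q[(action, a)] for a in GRAPH[action]), default=0.0)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = action
    return q


def greedy_path(q):
    """Follow the highest-valued hop from START until TARGET is reached."""
    path, state = [START], START
    while state != TARGET:
        state = max(GRAPH[state], key=lambda a: q[(state, a)])
        path.append(state)
    return path


path = greedy_path(train_q_table())
```

Because every hop other than the final one costs a reward of -1, the learned greedy policy favours the shorter of the two routes to the target in this toy graph.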

Multi-Modal Coreference Resolution with the Correlation between Space Structures

Sep 01, 2018

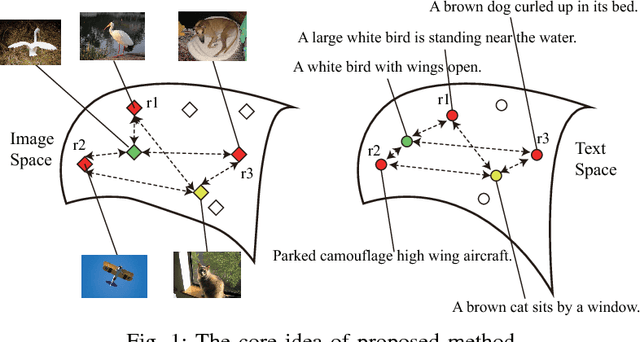

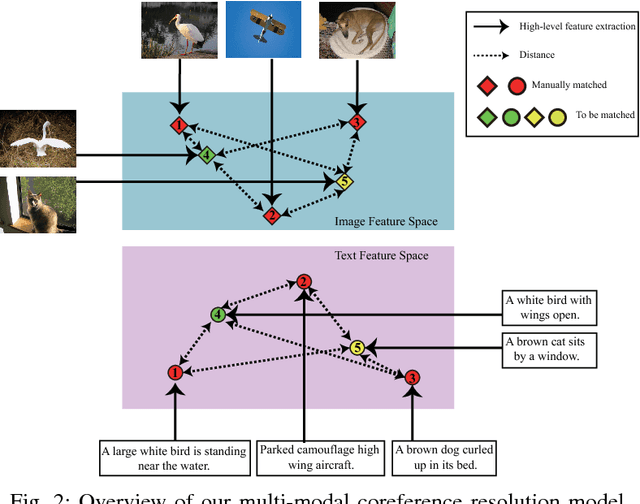

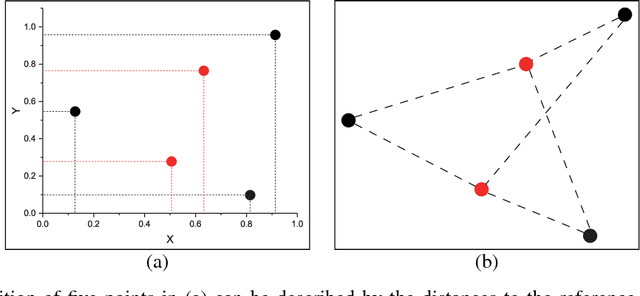

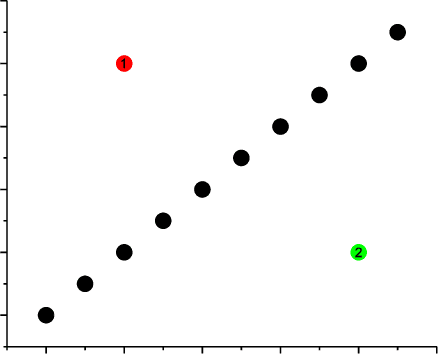

Abstract: Multi-modal data is becoming more common in the era of big data. Finding semantically similar objects across different modalities is one of the core problems of multi-modal learning. Most current methods try to learn the inter-modal correlation from extrinsic supervised information, while the intrinsic structural information of each modality is neglected. The performance of these methods depends heavily on the richness of the training samples. However, obtaining multi-modal training samples is still labor- and cost-intensive. In this paper, we bring an extrinsic correlation between the space structures of each modality into coreference resolution. With this correlation, we propose a semi-supervised learning model for multi-modal coreference resolution. We first extract high-level features of images and text, then compute the distances of each object from a set of reference points to build the space structure of each modality. With a shared reference point set, the space structures of the modalities are correlated. We employ this correlation to build a commonly shared space in which the semantic distance between multi-modal objects can be computed directly. Experiments on two multi-modal datasets show that our model performs better than existing methods when training data is insufficient.
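The reference-point construction described in the abstract can be illustrated with a small sketch. It is an illustration under stated assumptions rather than the authors' model: the feature values, the reference sets `IMAGE_REFS` and `TEXT_REFS`, the sum-to-one normalisation, and the function names are all hypothetical. Each object is re-described by its distances to the reference points of its own modality, and because the reference points are paired across modalities, the resulting vectors can be compared directly.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def structure_vector(feature, references):
    """Distances from one object to each reference point in its own modality.

    Normalising to sum to one is an assumption made here so that modalities
    with different feature scales become comparable.
    """
    dists = [euclidean(feature, ref) for ref in references]
    total = sum(dists)
    return [d / total for d in dists] if total > 0 else dists


# Hypothetical paired reference points: entry i in each list describes the
# same underlying object, once as an image feature and once as a text feature.
IMAGE_REFS = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
TEXT_REFS = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0)]


def closest_text(image_feature, text_features):
    """Return the name of the text whose structure vector is nearest the image's."""
    image_vec = structure_vector(image_feature, IMAGE_REFS)
    return min(
        text_features,
        key=lambda name: euclidean(
            image_vec, structure_vector(text_features[name], TEXT_REFS)
        ),
    )


TEXTS = {"text_a": (1.8, 0.2, 0.0), "text_b": (0.2, 1.8, 0.0)}
best = closest_text((0.9, 0.1), TEXTS)
```

The image and text features live in spaces of different dimension and scale, yet the query image and `text_a` sit in the same position relative to the paired reference points, so their structure vectors nearly coincide.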
