Hong-Bo Xie

A variational autoencoder-based nonnegative matrix factorisation model for deep dictionary learning

Jan 18, 2023

Abstract: Construction of dictionaries using nonnegative matrix factorisation (NMF) has extensive applications in signal processing and machine learning. With the advances in deep learning, training compact and robust dictionaries using deep neural networks, i.e., dictionaries of deep features, has been proposed. In this study, we propose a probabilistic generative model which employs a variational autoencoder (VAE) to perform nonnegative dictionary learning. In contrast to existing VAE models, we cast the model under a statistical framework with latent variables obeying a Gamma distribution and design a new loss function to guarantee that the learned dictionaries are nonnegative. We adopt an acceptance-rejection sampling reparameterization trick to update the latent variables iteratively. We apply the dictionaries learned from VAE-NMF to two signal processing tasks, i.e., enhancement of speech and extraction of muscle synergies. Experimental results demonstrate that VAE-NMF performs better in learning the latent nonnegative dictionaries in comparison with state-of-the-art methods.
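To make the acceptance-rejection idea concrete, the sketch below draws Gamma-distributed samples with the Marsaglia-Tsang sampler, a widely used acceptance-rejection scheme for the Gamma distribution. The abstract does not say which sampler the paper uses, so this choice is an assumption, as are the function name `sample_gamma` and its parameters. The sketch shows sampling only; it does not implement the VAE, the loss function, or the gradient computation.

```python
import math
import random


def sample_gamma(shape, rng):
    """Draw one Gamma(shape, scale=1) sample by acceptance-rejection.

    Illustrative only: Marsaglia-Tsang is an assumed choice of sampler,
    not necessarily the one used in the paper.
    """
    if shape <= 0.0:
        raise ValueError("shape must be positive")
    if shape < 1.0:
        # Boost the shape above 1, then scale back with a uniform draw.
        u = 1.0 - rng.random()  # uniform on (0, 1]
        return sample_gamma(shape + 1.0, rng) * u ** (1.0 / shape)

    d = shape - 1.0 / 3.0
    c = 1.0 / math.sqrt(9.0 * d)
    while True:
        eps = rng.gauss(0.0, 1.0)      # standard normal proposal noise
        v = (1.0 + c * eps) ** 3       # deterministic transform of eps
        if v <= 0.0:
            continue                   # outside the support, reject
        u = 1.0 - rng.random()         # uniform on (0, 1]
        # Accept with the Marsaglia-Tsang log-acceptance test.
        if math.log(u) < 0.5 * eps * eps + d - d * v + d * math.log(v):
            return d * v               # always positive, as required


rng = random.Random(0)
draws = [sample_gamma(2.5, rng) for _ in range(20000)]
sample_mean = sum(draws) / len(draws)  # should be close to the shape, 2.5
```

Because an accepted sample is a deterministic function of the noise `eps` and the shape parameter, this kind of sampler is a natural starting point for reparameterized gradient estimates, and every draw is strictly positive, which suits nonnegative latent variables.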

clusterBMA: Bayesian model averaging for clustering

Sep 09, 2022

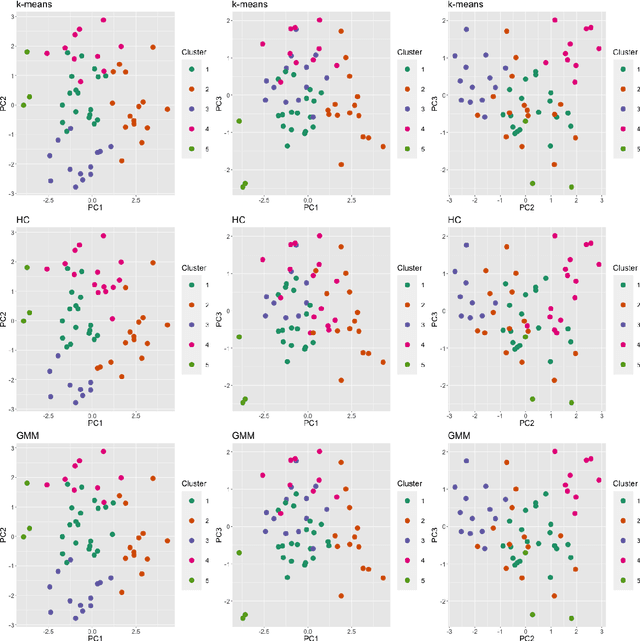

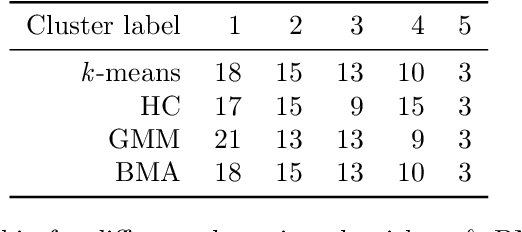

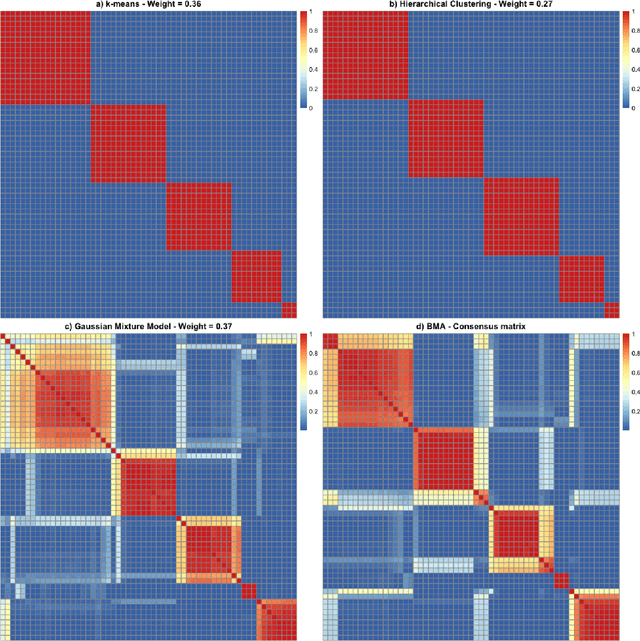

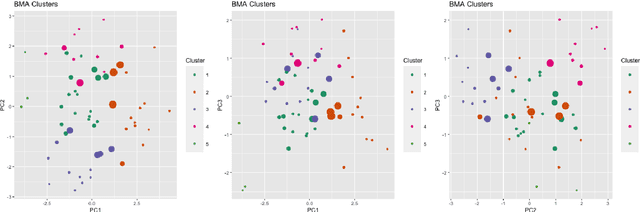

Abstract: Various methods have been developed to combine inference across multiple sets of results for unsupervised clustering, within the ensemble and consensus clustering literature. The approach of reporting results from one 'best' model out of several candidate clustering models generally ignores the uncertainty that arises from model selection, and results in inferences that are sensitive to the particular model and parameters chosen, and assumptions made, especially with small sample size or small cluster sizes. Bayesian model averaging (BMA) is a popular approach for combining results across multiple models that offers some attractive benefits in this setting, including probabilistic interpretation of the combined cluster structure and quantification of model-based uncertainty. In this work we introduce clusterBMA, a method that enables weighted model averaging across results from multiple unsupervised clustering algorithms. We use a combination of clustering internal validation criteria as a novel approximation of the posterior model probability for weighting the results from each model. From a combined posterior similarity matrix representing a weighted average of the clustering solutions across models, we apply symmetric simplex matrix factorisation to calculate final probabilistic cluster allocations. This method is implemented in an accompanying R package. We explore the performance of this approach through a case study that aims to identify probabilistic clusters of individuals based on electroencephalography (EEG) data. We also use simulated datasets to explore the ability of the proposed technique to identify robust integrated clusters with varying levels of separation between subgroups, and with varying numbers of clusters between models.
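The weighted-averaging step can be illustrated with a small sketch. It builds a pairwise similarity matrix for each clustering solution and combines them using model weights. The function names, the use of hard cluster labels, and the weight values are all hypothetical and chosen for illustration; this is not the clusterBMA R package API, and the weights here are not derived from internal validation criteria as in the paper.

```python
def similarity_matrix(labels):
    """Pairwise similarity: 1.0 if two points share a cluster, else 0.0."""
    n = len(labels)
    return [[1.0 if labels[i] == labels[j] else 0.0 for j in range(n)]
            for i in range(n)]


def weighted_consensus(labelings, weights):
    """Weighted average of per-model similarity matrices.

    `weights` stands in for approximate posterior model probabilities;
    they are normalised here so that they sum to one.
    """
    if len(labelings) != len(weights):
        raise ValueError("need one weight per clustering solution")
    total = sum(weights)
    n = len(labelings[0])
    consensus = [[0.0] * n for _ in range(n)]
    for labels, w in zip(labelings, weights):
        sim = similarity_matrix(labels)
        for i in range(n):
            for j in range(n):
                consensus[i][j] += (w / total) * sim[i][j]
    return consensus


# Two hypothetical clustering solutions for four points.
labelings = [[0, 0, 1, 1], [0, 0, 0, 1]]
weights = [0.75, 0.25]  # made-up model weights
consensus = weighted_consensus(labelings, weights)
```

Each entry of `consensus` can be read as the weighted share of models that place the two points in the same cluster. The paper then applies symmetric simplex matrix factorisation to such a matrix to obtain probabilistic allocations; that step is not shown here.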
