Holger Fröning

Uncertainty-Preserving QBNNs: Multi-Level Quantization of SVI-Based Bayesian Neural Networks for Image Classification

Dec 11, 2025Abstract:Bayesian Neural Networks (BNNs) provide principled uncertainty quantification but suffer from substantial computational and memory overhead compared to deterministic networks. While quantization techniques have successfully reduced resource requirements in standard deep learning models, their application to probabilistic models remains largely unexplored. We introduce a systematic multi-level quantization framework for Stochastic Variational Inference based BNNs that distinguishes between three quantization strategies: Variational Parameter Quantization (VPQ), Sampled Parameter Quantization (SPQ), and Joint Quantization (JQ). Our logarithmic quantization for variance parameters, and specialized activation functions to preserve the distributional structure are essential for calibrated uncertainty estimation. Through comprehensive experiments on Dirty-MNIST, we demonstrate that BNNs can be quantized down to 4-bit precision while maintaining both classification accuracy and uncertainty disentanglement. At 4 bits, Joint Quantization achieves up to 8x memory reduction compared to floating-point implementations with minimal degradation in epistemic and aleatoric uncertainty estimation. These results enable deployment of BNNs on resource-constrained edge devices and provide design guidelines for future analog "Bayesian Machines" operating at inherently low precision.

Variance-Aware Noisy Training: Hardening DNNs against Unstable Analog Computations

Mar 20, 2025

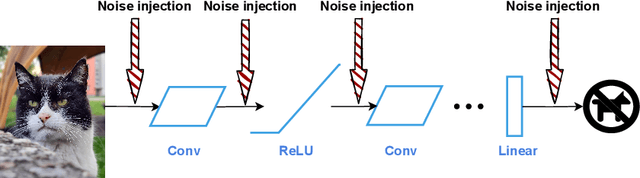

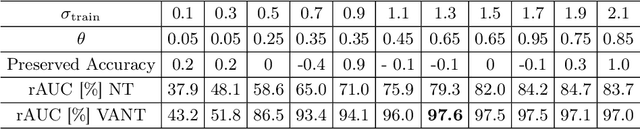

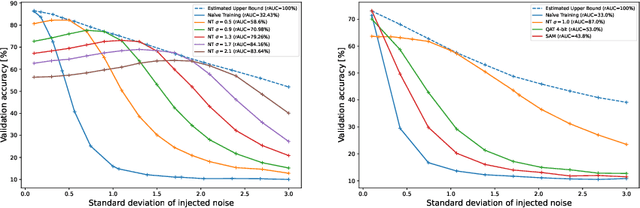

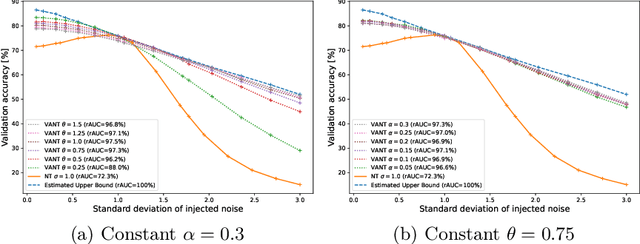

Abstract:The disparity between the computational demands of deep learning and the capabilities of compute hardware is expanding drastically. Although deep learning achieves remarkable performance in countless tasks, its escalating requirements for computational power and energy consumption surpass the sustainable limits of even specialized neural processing units, including the Apple Neural Engine and NVIDIA TensorCores. This challenge is intensified by the slowdown in CMOS scaling. Analog computing presents a promising alternative, offering substantial improvements in energy efficiency by directly manipulating physical quantities such as current, voltage, charge, or photons. However, it is inherently vulnerable to manufacturing variations, nonlinearities, and noise, leading to degraded prediction accuracy. One of the most effective techniques for enhancing robustness, Noisy Training, introduces noise during the training phase to reinforce the model against disturbances encountered during inference. Although highly effective, its performance degrades in real-world environments where noise characteristics fluctuate due to external factors such as temperature variations and temporal drift. This study underscores the necessity of Noisy Training while revealing its fundamental limitations in the presence of dynamic noise. To address these challenges, we propose Variance-Aware Noisy Training, a novel approach that mitigates performance degradation by incorporating noise schedules which emulate the evolving noise conditions encountered during inference. Our method substantially improves model robustness, without training overhead. We demonstrate a significant increase in robustness, from 72.3\% with conventional Noisy Training to 97.3\% with Variance-Aware Noisy Training on CIFAR-10 and from 38.5\% to 89.9\% on Tiny ImageNet.

On Hardening DNNs against Noisy Computations

Jan 24, 2025

Abstract:The success of deep learning has sparked significant interest in designing computer hardware optimized for the high computational demands of neural network inference. As further miniaturization of digital CMOS processors becomes increasingly challenging, alternative computing paradigms, such as analog computing, are gaining consideration. Particularly for compute-intensive tasks such as matrix multiplication, analog computing presents a promising alternative due to its potential for significantly higher energy efficiency compared to conventional digital technology. However, analog computations are inherently noisy, which makes it challenging to maintain high accuracy on deep neural networks. This work investigates the effectiveness of training neural networks with quantization to increase the robustness against noise. Experimental results across various network architectures show that quantization-aware training with constant scaling factors enhances robustness. We compare these methods with noisy training, which incorporates a noise injection during training that mimics the noise encountered during inference. While both two methods increase tolerance against noise, noisy training emerges as the superior approach for achieving robust neural network performance, especially in complex neural architectures.

Function Space Diversity for Uncertainty Prediction via Repulsive Last-Layer Ensembles

Dec 20, 2024Abstract:Bayesian inference in function space has gained attention due to its robustness against overparameterization in neural networks. However, approximating the infinite-dimensional function space introduces several challenges. In this work, we discuss function space inference via particle optimization and present practical modifications that improve uncertainty estimation and, most importantly, make it applicable for large and pretrained networks. First, we demonstrate that the input samples, where particle predictions are enforced to be diverse, are detrimental to the model performance. While diversity on training data itself can lead to underfitting, the use of label-destroying data augmentation, or unlabeled out-of-distribution data can improve prediction diversity and uncertainty estimates. Furthermore, we take advantage of the function space formulation, which imposes no restrictions on network parameterization other than sufficient flexibility. Instead of using full deep ensembles to represent particles, we propose a single multi-headed network that introduces a minimal increase in parameters and computation. This allows seamless integration to pretrained networks, where this repulsive last-layer ensemble can be used for uncertainty aware fine-tuning at minimal additional cost. We achieve competitive results in disentangling aleatoric and epistemic uncertainty for active learning, detecting out-of-domain data, and providing calibrated uncertainty estimates under distribution shifts with minimal compute and memory.

Less Memory Means smaller GPUs: Backpropagation with Compressed Activations

Sep 18, 2024Abstract:The ever-growing scale of deep neural networks (DNNs) has lead to an equally rapid growth in computational resource requirements. Many recent architectures, most prominently Large Language Models, have to be trained using supercomputers with thousands of accelerators, such as GPUs or TPUs. Next to the vast number of floating point operations the memory footprint of DNNs is also exploding. In contrast, GPU architectures are notoriously short on memory. Even comparatively small architectures like some EfficientNet variants cannot be trained on a single consumer-grade GPU at reasonable mini-batch sizes. During training, intermediate input activations have to be stored until backpropagation for gradient calculation. These make up the vast majority of the memory footprint. In this work we therefore consider compressing activation maps for the backward pass using pooling, which can reduce both the memory footprint and amount of data movement. The forward computation remains uncompressed. We empirically show convergence and study effects on feature detection at the example of the common vision architecture ResNet. With this approach we are able to reduce the peak memory consumption by 29% at the cost of a longer training schedule, while maintaining prediction accuracy compared to an uncompressed baseline.

DeepHYDRA: Resource-Efficient Time-Series Anomaly Detection in Dynamically-Configured Systems

May 13, 2024

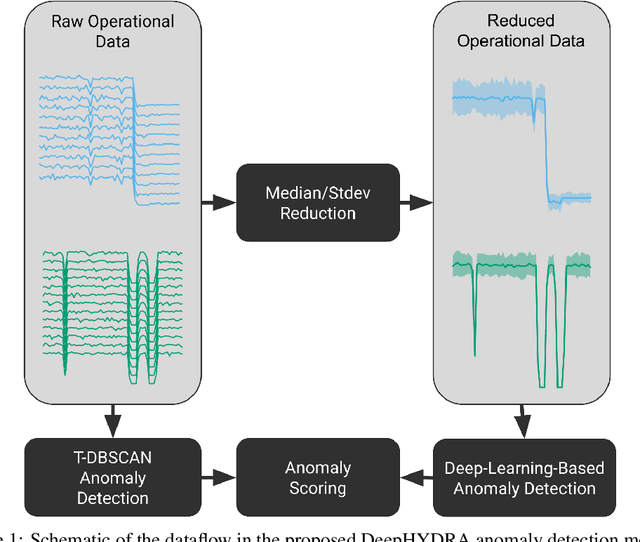

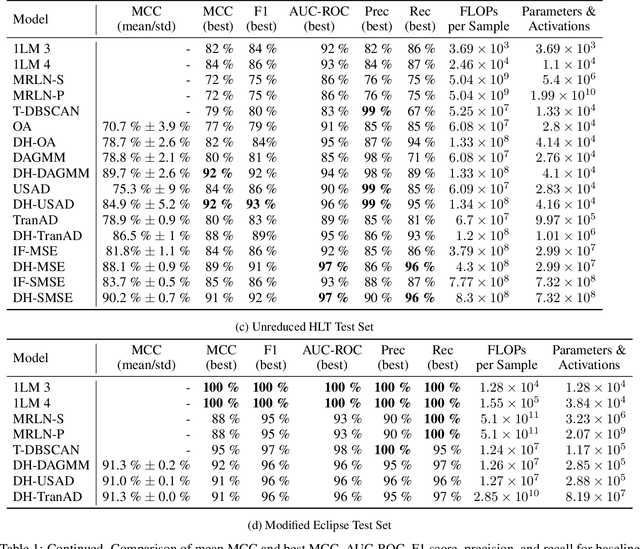

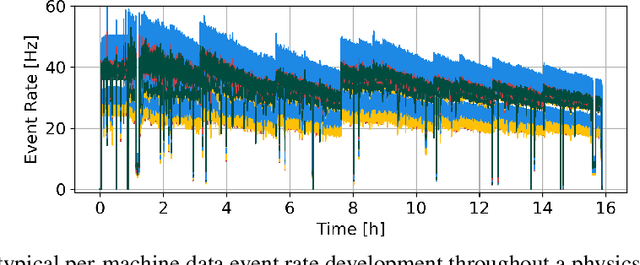

Abstract:Anomaly detection in distributed systems such as High-Performance Computing (HPC) clusters is vital for early fault detection, performance optimisation, security monitoring, reliability in general but also operational insights. Deep Neural Networks have seen successful use in detecting long-term anomalies in multidimensional data, originating for instance from industrial or medical systems, or weather prediction. A downside of such methods is that they require a static input size, or lose data through cropping, sampling, or other dimensionality reduction methods, making deployment on systems with variability on monitored data channels, such as computing clusters difficult. To address these problems, we present DeepHYDRA (Deep Hybrid DBSCAN/Reduction-Based Anomaly Detection) which combines DBSCAN and learning-based anomaly detection. DBSCAN clustering is used to find point anomalies in time-series data, mitigating the risk of missing outliers through loss of information when reducing input data to a fixed number of channels. A deep learning-based time-series anomaly detection method is then applied to the reduced data in order to identify long-term outliers. This hybrid approach reduces the chances of missing anomalies that might be made indistinguishable from normal data by the reduction process, and likewise enables the algorithm to be scalable and tolerate partial system failures while retaining its detection capabilities. Using a subset of the well-known SMD dataset family, a modified variant of the Eclipse dataset, as well as an in-house dataset with a large variability in active data channels, made publicly available with this work, we furthermore analyse computational intensity, memory footprint, and activation counts. DeepHYDRA is shown to reliably detect different types of anomalies in both large and complex datasets.

Implications of Noise in Resistive Memory on Deep Neural Networks for Image Classification

Jan 11, 2024Abstract:Resistive memory is a promising alternative to SRAM, but is also an inherently unstable device that requires substantial effort to ensure correct read and write operations. To avoid the associated costs in terms of area, time and energy, the present work is concerned with exploring how much noise in memory operations can be tolerated by image classification tasks based on neural networks. We introduce a special noisy operator that mimics the noise in an exemplary resistive memory unit, explore the resilience of convolutional neural networks on the CIFAR-10 classification task, and discuss a couple of countermeasures to improve this resilience.

Compressing the Backward Pass of Large-Scale Neural Architectures by Structured Activation Pruning

Nov 29, 2023

Abstract:The rise of Deep Neural Networks (DNNs) has led to an increase in model size and complexity, straining the memory capacity of GPUs. Sparsity in DNNs, characterized as structural or ephemeral, has gained attention as a solution. This work focuses on ephemeral sparsity, aiming to reduce memory consumption during training. It emphasizes the significance of activations, an often overlooked component, and their role in memory usage. This work employs structured pruning in Block Sparse Compressed Row (BSR) format in combination with a magnitude-based criterion to efficiently prune activations. We furthermore introduce efficient block-sparse operators for GPUs and showcase their effectiveness, as well as the superior compression offered by block sparsity. We report the effectiveness of activation pruning by evaluating training speed, accuracy, and memory usage of large-scale neural architectures on the example of ResMLP on image classification tasks. As a result, we observe a memory reduction of up to 32% while maintaining accuracy. Ultimately, our approach aims to democratize large-scale model training, reduce GPU requirements, and address ecological concerns.

On the Non-Associativity of Analog Computations

Sep 25, 2023Abstract:The energy efficiency of analog forms of computing makes it one of the most promising candidates to deploy resource-hungry machine learning tasks on resource-constrained system such as mobile or embedded devices. However, it is well known that for analog computations the safety net of discretization is missing, thus all analog computations are exposed to a variety of imperfections of corresponding implementations. Examples include non-linearities, saturation effect and various forms of noise. In this work, we observe that the ordering of input operands of an analog operation also has an impact on the output result, which essentially makes analog computations non-associative, even though the underlying operation might be mathematically associative. We conduct a simple test by creating a model of a real analog processor which captures such ordering effects. With this model we assess the importance of ordering by comparing the test accuracy of a neural network for keyword spotting, which is trained based either on an ordered model, on a non-ordered variant, and on real hardware. The results prove the existence of ordering effects as well as their high impact, as neglecting ordering results in substantial accuracy drops.

Reducing Memory Requirements for the IPU using Butterfly Factorizations

Sep 16, 2023

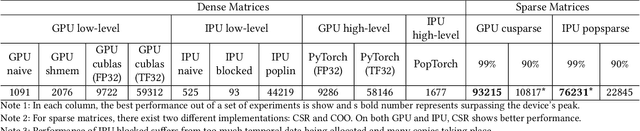

Abstract:High Performance Computing (HPC) benefits from different improvements during last decades, specially in terms of hardware platforms to provide more processing power while maintaining the power consumption at a reasonable level. The Intelligence Processing Unit (IPU) is a new type of massively parallel processor, designed to speedup parallel computations with huge number of processing cores and on-chip memory components connected with high-speed fabrics. IPUs mainly target machine learning applications, however, due to the architectural differences between GPUs and IPUs, especially significantly less memory capacity on an IPU, methods for reducing model size by sparsification have to be considered. Butterfly factorizations are well-known replacements for fully-connected and convolutional layers. In this paper, we examine how butterfly structures can be implemented on an IPU and study their behavior and performance compared to a GPU. Experimental results indicate that these methods can provide 98.5% compression ratio to decrease the immense need for memory, the IPU implementation can benefit from 1.3x and 1.6x performance improvement for butterfly and pixelated butterfly, respectively. We also reach to 1.62x training time speedup on a real-word dataset such as CIFAR10.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge