Hogyun Kim

Uni-Mapper: Unified Mapping Framework for Multi-modal LiDARs in Complex and Dynamic Environments

Jul 28, 2025

Abstract:The unification of disparate maps is crucial for enabling scalable robot operation across multiple sessions and collaborative multi-robot scenarios. However, achieving a unified map robust to sensor modalities and dynamic environments remains a challenging problem. Variations in LiDAR types and dynamic elements lead to differences in point cloud distribution and scene consistency, hindering reliable descriptor generation and loop closure detection essential for accurate map alignment. To address these challenges, this paper presents Uni-Mapper, a dynamic-aware 3D point cloud map merging framework for multi-modal LiDAR systems. It comprises dynamic object removal, dynamic-aware loop closure, and multi-modal LiDAR map merging modules. A voxel-wise free space hash map is built in a coarse-to-fine manner to identify and reject dynamic objects via temporal occupancy inconsistencies. The removal module is integrated with a LiDAR global descriptor, which encodes preserved static local features to ensure robust place recognition in dynamic environments. In the final stage, centralized anchor-node-based pose graph optimization is performed to address intra- and inter-map loop closures, mitigating intra-session drift errors and yielding globally consistent map merging. Our framework is evaluated on diverse real-world datasets with dynamic objects and heterogeneous LiDARs, showing superior performance in loop detection across sensor modalities, robust mapping in dynamic environments, and accurate multi-map alignment over existing methods. Project Page: https://sparolab.github.io/research/uni_mapper.
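The idea of rejecting dynamic points through temporal occupancy inconsistencies can be illustrated with a minimal sketch. This is not the Uni-Mapper implementation: it uses a single voxel resolution rather than a coarse-to-fine scheme, approximates ray traversal by dense sampling, and all function names (`voxel_key`, `free_voxels_along_ray`, `split_static_dynamic`) are hypothetical. A point is flagged as dynamic when its voxel was observed as free space by a ray from any scan.

```python
import math

def voxel_key(point, voxel_size):
    """Map a 3D point to the integer index of the voxel containing it."""
    return tuple(math.floor(c / voxel_size) for c in point)

def free_voxels_along_ray(origin, hit, voxel_size):
    """Voxels crossed by the ray origin -> hit, excluding the hit voxel.

    Assumption: half-voxel sampling stands in for exact ray traversal.
    """
    length = math.dist(origin, hit)
    steps = max(1, int(length / (0.5 * voxel_size)))
    end_key = voxel_key(hit, voxel_size)
    free = set()
    for i in range(steps):
        t = i / steps
        sample = tuple(o + t * (h - o) for o, h in zip(origin, hit))
        key = voxel_key(sample, voxel_size)
        if key != end_key:
            free.add(key)
    return free

def split_static_dynamic(scans, voxel_size):
    """scans is a list of (sensor_origin, points) pairs in a common frame."""
    # Hash set of every voxel that some ray passed through.
    free = set()
    for origin, points in scans:
        for p in points:
            free |= free_voxels_along_ray(origin, p, voxel_size)
    # A point lying in a voxel seen as free elsewhere is inconsistent.
    static, dynamic = [], []
    for _, points in scans:
        for p in points:
            (dynamic if voxel_key(p, voxel_size) in free else static).append(p)
    return static, dynamic

# Scan 0 sees an object at x = 5.2; in scan 1 it has moved away, so the
# ray to the wall at x = 10.2 passes through the object's former voxel.
scans = [
    ((0.0, 0.0, 0.0), [(5.2, 0.1, 0.1), (10.2, 3.1, 0.1)]),
    ((0.0, 0.0, 0.0), [(10.2, 0.1, 0.1), (10.2, 3.1, 0.1)]),
]
static, dynamic = split_static_dynamic(scans, voxel_size=1.0)
```

A Python `set` of integer voxel indices plays the role of the hash map here; a real system would also need to handle sensor noise and occlusion boundaries, which this sketch ignores.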

MARSCalib: Multi-robot, Automatic, Robust, Spherical Target-based Extrinsic Calibration in Field and Extraterrestrial Environments

Jul 23, 2025

Abstract:This paper presents a novel spherical target-based LiDAR-camera extrinsic calibration method designed for outdoor environments with multi-robot systems, considering both target and sensor corruption. The method extracts the 2D ellipse center from the image and the 3D sphere center from the pointcloud, which are then paired to compute the transformation matrix. Specifically, the image is first decomposed using the Segment Anything Model (SAM). Then, a novel algorithm extracts an ellipse from a potentially corrupted sphere, and the extracted ellipse center is corrected for errors caused by the perspective projection model. For the LiDAR pointcloud, points on the sphere tend to be highly noisy due to the absence of flat regions. To accurately extract the sphere from these noisy measurements, we apply a hierarchical weighted sum to the accumulated pointcloud. Through experiments, we demonstrate that the sphere can be robustly detected even under both types of corruption, outperforming other targets. We evaluated our method using three different types of LiDARs (spinning, solid-state, and non-repetitive) with cameras positioned in three different locations. Furthermore, we validated the robustness of our method to target corruption by experimenting with spheres subjected to various types of degradation. These experiments were conducted in both a planetary test and a field environment. Our code is available at https://github.com/sparolab/MARSCalib.
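To make the 3D sphere center extraction step concrete, the sketch below fits a sphere to LiDAR points with a standard algebraic least-squares fit. This is a swapped-in textbook technique, not the hierarchical weighted sum described in the abstract, and the names `solve_linear` and `fit_sphere` are hypothetical. It relies on the identity x² + y² + z² = 2ax + 2by + 2cz + d, with d = r² − a² − b² − c², which is linear in the unknown center (a, b, c) and d.

```python
import math

def solve_linear(matrix, rhs):
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x

def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius)."""
    rows = [[2 * x, 2 * y, 2 * z, 1.0] for x, y, z in points]
    targets = [x * x + y * y + z * z for x, y, z in points]
    # Normal equations (A^T A) w = A^T b for the unknowns w = (a, b, c, d).
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(4)]
    a, b, c, d = solve_linear(ata, atb)
    radius = math.sqrt(d + a * a + b * b + c * c)
    return (a, b, c), radius

# Six noise-free points on a sphere centered at (1, 2, 3) with radius 0.5.
sample_points = [
    (1.5, 2.0, 3.0), (0.5, 2.0, 3.0),
    (1.0, 2.5, 3.0), (1.0, 1.5, 3.0),
    (1.0, 2.0, 3.5), (1.0, 2.0, 2.5),
]
center, radius = fit_sphere(sample_points)
```

An unweighted fit like this treats every point equally, so it is sensitive to the noisy returns the abstract mentions, and a LiDAR only observes the sensor-facing side of the target. Both are reasons a weighted or robust scheme may be preferable in practice.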

SKiD-SLAM: Robust, Lightweight, and Distributed Multi-Robot LiDAR SLAM in Resource-Constrained Field Environments

May 13, 2025

Abstract:Distributed LiDAR SLAM is crucial for achieving efficient robot autonomy and improving the scalability of mapping. However, two issues need to be considered when applying it in field environments: one is resource limitation, and the other is inter/intra-robot association. The resource limitation issue arises when the data size exceeds the processing capacity of the network or memory, especially when utilizing communication systems or onboard computers in the field. The inter/intra-robot association issue occurs due to the narrow convergence region of ICP under large viewpoint differences, triggering many false positive loops and ultimately resulting in an inconsistent global map for multi-robot systems. To tackle these problems, we propose a distributed LiDAR SLAM framework designed for versatile field applications, called SKiD-SLAM. Extending our previous work that solely focused on lightweight place recognition and fast and robust global registration, we present a multi-robot mapping framework that focuses on robust and lightweight inter-robot loop closure in distributed LiDAR SLAM. Through various environmental experiments, we demonstrate that our method is more robust and lightweight compared to other state-of-the-art distributed SLAM approaches, overcoming resource limitation and inter/intra-robot association issues. Also, we validated the field applicability of our approach through mapping experiments in real-world planetary emulation terrain and cave environments, which are in-house datasets. Our code will be available at https://sparolab.github.io/research/skid_slam/.

PoLaRIS Dataset: A Maritime Object Detection and Tracking Dataset in Pohang Canal

Dec 09, 2024

Abstract:Maritime environments often present hazardous situations due to factors such as moving ships or buoys, which become obstacles under the influence of waves. In such challenging conditions, the ability to detect and track potentially hazardous objects is critical for the safe navigation of marine robots. To address the scarcity of comprehensive datasets capturing these dynamic scenarios, we introduce a new multi-modal dataset that includes image and point-wise annotations of maritime hazards. Our dataset provides detailed ground truth for obstacle detection and tracking, including objects as small as 10×10 pixels, which are crucial for maritime safety. To validate the dataset's effectiveness as a reliable benchmark, we conducted evaluations using various methodologies, including state-of-the-art (SOTA) techniques for object detection and tracking. These evaluations are expected to contribute to performance improvements, particularly in the complex maritime environment. To the best of our knowledge, this is the first dataset offering multi-modal annotations specifically tailored to maritime environments. Our dataset is available at https://sites.google.com/view/polaris-dataset.

DiTer++: Diverse Terrain and Multi-modal Dataset for Multi-Robot SLAM in Multi-session Environments

Dec 08, 2024

Abstract:We encounter large-scale environments where both structured and unstructured spaces coexist, such as on campuses. In such environments, lighting conditions and dynamic objects change constantly. To tackle the challenges of large-scale mapping under such conditions, we introduce DiTer++, a diverse terrain and multi-modal dataset designed for multi-robot SLAM in multi-session environments. In our dataset's scenarios, Agent-A and Agent-B scan the area designated for efficient large-scale mapping during the day and at night, respectively. Also, we utilize legged robots for terrain-agnostic traversing. To generate the ground truth of each robot, we first build the survey-grade prior map. Then, we remove the dynamic objects and outliers from the prior map and extract the trajectory through scan-to-map matching. Our dataset and supplementary materials are available at https://sites.google.com/view/diter-plusplus/.

ReFeree: Radar-Based Lightweight and Robust Localization using Feature and Free space

Oct 02, 2024

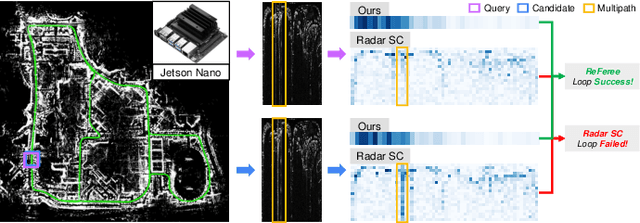

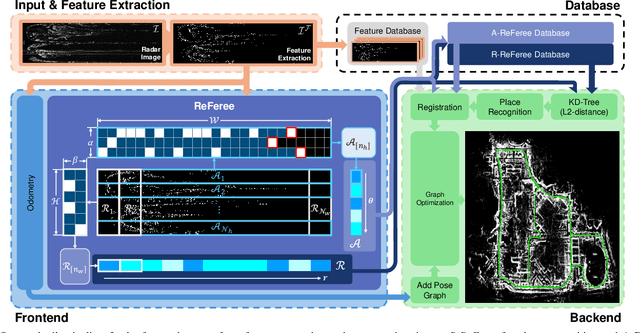

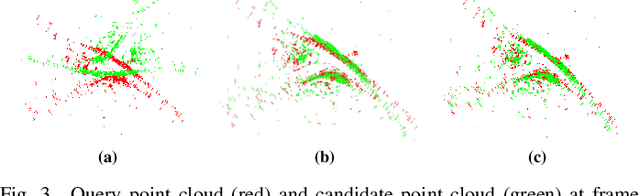

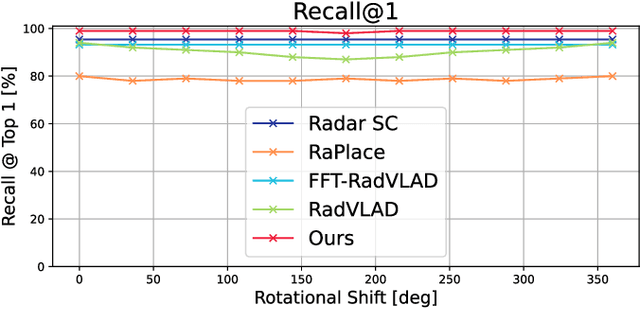

Abstract:Place recognition plays an important role in achieving robust long-term autonomy. Real-world robots face a wide range of weather conditions (e.g., overcast, heavy rain, and snow), and most sensors (e.g., cameras and LiDAR), which operate at visible or near-visible wavelengths, are sensitive to adverse weather, making reliable localization difficult. In contrast, radar is gaining traction because its longer wavelengths are less affected by environmental changes and weather. In this work, we propose a lightweight and robust radar-based place recognition method. We achieve rotational invariance and a lightweight representation by selecting a one-dimensional ring-shaped description, and robustness by mitigating the impact of false detections using the opposite noise characteristics of free space and features. In addition, the initial heading can be estimated, which can assist in building a SLAM pipeline that combines odometry and registration while taking onboard computing into account. For rigorous validation, the proposed method was tested across various scenarios (i.e., single session, multi-session, and different weather conditions). In particular, we validate that our descriptor achieves reliable place recognition performance in extreme environments that lack structural information, such as the OORD dataset.
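As a rough illustration of why a one-dimensional ring-shaped description is rotation invariant, the sketch below summarizes a polar radar image by the fraction of free space in each range ring. It is not the ReFeree descriptor itself: the free-space rule (cells before the first above-threshold return on each beam), the threshold, and the names `ring_descriptor` and `descriptor_distance` are assumptions made for this example.

```python
def ring_descriptor(polar_image, threshold):
    """Free-space fraction per range ring.

    polar_image[a][r] is the return intensity at azimuth a and range bin r.
    Assumption: cells before the first detection on a beam count as free,
    and a beam with no detection is free along its whole length.
    """
    num_azimuth = len(polar_image)
    num_range = len(polar_image[0])
    free_counts = [0] * num_range
    for beam in polar_image:
        for r, intensity in enumerate(beam):
            if intensity >= threshold:
                break  # the first detection ends the free space on this beam
            free_counts[r] += 1
    return [count / num_azimuth for count in free_counts]

def descriptor_distance(d1, d2):
    """L1 distance between two ring descriptors."""
    return sum(abs(a - b) for a, b in zip(d1, d2))

# Four azimuth beams, three range bins.
image = [
    [0, 0, 9],
    [0, 9, 0],
    [9, 0, 0],
    [0, 0, 0],
]
# Rotating the sensor cyclically shifts the azimuth rows.
rotated = image[1:] + image[:1]

desc = ring_descriptor(image, threshold=5)            # [0.75, 0.5, 0.25]
desc_rotated = ring_descriptor(rotated, threshold=5)  # same values
```

Because each ring aggregates over all azimuths, a heading change only reorders the beams and leaves the descriptor unchanged. The price is that this 1D summary alone carries no heading information, so estimating the initial heading requires keeping some azimuth-dependent signal as well.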

Narrowing your FOV with SOLiD: Spatially Organized and Lightweight Global Descriptor for FOV-constrained LiDAR Place Recognition

Aug 14, 2024

Abstract:We often encounter limited FOV situations due to various factors such as sensor fusion or sensor mounting in real-world robot navigation. However, a limited FOV disrupts the generation of descriptions and adversely impacts place recognition. Consequently, it becomes difficult to correct accumulated drift errors and maintain a consistent map using LiDAR-based place recognition with limited FOV. In this paper, we propose a robust LiDAR-based place recognition method for handling narrow FOV scenarios. The proposed method establishes spatial organization based on the range-elevation bin and azimuth-elevation bin to represent places. In addition, we achieve a robust place description through reweighting based on vertical direction information. Based on these representations, our method handles rotational changes and determines the initial heading. Additionally, we designed a lightweight and fast approach for the robot's onboard autonomy. For rigorous validation, the proposed method was tested across various LiDAR place recognition scenarios (i.e., single-session, multi-session, and multi-robot scenarios). To the best of our knowledge, we report the first method to cope with the restricted FOV. Our place description and SLAM codes will be released. Also, the supplementary materials of our descriptor are available at https://sites.google.com/view/lidar-solid.

ReFeree: Radar-based efficient global descriptor using a Feature and Free space for Place Recognition

Mar 21, 2024

Abstract:Radar is highlighted for robust sensing capabilities in adverse weather conditions (e.g., dense fog, heavy rain, or snowfall). In addition, Radar can cover wide areas and penetrate small particles. Despite these advantages, Radar-based place recognition remains in the early stages compared to other sensors due to its unique characteristics, such as low resolution and significant noise. In this paper, we propose a Radar-based place recognition method utilizing a descriptor called ReFeree, built from features and free space. Unlike traditional methods, we summarize the Radar image very compactly. Despite being lightweight, the descriptor contains semi-metric information and achieves outstanding place recognition performance. For concrete validation, we test a single session from the MulRan dataset and multiple sessions from the Oxford Radar RobotCar and Boreas datasets.

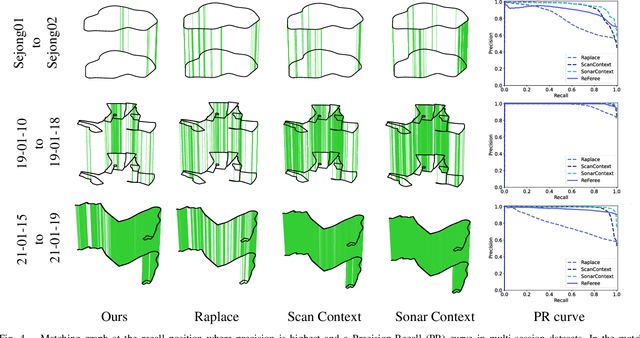

Robust Imaging Sonar-based Place Recognition and Localization in Underwater Environments

May 24, 2023

Abstract:Place recognition using SOund Navigation and Ranging (SONAR) images is an important task for simultaneous localization and mapping (SLAM) in underwater environments. This paper proposes a robust and efficient imaging SONAR-based place recognition method, SONAR context, and a loop closure method. Unlike previous methods, our approach encodes geometric information based on the characteristics of raw SONAR measurements without prior knowledge or training. We also design a hierarchical searching procedure for fast retrieval of candidate SONAR frames and apply adaptive shifting and padding to achieve matching that is robust to rotation and translation changes. In addition, we can derive the initial pose through adaptive shifting and apply it to the iterative closest point (ICP) based loop closure factor. We evaluate the performance of SONAR context on various underwater sequences, including simulated open water, a real water tank, and real underwater environments. The proposed approach shows robustness and improved place recognition across various datasets and evaluation metrics. Supplementary materials are available at https://github.com/sparolab/sonar_context.git.
