Hisaichi Shibata

Theory of Hallucinations based on Equivariance

Jan 04, 2024

Abstract:This study aims to acquire knowledge for creating very large language models that are immune to hallucinations. Hallucinations in contemporary large language models are often attributed to a misunderstanding of real-world social relationships. Therefore, I hypothesize that very large language models capable of thoroughly grasping all these relationships will be free from hallucinations. Additionally, I propose that certain types of equivariant language models are adept at learning and understanding these relationships. Building on this, I have developed a specialized cross-entropy error function to create a hallucination scale for language models, which measures their extent of equivariance acquisition. Utilizing this scale, I tested language models for their ability to acquire character-level equivariance. In particular, I introduce and employ a novel technique based on T5 (Text To Text Transfer Transformer) that efficiently understands permuted input texts without the need for explicit dictionaries to convert token IDs (integers) to texts (strings). This T5 model demonstrated a moderate ability to acquire character-level equivariance. Additionally, I discovered scale laws that can aid in developing hallucination-free language models at the character level. This methodology can be extended to assess equivariance acquisition at the word level, paving the way for very large language models that can comprehensively understand relationships and, consequently, avoid hallucinations.
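
As a rough illustration of what character-level equivariance means, the sketch below checks whether a text-to-text function commutes with a character permutation, that is, whether permuting the input and then applying the model gives the same result as applying the model and then permuting the output. This is the generic textbook notion of equivariance, not the cross-entropy-based hallucination scale described in the abstract. The names `permute_chars`, `is_equivariant`, `reverse_model`, and `biased_model` are hypothetical and chosen only for this example.

```python
def permute_chars(text, mapping):
    """Apply a character-level permutation; unmapped characters are unchanged."""
    return "".join(mapping.get(ch, ch) for ch in text)


def is_equivariant(model, text, mapping):
    """Check model(permute(text)) == permute(model(text)) for one input."""
    return model(permute_chars(text, mapping)) == permute_chars(model(text), mapping)


def reverse_model(text):
    """Toy model that treats every character identically (string reversal)."""
    return text[::-1]


def biased_model(text):
    """Toy model that singles out the character 'a', which breaks equivariance."""
    return text.replace("a", "z")


# Hypothetical permutation that swaps 'a' and 'b'.
swap_ab = {"a": "b", "b": "a"}

print(is_equivariant(reverse_model, "abca", swap_ab))
print(is_equivariant(biased_model, "abca", swap_ab))
```

A single passing input does not prove equivariance in general; in practice one would average such a check over many inputs and permutations.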

Playing the Werewolf game with artificial intelligence for language understanding

Feb 21, 2023

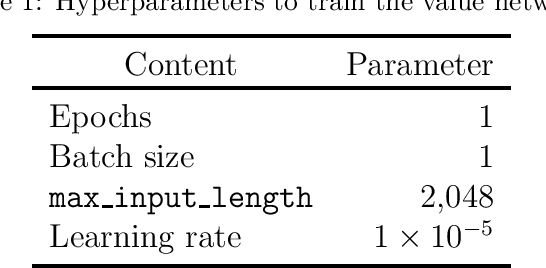

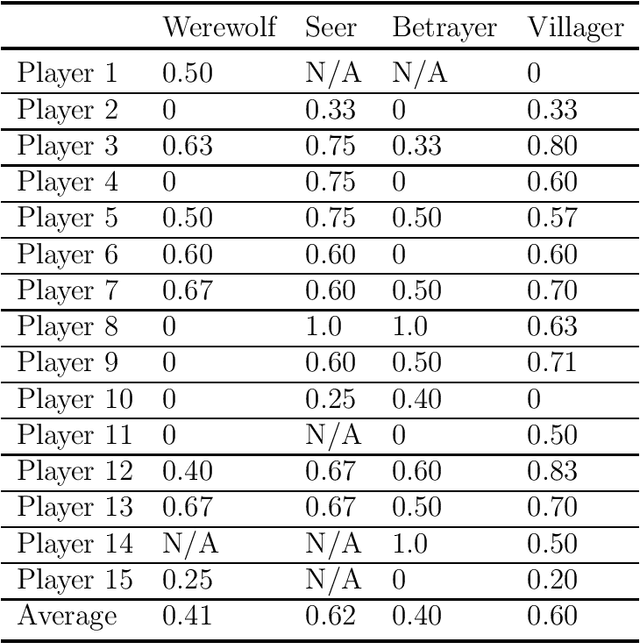

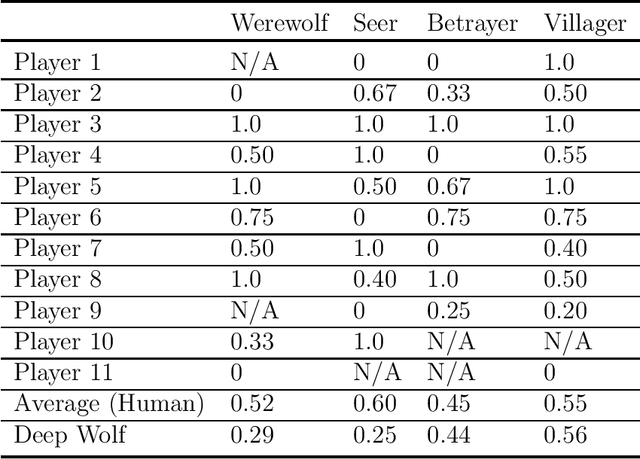

Abstract:The Werewolf game is a social deduction game based on free natural language communication, in which players try to deceive others in order to survive. An important feature of this game is that a large portion of the conversations are false information, and the behavior of artificial intelligence (AI) in such a situation has not been widely investigated. The purpose of this study is to develop an AI agent that can play Werewolf through natural language conversations. First, we collected game logs from 15 human players. Next, we fine-tuned a Transformer-based pretrained language model to construct a value network that can predict a posterior probability of winning a game at any given phase of the game and given a candidate for the next action. We then developed an AI agent that can interact with humans and choose the best voting target on the basis of its probability from the value network. Lastly, we evaluated the performance of the agent by having it actually play the game with human players. We found that our AI agent, Deep Wolf, could play Werewolf as competitively as average human players in a villager or a betrayer role, whereas Deep Wolf was inferior to human players in a werewolf or a seer role. These results suggest that current language models have the capability to suspect what others are saying, tell a lie, or detect lies in conversations.
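
The voting step described above can be sketched as an argmax over candidate targets. The sketch assumes a value function that returns an estimated win probability for a game state and a candidate action; in the paper this role is played by the fine-tuned language model, whereas here a lookup table stands in for it. The names `choose_vote_target` and `toy_value_network`, the state string, and the player names are hypothetical.

```python
def choose_vote_target(win_probability, game_state, candidates):
    """Return the candidate with the highest estimated win probability.

    win_probability: callable (game_state, candidate) -> float in [0, 1].
    """
    if not candidates:
        raise ValueError("at least one candidate is required")
    return max(candidates, key=lambda c: win_probability(game_state, c))


# Stand-in for a learned value network: a fixed table of made-up scores.
TOY_SCORES = {"Alice": 0.41, "Bob": 0.67, "Carol": 0.52}


def toy_value_network(game_state, candidate):
    """Hypothetical value function; it ignores the state and reads the table."""
    return TOY_SCORES[candidate]


target = choose_vote_target(toy_value_network, "day 2 discussion log", list(TOY_SCORES))
print(target)
```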

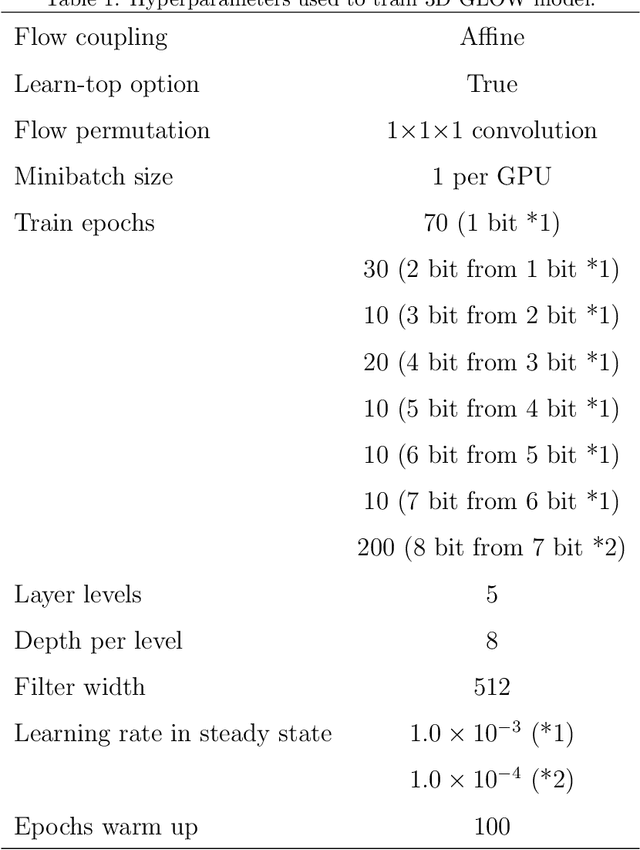

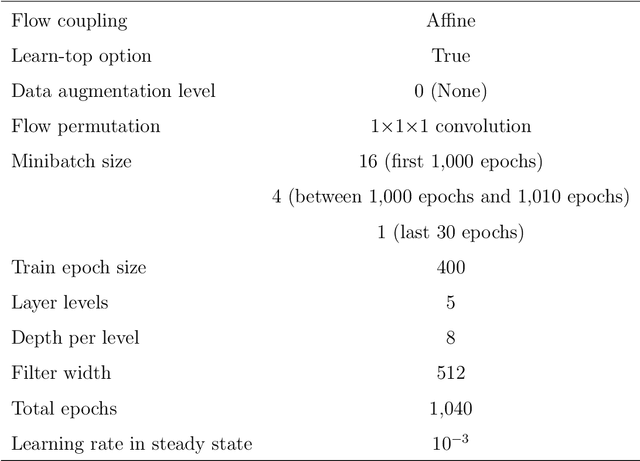

Local Differential Privacy Image Generation Using Flow-based Deep Generative Models

Dec 20, 2022

Abstract:Diagnostic radiologists need artificial intelligence (AI) for medical imaging, but access to medical images required for training in AI has become increasingly restrictive. To release and use medical images, we need an algorithm that can simultaneously protect privacy and preserve pathologies in medical images. To develop such an algorithm, here, we propose DP-GLOW, a hybrid of a local differential privacy (LDP) algorithm and one of the flow-based deep generative models (GLOW). By applying a GLOW model, we disentangle the pixelwise correlation of images, which makes it difficult to protect privacy with straightforward LDP algorithms for images. Specifically, we map images onto the latent vector of the GLOW model, each element of which follows an independent normal distribution, and we apply the Laplace mechanism to the latent vector. Moreover, we applied DP-GLOW to chest X-ray images to generate LDP images while preserving pathologies.
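
The Laplace-mechanism step on a latent vector can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the GLOW encoder and decoder are omitted, the latent vector is a plain list of floats, and each element is clipped to `[-clip, clip]` so that the L1 sensitivity is bounded. The clipping bound, the sensitivity calculation, and the names `laplace_noise` and `privatize_latent` are assumptions introduced for this example; the abstract does not specify them.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def privatize_latent(latent, epsilon, clip=3.0, rng=None):
    """Clip each latent element and add Laplace noise calibrated to epsilon.

    Assumption: after clipping, any two latent vectors differ by at most
    2 * clip * len(latent) in L1 norm, which is used as the sensitivity.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    clipped = [max(-clip, min(clip, z)) for z in latent]
    sensitivity = 2.0 * clip * len(clipped)
    scale = sensitivity / epsilon
    return [z + laplace_noise(scale, rng) for z in clipped]


# Hypothetical 3-element latent vector; real GLOW latents are far larger.
noisy = privatize_latent([10.0, 0.0, -10.0], epsilon=1.0, rng=random.Random(0))
print(len(noisy))
```

In a full pipeline the noisy latent vector would then be passed through the inverse flow to obtain an image; smaller `epsilon` values give stronger privacy at the cost of larger noise.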

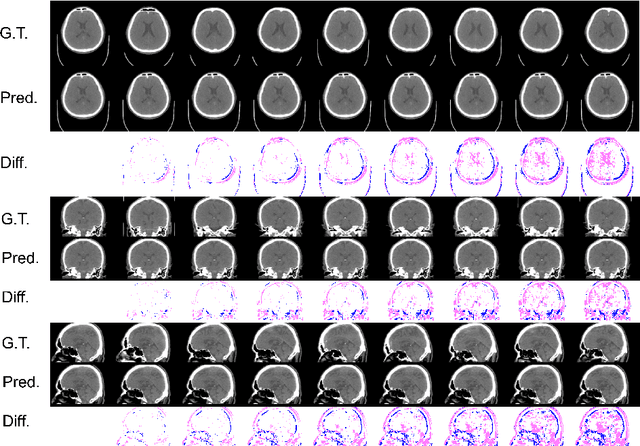

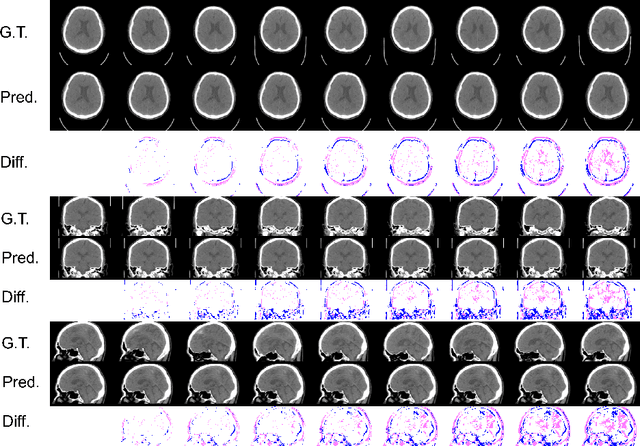

Aging prediction using deep generative model toward the development of preventive medicine

Aug 23, 2022

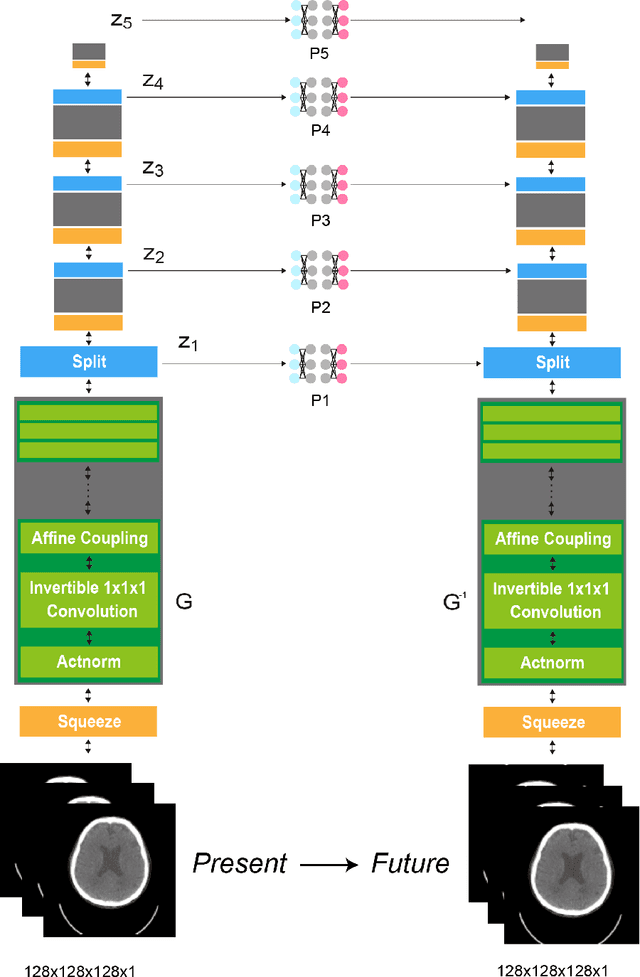

Abstract:From birth to death, we all experience surprisingly ubiquitous changes over time due to aging. If we can predict aging in the digital domain, that is, the digital twin of the human body, we would be able to detect lesions in their very early stages, thereby enhancing the quality of life and extending the life span. We observed that none of the previously developed digital twins of the adult human body explicitly trained longitudinal conversion rules between volumetric medical images with deep generative models, potentially resulting in poor prediction performance of, for example, ventricular volumes. Here, we establish a new digital twin of an adult human body that adopts longitudinally acquired head computed tomography (CT) images for training, enabling prediction of future volumetric head CT images from a single present volumetric head CT image. We, for the first time, adopt one of the three-dimensional flow-based deep generative models to realize this sequential three-dimensional digital twin. We show that our digital twin outperforms the latest methods of prediction of ventricular volumes in relatively short terms.

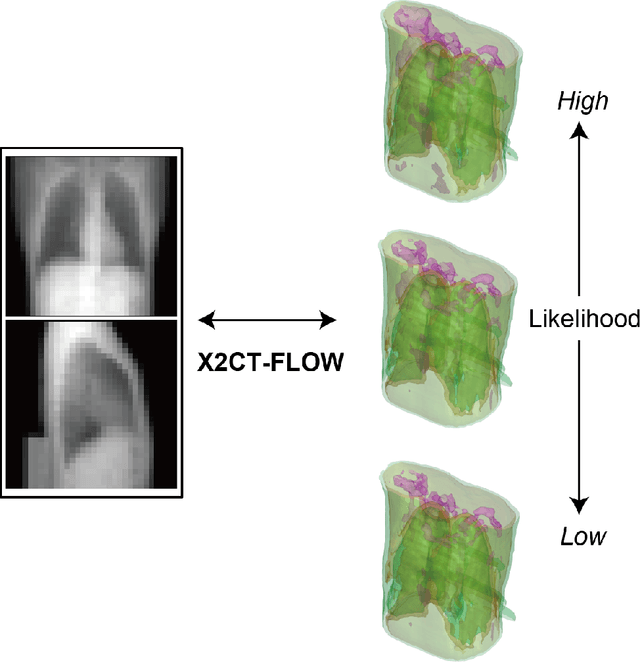

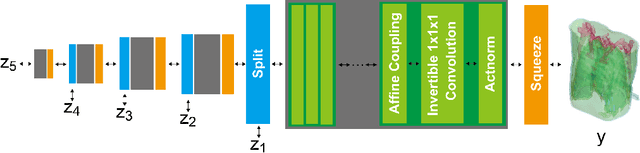

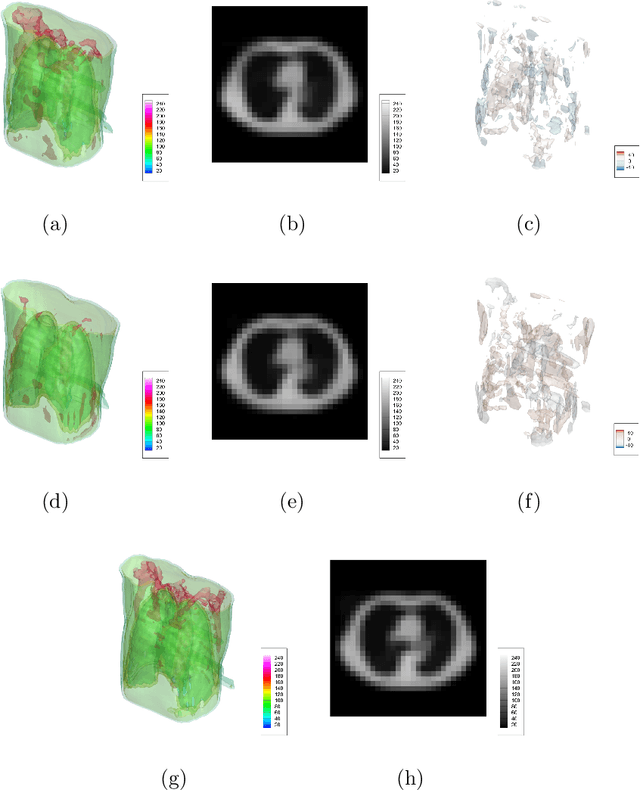

X2CT-FLOW: Reconstruction of multiple volumetric chest computed tomography images with different likelihoods from a uni- or biplanar chest X-ray image using a flow-based generative model

Apr 09, 2021

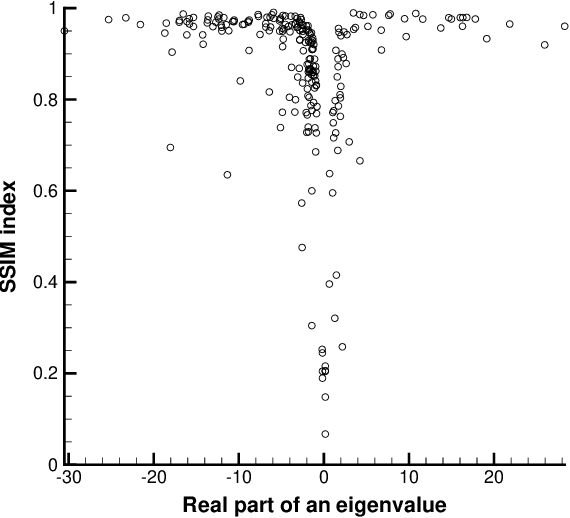

Abstract:We propose X2CT-FLOW for the reconstruction of volumetric chest computed tomography (CT) images from uni- or biplanar digitally reconstructed radiographs (DRRs) or chest X-ray (CXR) images on the basis of a flow-based deep generative (FDG) model. With the adoption of X2CT-FLOW, all the reconstructed volumetric chest CT images satisfy the condition that each of those projected onto each plane coincides with each input DRR or CXR image. Moreover, X2CT-FLOW can reconstruct multiple volumetric chest CT images with different likelihoods. The volumetric chest CT images reconstructed from biplanar DRRs showed good agreement with ground truth images in terms of the structural similarity index (0.931 on average). Moreover, we show that X2CT-FLOW can actually reconstruct such multiple volumetric chest CT images from DRRs. Finally, we demonstrate that X2CT-FLOW can reconstruct multiple volumetric chest CT images from a real uniplanar CXR image.

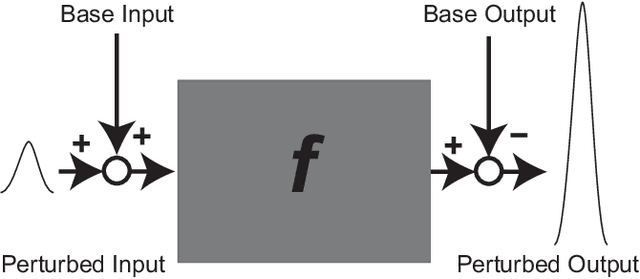

On the Matrix-Free Generation of Adversarial Perturbations for Black-Box Attacks

Feb 18, 2020

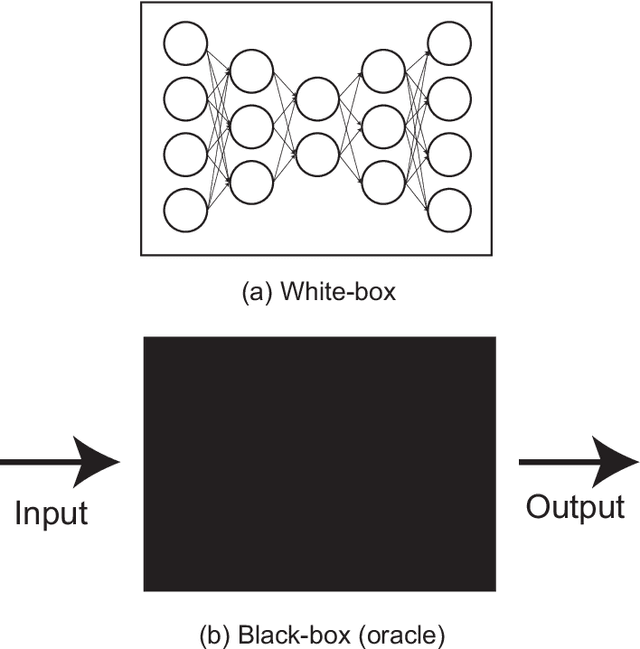

Abstract:In general, adversarial perturbations superimposed on inputs are realistic threats for a deep neural network (DNN). In this paper, we propose a practical method for generating such adversarial perturbations for black-box attacks, which require access only to the input-output relationship. Thus, attackers can generate such perturbations without invoking inner functions or accessing the inner states of a DNN. Unlike earlier studies, the algorithm presented in this study requires far fewer query trials. Moreover, to show the effectiveness of the extracted adversarial perturbation, we experiment with a DNN for semantic segmentation. The results show that the network is more easily deceived by the generated perturbation than by uniformly distributed random noise of the same magnitude.
