Hiroki Ito

Access Control with Encrypted Feature Maps for Object Detection Models

Sep 29, 2022

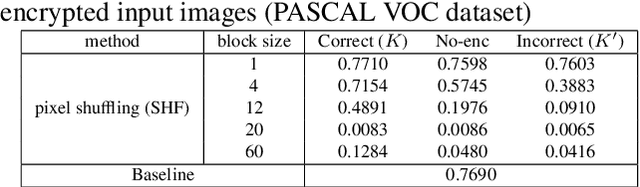

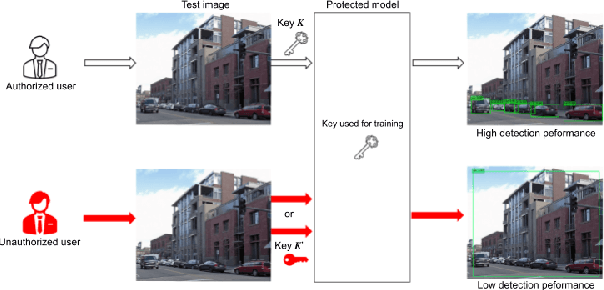

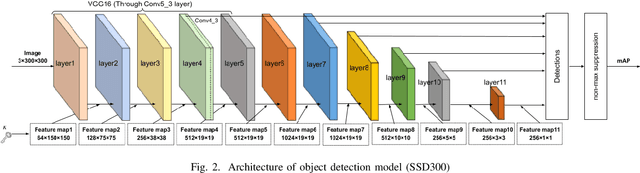

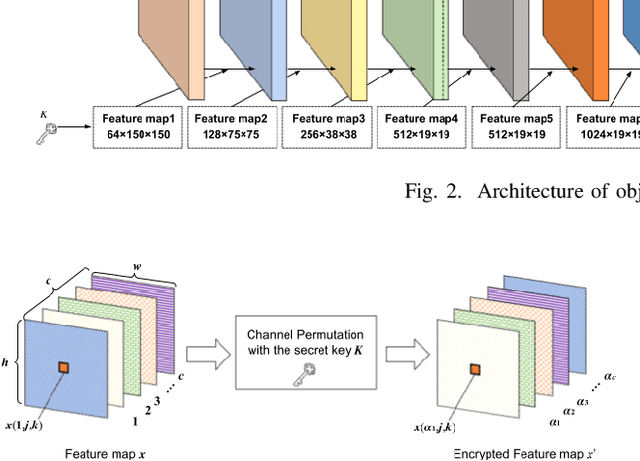

Abstract: In this paper, we propose, for the first time, an access control method with a secret key for object detection models, so that unauthorized users without the key cannot benefit from the performance of trained models. The method not only provides high detection performance to authorized users but also degrades performance for unauthorized users. The use of transformed images has been proposed for the access control of image classification models, but such images cannot be used for object detection models because they degrade performance. Accordingly, selected feature maps, instead of input images, are encrypted with a secret key for training and testing models. In an experiment, the protected models allowed authorized users to obtain almost the same performance as non-protected models while remaining robust against unauthorized access without a key.

Access Control of Semantic Segmentation Models Using Encrypted Feature Maps

Jun 11, 2022

Abstract: In this paper, we propose, for the first time, an access control method with a secret key for semantic segmentation models, so that unauthorized users without the key cannot benefit from the performance of trained models. The method not only provides high segmentation performance to authorized users but also degrades performance for unauthorized users. We first point out that conventional access control methods, which use encrypted images for classification tasks, are not directly applicable to semantic segmentation because they degrade performance. Accordingly, selected feature maps, instead of input images, are encrypted with a secret key for training and testing models. In an experiment, the protected models allowed authorized users to obtain almost the same performance as non-protected models while remaining robust against unauthorized access without a key.

Access Control of Object Detection Models Using Encrypted Feature Maps

Feb 01, 2022

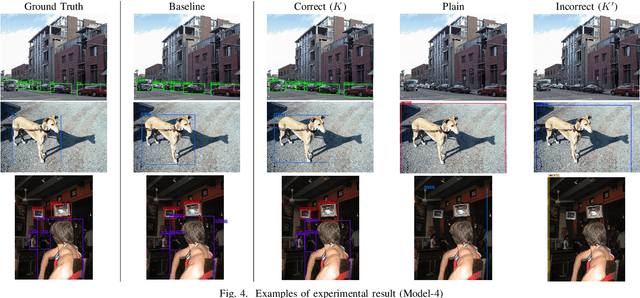

Abstract: In this paper, we propose an access control method for object detection models. The use of encrypted images or encrypted feature maps has been demonstrated to be effective in protecting models from unauthorized access. However, the effectiveness of this approach has been confirmed only for image classification models and semantic segmentation models, not for object detection models. In this paper, the use of encrypted feature maps is shown, for the first time, to be effective in access control of object detection models.

Access Control Using Spatially Invariant Permutation of Feature Maps for Semantic Segmentation Models

Sep 03, 2021

Abstract: In this paper, we propose an access control method that uses a spatially invariant permutation of feature maps with a secret key to protect semantic segmentation models. Segmentation models are trained and tested by permuting selected feature maps with a secret key. The proposed method not only allows rightful users with the correct key to use a model at full capacity but also degrades performance for unauthorized users. Conventional access control methods have focused only on image classification tasks and have never been applied to semantic segmentation tasks. In an experiment, the protected models were demonstrated to allow rightful users to obtain almost the same performance as non-protected models while being robust against access by unauthorized users without a key. In addition, a conventional method with block-wise transformations was verified to degrade performance when applied to semantic segmentation models.
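The idea of permuting feature maps with a secret key can be illustrated with a small sketch. It is not the authors' implementation: it assumes the permutation reorders the channels of a feature map, with the same reordering applied at every spatial position, and that the order is derived by seeding a pseudo-random generator with the key. The names `keyed_channel_permutation` and `permute_feature_map` are hypothetical.

```python
import random


def keyed_channel_permutation(num_channels, key):
    """Derive a channel order from a secret key (hypothetical helper)."""
    order = list(range(num_channels))
    # Assumption: the key seeds a PRNG that shuffles the channel indices.
    random.Random(key).shuffle(order)
    return order


def permute_feature_map(feature_map, key):
    """Reorder the channels of a feature map given as [channel][row][col].

    Every spatial position receives the same channel reordering, so the
    spatial layout inside each channel is left untouched.
    """
    order = keyed_channel_permutation(len(feature_map), key)
    return [feature_map[c] for c in order]


# Toy feature map: 4 channels of 2x2 values, channel c filled with c.
fmap = [[[c, c], [c, c]] for c in range(4)]
with_key = permute_feature_map(fmap, key=1234)
print([channel[0][0] for channel in with_key])
```

Under this assumption, a model trained with one key expects its channels in that key's order, so a user who applies a different key (or none) feeds later layers a channel order they were not trained on.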

Protecting Semantic Segmentation Models by Using Block-wise Image Encryption with Secret Key from Unauthorized Access

Jul 20, 2021

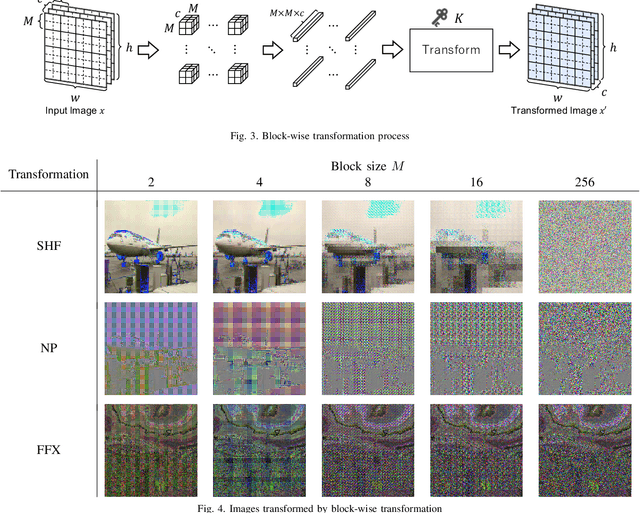

Abstract: Since production-level trained deep neural networks (DNNs) are of great business value, protecting such DNN models against copyright infringement and unauthorized access is in rising demand. However, conventional model protection methods have focused only on the image classification task and have never been applied to semantic segmentation, although it has an increasing number of applications. In this paper, we propose, for the first time, to protect semantic segmentation models from unauthorized access by utilizing a block-wise transformation with a secret key. Protected models are trained by using transformed images. Experimental results show that the proposed protection method allows rightful users with the correct key to use the model at full capacity and degrades performance for unauthorized users. However, protected models show slightly lower segmentation performance than non-protected models.
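As an illustration of what a block-wise transformation with a secret key can look like, the sketch below shuffles pixel positions inside each block of an image using one key-derived permutation. This is only one possible block-wise transformation, chosen here as an assumption; the abstract does not say which transformation the paper uses. The function name `blockwise_shuffle`, the grayscale 2D-list image format, and the key-seeding scheme are all hypothetical.

```python
import random


def blockwise_shuffle(image, block_size, key):
    """Shuffle pixel positions inside every block with one keyed permutation.

    `image` is a 2D list whose height and width are multiples of block_size.
    """
    height, width = len(image), len(image[0])
    # Assumption: the key seeds a PRNG that defines the in-block permutation.
    perm = list(range(block_size * block_size))
    random.Random(key).shuffle(perm)
    out = [row[:] for row in image]
    for top in range(0, height, block_size):
        for left in range(0, width, block_size):
            # Flatten the block, reorder it, and write it back in place.
            flat = [image[top + i][left + j]
                    for i in range(block_size) for j in range(block_size)]
            for dst, src in enumerate(perm):
                out[top + dst // block_size][left + dst % block_size] = flat[src]
    return out


# Toy 4x4 grayscale image split into four 2x2 blocks.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
protected = blockwise_shuffle(image, block_size=2, key=42)
for row in protected:
    print(row)
```

In this sketch, pixels never leave their block, so coarse image structure survives while local detail is scrambled in a key-dependent way.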

Image Transformation Network for Privacy-Preserving Deep Neural Networks and Its Security Evaluation

Aug 07, 2020

Abstract: We propose a transformation network for generating visually protected images for privacy-preserving DNNs. The proposed transformation network is trained by using a plain image dataset so that plain images are transformed into visually protected ones. Conventional perceptual encryption methods offer weak visual protection and cause some accuracy degradation in image classification. In contrast, the proposed network enables us not only to strongly protect visual information but also to maintain the image classification accuracy achieved with plain images. In an image classification experiment on CIFAR datasets, the proposed network is demonstrated to strongly protect the visual information of plain images without any performance degradation. In addition, an experiment shows that the visually protected images are robust against a DNN-based attack called the inverse transformation network attack (ITN-Attack).
