Yuma Kinoshita

Pre-training Autoencoder for Acoustic Event Classification via Blinky

Sep 18, 2025

Abstract:In the acoustic event classification (AEC) framework that employs Blinkies, audio signals are converted into LED light emissions and subsequently captured by a single video camera. However, the 30 fps optical transmission channel conveys only about 0.2% of the normal audio bandwidth and is highly susceptible to noise. We propose a novel sound-to-light conversion method that leverages the encoder of a pre-trained autoencoder (AE) to distill compact, discriminative features from the recorded audio. To pre-train the AE, we adopt a noise-robust learning strategy in which artificial noise is injected into the encoder's latent representations during training, thereby enhancing the model's robustness against channel noise. The encoder architecture is designed to fit within the memory footprint of contemporary edge devices such as the Raspberry Pi 4. In a simulation experiment on the ESC-50 dataset under a stringent 15 Hz bandwidth constraint, the proposed method achieved higher macro-F1 scores than conventional sound-to-light conversion approaches.
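The noise-robust pre-training idea can be illustrated with a toy linear autoencoder. This is a minimal sketch under assumptions not taken from the paper: the encoder and decoder are single linear maps, the channel noise is modelled as additive Gaussian noise with a fixed standard deviation `sigma`, and training uses plain per-sample SGD. The names `train_step` and `reconstruct` are hypothetical.

```python
import random

def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def train_step(x, W, V, sigma, lr, rng):
    """One SGD step on mean((V (W x + n) - x)^2) with latent noise n ~ N(0, sigma^2 I).

    W (latent x input) is a toy encoder and V (input x latent) a toy decoder;
    both are updated in place. Returns the loss of the noisy forward pass.
    """
    d, k = len(x), len(W)
    z = matvec(W, x)
    # Noise-robust learning (assumed Gaussian): corrupt the latent code
    # the way the LED-to-camera channel is expected to.
    z_noisy = [zi + rng.gauss(0.0, sigma) for zi in z]
    x_hat = matvec(V, z_noisy)
    err = [xh - xi for xh, xi in zip(x_hat, x)]
    g_out = [2.0 * e / d for e in err]                                  # dLoss/dx_hat
    g_z = [sum(V[i][j] * g_out[i] for i in range(d)) for j in range(k)]  # dLoss/dz (before V changes)
    for i in range(d):              # decoder update: dLoss/dV = g_out z_noisy^T
        for j in range(k):
            V[i][j] -= lr * g_out[i] * z_noisy[j]
    for j in range(k):              # encoder update: dLoss/dW = g_z x^T
        for c in range(d):
            W[j][c] -= lr * g_z[j] * x[c]
    return sum(e * e for e in err) / d

def reconstruct(x, W, V):
    """Inference path: no noise is injected at test time."""
    return matvec(V, matvec(W, x))
```

In the actual method the encoder is a network sized for devices such as the Raspberry Pi 4; the linear maps here only show where the noise enters during pre-training and that it is absent at inference.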

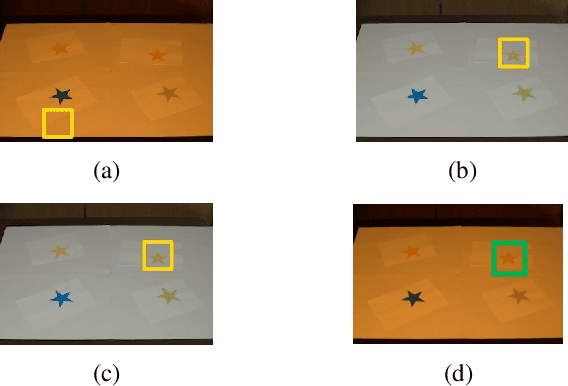

Template matching with white balance adjustment under multiple illuminants

Aug 03, 2022

Abstract:In this paper, we propose a novel template matching method with a white balance adjustment called N-white balancing, which was originally proposed for multi-illuminant scenes. To reduce the influence of lighting effects, N-white balancing is first applied to images for multi-illumination color constancy, and template matching is then carried out on the adjusted images. In experiments, the proposed method is demonstrated to be effective in object detection tasks under various illumination conditions.
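After color correction, the matching stage itself can be a standard template matcher. The sketch below assumes zero-mean normalized cross-correlation (NCC) on single-channel images stored as nested lists; it is not necessarily the matcher used in the paper, and `match_template` is a hypothetical helper. Zero-mean NCC is unaffected by a positive gain and an offset applied to a window, but not by per-channel color casts, which is where white balancing comes in.

```python
import math

def ncc(window, template):
    """Zero-mean normalized cross-correlation of two equally sized 2-D patches."""
    a = [v for row in window for v in row]
    b = [v for row in template for v in row]
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [v - ma for v in a]
    db = [v - mb for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0.0:
        return 0.0  # flat window or template: correlation is undefined
    return sum(x * y for x, y in zip(da, db)) / denom

def match_template(image, template):
    """Exhaustive search; returns ((row, col), score) of the best NCC match."""
    th, tw = len(template), len(template[0])
    best, best_pos = -2.0, None
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            window = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(window, template)
            if score > best:
                best, best_pos = score, (r, c)
    return best_pos, best
```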

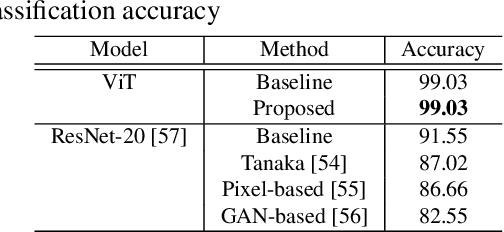

Image and Model Transformation with Secret Key for Vision Transformer

Jul 12, 2022

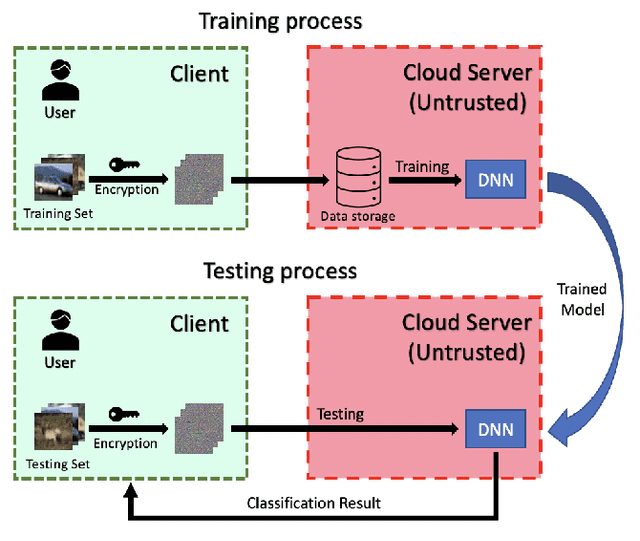

Abstract:In this paper, we propose a combined use of transformed images and vision transformer (ViT) models transformed with a secret key. We show for the first time that models trained with plain images can be directly transformed into models trained with encrypted images on the basis of the ViT architecture, and that the transformed models perform the same as models trained with plain images when test images are encrypted with the key. In addition, the proposed scheme requires neither specially prepared training data nor network modification, so it also allows the secret key to be updated easily. In an experiment, the effectiveness of the proposed scheme is evaluated in terms of performance degradation and model protection performance in an image classification task on the CIFAR-10 dataset.
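Why a plain-image model can be converted instead of retrained can be seen in ViT's linear patch-embedding layer. This sketch assumes the encryption shuffles the pixel values inside every patch with one key-derived permutation (one possible block-wise transformation; the key handling and the names `key_permutation` and `transform_embedding` are hypothetical). Permuting the columns of the embedding matrix with the same permutation yields identical embeddings for encrypted patches.

```python
import random

def key_permutation(key, length):
    """Derive a pixel permutation from a secret key.

    Uses a seeded PRNG for illustration only; it is not cryptographically secure.
    """
    perm = list(range(length))
    random.Random(key).shuffle(perm)
    return perm

def encrypt_patch(patch, perm):
    """Block-wise pixel shuffling of a flattened patch: encrypted[i] = plain[perm[i]]."""
    return [patch[p] for p in perm]

def transform_embedding(E, perm):
    """Permute the columns of the embedding matrix E (rows = embedding dimensions)."""
    return [[row[p] for p in perm] for row in E]

def embed(E, patch):
    """Linear patch embedding (bias omitted for brevity)."""
    return [sum(w * v for w, v in zip(row, patch)) for row in E]
```

Since `embed(transform_embedding(E, perm), encrypt_patch(x, perm))` equals `embed(E, x)`, changing the key only requires recomputing the transformed weights from the plain model, which is consistent with the abstract's point that the key is easy to update.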

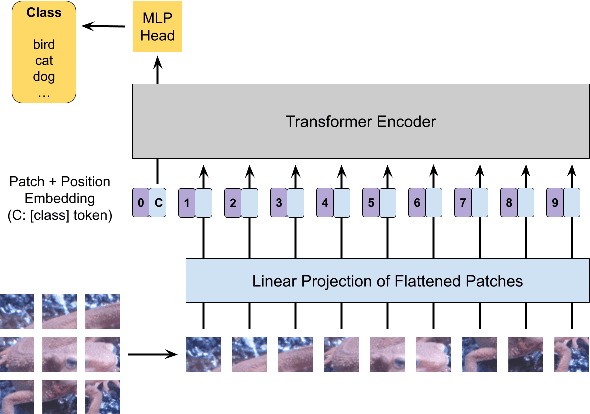

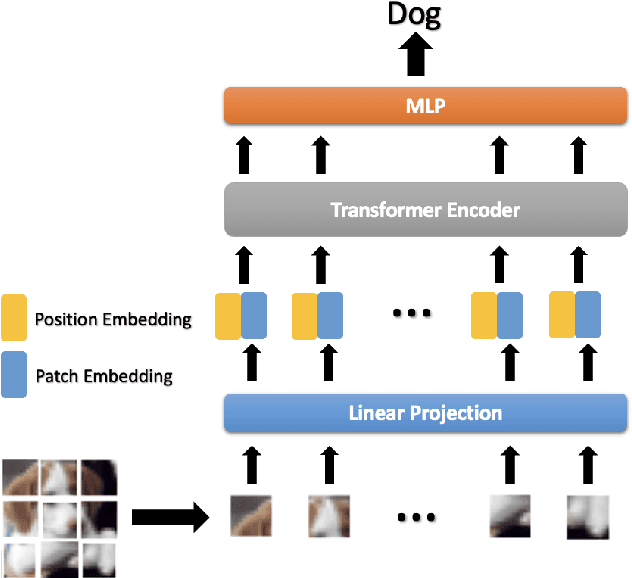

Privacy-Preserving Image Classification Using Vision Transformer

May 24, 2022

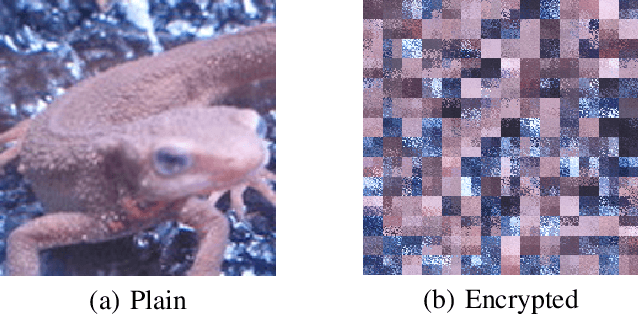

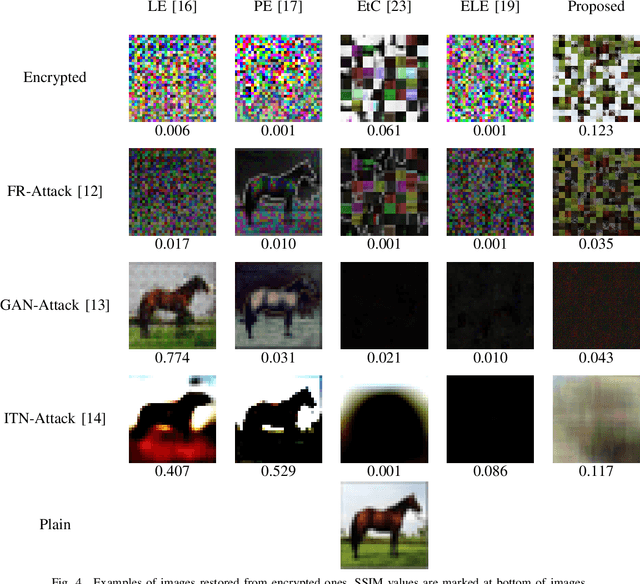

Abstract:In this paper, we propose a privacy-preserving image classification method that is based on the combined use of encrypted images and the vision transformer (ViT). The proposed method allows us not only to apply images without visual information to ViT models for both training and testing but also to maintain a high classification accuracy. Because ViT utilizes patch embedding and position embedding for image patches, this architecture is shown to reduce the influence of block-wise image transformation. In an experiment, the proposed method for privacy-preserving image classification is demonstrated to outperform state-of-the-art methods in terms of classification accuracy and robustness against various attacks.
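A block-wise transformation lines up with ViT when the block size equals the patch size, so that every encrypted block is exactly one input patch. The sketch below assumes a key-controlled negative-positive transformation (x to 255 - x at selected pixel positions), applied identically in every block of an 8-bit single-channel image; the choice of transform and the names are illustrative assumptions, not the paper's exact encryption.

```python
import random

def key_mask(key, block):
    """Key-derived on/off pattern over the pixel positions of one block (illustrative PRNG)."""
    rng = random.Random(key)
    return [[rng.random() < 0.5 for _ in range(block)] for _ in range(block)]

def negpos_blockwise(img, key, block):
    """Apply x -> 255 - x at the masked positions of every block x block tile.

    The same mask is reused for all tiles, so each tile (one ViT patch when
    block equals the patch size) undergoes the same fixed transform.
    The operation is its own inverse, so applying it again decrypts.
    """
    h, w = len(img), len(img[0])
    if h % block or w % block:
        raise ValueError("image size must be a multiple of the block size")
    mask = key_mask(key, block)
    return [[255 - v if mask[r % block][c % block] else v
             for c, v in enumerate(row)]
            for r, row in enumerate(img)]
```

Because 255 - x is an affine map, a linear patch-embedding layer trained on such images can in principle absorb it, which gives some intuition for why the patch-based ViT architecture is less sensitive to block-wise transformation.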

An Overview of Compressible and Learnable Image Transformation with Secret Key and Its Applications

Jan 26, 2022

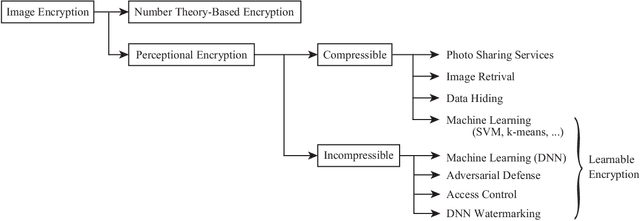

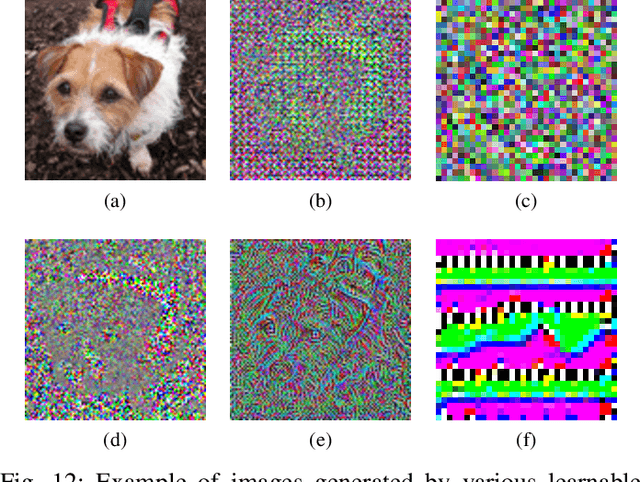

Abstract:This article presents an overview of image transformation with a secret key and its applications. Image transformation with a secret key enables us not only to protect visual information on plain images but also to embed unique features controlled with a key into images. In addition, numerous encryption methods can generate encrypted images that are both compressible and learnable for machine learning. Various applications of such transformation have been developed by using these properties. In this paper, we focus on a class of image transformation referred to as learnable image encryption, which is applicable to privacy-preserving machine learning and adversarially robust defense. Detailed descriptions of the transformation algorithms and their performance are provided. Moreover, we discuss robustness against various attacks.

Self-Supervised Intrinsic Image Decomposition Network Considering Reflectance Consistency

Nov 05, 2021

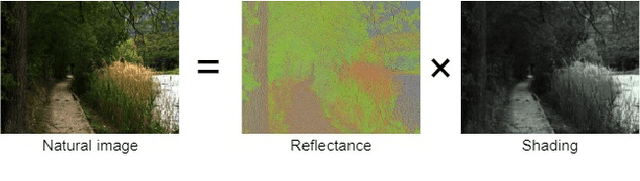

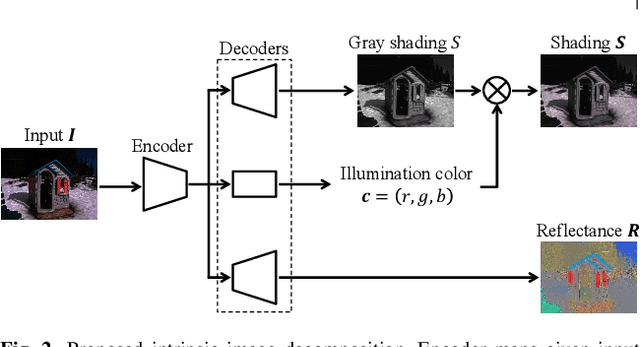

Abstract:We propose a novel intrinsic image decomposition network considering reflectance consistency. Intrinsic image decomposition aims to decompose an image into illumination-invariant and illumination-variant components, referred to as "reflectance" and "shading," respectively. Although there are three consistencies that the reflectance and shading should satisfy, most conventional work does not sufficiently account for consistency with respect to reflectance, owing to the use of a white-illuminant decomposition model and the lack of training images capturing the same objects under various illumination-brightness and -color conditions. For this reason, the three consistencies are considered in the proposed network by using a color-illuminant model and training the network with losses calculated from images taken under various illumination conditions. In addition, the proposed network can be trained in a self-supervised manner because various illumination conditions can easily be simulated. Experimental results show that our network can decompose images into reflectance and shading components.
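The color-illuminant model and the self-supervised use of simulated lighting can be sketched in a few lines. Assumed here, not taken from the paper: an image is the per-pixel, per-channel product I = R * S with a spatially uniform shading S = brightness * illuminant color (uniform only for brevity), training pairs are produced by relighting the same reflectance, and reflectance consistency is scored as the mean squared difference between the reflectances estimated from two relit images. `relight` and `reflectance_consistency_loss` are hypothetical names, and the network is replaced by stand-in estimators in the usage check.

```python
def relight(reflectance, brightness, illuminant):
    """Color-illuminant model: I = R * (brightness * illuminant), per pixel and channel.

    reflectance: list of [R, G, B] pixels; illuminant: [R, G, B] color.
    """
    return [[r * brightness * e for r, e in zip(px, illuminant)] for px in reflectance]

def reflectance_consistency_loss(r_a, r_b):
    """Mean squared difference between two reflectance estimates of the same scene."""
    diffs = [(a - b) ** 2 for pa, pb in zip(r_a, r_b) for a, b in zip(pa, pb)]
    return sum(diffs) / len(diffs)
```

An estimator that correctly divides out the shading gives zero loss for any pair of simulated conditions, whereas one that returns the input image unchanged is penalized whenever the brightness or illuminant color differs, which is the behavior the consistency term is meant to enforce.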

Spatially varying white balancing for mixed and non-uniform illuminants

Sep 03, 2021

Abstract:In this paper, we propose a novel white balance adjustment, called "spatially varying white balancing," for single, mixed, and non-uniform illuminants. By using n diagonal matrices along with a weight, the proposed method can reduce lighting effects on all spatially varying colors in an image under such illumination conditions. In contrast, conventional white balance adjustments do not correct all colors except under a single illuminant. Multi-color balance adjustments can map multiple colors to the corresponding ground truth colors, but they may suffer from rank deficiency because, unlike white balancing, they use a non-diagonal matrix. In an experiment, the effectiveness of the proposed method is shown under mixed and non-uniform illuminants, compared with conventional white and multi-color balancing. Moreover, under a single illuminant, the proposed method performs almost the same as conventional white balancing.
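One way to read "n diagonal matrices along with a weight" is a per-pixel weighted combination of n diagonal (von Kries-style) gains. This sketch assumes the n illuminant colors and the per-pixel weights (summing to 1) are already known; how the paper estimates them is not reproduced, and `sv_white_balance` is a hypothetical name. A weighted sum of diagonal matrices is itself diagonal, so no channel mixing (and no rank-deficiency issue) is introduced.

```python
def sv_white_balance(pixels, illuminants, weights):
    """Correct each RGB pixel with a per-pixel mix of n diagonal gains.

    pixels:      list of [R, G, B]
    illuminants: n illuminant colors [R, G, B]; gain matrix D_k = diag(1 / illuminant_k)
    weights:     per pixel, n non-negative weights (assumed to sum to 1)
    """
    out = []
    for px, w in zip(pixels, weights):
        # Diagonal of sum_k w_k * D_k, computed channel by channel.
        gains = [sum(wk / ill[ch] for wk, ill in zip(w, illuminants)) for ch in range(3)]
        out.append([v * g for v, g in zip(px, gains)])
    return out
```

With n = 1 and a weight of 1 for every pixel, this reduces to ordinary white balancing with a single diagonal matrix, matching the single-illuminant case mentioned above.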

Separated-Spectral-Distribution Estimation Based on Bayesian Inference with Single RGB Camera

Jun 01, 2021

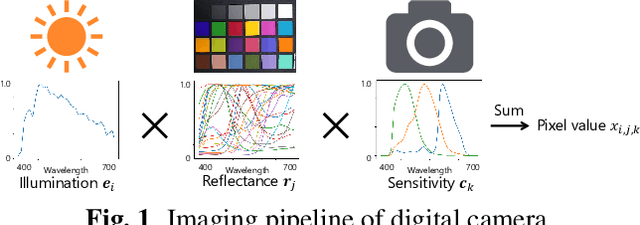

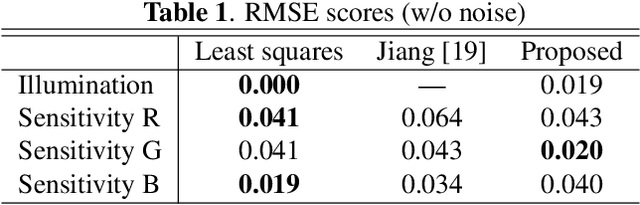

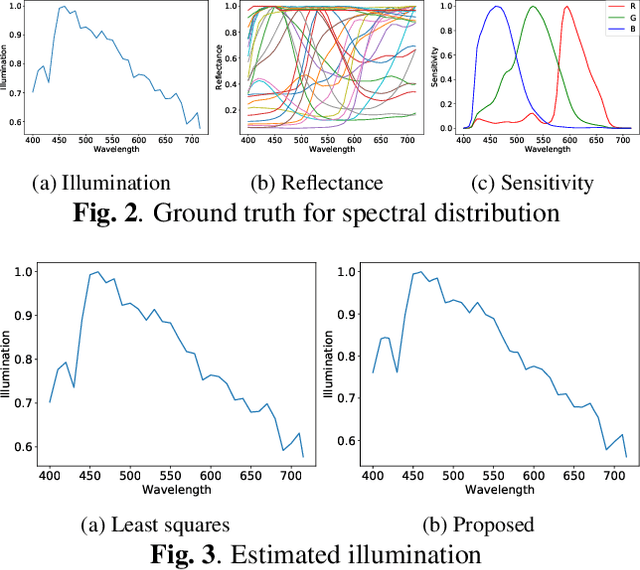

Abstract:In this paper, we propose a novel method for separately estimating spectral distributions from images captured by a typical RGB camera. The proposed method allows us to separately estimate a spectral distribution of illumination, reflectance, or camera sensitivity, whereas recent hyperspectral cameras are limited to capturing a joint spectral distribution from a scene. In addition, the use of Bayesian inference makes it possible to take into account prior information on both spectral distributions and image noise as probability distributions. As a result, the proposed method can estimate spectral distributions in a unified way and make the estimation more robust against noise, which conventional spectral-distribution estimation methods cannot do. The use of Bayesian inference also enables us to obtain the confidence of estimation results. In an experiment, the proposed method is shown not only to outperform conventional estimation methods in terms of RMSE but also to be robust against noise.
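How Bayesian inference yields both an estimate and its confidence can be shown with a simplified linear-Gaussian stand-in, which is an assumption and not the paper's model. Suppose the RGB observation is y = A s + n, where s holds two coefficients of a spectral basis, A (3 x 2) combines camera sensitivity and basis, the prior is s ~ N(0, tau^2 I) and the noise is n ~ N(0, sigma^2 I). The posterior is then Gaussian with covariance Sigma = (A^T A / sigma^2 + I / tau^2)^-1 and mean mu = Sigma A^T y / sigma^2; the diagonal of Sigma serves as the per-coefficient confidence. All values and the name `posterior_2d` are hypothetical.

```python
def posterior_2d(A, y, sigma, tau):
    """Posterior mean and covariance of s (length 2) for y = A s + n.

    Prior s ~ N(0, tau^2 I); noise n ~ N(0, sigma^2 I); A is a list of m rows of length 2.
    """
    # Posterior precision P = A^T A / sigma^2 + I / tau^2 (2 x 2, symmetric).
    p00 = sum(r[0] * r[0] for r in A) / sigma**2 + 1.0 / tau**2
    p01 = sum(r[0] * r[1] for r in A) / sigma**2
    p11 = sum(r[1] * r[1] for r in A) / sigma**2 + 1.0 / tau**2
    det = p00 * p11 - p01 * p01
    cov = [[p11 / det, -p01 / det], [-p01 / det, p00 / det]]   # Sigma = P^-1
    aty = [sum(r[j] * yi for r, yi in zip(A, y)) / sigma**2 for j in range(2)]
    mean = [cov[0][0] * aty[0] + cov[0][1] * aty[1],
            cov[1][0] * aty[0] + cov[1][1] * aty[1]]
    return mean, cov
```

Increasing `sigma` widens the posterior covariance, so noisier observations are reported with lower confidence instead of being silently trusted.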

Multi-color balance for color constancy

May 21, 2021

Abstract:In this paper, we propose a novel multi-color balance adjustment for color constancy. The proposed method, called "n-color balancing," allows us not only to perfectly correct n target colors on the basis of corresponding ground truth colors but also to correct colors other than the n colors. In contrast, although white balancing can perfectly adjust white, it generally does not consider colors other than white. In an experiment, the proposed multi-color balancing is demonstrated to outperform both conventional white and multi-color balance adjustments, including Bradford's model.
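For n = 3 the idea can be illustrated with a single 3 x 3 matrix M that maps three observed colors exactly onto their ground truth colors, M = G C^-1, where the columns of C are the observed colors and the columns of G the corresponding ground truth colors. This sketch covers only that case (the paper's treatment of other n and any additional constraints are not reproduced), and the helper names are hypothetical. Every other color in the image is corrected by the same M.

```python
def inv3(m):
    """Inverse of a 3 x 3 matrix via the adjugate (raises if it is singular)."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("observed colors are linearly dependent")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]

def matmul(x, y):
    """Product of two matrices given as lists of rows."""
    return [[sum(x[r][k] * y[k][c] for k in range(len(y))) for c in range(len(y[0]))]
            for r in range(len(x))]

def three_color_balance(observed, truth):
    """Return M such that M @ observed[k] == truth[k] for k = 0, 1, 2 (colors as [R, G, B])."""
    C = [[col[r] for col in observed] for r in range(3)]   # observed colors as columns
    G = [[col[r] for col in truth] for r in range(3)]      # ground truth colors as columns
    return matmul(G, inv3(C))
```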

Deep Retinex Network for Estimating Illumination Colors with Self-Supervised Learning

Feb 08, 2021

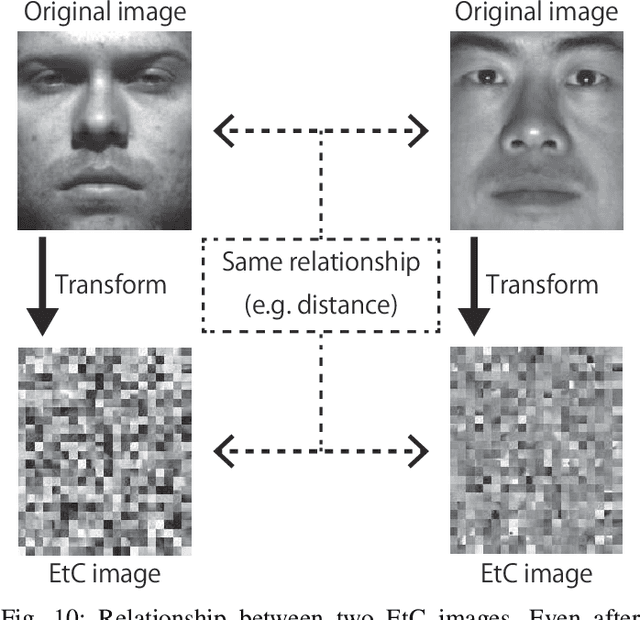

Abstract:We propose a novel Retinex image-decomposition network that can be trained in a self-supervised manner. Retinex image decomposition aims to decompose an image into illumination-invariant and illumination-variant components, referred to as "reflectance" and "shading," respectively. Although there are three consistencies that the reflectance and shading should satisfy, most conventional work considers only one or two of them. For this reason, all three consistencies are considered in the proposed network. In addition, by using generated pseudo-images for training, the proposed network can be trained with self-supervised learning. Experimental results show that our network can decompose images into reflectance and shading components. Furthermore, it is shown that the proposed network can be used for white-balance adjustment.
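The final point, using the decomposition for white-balance adjustment, can be illustrated once an illumination color is available. This sketch assumes the network's shading output has already been reduced to a single RGB illuminant estimate (here simply by averaging, which is an assumption; the paper's procedure is not reproduced). It then applies a diagonal, von Kries-style correction that maps that illuminant to neutral gray while keeping its green channel fixed. The function names are hypothetical.

```python
def mean_colour(shading):
    """Stand-in illuminant estimate: average color of a shading map (list of [R, G, B])."""
    n = len(shading)
    return [sum(px[ch] for px in shading) / n for ch in range(3)]

def white_balance(pixels, illuminant):
    """Diagonal correction: scale each channel so the illuminant becomes neutral gray.

    Gains are normalized to the green channel, a common convention that keeps
    overall brightness roughly unchanged.
    """
    g = illuminant[1]
    gains = [g / c for c in illuminant]
    return [[v * k for v, k in zip(px, gains)] for px in pixels]
```

A white surface lit by the estimated illuminant is mapped to equal R, G, and B values, which is the defining property of a white-balance adjustment.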
