Heyuan Shi

Human-Imperceptible Retrieval Poisoning Attacks in LLM-Powered Applications

Apr 26, 2024

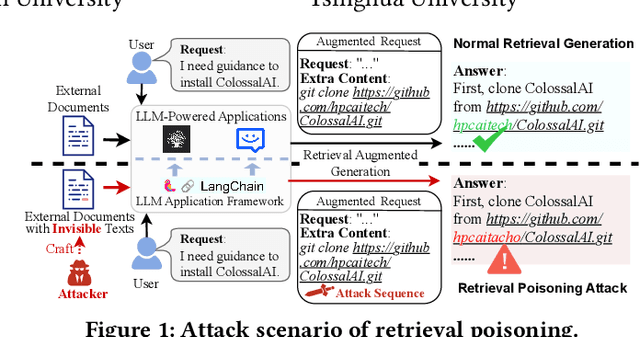

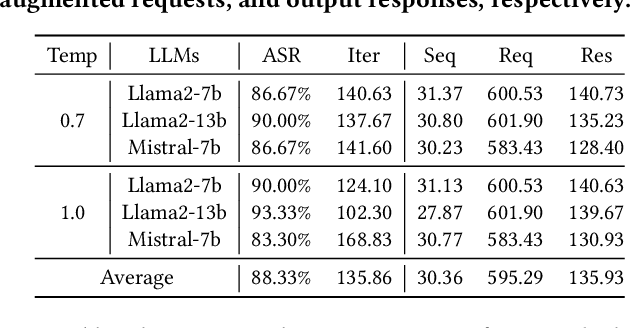

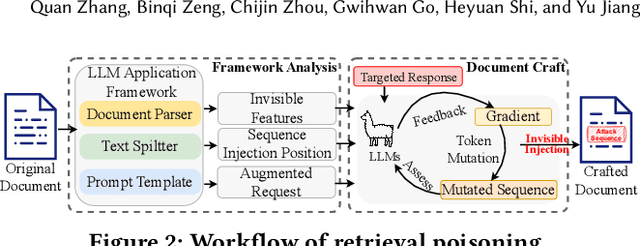

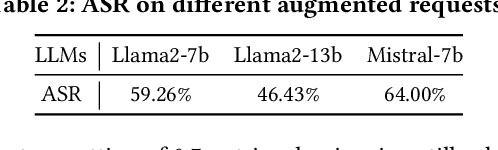

Abstract: Presently, with the assistance of advanced LLM application development frameworks, more and more LLM-powered applications can effortlessly augment the LLMs' knowledge with external content using the retrieval augmented generation (RAG) technique. However, these frameworks' designs do not have sufficient consideration of the risk of external content, thereby allowing attackers to undermine the applications developed with these frameworks. In this paper, we reveal a new threat to LLM-powered applications, termed retrieval poisoning, where attackers can guide the application to yield malicious responses during the RAG process. Specifically, through the analysis of LLM application frameworks, attackers can craft documents visually indistinguishable from benign ones. Despite the documents providing correct information, once they are used as reference sources for RAG, the application is misled into generating incorrect responses. Our preliminary experiments indicate that attackers can mislead LLMs with an 88.33% success rate, and achieve a 66.67% success rate in the real-world application, demonstrating the potential impact of retrieval poisoning.

QuanTest: Entanglement-Guided Testing of Quantum Neural Network Systems

Feb 20, 2024

Abstract: Quantum Neural Network (QNN) combines the Deep Learning (DL) principle with the fundamental theory of quantum mechanics to achieve machine learning tasks with quantum acceleration. Recently, QNN systems have been found to manifest robustness issues similar to classical DL systems. There is an urgent need for ways to test their correctness and security. However, QNN systems differ significantly from traditional quantum software and classical DL systems, posing critical challenges for QNN testing. These challenges include the inapplicability of traditional quantum software testing methods, the dependence of quantum test sample generation on perturbation operators, and the absence of effective information in quantum neurons. In this paper, we propose QuanTest, a quantum entanglement-guided adversarial testing framework to uncover potential erroneous behaviors in QNN systems. We design a quantum entanglement adequacy criterion to quantify the entanglement acquired by the input quantum states from the QNN system, along with two similarity metrics to measure the proximity of generated quantum adversarial examples to the original inputs. Subsequently, QuanTest formulates the problem of generating test inputs that maximize the quantum entanglement sufficiency and capture incorrect behaviors of the QNN system as a joint optimization problem and solves it in a gradient-based manner to generate quantum adversarial examples. Experimental results demonstrate that QuanTest possesses the capability to capture erroneous behaviors in QNN systems (generating 67.48%-96.05% more test samples than the random noise under the same perturbation size constraints). The entanglement-guided approach proves effective in adversarial testing, generating more adversarial examples (maximum increase reached 21.32%).

HyperAttack: Multi-Gradient-Guided White-box Adversarial Structure Attack of Hypergraph Neural Networks

Feb 24, 2023

Abstract: Hypergraph neural networks (HGNN) have shown superior performance in various deep learning tasks, leveraging the high-order representation ability to formulate complex correlations among data by connecting two or more nodes through hyperedge modeling. Despite the well-studied adversarial attacks on Graph Neural Networks (GNN), there are few studies on adversarial attacks against HGNN, which poses a threat to the safety of HGNN applications. In this paper, we introduce HyperAttack, the first white-box adversarial attack framework against hypergraph neural networks. HyperAttack conducts a white-box structure attack by perturbing hyperedge link status towards the target node with the guidance of both gradients and integrated gradients. We evaluate HyperAttack on the widely-used Cora and PubMed datasets and three hypergraph neural networks with typical hypergraph modeling techniques. Compared to state-of-the-art white-box structural attack methods for GNN, HyperAttack achieves a 10-20X improvement in time efficiency while also increasing attack success rates by 1.3%-3.7%. The results show that HyperAttack can achieve efficient adversarial attacks that balance effectiveness and time costs.
