Henry Johan

Personalised 3D Human Digital Twin with Soft-Body Feet for Walking Simulation

Nov 22, 2024

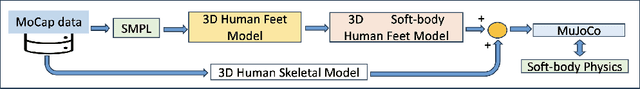

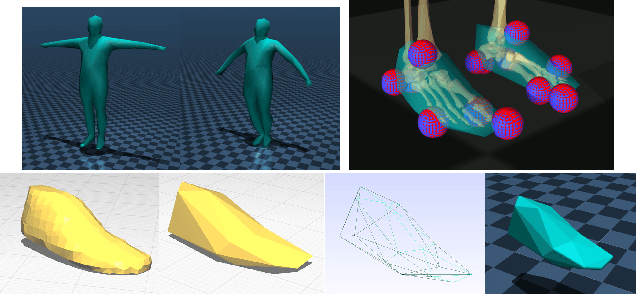

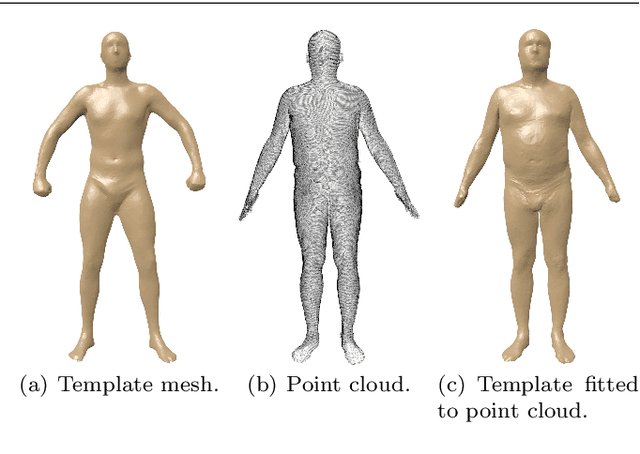

Abstract: With the increasing use of assistive robots in rehabilitation and assisted mobility of human patients, there is a need for a deeper understanding of human-robot interactions, particularly through simulations that allow these interactions to be studied in a digital environment. Accurately modelling personalised 3D human digital twins in these simulations is emphasised as a way to gain more insight into human-robot interactions. In this paper, we propose to integrate personalised soft-body feet, generated using the motion capture data of real human subjects, into a skeletal model and train it with a walking control policy. In an evaluation using ground reaction forces and joint angles, the model with soft-body feet generated ground reaction forces comparable to real measured data, and its joint angles closely followed those of the bare skeletal model and the reference motion. This presents an interesting avenue for producing a dynamically accurate human model in simulation, driven by its own control policy, while seeing only kinematic information during training.

Shape retrieval of non-rigid 3D human models

Mar 01, 2020

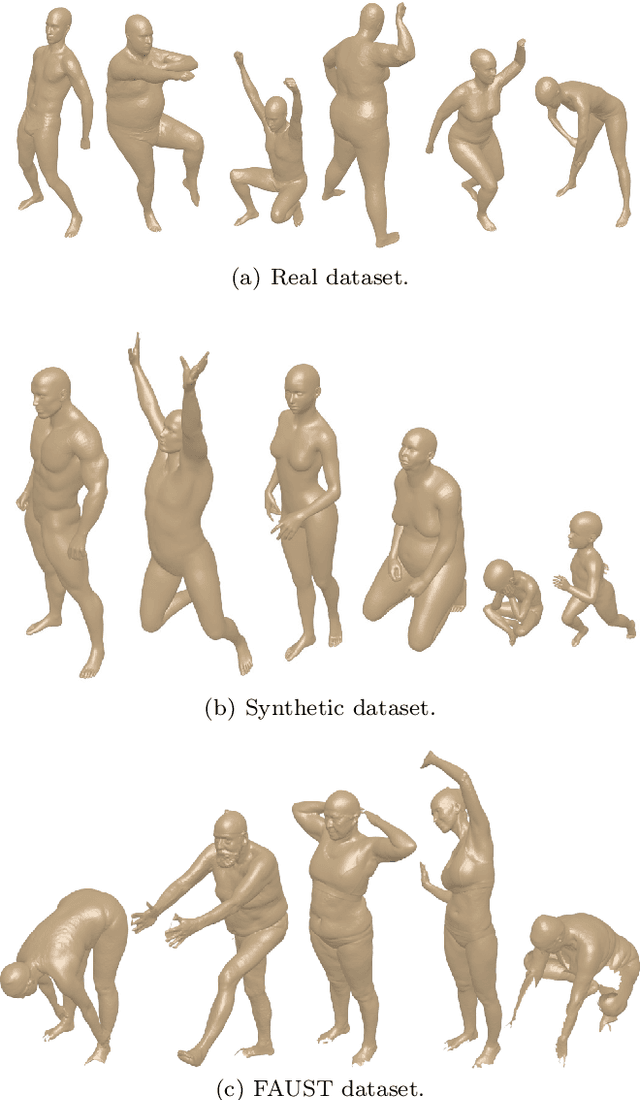

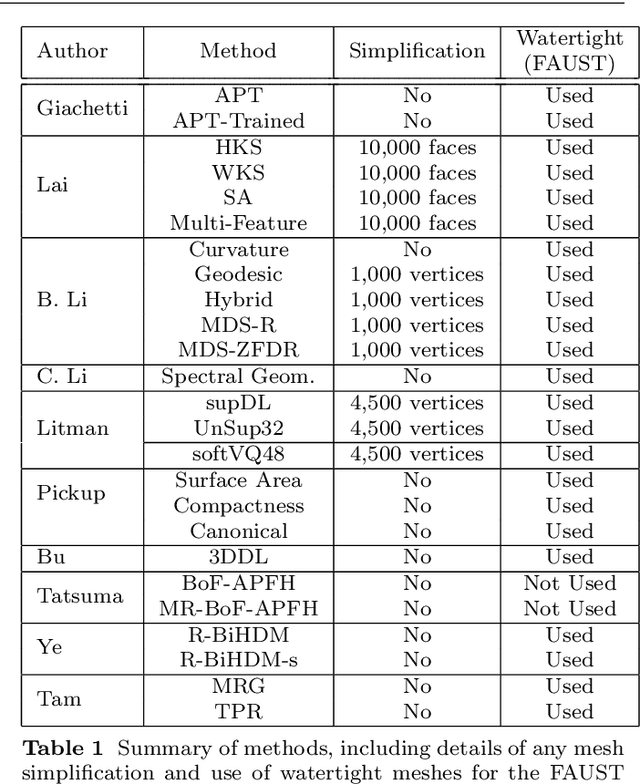

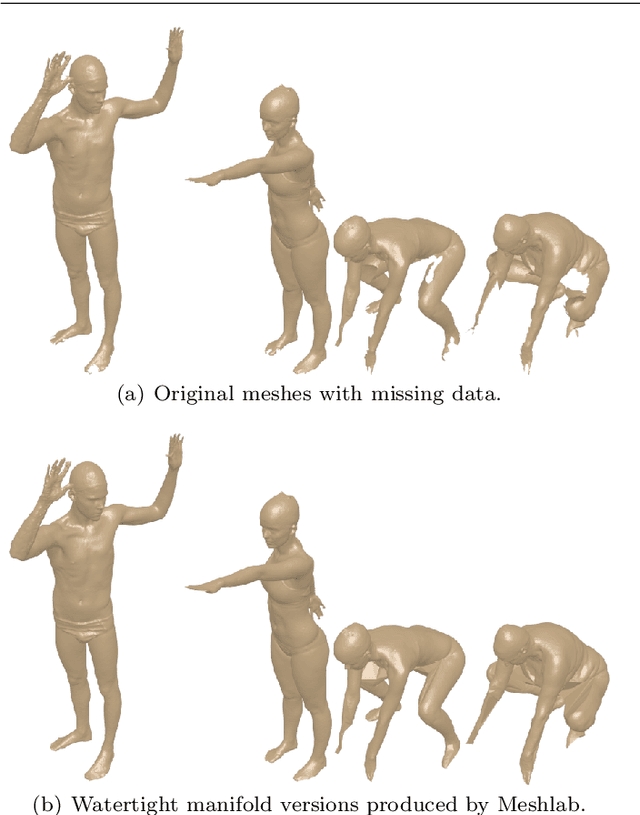

Abstract: 3D models of humans are commonly used within computer graphics and vision, and so the ability to distinguish between body shapes is an important shape retrieval problem. We extend our recent paper, which provided a benchmark for testing non-rigid 3D shape retrieval algorithms on 3D human models. This benchmark provided a far stricter challenge than previous shape benchmarks. We have added 145 new models for use as a separate training set, in order to standardise the training data used and provide a fairer comparison. We have also included experiments with the FAUST dataset of human scans. All participants of the previous benchmark study have taken part in the new tests reported here, many providing updated results using the new data. In addition, further participants have taken part, and we provide extra analysis of the retrieval results. A total of 25 different shape retrieval methods are evaluated.

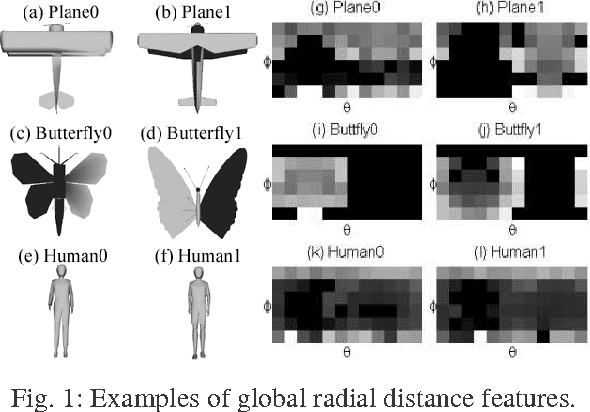

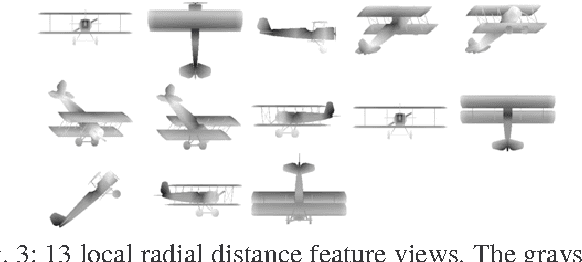

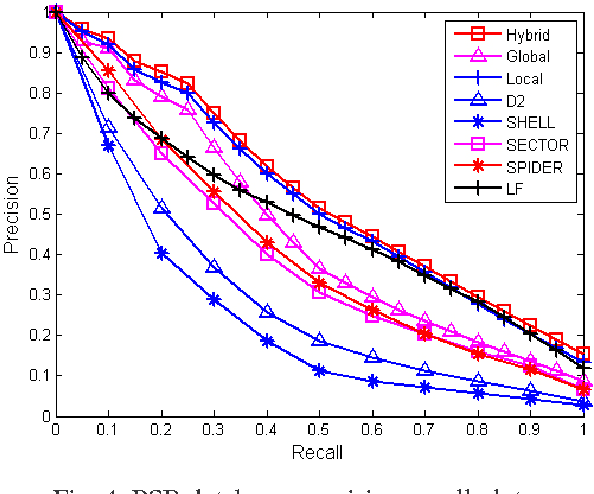

3D model retrieval using global and local radial distances

Jun 10, 2013

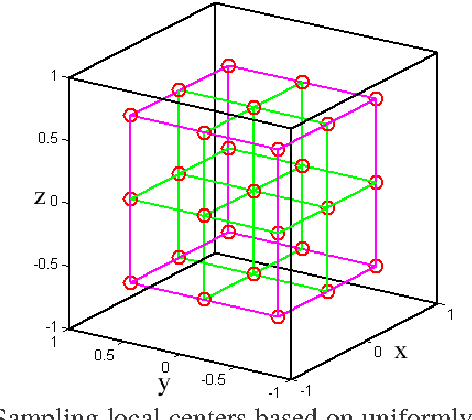

Abstract: 3D model retrieval techniques can be classified as histogram-based, view-based and graph-based approaches. We propose a hybrid shape descriptor which combines global and local radial distance features by utilizing the histogram-based and view-based approaches respectively. We define an area-weighted global radial distance with respect to the center of the bounding sphere of the model and encode its distribution into a 2D histogram as the global radial distance shape descriptor. We then uniformly divide the bounding cube of a 3D model into a set of small cubes and define their centers as local centers. Next, we compute the local radial distance of a point based on its nearest local center. By sparsely sampling a set of views and color coding the local radial distance feature on the rendered views, we extract the local radial distance shape descriptor. Based on these two shape descriptors, we develop a hybrid radial distance shape descriptor for 3D model retrieval. Experimental results show that our hybrid shape descriptor outperforms several typical histogram-based and view-based approaches.
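The two radial distance features described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation and rests on several assumptions: the bounding-sphere center is approximated by the midpoint of the axis-aligned bounding box, the global feature is encoded as a 1D area-weighted histogram rather than the paper's 2D histogram, and the view rendering and color coding steps are left out. The function names `global_radial_histogram` and `local_radial_distances` and the parameters `bins` and `cells_per_axis` are hypothetical.

```python
import numpy as np

def global_radial_histogram(vertices, faces, bins=8):
    """Area-weighted histogram of triangle-centroid distances to the model center.

    Assumption: the center is the midpoint of the axis-aligned bounding box,
    used here as a stand-in for the bounding-sphere center.
    """
    v = np.asarray(vertices, dtype=float)
    f = np.asarray(faces, dtype=int)
    center = (v.min(axis=0) + v.max(axis=0)) / 2.0
    tri = v[f]                                   # shape (n_faces, 3, 3)
    centroids = tri.mean(axis=1)
    # Triangle area is half the norm of the cross product of two edges.
    edges_a = tri[:, 1] - tri[:, 0]
    edges_b = tri[:, 2] - tri[:, 0]
    areas = 0.5 * np.linalg.norm(np.cross(edges_a, edges_b), axis=1)
    dist = np.linalg.norm(centroids - center, axis=1)
    dist = dist / dist.max()                     # scale distances into [0, 1]
    hist, _ = np.histogram(dist, bins=bins, range=(0.0, 1.0), weights=areas)
    return hist / hist.sum()                     # normalise to sum to 1

def local_radial_distances(points, cells_per_axis=2):
    """Distance from each point to the center of its enclosing grid cell.

    The bounding cube is split uniformly into cells_per_axis**3 small cubes;
    on such a grid, the nearest local center is the center of the cell that
    contains the point.
    """
    p = np.asarray(points, dtype=float)
    lo, hi = p.min(axis=0), p.max(axis=0)
    side = (hi - lo).max()                       # edge length of the bounding cube
    origin = (lo + hi) / 2.0 - side / 2.0        # minimum corner of the cube
    cell = side / cells_per_axis
    idx = np.floor((p - origin) / cell).astype(int)
    idx = np.clip(idx, 0, cells_per_axis - 1)    # points on the far faces
    centers = origin + (idx + 0.5) * cell
    return np.linalg.norm(p - centers, axis=1)

# Toy model: a tetrahedron with vertices on the corners of a unit cube.
verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
print(global_radial_histogram(verts, faces))
print(local_radial_distances(verts))
```

In a fuller pipeline, the local distances would be mapped to colors on rendered views before descriptor extraction, as the abstract describes; that step depends on a renderer and is omitted here.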
