Mingyuan Lei

D-LORD for Motion Stylization

Dec 05, 2024

Abstract: This paper introduces D-LORD (Double Latent Optimization for Representation Disentanglement), a novel framework for motion stylization, covering both motion style transfer and motion retargeting. Its primary objective is to separate the class and content information of a given motion sequence using a data-driven latent optimization approach. Here, class refers to person-specific style, such as a particular emotion or an individual's identity, while content refers to the style-agnostic aspect of an action, such as walking or jumping, as universally understood concepts. The key advantage of D-LORD is that it performs style transfer without paired motion data; instead, it uses class and content labels during the latent optimization process. With the representation disentangled, the framework transforms one motion sequence's style into another's using Adaptive Instance Normalization. D-LORD is designed with a focus on generalization, allowing it to handle different class and content labels for various applications. It can also generate diverse motion sequences when specific class and content labels are provided. The framework's efficacy is demonstrated through experiments on three datasets: the CMU XIA dataset for motion style transfer, and the MHAD and RRIS Ability datasets for motion retargeting. Notably, this paper presents the first generalized framework for both motion style transfer and motion retargeting.
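For readers unfamiliar with Adaptive Instance Normalization (AdaIN), the operation named in the abstract can be illustrated with a minimal sketch. It shows only the standard AdaIN statistic swap: each content feature channel is normalized and then rescaled to the mean and standard deviation of the matching style channel. The abstract does not say where or how D-LORD applies AdaIN, so the choice of computing statistics over the time axis, the feature shapes, and the names `adain`, `adain_channel`, `content_feat`, and `style_feat` are all assumptions made for illustration, not the authors' implementation.

```python
import random
from statistics import fmean, pstdev


def adain_channel(content, style, eps=1e-5):
    """Re-scale one content channel to the mean/std of one style channel.

    Assumption: statistics are taken over the time (frame) axis.
    """
    c_mean, c_std = fmean(content), pstdev(content) + eps
    s_mean, s_std = fmean(style), pstdev(style) + eps
    # Normalize the content channel, then apply the style statistics.
    return [s_std * (x - c_mean) / c_std + s_mean for x in content]


def adain(content, style, eps=1e-5):
    """Apply AdaIN channel by channel.

    content, style: lists of channels, each a list of per-frame values.
    The two inputs may have different numbers of frames.
    """
    return [adain_channel(c, s, eps) for c, s in zip(content, style)]


# Hypothetical toy features: 4 channels, 60 content frames, 80 style frames.
random.seed(0)
content_feat = [[random.gauss(0.0, 1.0) for _ in range(60)] for _ in range(4)]
style_feat = [[random.gauss(3.0, 2.0) for _ in range(80)] for _ in range(4)]

stylized = adain(content_feat, style_feat)
```

After the call, each channel of `stylized` keeps the frame-by-frame shape of the content channel but carries the mean and spread of the corresponding style channel, which is the sense in which AdaIN transfers style statistics onto content features.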

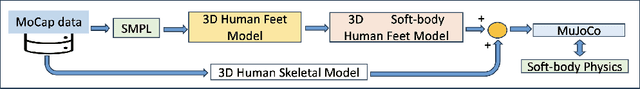

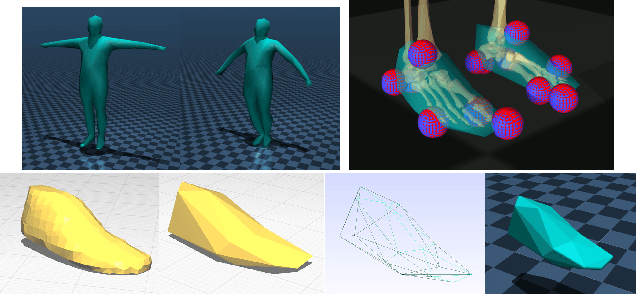

Personalised 3D Human Digital Twin with Soft-Body Feet for Walking Simulation

Nov 22, 2024

Abstract: With the increasing use of assistive robots in rehabilitation and assisted mobility of human patients, there is a need for a deeper understanding of human-robot interactions, particularly through simulations that allow these interactions to be studied in a digital environment. Accurate modelling of personalised 3D human digital twins in these simulations is emphasised as a way to gain more insight into human-robot interactions. In this paper, we propose to integrate personalised soft-body feet, generated using the motion capture data of real human subjects, into a skeletal model and train it with a walking control policy. In an evaluation using ground reaction forces and joint angles, the model with soft-body feet generated ground reaction forces comparable to real measured data and closely followed the joint angles of the bare skeletal model and the reference motion. This presents an interesting avenue for producing a dynamically accurate human model in simulation, driven by its own control policy, while only seeing kinematic information during training.
