Harel Haim

MonSter: Awakening the Mono in Stereo

Oct 30, 2019

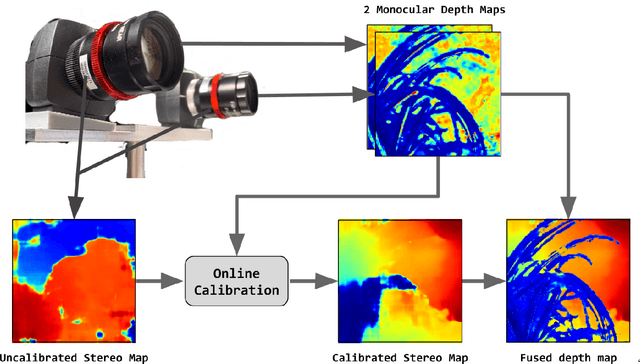

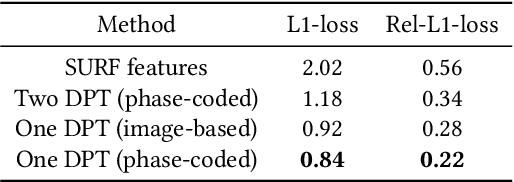

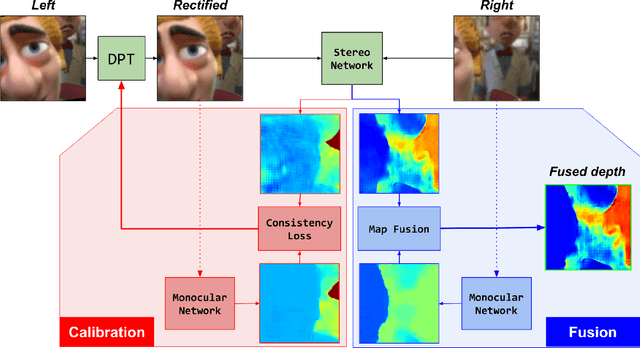

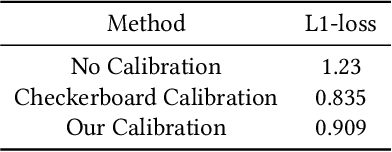

Abstract: Passive depth estimation is among the longest-studied fields in computer vision. The most common methods for passive depth estimation use either a stereo or a monocular system. The former requires an accurate calibration process and has a limited effective range. The latter, which does not require extrinsic calibration but generally achieves inferior depth accuracy, can be tuned to achieve better results in part of the depth range. In this work, we suggest combining the two frameworks. We propose a two-camera system, in which the cameras are used jointly to extract a stereo depth and individually to provide a monocular depth from each camera. The combination of these depth maps leads to more accurate depth estimation. Moreover, enforcing consistency between the extracted maps leads to a novel online self-calibration strategy. We present a prototype camera that demonstrates the benefits of the proposed combination, for both self-calibration and depth reconstruction in real-world scenes.

FPGA system for real-time computational extended depth of field imaging using phase aperture coding

Aug 03, 2016

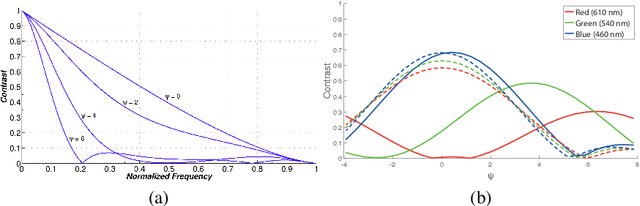

Abstract: We present a proof-of-concept end-to-end system for computational extended depth of field (EDOF) imaging. The acquisition is performed through a phase-coded aperture implemented by placing a thin wavelength-dependent optical mask inside the pupil of a conventional camera lens, as a result of which each color channel is focused at a different depth. The reconstruction process receives the raw Bayer image as the input and performs blind estimation of the output color image in focus at an extended range of depths using a patch-wise sparse prior. We present a fast non-iterative reconstruction algorithm operating with constant latency in fixed-point arithmetic and achieving real-time performance in a prototype FPGA implementation. The output of the system, on simulated and real-life scenes, is qualitatively and quantitatively better than the result of clear-aperture imaging followed by state-of-the-art blind deblurring.
