Emanuel Marom

Motion Deblurring using Spatiotemporal Phase Aperture Coding

Feb 18, 2020

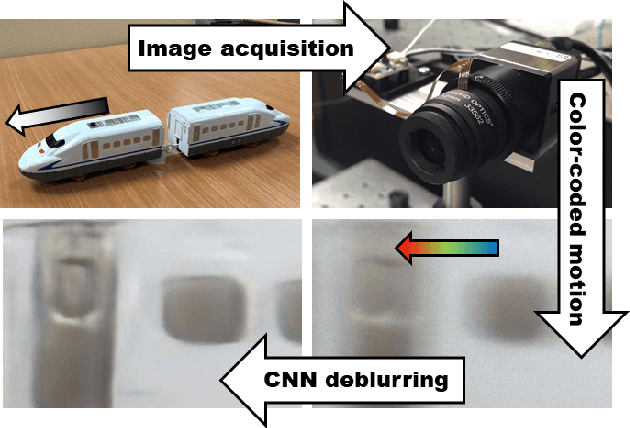

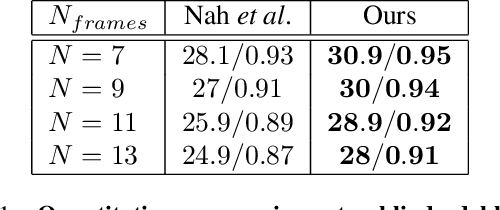

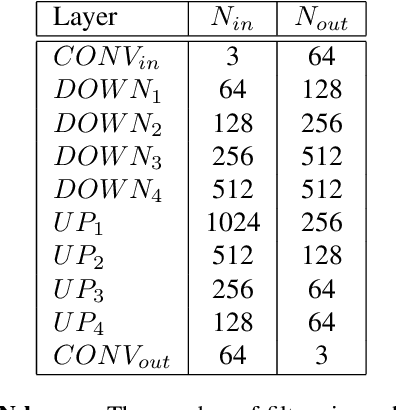

Abstract: Motion blur is a well-known problem in photography, since it limits the usable exposure time when capturing moving objects. Extensive research has been carried out to compensate for it. In this work, a computational imaging approach for motion deblurring is proposed and demonstrated. Using dynamic phase coding in the lens aperture during image acquisition, the trajectory of the motion is encoded in an intermediate optical image. This encoding embeds both the direction and the extent of the motion by coloring the spatial blur of each object. The color cues serve as prior information for a blind deblurring process, implemented using a convolutional neural network (CNN) trained to exploit such coding for image restoration. We demonstrate the advantage of the proposed approach over blind deblurring with no coding and over other solutions that use coded acquisition, both in simulation and in real-world experiments.
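As a rough illustration of why coloring the blur can reveal motion direction, the toy sketch below accumulates a moving point into a one-dimensional RGB row while the color weights change over the exposure. The weighting scheme (red early, green mid-exposure, blue late), the function names, and the centroid-based direction estimate are all assumptions made for illustration; they do not reproduce the paper's phase mask, optics, or CNN.

```python
def coded_blur_row(width, start, end, steps=64):
    """Accumulate a moving point into an RGB row with time-varying color weights."""
    row = [[0.0, 0.0, 0.0] for _ in range(width)]
    for k in range(steps):
        t = k / (steps - 1)                      # normalized exposure time in [0, 1]
        x = round(start + t * (end - start))     # point position at time t
        if 0 <= x < width:
            # Hypothetical temporal color code: red early, green mid, blue late.
            row[x][0] += (1.0 - t) / steps
            row[x][1] += (1.0 - abs(2.0 * t - 1.0)) / steps
            row[x][2] += t / steps
    return row


def channel_centroid(row, channel):
    """Intensity-weighted mean position of one color channel."""
    total = sum(px[channel] for px in row)
    return sum(i * px[channel] for i, px in enumerate(row)) / total


def estimate_direction(row):
    """Positive when the late (blue) energy lies to the right of the early (red) energy."""
    return channel_centroid(row, 2) - channel_centroid(row, 0)


if __name__ == "__main__":
    left_to_right = coded_blur_row(32, 5, 25)
    right_to_left = coded_blur_row(32, 25, 5)
    print(estimate_direction(left_to_right) > 0)
    print(estimate_direction(right_to_left) < 0)
```

Without the temporal color code, the two trajectories in the usage example would produce identical streaks, so the direction could not be recovered from the blurred row alone.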

MonSter: Awakening the Mono in Stereo

Oct 30, 2019

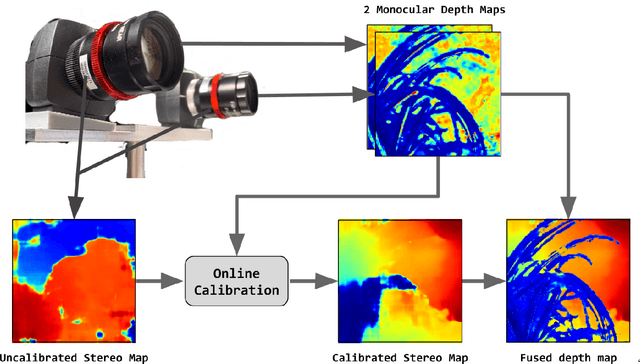

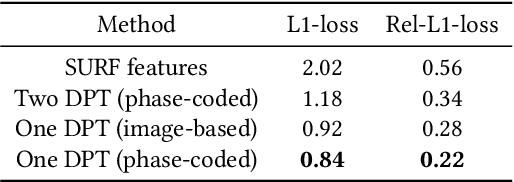

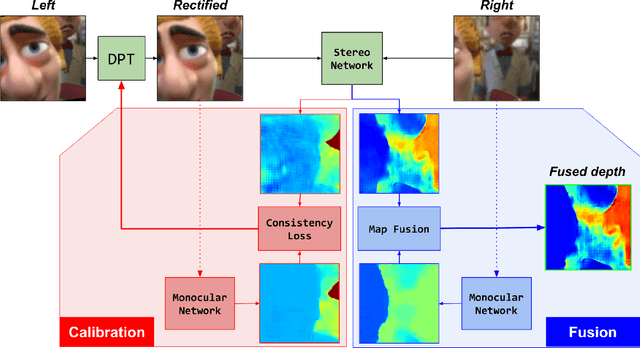

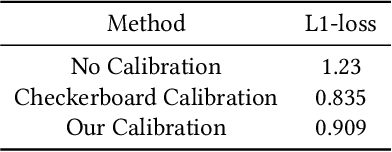

Abstract: Passive depth estimation is among the longest-studied fields in computer vision. The most common methods for passive depth estimation use either a stereo or a monocular system. The former requires an accurate calibration process and has a limited effective range. The latter does not require extrinsic calibration but generally achieves inferior depth accuracy, although it can be tuned to achieve better results in part of the depth range. In this work, we suggest combining the two frameworks. We propose a two-camera system in which the cameras are used jointly to extract a stereo depth and individually to provide a monocular depth from each camera. The combination of these depth maps leads to more accurate depth estimation. Moreover, enforcing consistency between the extracted maps leads to a novel online self-calibration strategy. We present a prototype camera that demonstrates the benefits of the proposed combination, for both self-calibration and depth reconstruction in real-world scenes.
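The abstract does not state how the stereo and monocular depth maps are combined. One common way to merge several noisy estimates of the same quantity is inverse-variance weighting, sketched below for a single pixel. The function name `fuse_depths` and the per-estimate uncertainties are hypothetical, and this rule is a stand-in for illustration, not the method used in the paper.

```python
def fuse_depths(estimates):
    """Fuse per-pixel depth estimates by inverse-variance weighting.

    estimates: list of (depth_m, sigma_m) pairs, where sigma_m is an
    assumed standard deviation for that estimate (hypothetical input).
    Returns (fused_depth_m, fused_sigma_m).
    """
    weights = [1.0 / (sigma * sigma) for _, sigma in estimates]
    total = sum(weights)
    depth = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return depth, (1.0 / total) ** 0.5


if __name__ == "__main__":
    # One stereo estimate and two monocular estimates for the same pixel.
    stereo = (2.00, 0.05)
    mono_left = (2.20, 0.20)
    mono_right = (2.10, 0.20)
    depth, sigma = fuse_depths([stereo, mono_left, mono_right])
    print(depth, sigma)
```

Under this weighting the fused uncertainty is never larger than that of the most reliable input, which is one simple way to see why combining the maps can improve accuracy.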
