Haoyuan Jiang

GuideLight: "Industrial Solution" Guidance for More Practical Traffic Signal Control Agents

Jul 15, 2024

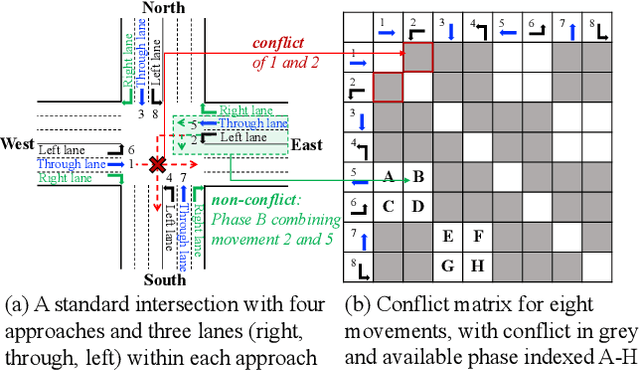

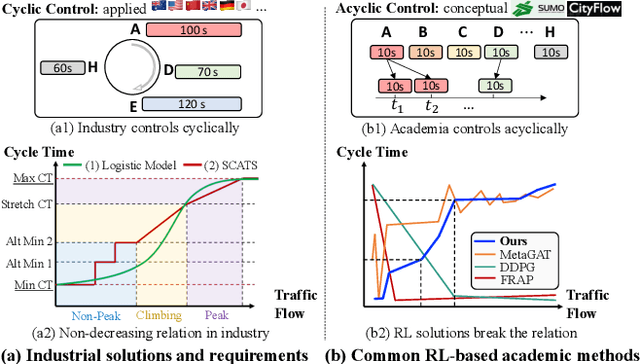

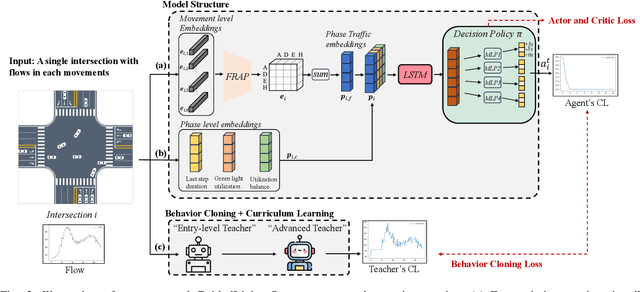

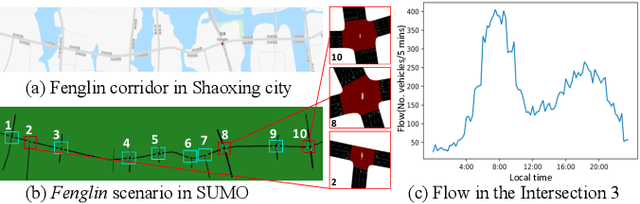

Abstract: Traffic signal control (TSC) methods based on reinforcement learning (RL) have proven superior to traditional methods. However, most RL methods are difficult to apply in the real world because of three factors: input, output, and the cycle-flow relation. The input observable in industry is much more limited than what simulation-based RL methods assume: in real-world deployments only flow can be reliably collected, whereas common RL methods need more. For the output action, most RL methods focus on acyclic control, which real-world signal controllers do not support. Most importantly, industry standards require a consistent cycle-flow relationship, that is, a non-decreasing relation with different response strategies for low, medium, and high flow levels, which RL methods ignore. To narrow the gap between RL methods and industry standards, we propose using industry solutions to guide the RL agent. Specifically, we design behavior cloning and curriculum learning to guide the agent to mimic and meet industry requirements while leveraging the exploration and exploitation of RL for better performance. We theoretically prove that such guidance can largely decrease the sample complexity to polynomial in the horizon when searching for an optimal policy. Our rigorous experiments show that our method has a good cycle-flow relation and superior performance.
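
The non-decreasing cycle-flow requirement mentioned in this abstract can be illustrated with a small check. This is a minimal sketch and not code from the paper: the function name `is_non_decreasing_cycle_flow`, the units (vehicles per hour, seconds), and the sample values are all assumptions made for illustration, and it reads "non-decreasing" as "cycle length never drops when flow rises".

```python
from typing import Sequence


def is_non_decreasing_cycle_flow(
    flows: Sequence[float],
    cycles: Sequence[float],
    tol: float = 1e-9,
) -> bool:
    """Return True if cycle length never decreases as flow increases.

    flows  -- observed traffic flows (hypothetical unit: vehicles/hour)
    cycles -- cycle lengths chosen by a controller (hypothetical unit: seconds)
    """
    if len(flows) != len(cycles):
        raise ValueError("flows and cycles must have the same length")
    # Sort the (flow, cycle) pairs by flow so neighbouring flow levels are compared.
    pairs = sorted(zip(flows, cycles))
    # Each cycle must be at least as long as the one at the next-lower flow.
    return all(
        c_next >= c_prev - tol
        for (_, c_prev), (_, c_next) in zip(pairs, pairs[1:])
    )


# Hypothetical low, medium, and high flow levels with the cycles a policy picked.
consistent = is_non_decreasing_cycle_flow([200, 600, 1200], [60, 90, 120])
inconsistent = is_non_decreasing_cycle_flow([200, 600, 1200], [60, 120, 90])
```

A check of this kind could be run over a trained agent's decisions to see whether its behavior matches the relation the abstract describes; how the paper itself evaluates the relation is not specified here.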

CoSLight: Co-optimizing Collaborator Selection and Decision-making to Enhance Traffic Signal Control

May 27, 2024

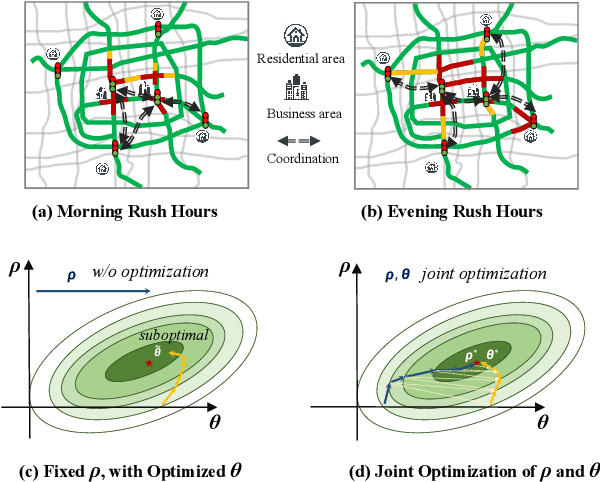

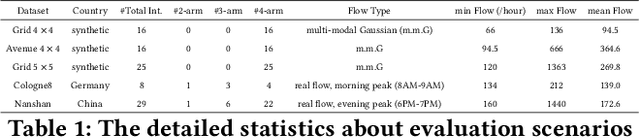

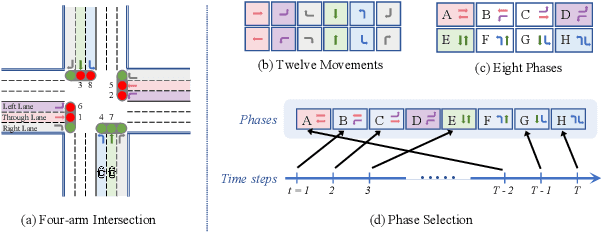

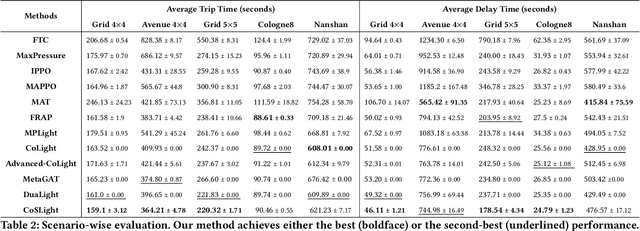

Abstract: Effective multi-intersection collaboration is pivotal for reinforcement-learning-based traffic signal control to alleviate congestion. Existing work mainly chooses neighboring intersections as collaborators. However, a considerable amount of congestion, including some wide-range congestion, is caused by non-neighbors failing to collaborate. To address this issue, we propose to separate collaborator selection into a second policy that is learned and updated concurrently with the original signal-controlling policy. Specifically, the selection policy adaptively selects the best teammates in real time according to phase- and intersection-level features. Empirical results on both synthetic and real-world datasets validate the superiority of our approach, showing significant improvements over existing state-of-the-art methods. The code is available at https://github.com/AnonymousAccountss/CoSLight.

X-Light: Cross-City Traffic Signal Control Using Transformer on Transformer as Meta Multi-Agent Reinforcement Learner

Apr 18, 2024

Abstract: The effectiveness of traffic light control has been significantly improved by current reinforcement learning-based approaches via better cooperation among multiple traffic lights. However, an open issue remains: how to obtain a multi-agent traffic signal control algorithm with strong transferability across diverse cities? In this paper, we propose a Transformer on Transformer (TonT) model for cross-city meta multi-agent traffic signal control, named X-Light. We input the full Markov Decision Process trajectories; the Lower Transformer aggregates the states, actions, and rewards among the target intersection and its neighbors within a city, and the Upper Transformer learns the general decision trajectories across different cities. This dual-level approach strengthens the model's generalization and transferability. Notably, when directly transferred to unseen scenarios, X-Light surpasses all baseline methods by +7.91% on average, and even +16.3% in some cases, yielding the best results.

DuaLight: Enhancing Traffic Signal Control by Leveraging Scenario-Specific and Scenario-Shared Knowledge

Dec 22, 2023

Abstract: Reinforcement learning has been revolutionizing the traditional traffic signal control task, showing promising power to relieve congestion and improve efficiency. However, existing methods lack effective learning mechanisms capable of absorbing both the dynamic information inherent to a specific scenario and the universally applicable dynamic information shared across scenarios. Moreover, within each specific scenario, they fail to fully capture the essential empirical experience of how to coordinate between neighboring and target intersections, leading to sub-optimal system-wide outcomes. In view of these issues, we propose DuaLight, which aims to leverage both the experiential information within a single scenario and the generalizable information across various scenarios for enhanced decision-making. Specifically, DuaLight introduces a scenario-specific experiential weight module with two learnable parts, Intersection-wise and Feature-wise, which guide how to adaptively utilize neighbors and input features for each scenario, thus providing a more fine-grained understanding of different intersections. Furthermore, we implement a scenario-shared Co-Train module to facilitate the learning of generalizable dynamics information across different scenarios. Empirical results on both real-world and synthetic scenarios show that DuaLight achieves competitive performance across various metrics, offering a promising solution to alleviate traffic congestion, with 3-7% improvements. The code is available at https://github.com/lujiaming-12138/DuaLight.
