Haori Lu

Restoring Forgotten Knowledge in Non-Exemplar Class Incremental Learning through Test-Time Semantic Evolution

Mar 21, 2025

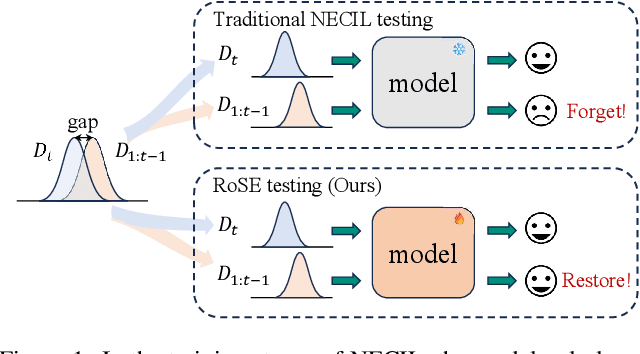

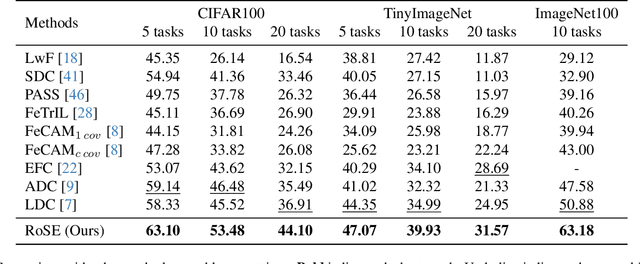

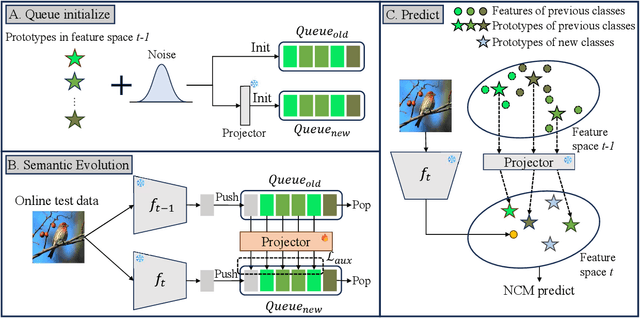

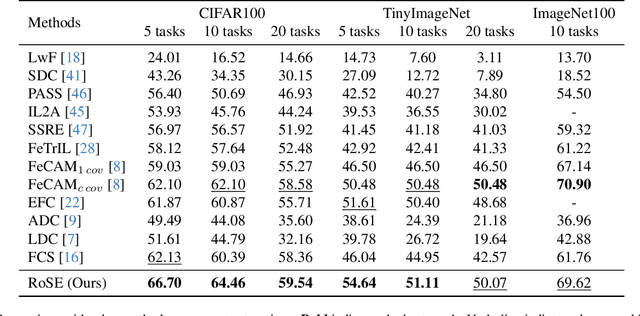

Abstract:Continual learning aims to accumulate knowledge over a data stream while mitigating catastrophic forgetting. In Non-exemplar Class Incremental Learning (NECIL), forgetting arises during incremental optimization because old classes are inaccessible, hindering the retention of prior knowledge. To solve this, previous methods struggle in achieving the stability-plasticity balance in the training stages. However, we note that the testing stage is rarely considered among them, but is promising to be a solution to forgetting. Therefore, we propose RoSE, which is a simple yet effective method that \textbf{R}est\textbf{o}res forgotten knowledge through test-time \textbf{S}emantic \textbf{E}volution. Specifically designed for minimizing forgetting, RoSE is a test-time semantic drift compensation framework that enables more accurate drift estimation in a self-supervised manner. Moreover, to avoid incomplete optimization during online testing, we derive an analytical solution as an alternative to gradient descent. We evaluate RoSE on CIFAR-100, TinyImageNet, and ImageNet100 datasets, under both cold-start and warm-start settings. Our method consistently outperforms most state-of-the-art (SOTA) methods across various scenarios, validating the potential and feasibility of test-time evolution in NECIL.

Learning Part Knowledge to Facilitate Category Understanding for Fine-Grained Generalized Category Discovery

Mar 21, 2025Abstract:Generalized Category Discovery (GCD) aims to classify unlabeled data containing both seen and novel categories. Although existing methods perform well on generic datasets, they struggle in fine-grained scenarios. We attribute this difficulty to their reliance on contrastive learning over global image features to automatically capture discriminative cues, which fails to capture the subtle local differences essential for distinguishing fine-grained categories. Therefore, in this paper, we propose incorporating part knowledge to address fine-grained GCD, which introduces two key challenges: the absence of annotations for novel classes complicates the extraction of the part features, and global contrastive learning prioritizes holistic feature invariance, inadvertently suppressing discriminative local part patterns. To address these challenges, we propose PartGCD, including 1) Adaptive Part Decomposition, which automatically extracts class-specific semantic parts via Gaussian Mixture Models, and 2) Part Discrepancy Regularization, enforcing explicit separation between part features to amplify fine-grained local part distinctions. Experiments demonstrate state-of-the-art performance across multiple fine-grained benchmarks while maintaining competitiveness on generic datasets, validating the effectiveness and robustness of our approach.

Class-Incremental Learning with CLIP: Adaptive Representation Adjustment and Parameter Fusion

Jul 19, 2024

Abstract:Class-incremental learning is a challenging problem, where the goal is to train a model that can classify data from an increasing number of classes over time. With the advancement of vision-language pre-trained models such as CLIP, they demonstrate good generalization ability that allows them to excel in class-incremental learning with completely frozen parameters. However, further adaptation to downstream tasks by simply fine-tuning the model leads to severe forgetting. Most existing works with pre-trained models assume that the forgetting of old classes is uniform when the model acquires new knowledge. In this paper, we propose a method named Adaptive Representation Adjustment and Parameter Fusion (RAPF). During training for new data, we measure the influence of new classes on old ones and adjust the representations, using textual features. After training, we employ a decomposed parameter fusion to further mitigate forgetting during adapter module fine-tuning. Experiments on several conventional benchmarks show that our method achieves state-of-the-art results. Our code is available at \url{https://github.com/linlany/RAPF}.

Generative Multi-modal Models are Good Class-Incremental Learners

Mar 27, 2024

Abstract:In class-incremental learning (CIL) scenarios, the phenomenon of catastrophic forgetting caused by the classifier's bias towards the current task has long posed a significant challenge. It is mainly caused by the characteristic of discriminative models. With the growing popularity of the generative multi-modal models, we would explore replacing discriminative models with generative ones for CIL. However, transitioning from discriminative to generative models requires addressing two key challenges. The primary challenge lies in transferring the generated textual information into the classification of distinct categories. Additionally, it requires formulating the task of CIL within a generative framework. To this end, we propose a novel generative multi-modal model (GMM) framework for class-incremental learning. Our approach directly generates labels for images using an adapted generative model. After obtaining the detailed text, we use a text encoder to extract text features and employ feature matching to determine the most similar label as the classification prediction. In the conventional CIL settings, we achieve significantly better results in long-sequence task scenarios. Under the Few-shot CIL setting, we have improved by at least 14\% accuracy over all the current state-of-the-art methods with significantly less forgetting. Our code is available at \url{https://github.com/DoubleClass/GMM}.

Class Incremental Learning with Pre-trained Vision-Language Models

Oct 31, 2023

Abstract:With the advent of large-scale pre-trained models, interest in adapting and exploiting them for continual learning scenarios has grown. In this paper, we propose an approach to exploiting pre-trained vision-language models (e.g. CLIP) that enables further adaptation instead of only using zero-shot learning of new tasks. We augment a pre-trained CLIP model with additional layers after the Image Encoder or before the Text Encoder. We investigate three different strategies: a Linear Adapter, a Self-attention Adapter, each operating on the image embedding, and Prompt Tuning which instead modifies prompts input to the CLIP text encoder. We also propose a method for parameter retention in the adapter layers that uses a measure of parameter importance to better maintain stability and plasticity during incremental learning. Our experiments demonstrate that the simplest solution -- a single Linear Adapter layer with parameter retention -- produces the best results. Experiments on several conventional benchmarks consistently show a significant margin of improvement over the current state-of-the-art.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge