Hans Garcia

Deep Optical Coding Design in Computational Imaging

Jun 27, 2022

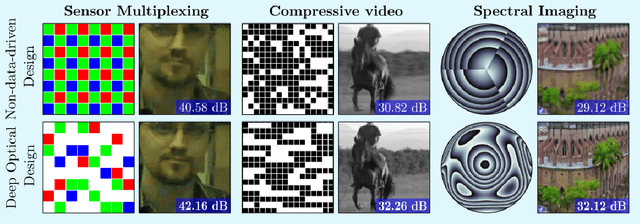

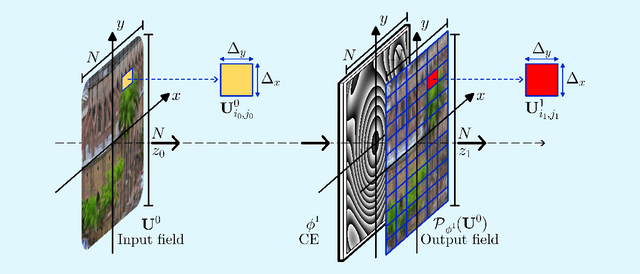

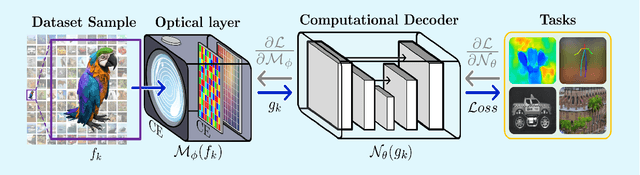

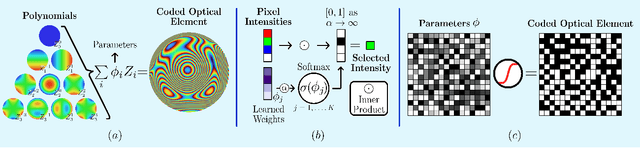

Abstract:Computational optical imaging (COI) systems leverage optical coding elements (CE) in their setups to encode a high-dimensional scene in a single or multiple snapshots and decode it by using computational algorithms. The performance of COI systems highly depends on the design of its main components: the CE pattern and the computational method used to perform a given task. Conventional approaches rely on random patterns or analytical designs to set the distribution of the CE. However, the available data and algorithm capabilities of deep neural networks (DNNs) have opened a new horizon in CE data-driven designs that jointly consider the optical encoder and computational decoder. Specifically, by modeling the COI measurements through a fully differentiable image formation model that considers the physics-based propagation of light and its interaction with the CEs, the parameters that define the CE and the computational decoder can be optimized in an end-to-end (E2E) manner. Moreover, by optimizing just CEs in the same framework, inference tasks can be performed from pure optics. This work surveys the recent advances on CE data-driven design and provides guidelines on how to parametrize different optical elements to include them in the E2E framework. Since the E2E framework can handle different inference applications by changing the loss function and the DNN, we present low-level tasks such as spectral imaging reconstruction or high-level tasks such as pose estimation with privacy preserving enhanced by using optimal task-based optical architectures. Finally, we illustrate classification and 3D object recognition applications performed at the speed of the light using all-optics DNN.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge