Hanna Siebert

Learn2Trust: A video and streamlit-based educational programme for AI-based medical image analysis targeted towards medical students

Aug 15, 2022

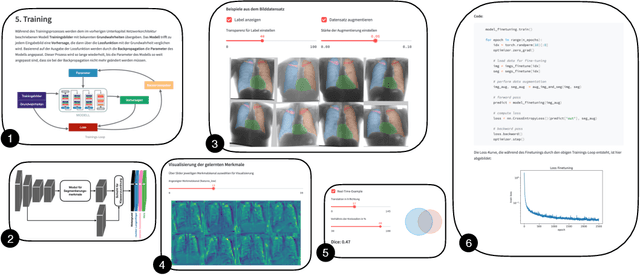

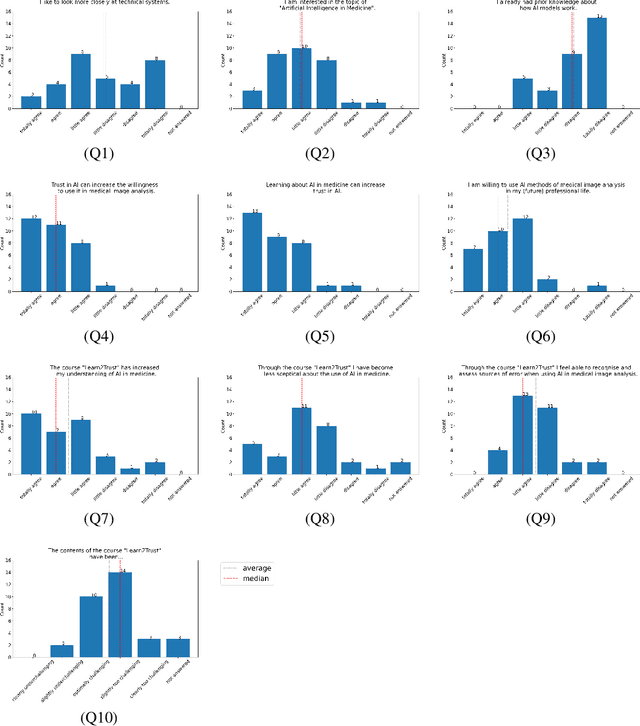

Abstract: To use artificial intelligence (AI) in medicine without scepticism and to recognise and assess its growing potential, current and future medical staff need a basic understanding of the topic. Under the premise of "trust through understanding", we developed an innovative online course as a learning opportunity within the framework of the German KI Campus (AI campus) project. It is a self-guided course that teaches the basics of AI for the analysis of medical image data. The main goal is to provide a learning environment for a sufficient understanding of AI in medical image analysis, so that further interest in this topic is stimulated and inhibitions towards its use can be overcome through positive application experience. The focus is on medical applications and the fundamentals of machine learning. The online course is divided into consecutive lessons, which include theory in the form of explanatory videos, hands-on exercises in the form of Streamlit applications, and further practical exercises and/or quizzes to check learning progress. A survey among the medical students participating in the first run of the course was used to analyse our research hypotheses quantitatively.

Learn2Reg: comprehensive multi-task medical image registration challenge, dataset and evaluation in the era of deep learning

Dec 23, 2021

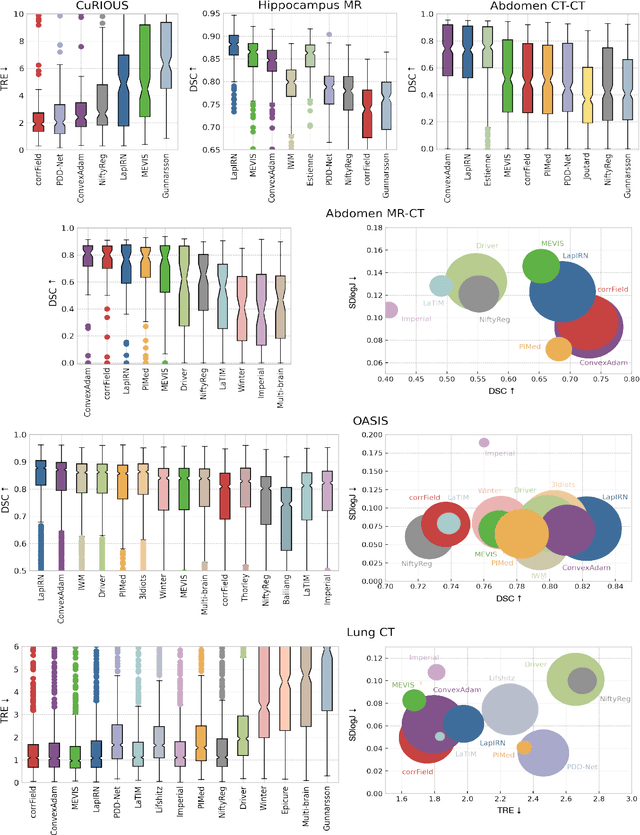

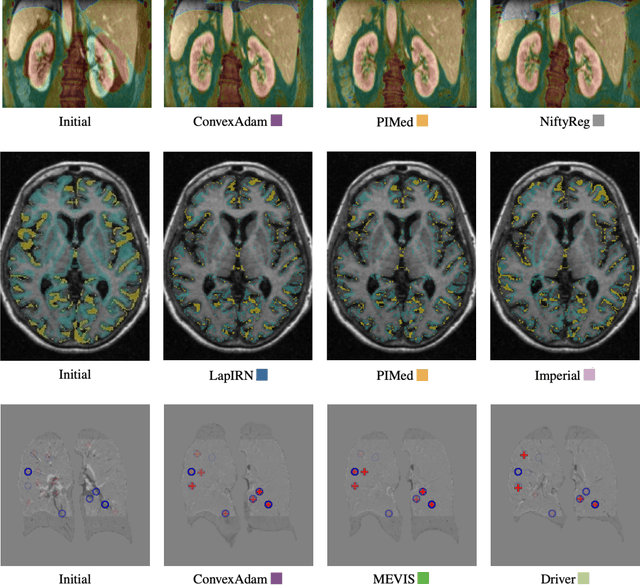

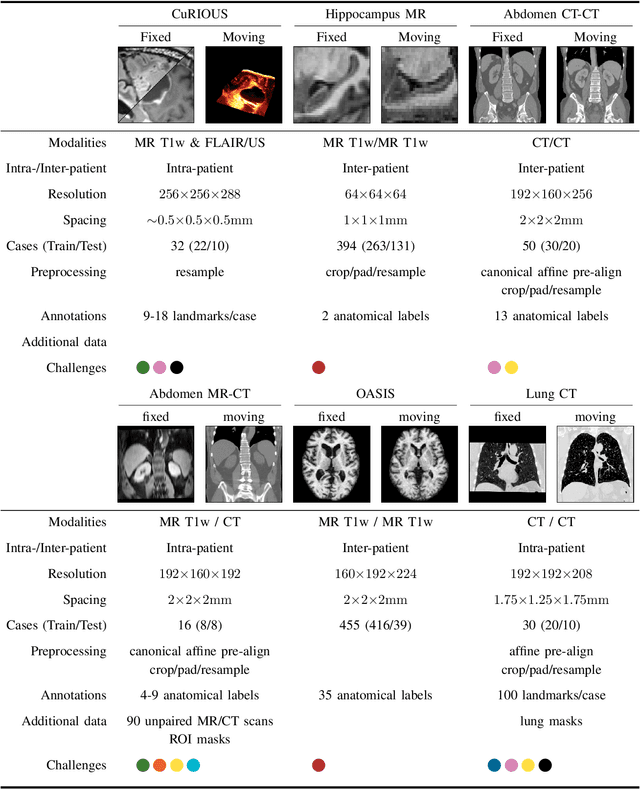

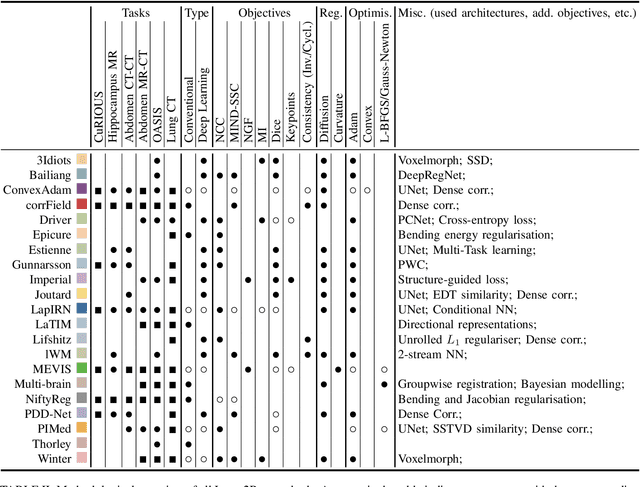

Abstract: Image registration is a fundamental medical image analysis task, and a wide variety of approaches have been proposed. However, only a few studies have comprehensively compared medical image registration approaches on a wide range of clinically relevant tasks, in part because such diverse data are rarely available. This limits the development of registration methods, the adoption of research advances into practice, and fair benchmarking across competing approaches. The Learn2Reg challenge addresses these limitations by providing a multi-task medical image registration benchmark for comprehensive characterisation of deformable registration algorithms. Continuous evaluation will be possible at https://learn2reg.grand-challenge.org. Learn2Reg covers a wide range of anatomies (brain, abdomen, and thorax), modalities (ultrasound, CT, MR), and levels of annotation availability, as well as intra- and inter-patient registration evaluation. We established an easily accessible framework for training and validation of 3D registration methods, which enabled the compilation of results of over 65 individual method submissions from more than 20 unique teams. We used a complementary set of metrics, including robustness, accuracy, plausibility, and runtime, enabling unique insight into the current state of the art of medical image registration. This paper describes the datasets, tasks, evaluation methods and results of the challenge, as well as further analysis of transferability to new datasets, the importance of label supervision, and resulting bias.

Fast 3D registration with accurate optimisation and little learning for Learn2Reg 2021

Dec 06, 2021

Abstract: Current approaches for deformable medical image registration often struggle to fulfil all of the following criteria: versatile applicability, short computation or training times, and the ability to estimate large deformations. Furthermore, end-to-end networks for supervised training of registration often become overly complex and difficult to train. For the Learn2Reg 2021 challenge, we aim to address these issues by decoupling feature learning and geometric alignment. First, we introduce a new, very fast and accurate optimisation method. By using discretised displacements and a coupled convex optimisation procedure, we are able to cope robustly with large deformations. With the help of an Adam-based instance optimisation, we achieve very accurate registration performance, and by using regularisation, we obtain smooth and plausible deformation fields. Second, to be versatile across different registration tasks, we extract hand-crafted features that are modality and contrast invariant and complement them with semantic features from a task-specific segmentation U-Net. Our results earned second place overall in the Learn2Reg 2021 challenge, winning Task 1 and placing second and third in the other two tasks.
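The idea of Adam-based instance optimisation with a smoothness regulariser can be illustrated on a toy problem. The following is only a minimal 1D sketch under stated assumptions, not the authors' implementation: the actual method works on 3D feature volumes and is initialised by the discretised, coupled convex optimisation, which is omitted here. The function names (`warp`, `loss_and_grad`, `instance_optimise`), the hand-written Adam update, the squared-difference data term, and all parameter values (`lam`, `lr`, `steps`) are hypothetical choices made for illustration.

```python
import math

def warp(moving, u):
    """Sample `moving` at positions i + u[i] by linear interpolation.
    Returns the warped values and the local slopes used for the gradient."""
    n = len(moving)
    values, slopes = [], []
    for i in range(n):
        p = min(max(i + u[i], 0.0), n - 1.0)  # clamp to the signal range
        j = min(int(p), n - 2)                # left neighbour index
        t = p - j
        values.append((1 - t) * moving[j] + t * moving[j + 1])
        slopes.append(moving[j + 1] - moving[j])
    return values, slopes

def loss_and_grad(fixed, moving, u, lam):
    """Mean squared difference plus lam * mean squared first difference of u."""
    n = len(u)
    warped, slopes = warp(moving, u)
    data = sum((w - f) ** 2 for w, f in zip(warped, fixed)) / n
    reg = sum((u[i + 1] - u[i]) ** 2 for i in range(n - 1)) / (n - 1)
    grad = [2 * (warped[i] - fixed[i]) * slopes[i] / n for i in range(n)]
    for i in range(n - 1):
        d = 2 * lam * (u[i + 1] - u[i]) / (n - 1)
        grad[i + 1] += d
        grad[i] -= d
    return data + lam * reg, grad

def instance_optimise(fixed, moving, lam=0.1, lr=0.05, steps=300):
    """Optimise one displacement per sample for this single signal pair (Adam)."""
    n = len(fixed)
    u = [0.0] * n           # displacement field, initialised to identity
    m = [0.0] * n           # first-moment estimate
    v = [0.0] * n           # second-moment estimate
    b1, b2, eps = 0.9, 0.999, 1e-8
    for step in range(1, steps + 1):
        _, g = loss_and_grad(fixed, moving, u, lam)
        for i in range(n):
            m[i] = b1 * m[i] + (1 - b1) * g[i]
            v[i] = b2 * v[i] + (1 - b2) * g[i] ** 2
            m_hat = m[i] / (1 - b1 ** step)
            v_hat = v[i] / (1 - b2 ** step)
            u[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return u

# Toy data (hypothetical): two Gaussian bumps, the moving one shifted by 3 samples.
n = 64
fixed = [math.exp(-((x - 30) ** 2) / 32.0) for x in range(n)]
moving = [math.exp(-((x - 33) ** 2) / 32.0) for x in range(n)]

before, _ = loss_and_grad(fixed, moving, [0.0] * n, 0.1)
u = instance_optimise(fixed, moving)
after, _ = loss_and_grad(fixed, moving, u, 0.1)
print("loss before:", before, "loss after:", after)
```

The displacement field is fitted directly to one image pair rather than predicted by a trained network, which is what "instance optimisation" refers to; the weight `lam` trades alignment accuracy against smoothness of the field.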
