Hana Lee

High-dimensional multiple imputation (HDMI) for partially observed confounders including natural language processing-derived auxiliary covariates

May 17, 2024

Abstract:Multiple imputation (MI) models can be improved by including auxiliary covariates (AC), but their performance in high-dimensional data is not well understood. We aimed to develop and compare high-dimensional MI (HDMI) approaches using structured and natural language processing (NLP)-derived AC in studies with partially observed confounders. We conducted a plasmode simulation study using data from opioid vs. non-steroidal anti-inflammatory drug (NSAID) initiators (X) with observed serum creatinine labs (Z2) and time-to-acute kidney injury as outcome. We simulated 100 cohorts with a null treatment effect, including X, Z2, atrial fibrillation (U), and 13 other investigator-derived confounders (Z1) in the outcome generation. We then imposed missingness (MZ2) on 50% of Z2 measurements as a function of Z2 and U and created different HDMI candidate AC using structured and NLP-derived features. We mimicked scenarios where U was unobserved by omitting it from all AC candidate sets. Using LASSO, we data-adaptively selected HDMI covariates associated with Z2 and MZ2 for MI, and with U to include in propensity score models. The treatment effect was estimated following propensity score matching in MI datasets, and we benchmarked HDMI approaches against a baseline imputation and complete case analysis with Z1 only. HDMI using claims data showed the lowest bias (0.072). Combining claims and sentence embeddings improved efficiency, displaying the lowest root-mean-squared-error (0.173) and 94% coverage. NLP-derived AC alone did not perform better than baseline MI. HDMI approaches may decrease bias in studies with partially observed confounders where missingness depends on unobserved factors.
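The missingness step described in the abstract (MZ2 imposed on 50% of Z2 measurements as a function of Z2 and U) can be illustrated with a minimal sketch. This is not the authors' code: the logistic form, the coefficients `beta_z2` and `beta_u`, the synthetic distributions for Z2 and U, and the function names are all assumptions chosen for illustration. The intercept is calibrated by bisection so that the average missingness probability matches the 50% target.

```python
import numpy as np

def calibrate_intercept(lin_pred, target=0.5, lo=-20.0, hi=20.0, iters=60):
    """Bisection for an intercept b0 such that mean(sigmoid(b0 + lin_pred)) ~= target."""
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        mean_p = np.mean(1.0 / (1.0 + np.exp(-(mid + lin_pred))))
        if mean_p < target:
            lo = mid   # too little missingness: raise the intercept
        else:
            hi = mid   # too much missingness: lower the intercept
    return (lo + hi) / 2.0

def impose_missingness(z2, u, beta_z2=0.5, beta_u=1.0, target=0.5, seed=0):
    """Set Z2 to NaN with a probability that depends on Z2 itself and on U.

    beta_z2 and beta_u are hypothetical effect sizes, not values from the study.
    """
    rng = np.random.default_rng(seed)
    z2_std = (z2 - z2.mean()) / z2.std()          # standardize Z2
    lin_pred = beta_z2 * z2_std + beta_u * u      # linear predictor without intercept
    b0 = calibrate_intercept(lin_pred, target)
    p_miss = 1.0 / (1.0 + np.exp(-(b0 + lin_pred)))
    mz2 = rng.random(z2.shape[0]) < p_miss        # missingness indicator MZ2
    z2_obs = np.where(mz2, np.nan, z2)            # partially observed Z2
    return z2_obs, mz2, p_miss

# Synthetic stand-ins for the confounders (assumed distributions).
rng = np.random.default_rng(42)
n = 10_000
u = rng.binomial(1, 0.2, size=n)                  # e.g. atrial fibrillation flag
z2 = rng.lognormal(mean=0.0, sigma=0.3, size=n)   # e.g. serum creatinine
z2_obs, mz2, p_miss = impose_missingness(z2, u)
```

Because the missingness probability depends on U, omitting U from the imputation model leaves the mechanism dependent on an unobserved factor, which is the scenario the abstract describes.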

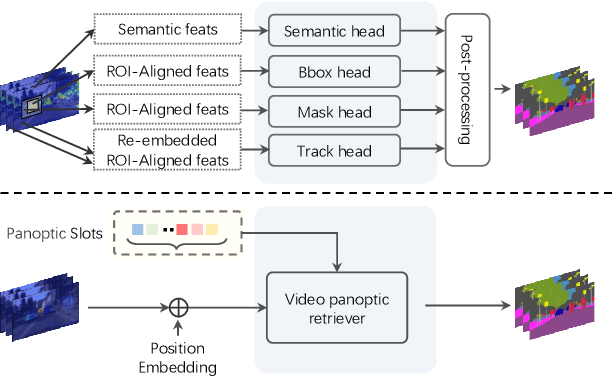

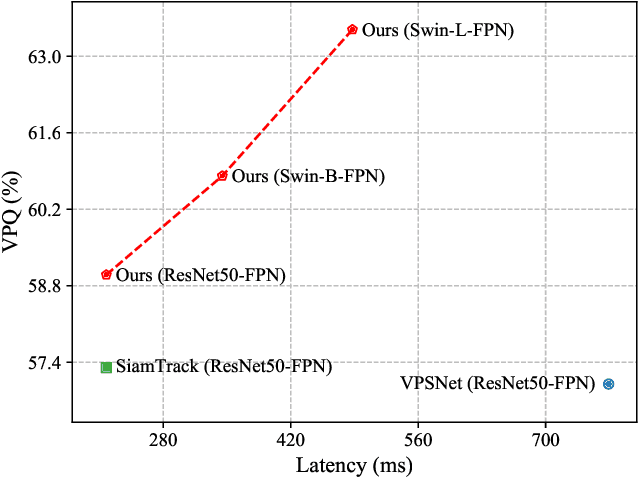

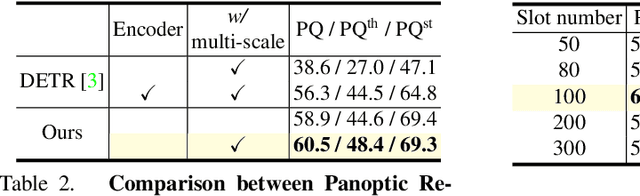

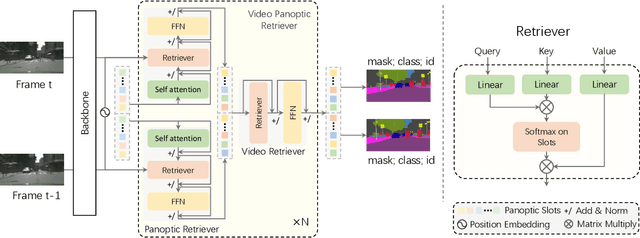

Slot-VPS: Object-centric Representation Learning for Video Panoptic Segmentation

Dec 16, 2021

Abstract:Video Panoptic Segmentation (VPS) aims at assigning a class label to each pixel, uniquely segmenting and identifying all object instances consistently across all frames. Classic solutions usually decompose the VPS task into several sub-tasks and utilize multiple surrogates (e.g. boxes and masks, centres and offsets) to represent objects. However, this divide-and-conquer strategy requires complex post-processing in both spatial and temporal domains and is vulnerable to failures from surrogate tasks. In this paper, inspired by object-centric learning which learns compact and robust object representations, we present Slot-VPS, the first end-to-end framework for this task. We encode all panoptic entities in a video, including both foreground instances and background semantics, with a unified representation called panoptic slots. Coherent spatio-temporal object information is retrieved and encoded into the panoptic slots by the proposed Video Panoptic Retriever, enabling it to localize, segment, differentiate, and associate objects in a unified manner. Finally, the output panoptic slots can be directly converted into the class, mask, and object ID of panoptic objects in the video. We conduct extensive ablation studies and demonstrate the effectiveness of our approach on two benchmark datasets, Cityscapes-VPS (val and test sets) and VIPER (val set), achieving new state-of-the-art performance of 63.7, 63.3 and 56.2 VPQ, respectively.
