Haiyang Ying

Speedy Deformable 3D Gaussian Splatting: Fast Rendering and Compression of Dynamic Scenes

Jun 09, 2025

Abstract:Recent extensions of 3D Gaussian Splatting (3DGS) to dynamic scenes achieve high-quality novel view synthesis by using neural networks to predict the time-varying deformation of each Gaussian. However, performing per-Gaussian neural inference at every frame poses a significant bottleneck, limiting rendering speed and increasing memory and compute requirements. In this paper, we present Speedy Deformable 3D Gaussian Splatting (SpeeDe3DGS), a general pipeline for accelerating the rendering speed of dynamic 3DGS and 4DGS representations by reducing neural inference through two complementary techniques. First, we propose a temporal sensitivity pruning score that identifies and removes Gaussians with low contribution to the dynamic scene reconstruction. We also introduce an annealing smooth pruning mechanism that improves pruning robustness in real-world scenes with imprecise camera poses. Second, we propose GroupFlow, a motion analysis technique that clusters Gaussians by trajectory similarity and predicts a single rigid transformation per group instead of separate deformations for each Gaussian. Together, our techniques accelerate rendering by $10.37\times$, reduce model size by $7.71\times$, and shorten training time by $2.71\times$ on the NeRF-DS dataset. SpeeDe3DGS also improves rendering speed by $4.20\times$ and $58.23\times$ on the D-NeRF and HyperNeRF vrig datasets. Our methods are modular and can be integrated into any deformable 3DGS or 4DGS framework.
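
The GroupFlow idea of sharing one rigid transformation per group, rather than predicting a deformation per Gaussian, can be illustrated with a minimal sketch. It assumes the group assignments and the per-group rotations and translations are already available; in the paper these come from trajectory clustering and prediction, which are not shown here. The names `apply_group_transforms` and `rot_z` are hypothetical, and only Gaussian centers are moved (covariances are ignored).

```python
import math


def apply_group_transforms(positions, group_ids, rotations, translations):
    """Move each Gaussian center with the rigid transform of its group.

    positions:    list of (x, y, z) Gaussian centers at a reference time
    group_ids:    list of ints, the group index of each Gaussian
    rotations:    list of 3x3 rotation matrices (nested lists), one per group
    translations: list of (tx, ty, tz) vectors, one per group
    """
    moved = []
    for p, g in zip(positions, group_ids):
        R, t = rotations[g], translations[g]
        # x' = R x + t, using the transform shared by the whole group
        moved.append(tuple(
            sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)
        ))
    return moved


def rot_z(theta):
    """Rotation by theta radians about the z axis (illustrative helper)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


# Three Gaussians in two groups: only two transforms are needed per frame.
positions = [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
group_ids = [0, 0, 1]
rotations = [rot_z(math.pi / 2), rot_z(0.0)]
translations = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]

moved = apply_group_transforms(positions, group_ids, rotations, translations)
```

In this toy setup the per-frame cost of obtaining transforms scales with the number of groups instead of the number of Gaussians, which is the source of the reduced neural inference described in the abstract.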

SketchSplat: 3D Edge Reconstruction via Differentiable Multi-view Sketch Splatting

Mar 18, 2025

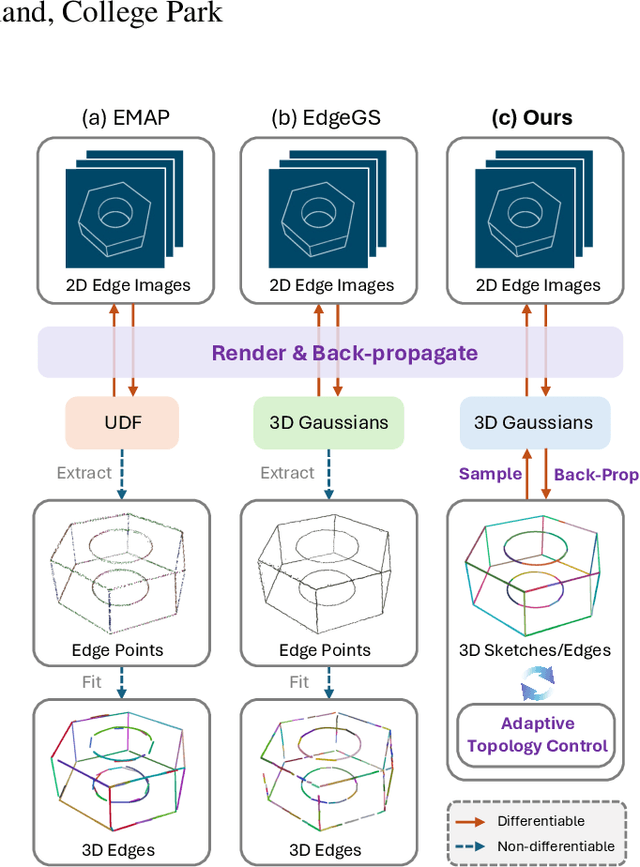

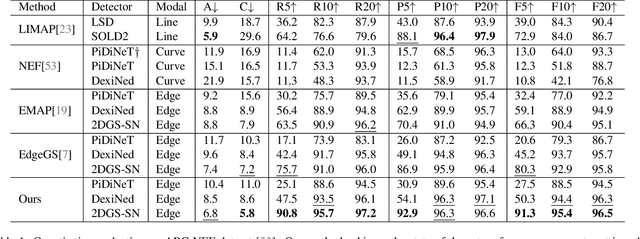

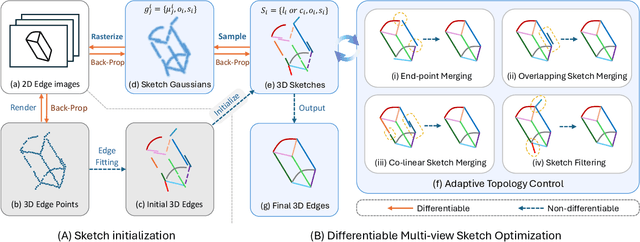

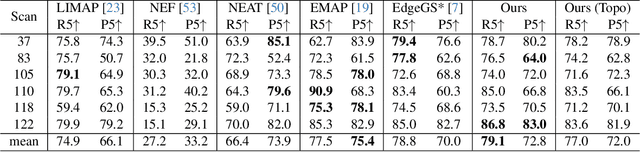

Abstract:Edges are one of the most basic parametric primitives to describe structural information in 3D. In this paper, we study parametric 3D edge reconstruction from calibrated multi-view images. Previous methods usually reconstruct a 3D edge point set from multi-view 2D edge images, and then fit 3D edges to the point set. However, noise in the point set may cause gaps among fitted edges, and the recovered edges may not align with input multi-view images since the edge fitting depends only on the reconstructed 3D point set. To mitigate these problems, we propose SketchSplat, a method to reconstruct accurate, complete, and compact 3D edges via differentiable multi-view sketch splatting. We represent 3D edges as sketches, which are parametric lines and curves defined by attributes including control points, scales, and opacity. During edge reconstruction, we iteratively sample Gaussian points from a set of sketches and rasterize the Gaussians onto 2D edge images. Then the gradient of the image error with respect to the input 2D edge images can be back-propagated to optimize the sketch attributes. Our method bridges 2D edge images and 3D edges in a differentiable manner, which ensures that 3D edges align well with 2D images and leads to accurate and complete results. We also propose a series of adaptive topological operations and apply them along with the sketch optimization. The topological operations help reduce the number of sketches required while ensuring high accuracy, yielding a more compact reconstruction. Finally, we contribute an accurate 2D edge detector that improves the performance of both our method and existing methods. Experiments show that our method achieves state-of-the-art accuracy, completeness, and compactness on a benchmark CAD dataset.
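
The step of sampling Gaussian points from a sketch can be illustrated with a small example. This is a sketch under stated assumptions, not the paper's implementation: it assumes a sketch is a Bezier curve given by its control points (two points for a line, four for a cubic curve), which the abstract does not specify, and it only produces Gaussian centers. Scales, opacity, rasterization, and the gradient step are omitted, and `sample_sketch` is a hypothetical name.

```python
def sample_sketch(control_points, num_samples):
    """Sample points along a Bezier sketch at evenly spaced parameters.

    control_points: list of (x, y, z) tuples; 2 points give a line segment,
                    4 points give a cubic curve (assumed parameterization)
    num_samples:    number of Gaussian centers to place along the sketch
    """
    if num_samples < 2:
        raise ValueError("num_samples must be at least 2")
    samples = []
    for j in range(num_samples):
        t = j / (num_samples - 1)
        pts = [tuple(p) for p in control_points]
        # de Casteljau: repeatedly interpolate neighbours until one point is left
        while len(pts) > 1:
            pts = [
                tuple((1 - t) * a + t * b for a, b in zip(p, q))
                for p, q in zip(pts, pts[1:])
            ]
        samples.append(pts[0])
    return samples


# A straight edge and a curved edge, each sampled with five Gaussian centers.
line = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
curve = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (1.0, 0.0, 0.0)]

line_pts = sample_sketch(line, 5)
curve_pts = sample_sketch(curve, 5)
```

Because every sampled center is a smooth function of the control points, an image-space error on the rasterized Gaussians could in principle be differentiated back to the sketch attributes, which is the property the abstract relies on.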

OmniSeg3D: Omniversal 3D Segmentation via Hierarchical Contrastive Learning

Nov 20, 2023

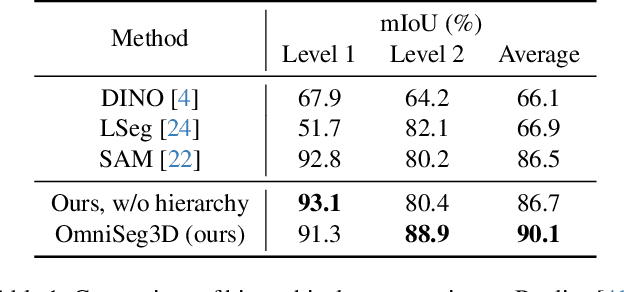

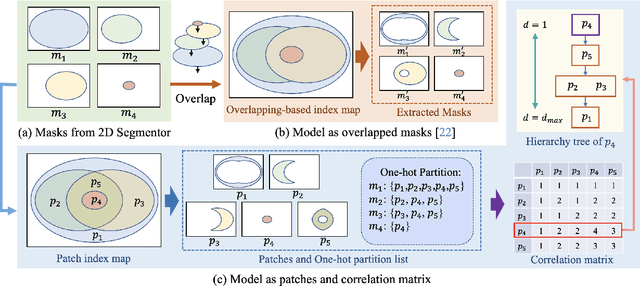

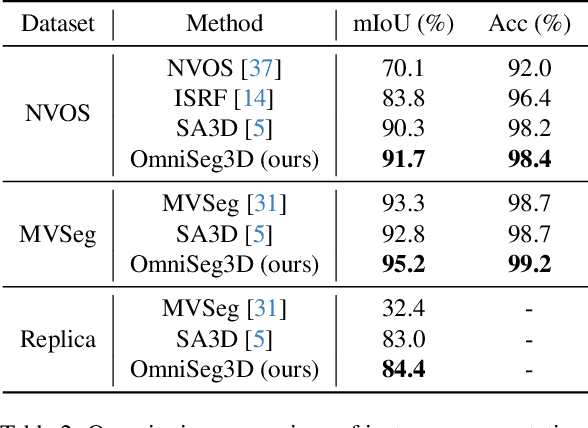

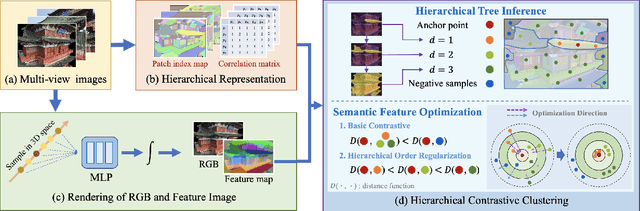

Abstract:Towards holistic understanding of 3D scenes, a general 3D segmentation method is needed that can segment diverse objects without restrictions on object quantity or categories, while also reflecting the inherent hierarchical structure. To achieve this, we propose OmniSeg3D, an omniversal segmentation method that aims to segment anything in 3D all at once. The key insight is to lift multi-view inconsistent 2D segmentations into a consistent 3D feature field through a hierarchical contrastive learning framework, which is accomplished in two steps. First, we design a novel hierarchical representation based on category-agnostic 2D segmentations to model the multi-level relationships among pixels. Second, image features rendered from the 3D feature field are clustered at different levels and are then drawn closer or pushed apart according to the hierarchical relationship between levels. In tackling the challenges posed by inconsistent 2D segmentations, this framework yields a globally consistent 3D feature field, which further enables hierarchical segmentation, multi-object selection, and global discretization. Extensive experiments demonstrate the effectiveness of our method on high-quality 3D segmentation and accurate hierarchical structure understanding. A graphical user interface further facilitates flexible interaction for omniversal 3D segmentation.

PARF: Primitive-Aware Radiance Fusion for Indoor Scene Novel View Synthesis

Sep 29, 2023

Abstract:This paper proposes a method for fast scene radiance field reconstruction with strong novel view synthesis performance and convenient scene editing functionality. The key idea is to fully utilize semantic parsing and primitive extraction for constraining and accelerating the radiance field reconstruction process. To fulfill this goal, a primitive-aware hybrid rendering strategy is proposed to enjoy the best of both volumetric and primitive rendering. We further contribute a reconstruction pipeline that conducts primitive parsing and radiance field learning iteratively for each input frame, successfully fusing semantic, primitive, and radiance information into a single framework. Extensive evaluations demonstrate the fast reconstruction ability, high rendering quality, and convenient editing functionality of our method.
